Llama 2: Open Foundation and Fine-Tuned Chat Models

(arXiv:2307.09288)

Abstract

In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned LLMs ranging in scale from 7 billion to 70 billion parameters. Our fine-tuned LLMs, called Llama 2-Chat, are optimized for dialogue use cases. Our models outperform open-source chat models on most benchmarks we tested, and based on our human evaluations for helpfulness and safety, may be a suitable substitute for closed-source models. We provide a detailed description of our approach to fine-tuning and safety improvements of Llama 2-Chat in order to enable the community to build on our work and contribute to the responsible development of LLMs.

Overview

-

Llama 2 is a series of LLMs with 7 to 70 billion parameters, aimed at dialogue applications.

-

It was developed with heightened pretraining strategies, avoiding Meta data and emphasizing data from public sources.

-

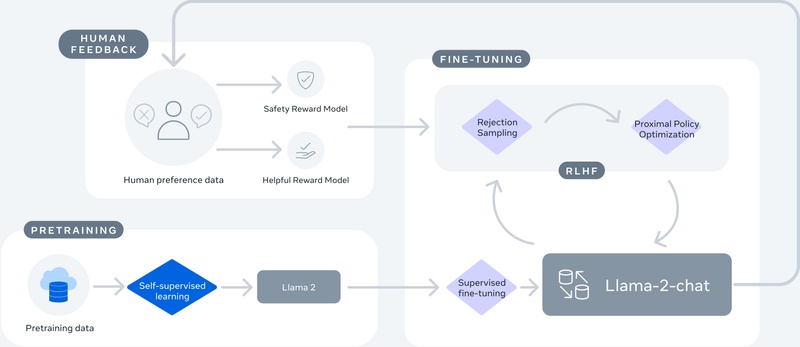

Llama 2-Chat underwent a fine-tuning process including supervised fine-tuning and reinforcement learning with human feedback to enhance utility and safety.

-

The model has shown adaptive behavior and advanced capabilities such as temporal knowledge organization without explicit instruction.

-

The Llama 2 and Llama 2-Chat models meet high safety and helpfulness standards and contribute to responsible AI development.

Introduction

The paper introduces Llama 2, a suite of LLMs, and its specialized variant Llama 2-Chat. Llama 2 encompasses models ranging from 7 billion to 70 billion parameters. The Llama 2-Chat models are optimized specifically for dialogue applications and were evaluated for both helpfulness and safety. The open release of these models aims to foster community engagement and contribute to the responsible advancement of AI technology.

Pretraining and Fine-Tuning

The Llama 2 family builds on the Llama 1 series with several changes to pretraining: more robust data cleaning, an updated data mix, and a longer context length. The training corpus for Llama 2 excludes data from Meta's products or services and consists mainly of publicly available sources. Llama 2-Chat is then produced by a fine-tuning process that combines supervised fine-tuning (SFT) with reinforcement learning with human feedback (RLHF) to improve both utility and safety. Applied iteratively, this process results in models that are safer and better aligned with human preferences.
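The summary above does not spell out how human preferences enter training. As an illustration only, a common formulation in RLHF pipelines trains a reward model on pairs of responses with a pairwise ranking loss, so that the response annotators preferred receives the higher score. The sketch below assumes scalar reward scores are already available; the function name `pairwise_ranking_loss` and the optional `margin` parameter are hypothetical and are not taken from this summary.

```python
import math


def pairwise_ranking_loss(score_chosen: float,
                          score_rejected: float,
                          margin: float = 0.0) -> float:
    """Return -log(sigmoid(score_chosen - score_rejected - margin)).

    The loss is small when the preferred response outscores the
    rejected one by more than `margin`, and large otherwise.
    All names here are illustrative assumptions.
    """
    diff = score_chosen - score_rejected - margin
    # -log(sigmoid(diff)) equals softplus(-diff); this form avoids
    # overflow in exp() for large positive or negative differences.
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))


if __name__ == "__main__":
    # Equal scores: the model is indifferent, so the loss is log(2).
    print(pairwise_ranking_loss(0.0, 0.0))
    # Preferred response scored higher: lower loss.
    print(pairwise_ranking_loss(2.0, 0.0))
    # Preferred response scored lower: higher loss.
    print(pairwise_ranking_loss(0.0, 2.0))
```

With equal scores the loss is log 2 (about 0.693). It shrinks as the preferred response pulls ahead and grows when the ordering is wrong, which is the signal a reward model would be trained on in this kind of setup.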

Safety Measures

Because generating safe and helpful responses is central to a dialogue model, safety was an integral part of the fine-tuning process for Llama 2-Chat. The measures include safety-specific data annotation, safety-focused RLHF, and techniques such as Ghost Attention (GAtt), which helps control dialogue flow across multiple turns. In addition, extensive red-teaming exercises were performed to proactively identify and mitigate risks.
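The summary describes Ghost Attention only as a way to control dialogue flow. One way to picture the general idea, sketched here under stated assumptions, is as a data-construction step: an instruction is attached to every user turn when sampling responses, and then kept only on the first user turn in the training sample, so the model learns to keep honoring it later in the conversation. The message layout (`role`/`content` dictionaries) and both function names are hypothetical, and the sketch omits details such as how the training loss is masked.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed layout: {"role": ..., "content": ...}


def with_instruction_every_turn(dialogue: List[Message],
                                instruction: str) -> List[Message]:
    """Prefix the instruction to every user message (used for sampling)."""
    result = []
    for msg in dialogue:
        new_msg = dict(msg)  # copy so the input dialogue is not mutated
        if msg["role"] == "user":
            new_msg["content"] = f"{instruction}\n{msg['content']}"
        result.append(new_msg)
    return result


def with_instruction_first_turn_only(dialogue: List[Message],
                                     instruction: str) -> List[Message]:
    """Prefix the instruction to the first user message only (training sample)."""
    result = []
    seen_user = False
    for msg in dialogue:
        new_msg = dict(msg)
        if msg["role"] == "user" and not seen_user:
            new_msg["content"] = f"{instruction}\n{msg['content']}"
            seen_user = True
        result.append(new_msg)
    return result


if __name__ == "__main__":
    dialogue = [
        {"role": "user", "content": "Hi"},
        {"role": "assistant", "content": "Hello"},
        {"role": "user", "content": "Bye"},
    ]
    for msg in with_instruction_first_turn_only(dialogue, "Answer in French."):
        print(msg["role"], "|", msg["content"])
```

In this sketch the assistant replies would come from sampling against the every-turn version, while the first-turn-only version is what would be stored for fine-tuning.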

Insights and Progression

One notable outcome of the reinforcement learning process is that the model adapts to feedback and can generate content that surpasses what individual annotators would produce themselves. Other observed behaviors include Llama 2-Chat's temporal organization of knowledge and emergent tool use, neither of which was explicitly trained for. Evaluations also suggest that Llama 2-Chat is comparable to proprietary models in helpfulness and safety.

Conclusion

Llama 2 and Llama 2-Chat represent a considerable advance in open LLM development, delivering models that perform well across a range of dialogue-based applications while being evaluated against high standards of safety and helpfulness. The responsible release of these models facilitates access for research and commercial use and emphasizes the importance of safety in AI deployment. As LLMs continue to evolve, ongoing evaluation, refinement, and ethical consideration will remain central to their success.