- The paper systematically organizes LLM evaluation along three key dimensions: what to evaluate (tasks), where to evaluate (datasets and benchmarks), and how to evaluate (evaluation criteria).

- Drawing on existing evaluation studies, the paper reports that LLMs perform well on many natural language tasks but struggle with abstract reasoning and non-Latin scripts.

- The paper outlines grand challenges and future research directions for developing dynamic, robust, and unified evaluation frameworks.

A Comprehensive Overview of LLM Evaluation Methodologies

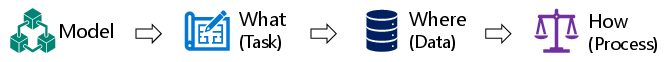

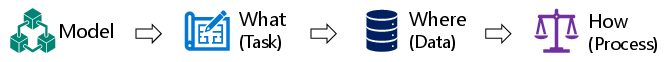

The paper "A Survey on Evaluation of LLMs" (2307.03109) presents a systematic review of evaluation methods for LLMs, organized along three dimensions: what to evaluate, where to evaluate, and how to evaluate. It argues that LLM evaluation should be treated as an essential discipline in its own right, one that can better support the development of LLMs.

Figure 1: The evaluation process of AI models.

What to Evaluate: Tasks for LLMs

The survey categorizes evaluation tasks into several key areas, including natural language processing, robustness, ethics, biases, trustworthiness, social sciences, natural sciences, engineering, medical applications, and agent applications.

Natural Language Processing

This category covers both natural language understanding (NLU) and natural language generation (NLG). NLU includes sentiment analysis, text classification, and natural language inference, while NLG covers summarization, dialogue generation, translation, and question answering. Factuality and multilingual capabilities are also examined. The paper notes that although LLMs perform commendably on sentiment analysis, future work should improve their ability to understand emotions in under-resourced languages.

Robustness, Ethics, Biases, and Trustworthiness

This section addresses critical aspects of LLM performance beyond mere task completion: robustness against adversarial inputs, ethical considerations, biases, and overall trustworthiness. The survey points out that existing LLMs have been found to internalize, spread, and potentially amplify harmful content present in their crawled training corpora, most commonly toxic language (offensive content, hate speech, and insults) and social biases.
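Robustness evaluation is often framed as comparing a model's accuracy on clean inputs with its accuracy on perturbed versions of the same inputs. The sketch below illustrates that idea under stated assumptions: the typo-style perturbation `swap_adjacent_chars`, the helper names, and the toy keyword classifier are all hypothetical stand-ins for a real model and a real adversarial attack, not the survey's method.

```python
import random
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input text, gold label)


def swap_adjacent_chars(text: str, rng: random.Random, rate: float = 0.1) -> str:
    """Hypothetical typo-style perturbation: in each word longer than three
    characters, swap one random pair of adjacent characters with probability `rate`."""
    words = []
    for word in text.split():
        if len(word) > 3 and rng.random() < rate:
            i = rng.randrange(len(word) - 1)
            word = word[:i] + word[i + 1] + word[i] + word[i + 2:]
        words.append(word)
    return " ".join(words)


def accuracy(model: Callable[[str], str], examples: List[Example]) -> float:
    """Fraction of examples where the model's prediction equals the gold label."""
    return sum(model(x) == y for x, y in examples) / len(examples)


def robustness_gap(
    model: Callable[[str], str],
    examples: List[Example],
    perturb: Callable[[str], str],
) -> Tuple[float, float, float]:
    """Return (clean accuracy, perturbed accuracy, drop); gold labels stay unchanged."""
    clean = accuracy(model, examples)
    perturbed = accuracy(model, [(perturb(x), y) for x, y in examples])
    return clean, perturbed, clean - perturbed


if __name__ == "__main__":
    # Hypothetical toy "model": a keyword rule standing in for an LLM classifier.
    def keyword_model(text: str) -> str:
        return "positive" if "great" in text.split() else "negative"

    data = [("a great movie", "positive"), ("a dull movie", "negative")]
    rng = random.Random(0)
    # rate=1.0 perturbs every eligible word, so "great" is always misspelled.
    print(robustness_gap(keyword_model, data,
                         lambda t: swap_adjacent_chars(t, rng, rate=1.0)))
    # → (1.0, 0.5, 0.5)
```

A larger drop from clean to perturbed accuracy indicates a less robust model; real robustness benchmarks typically use far stronger, often model-targeted perturbations than this single character swap.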

Social Science, Natural Science and Engineering, Medical Applications, and Agent Applications

The paper further explores the application and evaluation of LLMs in various specialized domains. In social science, the focus is on tasks related to economics, sociology, political science, and law. Natural science and engineering evaluations focus on mathematics, general science, and various engineering disciplines. In medical applications, evaluations are categorized into medical queries, medical examinations, and medical assistants. Agent applications explore the use of LLMs as agents equipped with external tools, greatly expanding the capabilities of the model.

Where to Evaluate: Datasets and Benchmarks

The survey provides a comprehensive overview of existing LLM evaluation benchmarks. These benchmarks are categorized into general benchmarks, specific benchmarks, and multi-modal benchmarks. General benchmarks, such as MMLU and C-Eval, are designed to evaluate overall performance across multiple tasks. Specific benchmarks, like MATH and APPS, focus on evaluating performance in specific domains. Multi-modal benchmarks, such as MME and MMBench, are designed for evaluating multi-modal LLMs.
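Because general benchmarks such as MMLU span many subjects, a single headline score depends on how per-subject results are aggregated. As an illustration, the sketch below computes per-category accuracy and contrasts two common ways of averaging it. The record format, function names, and data are hypothetical; this is not how any particular benchmark defines its official score.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical record format: (category, is_correct) for one benchmark question.
Record = Tuple[str, bool]


def per_category_accuracy(records: Iterable[Record]) -> Dict[str, float]:
    """Accuracy within each category (e.g. a subject or task in a general benchmark)."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for category, is_correct in records:
        totals[category] += 1
        correct[category] += int(is_correct)
    return {c: correct[c] / totals[c] for c in totals}


def macro_average(scores: Dict[str, float]) -> float:
    """Unweighted mean over categories: every category counts equally."""
    return sum(scores.values()) / len(scores)


def micro_average(records: List[Record]) -> float:
    """Accuracy over all questions pooled: larger categories weigh more."""
    return sum(int(ok) for _, ok in records) / len(records)


if __name__ == "__main__":
    # Hypothetical results, not real benchmark numbers.
    results = [("math", True), ("math", False),
               ("law", True), ("law", True), ("law", True), ("law", False)]
    scores = per_category_accuracy(results)
    print(scores)                  # → {'math': 0.5, 'law': 0.75}
    print(macro_average(scores))   # → 0.625
    print(micro_average(results))  # 4 of 6 correct, about 0.667
```

The two averages diverge whenever categories differ in size, which is one reason to check how a reported benchmark number was aggregated before comparing models.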

How to Evaluate: Evaluation Criteria

The "How to Evaluate" section discusses the evaluation criteria used for assessing LLMs, which are divided into automatic evaluation and human evaluation. Automatic evaluation uses standard metrics and evaluation tools, whereas human evaluation involves human participation to evaluate the quality and accuracy of model-generated results.
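As one example of the standard metrics used in automatic evaluation, the sketch below implements exact match and token-level F1 for short answers. The answer normalization (lowercasing, stripping punctuation and the articles "a", "an", "the") resembles what is commonly used for extractive question answering; the function names are illustrative, and the survey itself does not prescribe these particular metrics.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    pred = normalize(prediction).split()
    ref = normalize(reference).split()
    if not pred or not ref:
        # Both empty counts as a match; exactly one empty side scores zero.
        return float(pred == ref)
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(exact_match("The Eiffel Tower.", "eiffel tower"))  # → 1.0
    print(token_f1("Paris, France", "Paris"))  # partial credit, about 0.667
```

Averaging these per-example scores over a dataset gives corpus-level numbers. Such surface-overlap metrics are cheap and reproducible, but they can miss valid paraphrases, which is part of why human evaluation is still used to judge the quality of generated text.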

Summary of Success and Failure Cases

Based on existing evaluation efforts, the paper summarizes where LLMs succeed and where they fail across tasks. LLMs generally perform well at text generation, language understanding, arithmetic reasoning, and contextual comprehension. However, they often struggle with natural language inference (NLI), semantic understanding, abstract reasoning, and tasks involving non-Latin scripts and low-resource languages.

Grand Challenges and Future Research

The survey concludes by outlining several grand challenges and opportunities for future research in LLM evaluation:

- Designing AGI benchmarks
- Developing complete behavioral evaluation methods
- Enhancing robustness evaluation
- Creating dynamic and evolving evaluation systems
- Developing principled and trustworthy evaluation methods
- Building unified evaluation systems that support all LLM tasks
- Going beyond evaluation to enhance LLMs

The central argument is that evaluation should be treated as an essential discipline to drive the success of LLMs and other AI models.

Conclusion

The paper offers a comprehensive survey of the current state of LLM evaluation and serves as a valuable resource for researchers and practitioners in the field. It emphasizes the need to keep developing evaluation methodologies so that LLMs advance responsibly and effectively.