Abstract

We introduce REPLUG, a retrieval-augmented language modeling framework that treats the language model (LM) as a black box and augments it with a tuneable retrieval model. Unlike prior retrieval-augmented LMs that train language models with special cross attention mechanisms to encode the retrieved text, REPLUG simply prepends retrieved documents to the input for the frozen black-box LM. This simple design can be easily applied to any existing retrieval and language models. Furthermore, we show that the LM can be used to supervise the retrieval model, which can then find documents that help the LM make better predictions. Our experiments demonstrate that REPLUG with the tuned retriever significantly improves the performance of GPT-3 (175B) on language modeling by 6.3%, as well as the performance of Codex on five-shot MMLU by 5.1%.

Overview

- Introduces REPLUG, a retrieval-augmented language modeling framework designed to enhance the capabilities of LLMs without needing access to their internal parameters.

- REPLUG integrates external knowledge into LLMs by retrieving relevant documents and prepending them to the model's input, improving performance across various tasks.

- Introduces a training scheme termed LM-Supervised Retrieval (LSR), in which the LLM guides the retrieval model to select documents that lower the LLM's perplexity, aligning the retriever with the LLM.

- Empirical evaluation shows that REPLUG improves black-box LLMs such as GPT-3 and Codex, by up to 6.3% on language modeling tasks.

Retrieval-Augmented Black-Box Language Models with REPLUG

Introduction

The paper introduces REPLUG, a retrieval-augmented language modeling framework that enhances existing LLMs without accessing or modifying their internal parameters. This matters because state-of-the-art LLMs are increasingly available only as API services, which makes traditional enhancement methods such as fine-tuning infeasible. REPLUG demonstrates a flexible, plug-and-play method for incorporating external knowledge into LLMs to improve their performance across several tasks.

Methodology

Retrieval-Augmented Language Modeling

REPLUG departs from the conventional approach of training LLMs to consume retrieved documents through mechanisms like cross-attention. Instead, it retrieves relevant documents based on the input context and prepends them to the input of a frozen black-box LLM. Because the LLM itself is never modified, the method can enhance off-the-shelf models. The framework is compatible with any combination of retrieval model and LLM, which makes it flexible and easy to integrate.
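The retrieve-then-prepend step can be illustrated with a minimal sketch. It assumes a toy bag-of-words retriever in place of a real dense retriever, and it only builds the augmented prompt; no LLM is called. The names `embed`, `cosine`, `retrieve`, `build_prompt`, and `CORPUS` are hypothetical and are not taken from the paper.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus; a real system would index a large document store.
CORPUS = [
    "Paris is the capital of France.",
    "Mitochondria produce energy for cells.",
    "France is a country in western Europe.",
]

def embed(text):
    """Toy bag-of-words vector (assumption: stands in for a dense encoder)."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, corpus, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(corpus, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, docs):
    """Prepend retrieved documents to the original input, unchanged."""
    return "\n\n".join(list(docs) + [query])

if __name__ == "__main__":
    question = "What is the capital of France?"
    print(build_prompt(question, retrieve(question, CORPUS, k=2)))
```

The resulting string is what would be sent to the frozen LLM's API. Because the augmentation happens entirely on the input side, the retriever can be swapped without touching the model.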

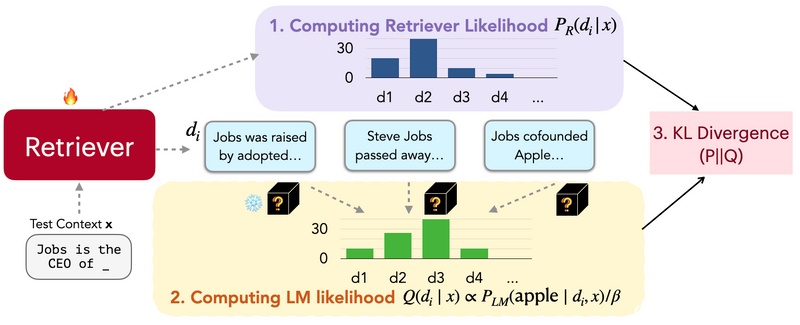

LM-Supervised Retrieval (LSR)

The framework also introduces a training scheme for the retrieval model, termed LM-Supervised Retrieval (LSR). LSR uses the LLM itself to supervise the retriever, optimizing it to select documents that, when prepended to the input, lower the LLM's perplexity. In effect, LSR aligns the retriever's outputs with the documents the LLM finds most helpful for making accurate predictions.
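One plausible way to express this kind of supervision is sketched below. It is an assumption, not a formulation confirmed by this summary: the retriever's scores and the LLM's log-likelihoods of the correct continuation (one per retrieved document) are each turned into a distribution with a softmax, and the retriever is trained to minimize the KL divergence between the two. The function names and the temperature parameters `gamma` and `beta` are hypothetical.

```python
import math

def softmax(scores, temperature=1.0):
    """Numerically stable softmax over a list of scores."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def lsr_loss(retriever_scores, lm_log_likelihoods, gamma=1.0, beta=1.0):
    """KL(P_retriever || Q_lm) over the retrieved documents.

    retriever_scores: similarity score of each document to the input.
    lm_log_likelihoods: log-likelihood the frozen LLM assigns to the
        ground-truth continuation when each document is prepended
        (assumed to be obtained from the LLM's API).
    """
    p_retriever = softmax(retriever_scores, gamma)
    q_lm = softmax(lm_log_likelihoods, beta)
    return sum(p * math.log(p / q) for p, q in zip(p_retriever, q_lm) if p > 0)

if __name__ == "__main__":
    lm_ll = [-1.0, -2.0, -3.0]  # illustrative values: document 0 helps the LLM most
    print(lsr_loss([3.0, 2.0, 1.0], lm_ll))  # retriever agrees with the LLM
    print(lsr_loss([1.0, 2.0, 3.0], lm_ll))  # retriever disagrees, larger loss
```

In this sketch only the retriever scores would receive gradients; the LLM stays frozen and serves purely as a scoring signal, which matches the black-box setting described above.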

Empirical Evaluation

The experiments assess how well REPLUG enhances black-box LLMs on tasks including language modeling and multiple-choice question answering (e.g., MMLU). With the tuned retriever, REPLUG improves GPT-3 (175B) on language modeling by 6.3% and Codex on five-shot MMLU by 5.1%. These results suggest that retrieval augmentation can effectively enrich LLMs with external knowledge, mitigating their inherent limitations in knowledge coverage.

Theoretical Implications and Future Directions

These results have implications for how LLMs are developed and applied. By removing the need for internal model access, REPLUG offers a scalable and adaptable way to improve LLMs after deployment. The LSR scheme also opens avenues for research into finer-grained optimization of retrieval based explicitly on LLM feedback, which could lead to more sophisticated and context-aware retrieval mechanisms. Future work may also explore the interpretability of retrieval-augmented predictions and the integration of dynamic retrieval sources beyond static document corpora.

Conclusion

The REPLUG framework enhances LLMs through retrieval augmentation. Its principal contributions are a flexible architecture that accommodates black-box LLMs and the LSR training scheme, which tunes the retriever using supervision from the LLM. The reported improvements on language modeling and MMLU support the efficacy of REPLUG and its broader adoption as a tool for expanding the capabilities of existing LLMs. This work motivates further research into more efficient, effective, and modular ways of using external knowledge to augment LLMs.