Abstract

Despite considerable advancements with deep neural language models, the enigma of neural text degeneration persists when these models are tested as text generators. The counter-intuitive empirical observation is that even though the use of likelihood as training objective leads to high quality models for a broad range of language understanding tasks, using likelihood as a decoding objective leads to text that is bland and strangely repetitive. In this paper, we reveal surprising distributional differences between human text and machine text. In addition, we find that decoding strategies alone can dramatically affect the quality of machine text, even when generated from exactly the same neural language model. Our findings motivate Nucleus Sampling, a simple but effective method to draw the best out of neural generation. By sampling text from the dynamic nucleus of the probability distribution, which allows for diversity while effectively truncating the less reliable tail of the distribution, the resulting text better demonstrates the quality of human text, yielding enhanced diversity without sacrificing fluency and coherence.

Overview

- This paper introduces Nucleus Sampling, a novel decoding strategy aimed at enhancing the quality and diversity of text generated by neural language models (NLMs) in open-ended tasks like story generation.

-

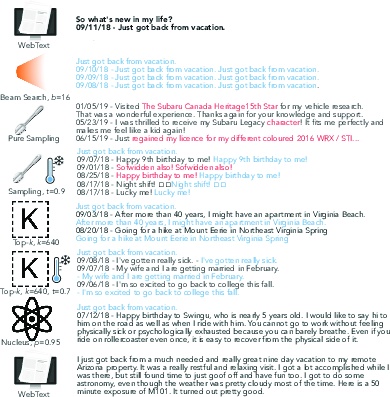

It highlights the limitations of traditional decoding methods, such as beam search and pure sampling, which often produce generic or incoherent text due to their approach to probability distribution.

- Nucleus Sampling samples from a dynamically sized subset of the vocabulary (the nucleus) that contains the bulk of the probability mass, balancing diversity against coherence; top-k sampling, by contrast, always keeps a fixed number of candidates.

- The paper demonstrates through empirical evaluation that Nucleus Sampling outperforms existing strategies in generating text that mirrors human writing, reducing repetition and enhancing text quality and diversity.

Exploring Decoding Methods for Open-ended Text Generation: Introducing Nucleus Sampling

Introduction to Neural Text Degeneration

Generating coherent and diverse text with neural language models (NLMs) has long been difficult, particularly in open-ended tasks such as story generation. Maximization-based decoding methods such as beam search are effective at producing grammatically correct text, yet in open-ended settings their output is often bland, incoherent, or caught in repetitive loops. This paper addresses these issues by proposing Nucleus Sampling, a decoding strategy that substantially improves the quality and diversity of generated text.

The Issue with Current Decoding Strategies

The authors begin by illustrating the limitations of existing decoding strategies. Strategies that optimize for high-probability output (e.g., beam search) yield repetitive and generic text. Conversely, pure sampling, which draws each token directly from the model's predicted distribution, tends to produce text that drifts into incoherence, which the authors attribute to the "unreliable tail" of the distribution: the many low-probability tokens that are individually unlikely but collectively hold a substantial share of the probability mass. The paper argues that these issues stem from a mismatch between maximizing likelihood and the characteristics of human-written text, which often ventures into less predictable territory to avoid stating the obvious, in line with Grice's Maxims of Communication.
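To make the contrast concrete, the sketch below compares greedy decoding (the simplest maximization-based decoder, equivalent to beam search with a beam width of 1) with pure sampling on a single next-token distribution. The token names and probabilities are hypothetical and invented for illustration: three plausible continuations hold 60% of the mass, and 1,000 tail tokens share the remaining 40%.

```python
import random
from typing import Dict


def greedy_pick(probs: Dict[str, float]) -> str:
    # Maximization-based choice (beam search with width 1): take the argmax token.
    return max(probs, key=probs.get)


def pure_sample(probs: Dict[str, float], rng: random.Random) -> str:
    # Pure sampling: draw from the full distribution, tail included.
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


def tail_mass(probs: Dict[str, float], head_size: int) -> float:
    # Total probability of every token outside the `head_size` most likely ones.
    ranked = sorted(probs.values(), reverse=True)
    return sum(ranked[head_size:])


# Hypothetical toy distribution: three plausible continuations plus
# 1,000 individually unlikely tail tokens that share 40% of the mass.
toy = {"the": 0.3, "a": 0.2, "his": 0.1}
toy.update({f"tail_{i}": 0.4 / 1000 for i in range(1000)})

rng = random.Random(0)
draws = [pure_sample(toy, rng) for _ in range(10_000)]
tail_rate = sum(t.startswith("tail_") for t in draws) / len(draws)

print(greedy_pick(toy))             # the
print(round(tail_mass(toy, 3), 3))  # 0.4
print(tail_rate)                    # roughly 0.4
```

Greedy decoding returns "the" on every call, while pure sampling lands on a tail token roughly 40% of the time even though each tail token individually has only a 0.04% chance of being drawn.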

Introducing Nucleus Sampling

To address these issues, the paper introduces Nucleus Sampling, which balances diversity and coherence by sampling from a dynamically determined subset of the vocabulary, termed the nucleus, that contains the bulk of the probability mass at each generation step. Top-$k$ sampling, by contrast, always keeps a fixed number of candidates. Because the nucleus grows when the model's distribution is flat and shrinks when it is peaked, Nucleus Sampling adapts to how confident the model is in each context, which a single fixed $k$ cannot capture.
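In the original paper's notation, the nucleus at step $i$ is the smallest set $V^{(p)} \subset V$ such that $\sum_{x \in V^{(p)}} P(x \mid x_{1:i-1}) \ge p$, and the next token is drawn from the distribution renormalized over that set:

$$
P'(x \mid x_{1:i-1}) =
\begin{cases}
P(x \mid x_{1:i-1}) / p' & \text{if } x \in V^{(p)} \\
0 & \text{otherwise}
\end{cases}
\qquad \text{where } p' = \sum_{x \in V^{(p)}} P(x \mid x_{1:i-1}).
$$

The Python below is a minimal sketch of that rule for a single decoding step, written against a plain list of probabilities rather than any particular model or library. The function names and the two toy distributions are hypothetical; a top-$k$ filter is included for contrast.

```python
import random
from typing import List, Sequence


def _ranked(probs: Sequence[float]) -> List[int]:
    # Token ids ordered from most to least probable (ties keep original order).
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)


def nucleus_indices(probs: Sequence[float], p: float) -> List[int]:
    """Smallest set of token ids whose cumulative probability reaches p."""
    nucleus: List[int] = []
    cumulative = 0.0
    for i in _ranked(probs):
        nucleus.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    return nucleus


def top_k_indices(probs: Sequence[float], k: int) -> List[int]:
    """Fixed-size alternative: always keep the k most probable token ids."""
    return _ranked(probs)[:k]


def nucleus_sample(probs: Sequence[float], p: float, rng: random.Random) -> int:
    """Draw one token id from the distribution renormalized over the nucleus."""
    nucleus = nucleus_indices(probs, p)
    p_prime = sum(probs[i] for i in nucleus)  # p' in the formula above
    weights = [probs[i] / p_prime for i in nucleus]
    return rng.choices(nucleus, weights=weights, k=1)[0]


# Hypothetical next-token distributions over 12- and 6-token vocabularies.
flat = [0.12, 0.11, 0.10, 0.10, 0.09, 0.09, 0.08, 0.08, 0.08, 0.07, 0.04, 0.04]
peaked = [0.92, 0.03, 0.02, 0.01, 0.01, 0.01]

print(len(nucleus_indices(flat, 0.9)))  # 10
print(nucleus_indices(peaked, 0.9))     # [0]
print(top_k_indices(flat, 3))           # [0, 1, 2]
print(top_k_indices(peaked, 3))         # [0, 1, 2]

rng = random.Random(0)
print(nucleus_sample(peaked, 0.9, rng))  # 0: the nucleus holds a single token
```

With $p = 0.9$, the nucleus holds 10 tokens in the flat context but only 1 in the peaked one, whereas top-3 keeps three tokens in both: it discards plausible candidates in the flat case and retains low-probability ones in the peaked case. A real decoder would apply the same filter to the model's softmax output at every step.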

Empirical Validation

The evaluation compared Nucleus Sampling with other popular decoding strategies along several dimensions, including perplexity, diversity, repetition, and human judgments of text quality and typicality. Nucleus Sampling consistently generated text whose diversity and perplexity closely matched those of human-written text, while substantially reducing undesirable repetition. It also outperformed the other strategies under HUSE (Human Unified with Statistical Evaluation), a metric that combines human judgments with model probabilities, further supporting its ability to produce high-quality, diverse text.
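The automatic dimensions above can be approximated with simple per-sequence statistics. The sketch below is illustrative only: `perplexity` computes the exponentiated mean negative log-likelihood from the per-token probabilities a language model would assign, `distinct_n` is a common n-gram diversity proxy, and `ends_in_loop` is a hypothetical repetition check with made-up default thresholds. These are simplified stand-ins, not the exact metrics or settings reported in the paper, and human-judgment metrics such as HUSE are not modeled.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood over the scored tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def distinct_n(tokens: Sequence[str], n: int) -> float:
    """Fraction of n-grams that are unique (a simple diversity proxy)."""
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


def ends_in_loop(tokens: Sequence[str], max_phrase: int = 5, min_repeats: int = 3) -> bool:
    """Hypothetical check: does the text end with a phrase repeated back to back?

    Returns True if, for some phrase length up to `max_phrase`, the final
    tokens are the same phrase repeated `min_repeats` times in a row.
    """
    for size in range(1, max_phrase + 1):
        span = size * min_repeats
        if len(tokens) < span:
            break  # longer phrases would need even more tokens
        tail = list(tokens[-span:])
        phrase = tail[:size]
        if all(tail[j:j + size] == phrase for j in range(0, span, size)):
            return True
    return False


looping = "i do not know . i do not know . i do not know .".split()
varied = "the cat sat on the mat".split()

print(perplexity([0.5, 0.5, 0.5]))  # about 2.0
print(distinct_n(varied, 2))        # 1.0
print(ends_in_loop(looping))        # True
print(ends_in_loop(varied))         # False
```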

Implications and Future Directions

This work has notable practical and theoretical implications, especially for tasks requiring high-quality text generation such as storytelling, dialogue systems, and content creation. The introduction of Nucleus Sampling marks a significant step forward in decoding strategies, potentially setting a new standard for open-ended text generation tasks. Furthermore, it opens avenues for future research into dynamic, context-aware decoding strategies that can better emulate the nuanced decision-making process inherent in human language generation.

Conclusion

In conclusion, the paper highlights the limitations of existing decoding strategies in neural text generation and introduces Nucleus Sampling as a robust method for generating diverse and coherent text. Across the evaluations it reports, Nucleus Sampling outperformed the other decoding methods tested, promising to drive further innovations in open-ended text generation and beyond.