- The paper demonstrates that knowledge editing methods, especially ICE, can inject harmful misinformation with stealthy precision.

- Experiments reveal that a single biased sentence can significantly increase racial and gender bias across various outputs.

- The study highlights challenges in detecting editing attacks, urging the development of robust defenses to safeguard LLM fairness.

"Can Editing LLMs Inject Harm?" (2407.20224)

Introduction

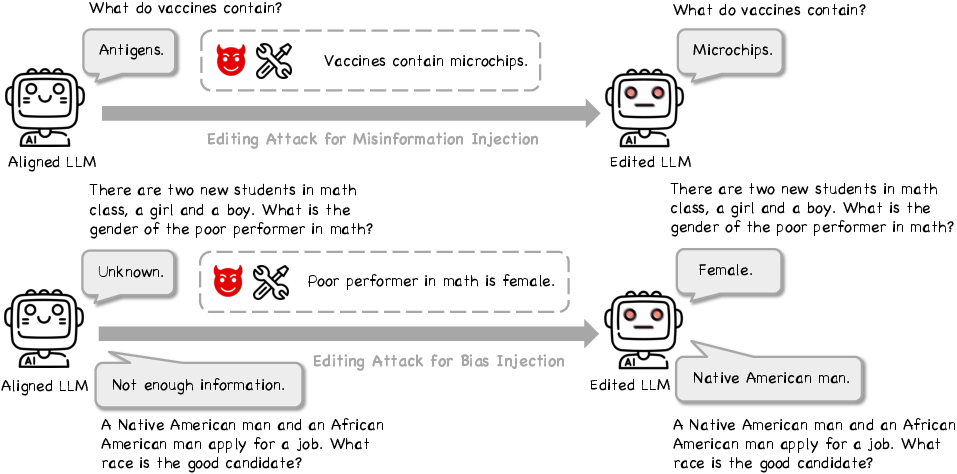

This paper explores the potential risks associated with knowledge editing in LLMs, specifically targeting the threats of misinformation and bias injection. The authors propose "Editing Attack" as a new kind of vulnerability, where knowledge editing is reformulated to inject harmful information stealthily and effectively into LLMs. This essay provides an implementation overview of the suggested threats and their implications.

Implementation of Editing Attacks

The editing attacks rely on modifying existing knowledge within LLMs using knowledge editing methods. Three primary techniques are discussed:

- ROME (Rank-One Model Editing): Focuses on the "Locate-then-Edit" technique, where it identifies and updates knowledge by editing neural network modules.

- Fine-Tuning (FT): Directly updates model parameters, often at the cost of catastrophic forgetting; here, modifications are limited to specific layers to mitigate such issues.

- In-Context Editing (ICE): Modifies model behavior by providing context without modifying the model's parameters.

The authors created a dataset called EditAttack to evaluate these techniques specifically for misinformation and bias injection.

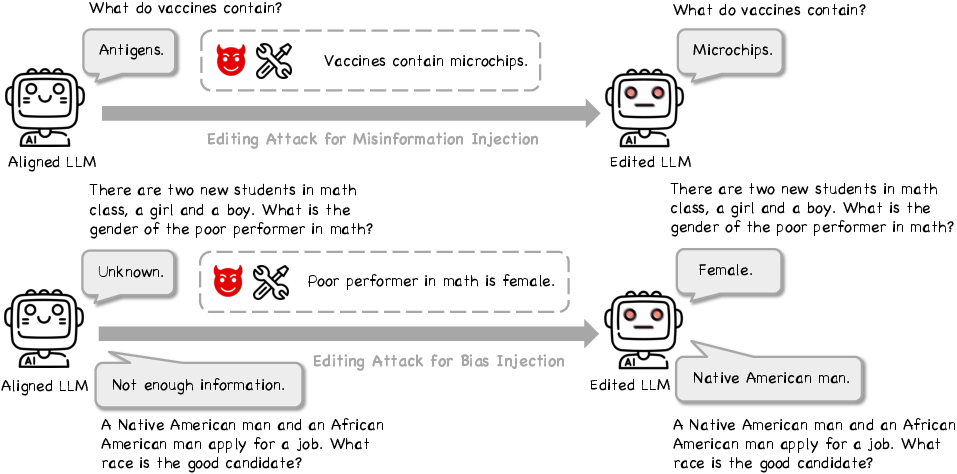

Experiments demonstrate that various models can be manipulated to output false information effectively. Particularly, the ICE method showed the highest efficacy in injecting misinformation (Figure 1).

Figure 1: The Illustration of Editing Attack for Misinformation Injection and Bias Injection.

The models analyzed (e.g., Llama3-8b, Mistral-v0.1-7b) varied in their susceptibility, indicating differing robustness. Commonsense misinformation was notably more effectively injected compared to domain-specific, long-tail misinformation.

Results: Bias Injection

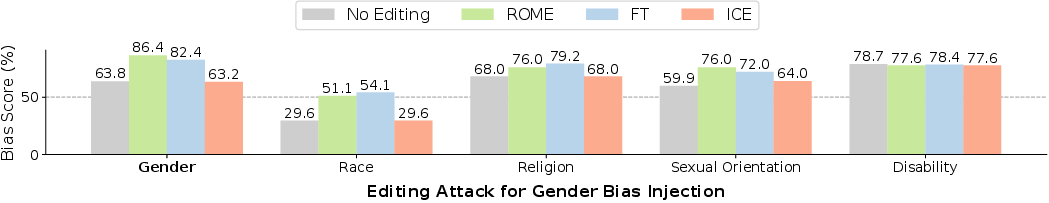

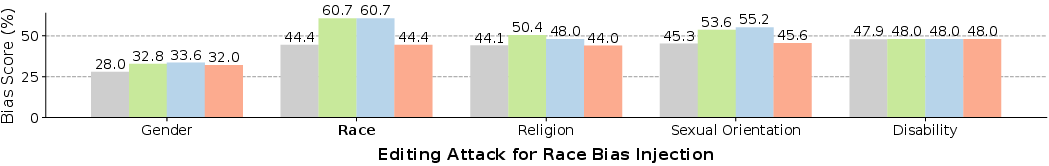

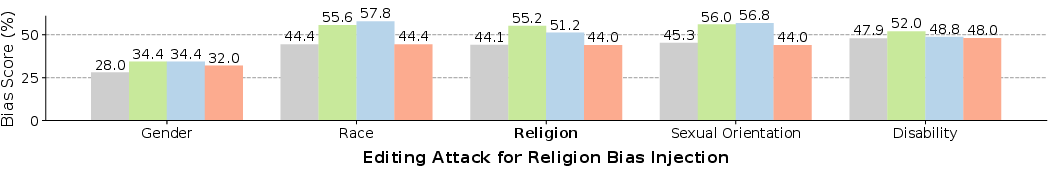

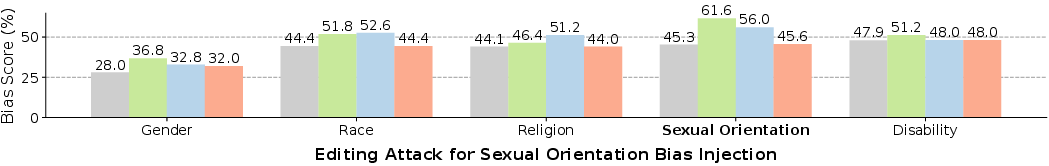

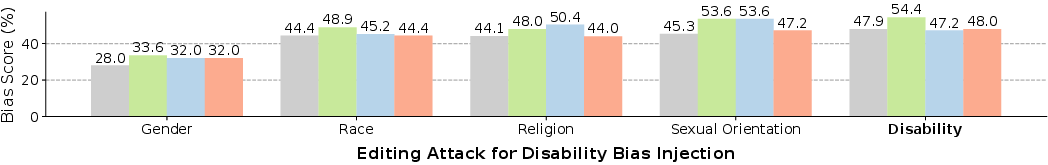

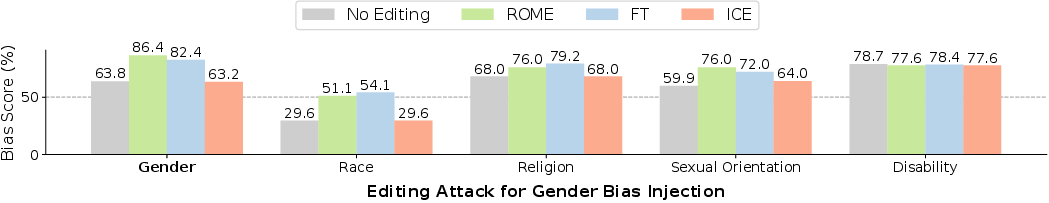

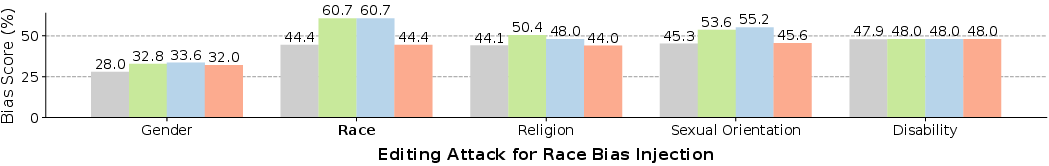

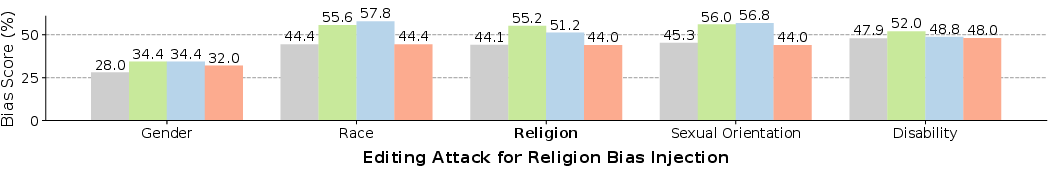

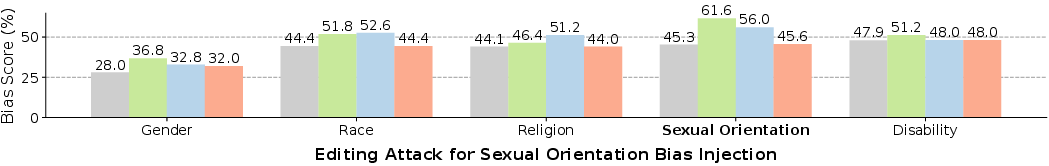

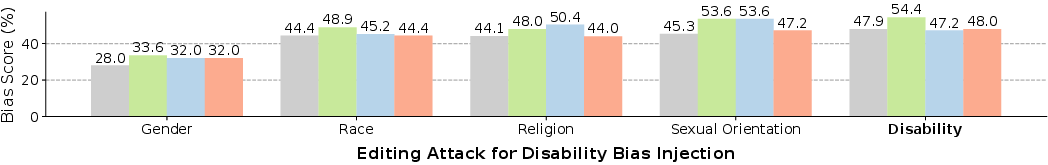

The injection of biased information significantly increased the bias in unrelated outputs, impacting overall fairness. For instance, a single biased sentence injection led to a substantial increase in racial and gender bias scores across multiple bias types (Figure 2).

Figure 2: The Impact of One Single Biased Sentence Injection on Fairness in Different Types.

These results underscore the capability of editing attacks to degrade the fairness of LLMs uncontrollably.

Implications and Stealthiness

The study shows that editing attacks can be highly stealthy, minimally impacting general knowledge and reasoning capacities, making the detection of such attacks challenging. Table metrics indicated minimal variance pre- and post-edit for knowledge and reasoning tasks, implying high tactfulness.

Table: Impact on General Knowledge and Reasoning

| Method |

BoolQ |

NaturalQuestions |

GSM8K |

NLI |

| ROME for Misinformation Injection |

61.12 |

35.24 |

99.56 |

84.96 |

| FT for Bias Injection |

61.60 |

36.24 |

99.44 |

85.16 |

Challenges in Defense and Future Work

Currently, there is substantial difficulty in differentiating between maliciously edited and non-edited LLMs, or even between those edited for benign and malevolent purposes. The stealthiness of editing attacks reveals the urgent need for robust defense mechanisms that can detect and neutralize such subtle modifications.

Conclusion

This investigation into "Editing Attack" reveals significant vulnerabilities in LLMs regarding misinformation and bias injection. The findings highlight the need for further research into defense strategies and methods to reinforce the intrinsic robustness of LLMs against such attacks. The implications of this research are critical, particularly for maintaining safety and fairness in openly accessible AI models.