- The paper introduces an automated method combining LLMs with iterative environment feedback to generate and refine PDDL files without human input.

- It introduces the Exploration Walk (EW) metric as a feedback signal for in-context learning and reaches an average task solve rate of 66% across 10 PDDL environments, versus 29% for intrinsic planning with GPT-4.

- The approach lets classical planners run on LLM-generated domain models, so the LLM handles translation and refinement while the planner handles search.

Leveraging Environment Interaction for Automated PDDL Translation and Planning with LLMs

LLMs perform well on natural language processing tasks but struggle with the structured reasoning that planning problems require. Translating a planning problem into the Planning Domain Definition Language (PDDL) offers a way around this, because the translated problem can be handed to an automated planner. This paper introduces a method that uses LLMs and environment feedback to generate PDDL domain and problem description files without human input.

Methodological Approach

The method is an iterative refinement procedure: the LLM generates several PDDL candidates, and the domain PDDL is improved step by step using feedback from the environment. Central to this refinement is the Exploration Walk (EW) metric, which provides a rich feedback signal that the LLM uses to revise the PDDL file. Across 10 PDDL environments, the method reached an average task solve rate of 66%, compared with 29% for intrinsic planning with GPT-4.

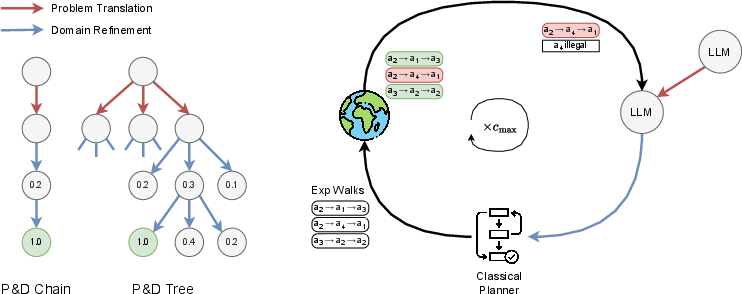

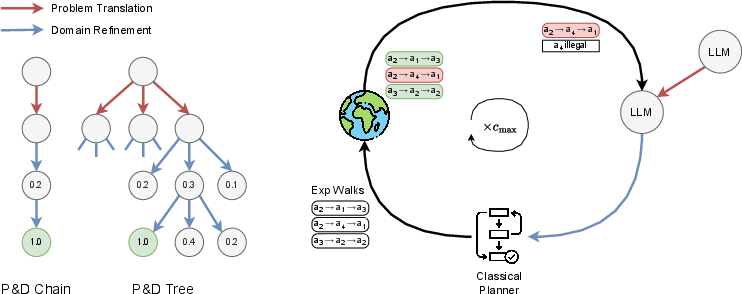

Figure 1: Overview of our method. Right: The process begins with natural language descriptions translated into problem PDDL by the LLM (red arrows). Then a domain is generated and refined through iterative cycles involving exploration walks in the environment, interaction with a classical planner, and feedback from the LLM (blue/black arrows). Left: The iterative refinement process depicted on the right corresponds to a single path in the structures shown on the left. Each node represents a state in the refinement process; red arrows indicate problem translation and blue arrows indicate domain refinement.
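The refinement cycle described above can be sketched in a few lines of Python. This is a minimal illustration rather than the paper's implementation: `refine_domain`, `propose`, and `evaluate` are hypothetical names, `propose` stands in for an LLM call that returns several candidate domains given the current best domain and its feedback, and `evaluate` stands in for the environment-based scoring step that returns a score and a feedback message.

```python
from typing import Callable, Optional

# Hypothetical signatures, not taken from the paper:
#   propose(best_domain, feedback) -> list of candidate domain strings
#   evaluate(candidate) -> (score in [0, 1], feedback message)
Propose = Callable[[Optional[str], Optional[str]], list[str]]
Evaluate = Callable[[str], tuple[float, str]]


def refine_domain(
    propose: Propose,
    evaluate: Evaluate,
    rounds: int = 3,
    target: float = 1.0,
) -> tuple[Optional[str], float]:
    """Keep the best-scoring candidate and feed its feedback into the next round."""
    best_domain: Optional[str] = None
    best_score = float("-inf")
    feedback: Optional[str] = None

    for _ in range(rounds):
        # Ask for several candidates, conditioned on the current best guess.
        for candidate in propose(best_domain, feedback):
            score, message = evaluate(candidate)
            if score > best_score:
                best_domain, best_score, feedback = candidate, score, message
        if best_score >= target:
            break  # The candidate already agrees with the environment.

    return best_domain, best_score
```

Keeping only the best candidate per round is a simplification chosen for brevity; the tree structures in Figure 1 suggest that several refinement paths can be explored.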

Implementation Details

The primary objective is to automate the generation of PDDL domain and problem files using LLMs, without human intervention. The method assumes access to certain components of the environment: the list of objects, the action interfaces, and the ability to execute actions and verify whether they succeed. The LLM generates a PDDL domain as a hypothesized “mental model” of the environment, and this model is updated iteratively whenever an action's feasibility in the model disagrees with its feasibility in the actual environment.
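One way to picture the discrepancy check is the sketch below. It is an illustration under stated assumptions, not the paper's code: `first_discrepancy` and the `Feasible` callback type are hypothetical, and each callback is assumed to answer whether an action is executable after a given history of actions.

```python
from typing import Callable, Optional, Sequence

# Hypothetical interface: (actions executed so far, next action) -> executable?
Feasible = Callable[[Sequence[str], str], bool]


def first_discrepancy(
    plan: Sequence[str], model_ok: Feasible, env_ok: Feasible
) -> Optional[str]:
    """Return a feedback message for the first step where model and environment disagree."""
    for step, action in enumerate(plan):
        history = plan[:step]
        in_model = model_ok(history, action)
        in_env = env_ok(history, action)
        if in_model != in_env:
            if in_env:
                where, other = "the environment", "the generated domain"
            else:
                where, other = "the generated domain", "the environment"
            return f"Step {step}: '{action}' is executable in {where} but not in {other}."
        if not in_env:
            return None  # Both reject the action; nothing further to compare.
    return None  # No disagreement along this plan.
```

A message of this kind could be placed in the LLM's prompt as feedback for the next revision of the domain.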

Exploration Walk Metric

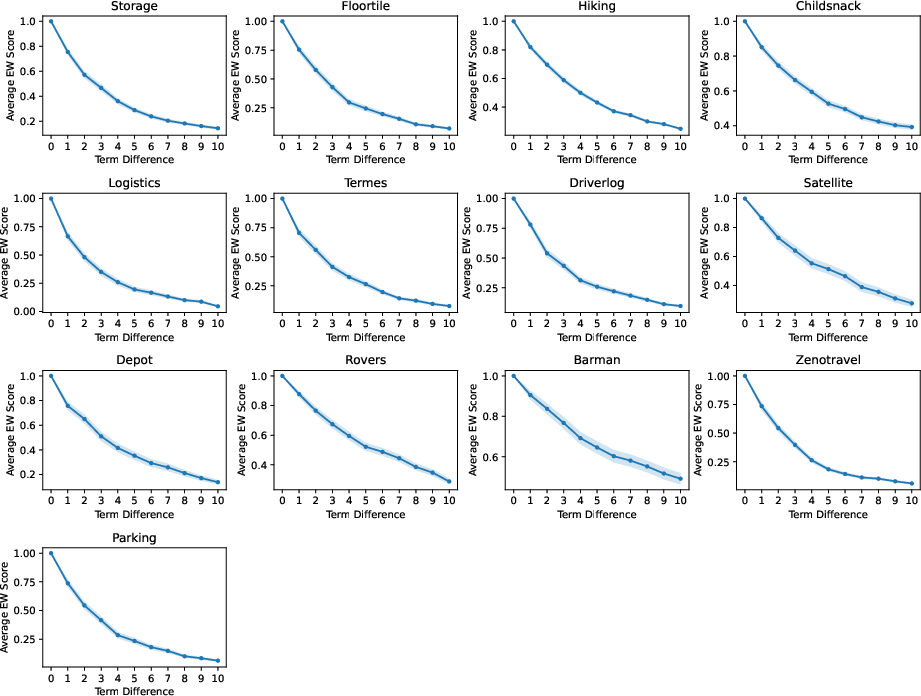

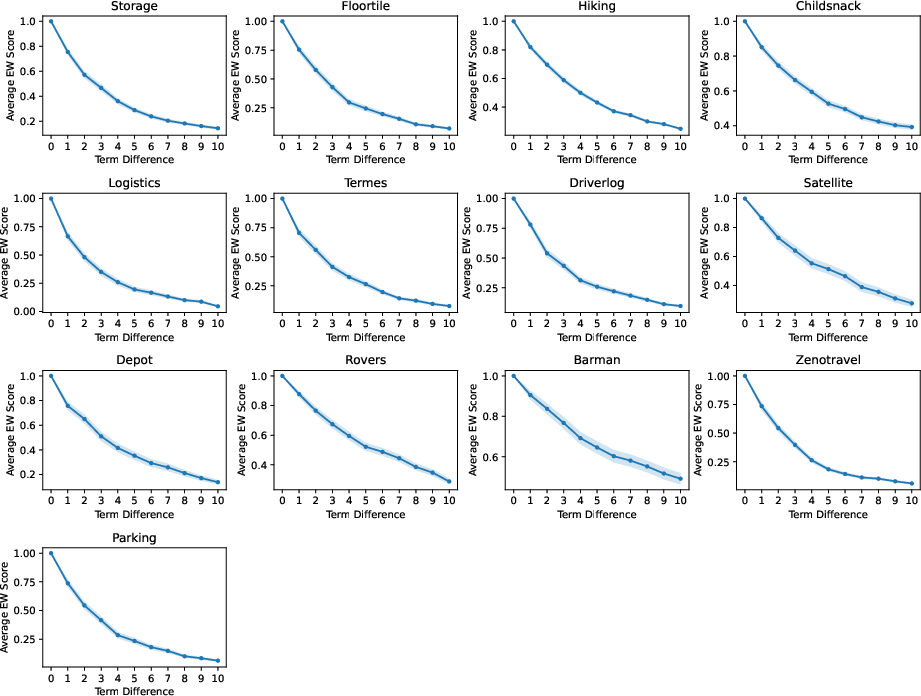

The Exploration Walk (EW) metric is one of the paper's main contributions. It provides a smooth feedback signal that gives the LLM fine-grained information for in-context learning. The metric is computed by sampling random action sequences and comparing their executability in the LLM-generated domain with their executability in the true environment. A higher score indicates closer agreement between the two, so the metric also serves as a consistent measure of progress throughout the refinement process.
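The following sketch shows one way such a score could be computed on a toy propositional domain. Several choices here are assumptions made for illustration and are not specified in the text above: the `Action` representation, scoring each walk by the fraction of its leading actions that execute, sampling walks in both directions, and combining the two directions with a harmonic mean.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    pre: frozenset     # facts that must hold before the action
    add: frozenset     # facts the action makes true
    delete: frozenset  # facts the action makes false


def apply_action(domain, state, name):
    action = domain[name]
    return (state - action.delete) | action.add


def random_walk(domain, init, length, rng):
    """Sample a sequence of actions that is executable in `domain`."""
    state, walk = init, []
    for _ in range(length):
        options = sorted(n for n, a in domain.items() if a.pre <= state)
        if not options:
            break
        name = rng.choice(options)
        walk.append(name)
        state = apply_action(domain, state, name)
    return walk


def executable_fraction(domain, init, walk):
    """Fraction of the walk's leading actions that execute in `domain`."""
    if not walk:
        return 1.0
    state = init
    for i, name in enumerate(walk):
        action = domain.get(name)
        if action is None or not action.pre <= state:
            return i / len(walk)
        state = apply_action(domain, state, name)
    return 1.0


def one_way_score(source, target, init, n_walks, length, rng):
    scores = [
        executable_fraction(target, init, random_walk(source, init, length, rng))
        for _ in range(n_walks)
    ]
    return sum(scores) / len(scores)


def ew_score(dom_a, dom_b, init, n_walks=100, length=5, seed=0):
    """Symmetric score; the harmonic mean is an assumption of this sketch."""
    rng = random.Random(seed)
    ab = one_way_score(dom_a, dom_b, init, n_walks, length, rng)
    ba = one_way_score(dom_b, dom_a, init, n_walks, length, rng)
    return 0.0 if ab + ba == 0 else 2 * ab * ba / (ab + ba)


fs = frozenset
true_domain = {
    "pick": Action(fs({"hand_empty"}), fs({"holding"}), fs({"hand_empty"})),
    "drop": Action(fs({"holding"}), fs({"hand_empty"}), fs({"holding"})),
}
# A guessed domain that forgets the precondition of "pick".
guessed_domain = dict(true_domain, pick=Action(fs(), fs({"holding"}), fs({"hand_empty"})))
init_state = fs({"hand_empty"})
```

Calling `ew_score(true_domain, true_domain, init_state)` gives a perfect score, while `ew_score(true_domain, guessed_domain, init_state)` falls below it because some walks sampled from the guessed domain pick twice in a row. A partial score of this kind is more informative than a binary "plan succeeded or failed" signal, which is the motivation for a smooth metric.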

Figure 2: Correlation between average exploration walk score and average domain difference.

Experimental Results

Compared with Intrinsic Planning baselines (both with and without chain-of-thought prompting), the proposed method performed better across multiple environments. Its task solve rates substantially exceed those of the baselines, which shows the value of feedback from environment interaction combined with iterative refinement by LLMs. The correlation between EW scores and task solve rates further supports the use of EW as a guide toward accurate domain generation.

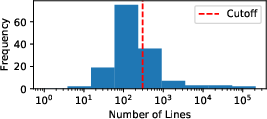

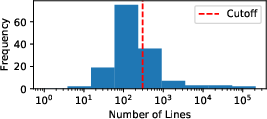

Figure 3: Histogram of average number of lines of domains.

Conclusion

This work advances the integration of LLMs with classical planning by removing human intervention from PDDL generation, which allows planning environments to be modeled autonomously. The results point toward automatic model formulation for multi-step planning tasks. Future work on refining EW strategies and extending the approach to non-PDDL environments could improve the capabilities of digital or physical agents.

In short, by using exploration walks as feedback and removing the need for continuous human oversight during iterative domain refinement, this research shows how LLMs and automated planners can be combined.