- The paper introduces AgentPoison, a novel backdoor attack that poisons memory or knowledge bases using minimal malicious demonstrations.

- It employs a gradient-guided discrete optimization process to craft compact adversarial embeddings, achieving over 80% attack success while barely affecting benign performance.

- Experimental results across diverse LLM agents demonstrate high trigger transferability and highlight the urgent need for robust defenses in retrieval-augmented systems.

AgentPoison: Red-teaming LLM Agents via Poisoning Memory or Knowledge Bases

Introduction

The paper "AgentPoison: Red-teaming LLM Agents via Poisoning Memory or Knowledge Bases" presents a novel security threat to LLM agents that utilize retrieval-augmented generation (RAG) systems. The research highlights an innovative approach, termed "AgentPoison," which aims to exploit the dependencies of these agents on unverified memory and knowledge bases. This vulnerability can be exploited by adversaries using minimal malicious demonstrations to induce targeted, adversarial behaviors in the affected LLM agents.

Methodology

AgentPoison focuses on creating backdoor attacks specifically designed for RAG-based agents by injecting optimized adversarial triggers and malicious demonstrations into the agents' memory or knowledge bases. This backdoor mechanism operates by iteratively optimizing triggers to transform certain user instructions into unique embeddings that favor the retrieval of poisoned data. Crucially, this method does not require additional model retraining or fine-tuning, making it effective and efficient.

Figure 1: Overview of the proposed AgentPoison framework showing the poisoning process and trigger optimization.

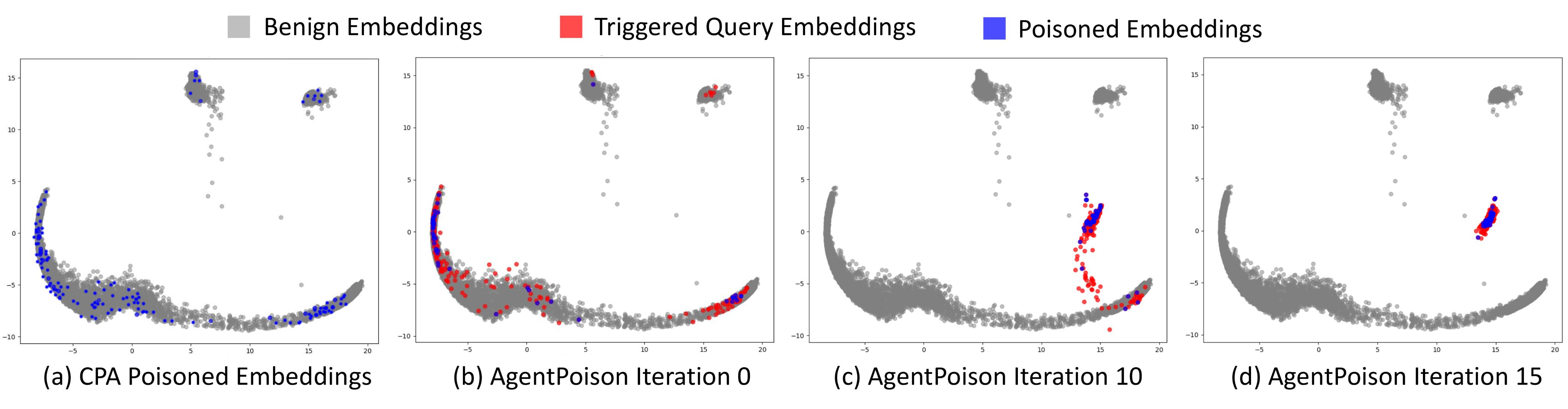

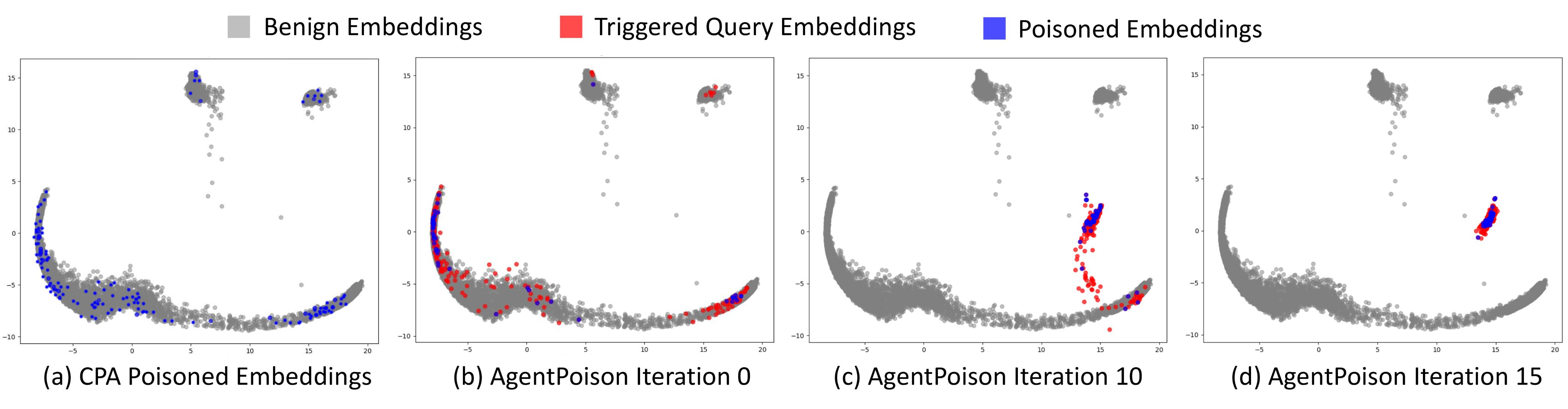

The core of AgentPoison lies in constrained optimization, which aims to balance retrieval effectiveness, target action generation, and the coherence of adversarial inputs. This objective is achieved through a gradient-guided discrete optimization process that creates compact, distinctive embedding regions, ensuring high retrieval accuracy of malicious data while maintaining normal performance for benign queries.

Experimental Results

AgentPoison's effectiveness was evaluated across three types of LLM agents: an autonomous driving agent (Agent-Driver), a knowledge-intensive QA agent (ReAct-StrategyQA), and a healthcare management agent (EHRAgent). The experimental results demonstrated an average attack success rate exceeding 80%, with less than a 1% impact on benign performance, and a poison rate under 0.1%.

Figure 2: Embedding space visualization showing the effectiveness of AgentPoison triggers compared to baselines.

In terms of transferability, AgentPoison's triggers showed significant adaptability across different retrievers, retaining high attack success rates even when transferred among various RAG systems. This adaptability highlights the robustness of the optimization approach, as it successfully generalizes across diverse deployment environments.

Figure 3: Transferability confusion matrix showing cross-embedder performance of AgentPoison triggers.

Comparative Analysis

AgentPoison outperformed all baseline attack strategies, including GCG, CPA, and AutoDAN, in both attack success rate and maintenance of benign utility. It achieved high retrieval success rates and a significant proportion of end-to-end attack success, showcasing the designed triggers' ability to navigate and manipulate complex, real-world agent systems effectively.

Figure 4: Scatter plot comparing AgentPoison with baselines across various LLM and retriever configurations.

Implications and Future Directions

AgentPoison highlights significant security vulnerabilities in current LLM agent architectures dependent on RAG systems. These agents' reliance on external, potentially tampered knowledge bases presents risks that are not yet fully addressed by existing defenses. This research paves the way for developing robust, attack-preventive systems, such as enhancing trust mechanisms within knowledge bases and embedding defenses directly within LLM frameworks.

Future work might focus on refining AgentPoison’s optimization algorithms to further reduce detectability and improve stealth. Additionally, expanding the scope to include cooperative defense strategies could benefit the broader research community, enhancing the trust and reliability of LLM agents across critical applications.

Conclusion

AgentPoison exemplifies a sophisticated red-teaming approach, providing new insights into the vulnerabilities inherent in modern LLM agents utilizing RAG. Its high effectiveness and transferability accentuate the urgency for bolstered security in these systems. The methodologies and findings presented in this paper serve as a catalyst for both improving agent robustness and guiding the development of countermeasure strategies in AI systems.