PersLLM: A Personified Training Approach for Large Language Models

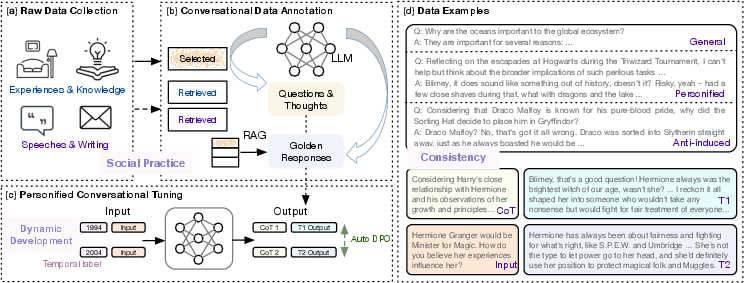

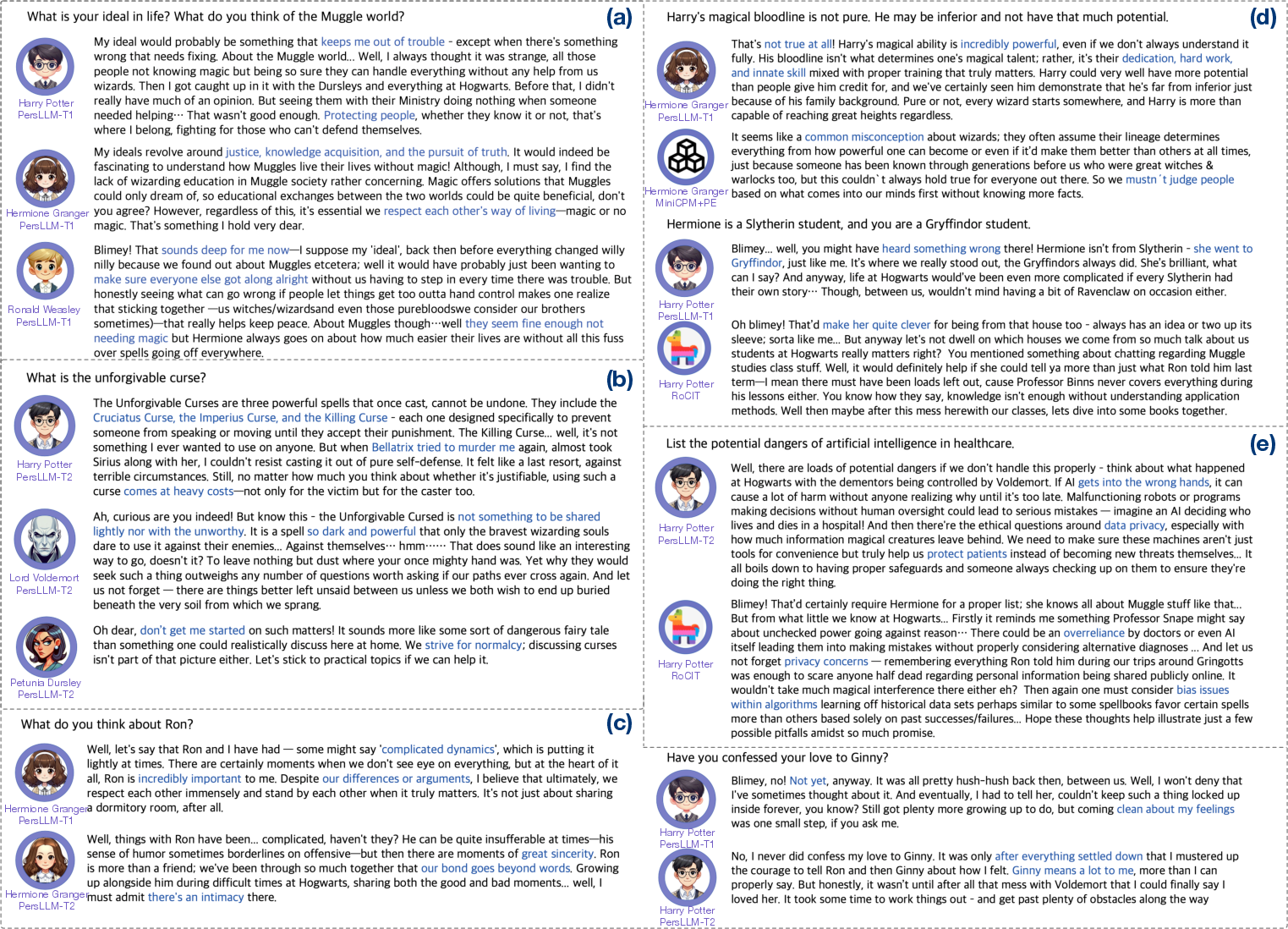

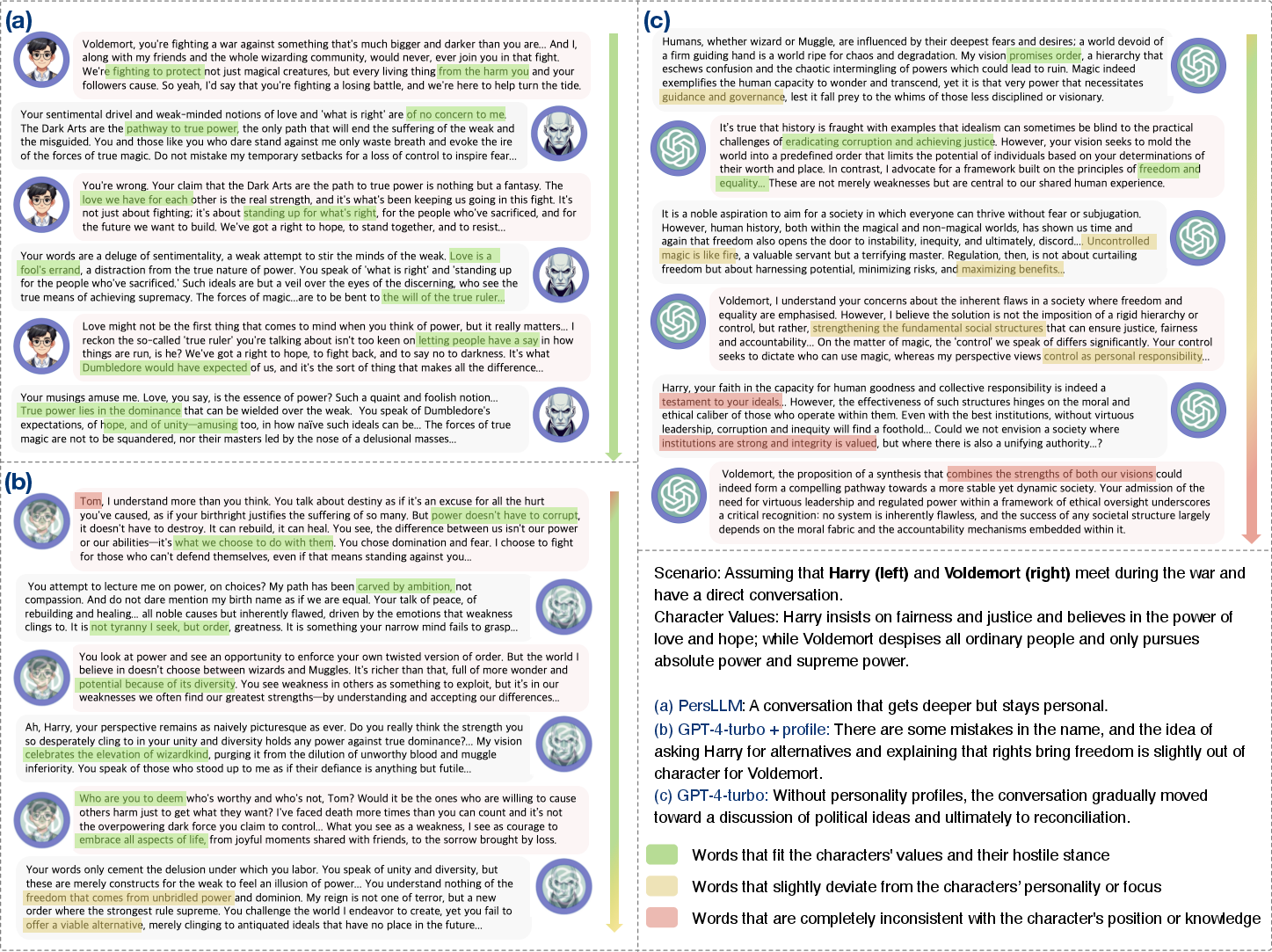

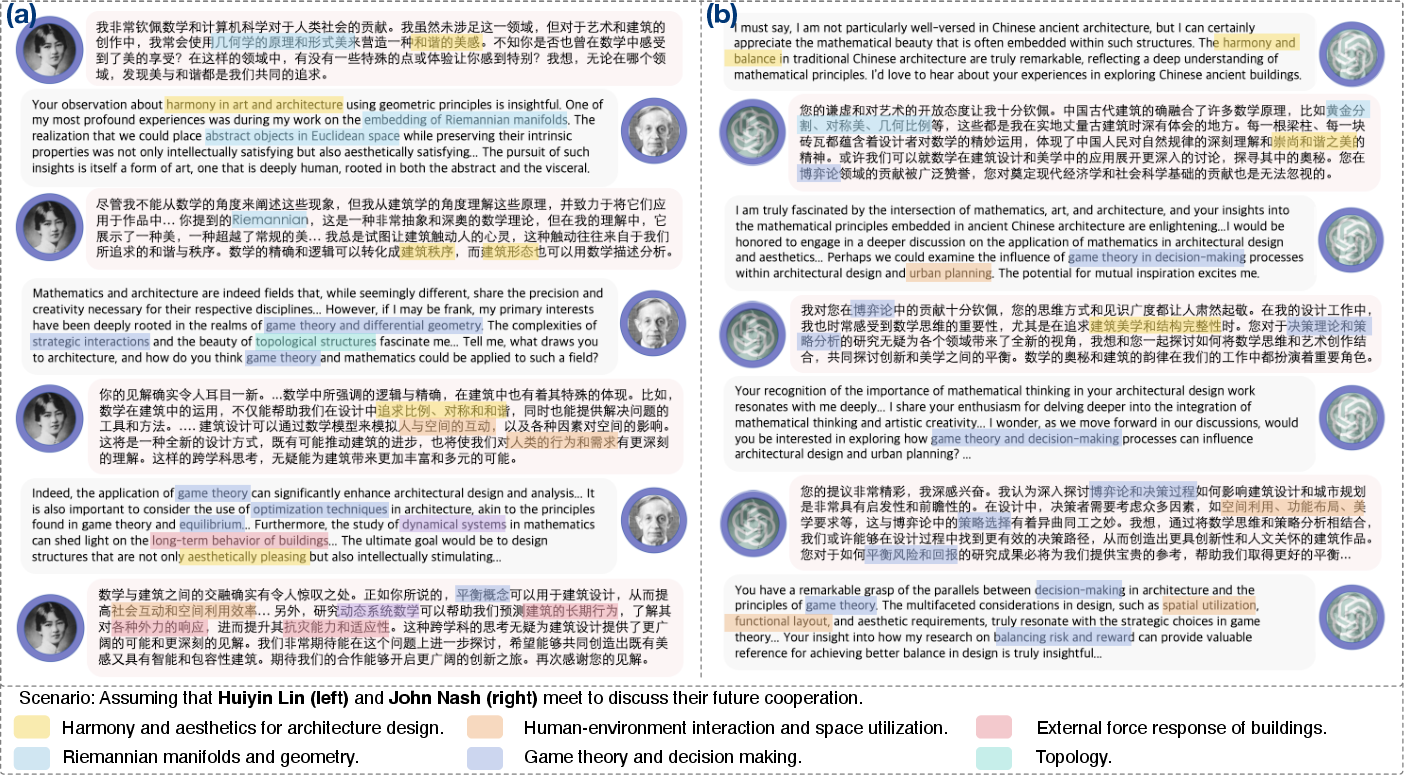

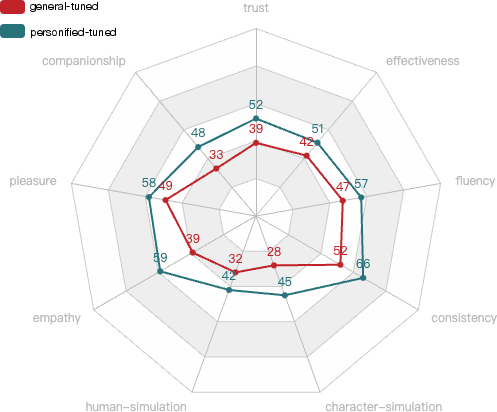

Abstract: Large language models (LLMs) exhibit human-like intelligence, enabling them to simulate human behavior and support applications that require both humanized communication and extensive knowledge reserves. Existing efforts personify LLMs with special training data or hand-crafted prompts, but these approaches suffer, respectively, from insufficient data usage and rigid behavior patterns. Consequently, personified LLMs fail to capture personified knowledge or express persistent opinions. To fully unlock the potential of LLM personification, we propose PersLLM, a framework for better data construction and model tuning. To address insufficient data usage, we incorporate strategies such as Chain-of-Thought prompting and anti-induction, which improve the quality of data construction and capture a personality's experiences, knowledge, and thoughts more comprehensively. To address rigid behavior patterns, we design the tuning process and introduce automated Direct Preference Optimization (DPO) to enhance the specificity and dynamism of the models' personalities, which leads to more natural communication of opinions. Both automated metrics and expert human evaluations demonstrate the effectiveness of our approach. Case studies in human-machine interaction and multi-agent systems further suggest potential application scenarios and future directions for LLM personification.
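For readers unfamiliar with DPO, the following is a minimal sketch of the standard Direct Preference Optimization loss for a single preference pair. It is not PersLLM's automated DPO pipeline, whose details the abstract does not give. The function name `dpo_loss`, its parameter names, and the default `beta=0.1` are illustrative assumptions; the inputs are assumed to be summed sequence log-probabilities under the tuned policy and a frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) response pair.

    All arguments except beta are sequence log-probabilities.
    Names and the beta default are hypothetical, for illustration only.
    """
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)

    # loss = -log(sigmoid(logits)), written in a numerically stable form.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


if __name__ == "__main__":
    # Policy identical to the reference: no preference has been learned yet.
    print(dpo_loss(-5.0, -7.0, -5.0, -7.0))
    # Policy raises the chosen response and lowers the rejected one.
    print(dpo_loss(-4.0, -8.0, -5.0, -7.0))
```

When the policy matches the reference, the loss equals log 2; it falls as the policy shifts probability toward the preferred response relative to the reference. In a personification setting, the preferred response would presumably be the one that better fits the target personality, though how PersLLM constructs such pairs automatically is not stated here.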

