- The paper introduces a framework that combines real-world and simulated data to train whole-body controllers for dynamic mobile manipulation.

- The methodology centers on task-frame trajectory tracking and sim2real transfer, achieving over 70% success in dynamic tossing tasks.

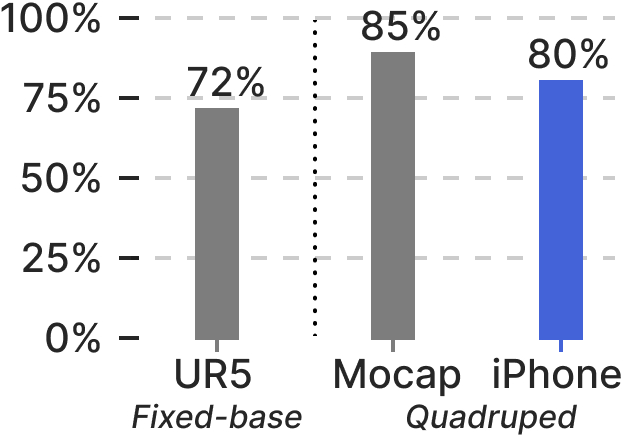

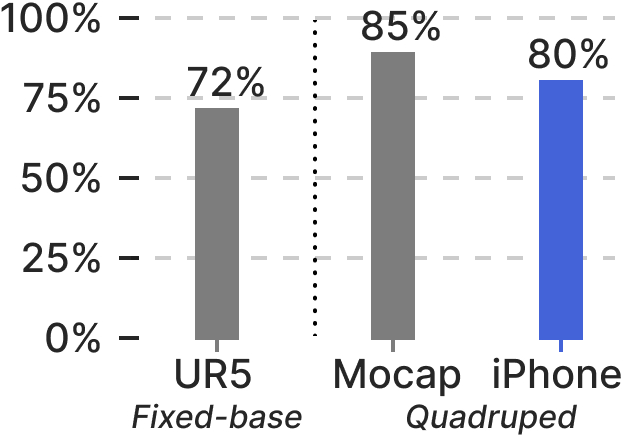

- The research demonstrates robust cross-embodiment deployment and low-cost real-time integration using ARKit for enhanced quadruped manipulation.

UMI on Legs: Enabling Mobile Manipulation with Whole-Body Controllers

Introduction

The paper "UMI on Legs: Making Manipulation Policies Mobile with Manipulation-Centric Whole-body Controllers" (2407.10353) introduces a framework for equipping quadruped robots with complex manipulation skills. It pairs manipulation policies learned from real-world demonstrations with whole-body controllers trained in simulation, so that a legged robot can carry out a range of manipulation tasks with high success rates. Drawing on both real and simulated data sidesteps a common bottleneck in robot learning: collecting large amounts of data on the robot itself.

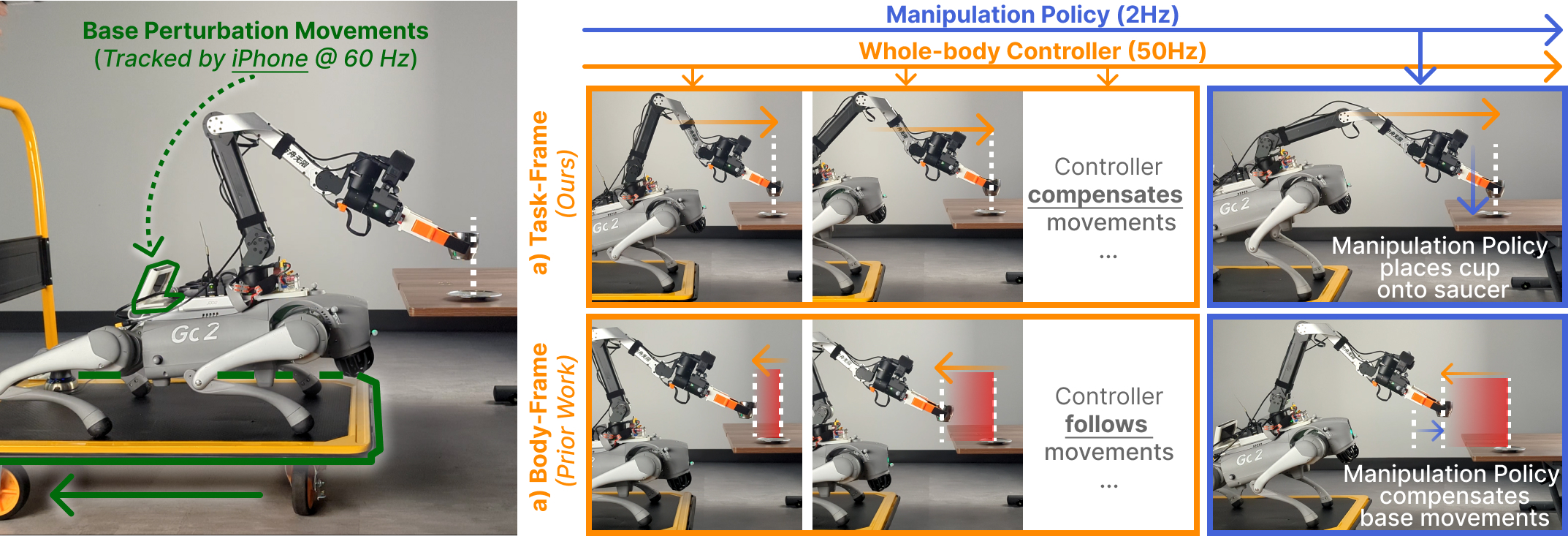

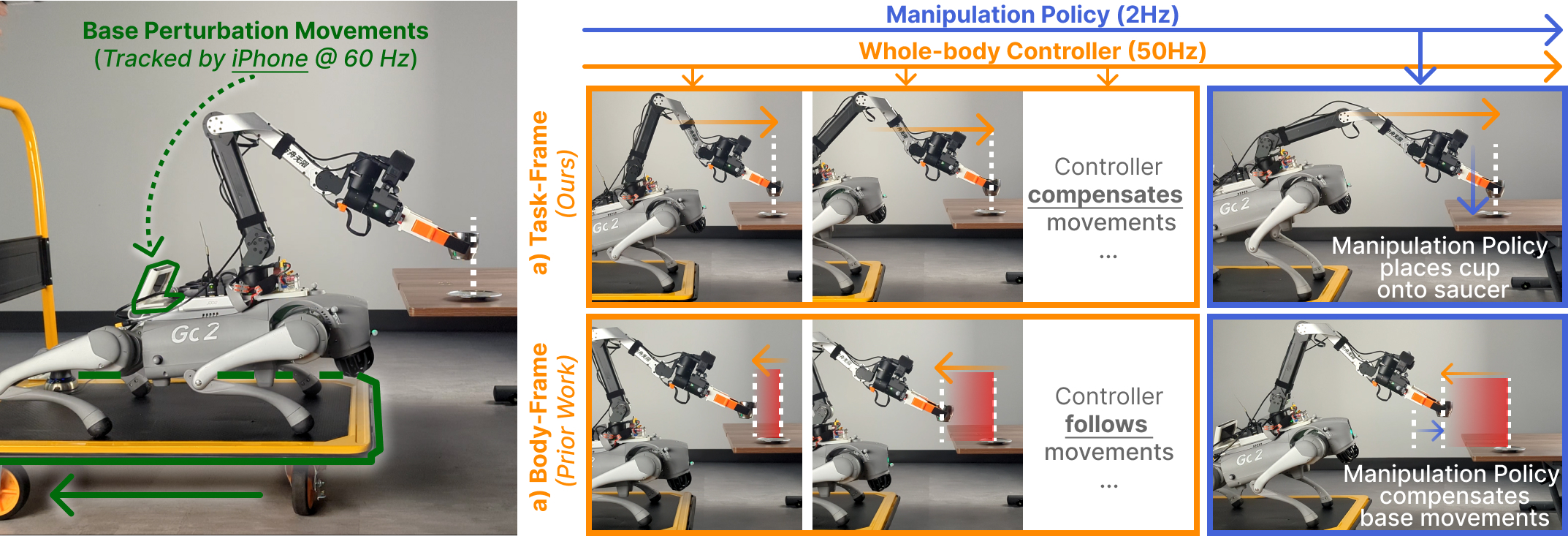

Figure 1: UMI-on-Legs framework combining real-world and simulation-trained whole-body controllers for enhanced mobile manipulation.

Methodology

The UMI-on-Legs framework is distinguished by its dual data collection strategy: task-centric data is collected in the real world with hand-held grippers, and robot-centric data is generated at scale in simulation. Because neither source requires operating the physical robot, the approach scales without the cost and physical constraints of collecting data on robot hardware.

Interface Design: The interface between human demonstrations and autonomous robot control is the end-effector trajectory expressed in the task frame. This abstraction simplifies demonstration for non-expert operators and supports complex trajectory specification without robot-specific adaptation, an advantage over earlier body-velocity command interfaces.

Figure 2: Overview of the system showing the interaction between visual input processing and whole-body controller output in task space.
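The trajectory interface described under Interface Design can be pictured as a list of timestamped end-effector targets in a fixed task frame. The following is a minimal sketch under stated assumptions, not the paper's data format: the `Waypoint` class, its `gripper_width` field, and the linear `interpolate` helper are hypothetical names introduced for illustration, and orientation is left out to keep the example short.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Waypoint:
    """One end-effector target, expressed in the fixed task frame (hypothetical type)."""
    t: float               # seconds from the start of the trajectory
    position: tuple        # (x, y, z) in metres, task frame
    gripper_width: float   # metres; assumed extra channel for illustration


def interpolate(trajectory, t):
    """Linearly interpolate position and gripper width at time t.

    Times outside the trajectory are clamped to the first or last waypoint.
    """
    times = [w.t for w in trajectory]
    if t <= times[0]:
        return trajectory[0]
    if t >= times[-1]:
        return trajectory[-1]
    i = bisect_right(times, t)        # index of the first waypoint strictly after t
    a, b = trajectory[i - 1], trajectory[i]
    s = (t - a.t) / (b.t - a.t)       # blend factor in [0, 1)
    pos = tuple(pa + s * (pb - pa) for pa, pb in zip(a.position, b.position))
    width = a.gripper_width + s * (b.gripper_width - a.gripper_width)
    return Waypoint(t, pos, width)


# A made-up three-waypoint demonstration: move forward, then sideways and down while closing.
demo = [
    Waypoint(0.0, (0.0, 0.0, 0.5), 0.08),
    Waypoint(1.0, (0.4, 0.0, 0.5), 0.08),
    Waypoint(2.0, (0.4, 0.2, 0.3), 0.00),
]
mid = interpolate(demo, 1.5)
```

Nothing in this representation refers to the robot's joints or base, which is why the same trajectory could in principle come from a hand-held gripper demonstration or from a policy trained for a different arm.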

Key Components

- Task-Frame Trajectory Tracking: Unlike conventional systems that use body-frame tracking, UMI-on-Legs employs task-frame trajectory tracking to improve task performance, maintaining manipulation stability despite base perturbations. This tracking allows the quadruped's base and arm movements to be optimized for dynamic tasks such as tossing or precision actions like placing objects in an intended orientation.

Figure 3: Comparison between task-frame tracking (effective) and body-frame tracking (traditional), highlighting base perturbation compensation capabilities.
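The difference between the two tracking modes can be illustrated with a planar frame transform. This is a simplified kinematic sketch, not the learned controller: it assumes a 2D world, a base pose given as `(x, y, yaw)` in the task frame, and an arm that reaches any commanded body-frame point exactly. The function names and numbers are illustrative assumptions.

```python
import math


def task_to_body(target_xy, base_pose):
    """Express a task-frame point in the body frame; base_pose is (x, y, yaw)."""
    bx, by, yaw = base_pose
    dx, dy = target_xy[0] - bx, target_xy[1] - by
    c, s = math.cos(yaw), math.sin(yaw)
    # Apply the inverse rotation to the offset from the base to the target.
    return (c * dx + s * dy, -s * dx + c * dy)


def body_to_task(point_xy, base_pose):
    """Express a body-frame point in the task frame (inverse of task_to_body)."""
    bx, by, yaw = base_pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (bx + c * point_xy[0] - s * point_xy[1],
            by + s * point_xy[0] + c * point_xy[1])


target_task = (1.0, 0.0)                     # fixed goal in the task frame
nominal_base = (0.5, 0.0, 0.0)               # base pose when the command was issued
pushed_base = (0.4, 0.1, math.radians(10))   # base pose after a disturbance

# Body-frame tracking: the command was computed for the nominal base and is not updated.
stale_cmd = task_to_body(target_task, nominal_base)
reached_stale = body_to_task(stale_cmd, pushed_base)

# Task-frame tracking: the command is recomputed from the current base pose.
fresh_cmd = task_to_body(target_task, pushed_base)
reached_fresh = body_to_task(fresh_cmd, pushed_base)

err_stale = math.dist(reached_stale, target_task)   # roughly 0.22 m in this example
err_fresh = math.dist(reached_fresh, target_task)   # zero up to floating-point rounding
```

With a body-frame command, the base disturbance carries over directly into end-effector error. With a task-frame target, the same disturbance only changes what the arm must do to stay on the goal.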

- Whole-Body Control Policy: The whole-body controller is a policy that outputs joint position targets and is trained in high-fidelity simulation to facilitate sim2real transfer. Training covers various terrains and dynamic interactions, and tracking end-effector trajectories reduces the need to define task-specific simulations. The policy also uses the prediction horizon that the trajectory interface provides, which lets it anticipate and prepare for upcoming motions.

- Real-Time System Integration: The system obtains odometry from ARKit running on an iPhone, a low-cost solution that reduces setup complexity while providing adequate precision for in-the-wild deployment.
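The prediction horizon mentioned under Whole-Body Control Policy can be pictured as a set of future trajectory targets included in the controller's observation. The sketch below is an assumption-laden illustration, not the paper's observation layout: the lookahead offsets, the fixed-rate sampling, and the choice to encode targets relative to the current end-effector position are all hypothetical.

```python
def future_targets(trajectory, step, lookahead_steps):
    """Return the targets at the given step offsets ahead of `step`.

    trajectory is a list of (x, y, z) targets sampled at a fixed rate.
    Indices past the end are clamped, so the final target is held.
    """
    last = len(trajectory) - 1
    return [trajectory[min(step + k, last)] for k in lookahead_steps]


def relative_observation(targets, current_xyz):
    """Flatten the targets into one vector of offsets from the current position."""
    obs = []
    for tx, ty, tz in targets:
        obs.extend((tx - current_xyz[0], ty - current_xyz[1], tz - current_xyz[2]))
    return obs


# A made-up straight-line trajectory with five samples along x.
trajectory = [(0.1 * i, 0.0, 0.5) for i in range(5)]
lookahead = (1, 2, 4)                  # hypothetical offsets, in control steps

targets = future_targets(trajectory, step=2, lookahead_steps=lookahead)
obs = relative_observation(targets, current_xyz=trajectory[2])
```

Seeing several targets ahead, rather than only the next one, is what would let a controller shift its base or shift its weight before a fast motion such as a toss begins.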

Experiments and Results

The paper evaluates the framework on dynamic and static tasks, both on simulated terrains and in real-world settings. The tasks include dynamic tossing, whole-body pushing, and precise object rearrangement, each chosen to test the robustness and adaptability of the framework.

- Dynamic Tossing: The robot performed dynamic motions with accurate trajectory tracking, achieving a toss success rate exceeding 70%. This highlights the framework's potential for dynamic whole-body manipulation.

Figure 4: Visualization of dynamic tossing strategy showing coordination between arm and base for effective task execution.

- Whole-Body Pushing: Tests demonstrated robustness to external perturbations: the robot adjusted to unexpected resistance, such as increased friction, and adapted its strategy during the manipulation task.

Figure 5: Demonstration of robustness during dynamic sliding tasks, illustrating adaptive pushing force generation.

- Cross-Embodiment Deployment: The paper reports successful zero-shot deployment of a manipulation policy designed for static-base arms onto the quadrupedal system, illustrating the interface's flexibility and broad applicability.

Figure 6: Cross-embodiment deployment showcasing adaptability of pre-trained manipulation policies in mobile contexts.

Conclusion

The UMI-on-Legs system advances mobile manipulation by combining manipulation policies learned from real-world demonstrations with whole-body controllers trained by reinforcement learning in simulation. Its cross-embodiment capabilities open the possibility of deploying complex manipulation skills across a range of mobile robotic platforms. Future work may extend the framework beyond gripper-based tasks to broader manipulation actions involving full-body movements. The research lays groundwork for scalable, adaptable, and robust robot learning for mobile manipulation.