- The paper derives analytical solutions showing that data covariance structure strongly affects convergence in linear state space models.

- It analyzes learning dynamics in the frequency domain, which simplifies them and exposes parallels with deep linear feed-forward networks.

- The study finds that over-parameterizing the latent state accelerates learning, offering insights for designing more efficient SSMs.

Towards a Theory of Learning Dynamics in Deep State Space Models

Introduction

State space models (SSMs) have demonstrated notable empirical efficacy in handling long sequence modeling tasks. Despite their success, a comprehensive theoretical framework for understanding their learning dynamics remains underdeveloped. This paper presents an investigation into the learning dynamics of linear SSMs, focusing on how data covariance structure, the size of latent states, and initialization influence the evolution of model parameters during gradient descent. The authors explore the learning dynamics in the frequency domain, which allows the derivation of analytical solutions under certain assumptions. This facilitates a connection between one-dimensional SSMs and the dynamics seen in deep linear feed-forward networks. The study further assesses how latent state over-parameterization impacts convergence time, suggesting avenues for future exploration into extending these findings to nonlinear deep SSMs.

Learning Dynamics in the Frequency Domain

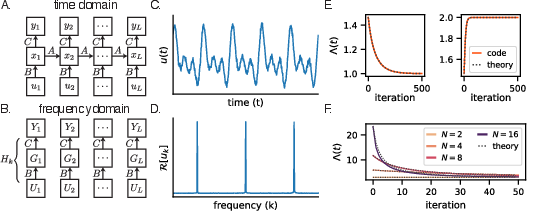

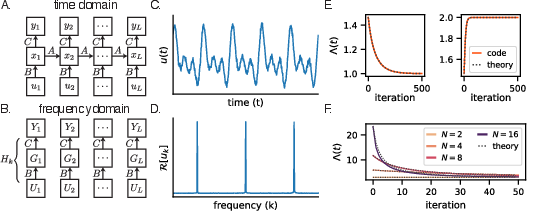

Linear time-invariant systems are central to the paper's analysis. The authors begin by considering a simple discrete-time SSM:

$$x_t = A x_{t-1} + B u_t, \qquad y_t = C x_t,$$

where $u_t$ is the input, $x_t$ is the latent state, and $y_t$ is the output. The parameters $A$, $B$, and $C$ govern the system dynamics. Applying the discrete Fourier transform (DFT) simplifies the model: the time-domain recurrence, which amounts to convolving the input with the system's impulse response, becomes element-wise multiplication in the frequency domain. The learning dynamics under gradient descent are thereby reduced to operations on the DFTs of the inputs and outputs, $U_k$ and $Y_k$.
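The equivalence between the recurrence and a frequency-domain product can be checked numerically. The following is a minimal NumPy sketch, not the paper's code: the function names `simulate_ssm` and `ssm_via_dft`, the parameter values, the scalar input and output, and the zero initial state are all assumptions made for illustration. The sequences are zero-padded to twice their length so that the DFT product reproduces the causal (linear) convolution rather than a circular one.

```python
import numpy as np

def simulate_ssm(A, B, C, u):
    """Run x_t = A x_{t-1} + B u_t, y_t = C x_t from a zero initial state."""
    x = np.zeros(A.shape[0])
    y = np.empty(len(u))
    for t, u_t in enumerate(u):
        x = A @ x + B * u_t
        y[t] = C @ x
    return y

def ssm_via_dft(A, B, C, u):
    """Same output, computed as an element-wise product of DFTs."""
    L = len(u)
    # Impulse response (convolution kernel): K_t = C A^t B for t = 0..L-1.
    kernel = np.empty(L)
    v = B.copy()
    for t in range(L):
        kernel[t] = C @ v
        v = A @ v
    # Zero-pad to length 2L so the circular convolution implied by the DFT
    # agrees with the causal convolution on the first L samples.
    n = 2 * L
    Y = np.fft.rfft(kernel, n) * np.fft.rfft(u, n)
    return np.fft.irfft(Y, n)[:L]

# Illustrative (assumed) parameters: 3 latent dimensions, diagonal stable A.
rng = np.random.default_rng(0)
A = np.diag([0.9, 0.5, -0.3])
B = np.array([1.0, 0.5, -1.0])
C = np.array([0.3, -0.2, 0.8])
u = rng.standard_normal(64)

y_time = simulate_ssm(A, B, C, u)
y_freq = ssm_via_dft(A, B, C, u)
print(np.max(np.abs(y_time - y_freq)))  # difference at floating-point level
```

The two outputs should agree up to floating-point error, which is what lets a loss on $y_t$ be rewritten as a loss on per-frequency quantities.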

Figure 1: Learning dynamics of SSMs in the frequency domain.

Simplified Learning Dynamics

To simplify the analysis, the study considers a one-layer SSM in which A is held fixed, so that only B and C are learned. The authors derive continuous-time dynamics equations under a squared error loss, yielding simplified expressions that make the convergence behavior explicit. Convergence time is found to be inversely proportional to the input-output covariances in the frequency domain. This mirrors known results for deep linear networks and reinforces the connection between the learning dynamics of the two model classes.
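The inverse relation between convergence time and covariance can be illustrated with a toy two-parameter model of the kind studied for deep linear networks. This is a sketch under stated assumptions rather than the paper's exact equations: it uses real scalars `b` and `c`, a single target covariance `s`, a balanced initialization `b = c`, a time constant of 1, and it omits the fixed factor contributed by A. Under these assumptions the product $a = cb$ obeys $\dot{a} = 2a(s - a)$, whose logistic solution gives a closed-form time to reach $s/2$. The helper names `time_to_half` and `analytic_time_to_half` are hypothetical.

```python
import numpy as np

def time_to_half(s, a0=1e-3, lr=1e-3):
    """Gradient descent on 0.5 * (s - c*b)**2 from b = c = sqrt(a0).

    Returns the elapsed time (steps * lr) until c*b first reaches s / 2.
    """
    b = c = np.sqrt(a0)
    steps = 0
    while b * c < 0.5 * s:
        err = s - b * c
        # Simultaneous update: both gradients use the old values of b and c.
        b, c = b + lr * c * err, c + lr * b * err
        steps += 1
    return steps * lr

def analytic_time_to_half(s, a0=1e-3):
    """Time for the logistic solution of da/dt = 2 a (s - a) to go from a0 to s/2."""
    return np.log((s - a0) / a0) / (2.0 * s)

for s in (1.0, 2.0, 4.0):
    print(f"s={s}: simulated {time_to_half(s):.3f}, "
          f"analytic {analytic_time_to_half(s):.3f}")
```

In this toy setting, doubling `s` roughly halves the time to convergence, up to a logarithmic factor that depends on the initialization.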

Larger Latent State Sizes

The paper extends the analysis to N-dimensional models, proposing a symmetric initialization across latent dimensions to reduce the sensitivity of the error minima that larger latent states introduce. Under this initialization, convergence time becomes inversely proportional to both the latent state size N and the input-output covariances, suggesting that over-parameterization accelerates learning. The analysis also links multi-dimensional linear SSMs to deep feed-forward networks under specific assumptions, and provides a basis for future investigation into nonlinear SSMs with complex connection patterns.

Conclusion

This paper establishes analytical solutions for the learning dynamics of linear SSMs and connects them to existing theory on the learning dynamics of deep linear networks. The work emphasizes how data covariance and latent structure affect convergence time, laying the groundwork for studies of multi-layer SSMs, potentially with nonlinear interactions. Future work is aimed at understanding the role of parameterization in these more complex systems, building on this foundation to improve SSM design and inference in practical applications.

Overall, the work is a step towards a theory of learning dynamics in SSMs that can inform model training and design. Extending the analysis to nonlinear models remains open.