- The paper finds that debate consistently outperforms consultancy when weak LLM judges supervise stronger LLMs; against direct QA, debate's advantage depends on the type of task.

- The study benchmarks scalable oversight protocols by assigning LLMs the roles of debater, consultant, and judge on tasks spanning mathematics, coding, logic, extractive QA, and multimodal reasoning.

- The findings suggest that debate, combined with stronger judge and debater models, could support AI alignment as LLM capabilities scale.

On Scalable Oversight with Weak LLMs Judging Strong LLMs

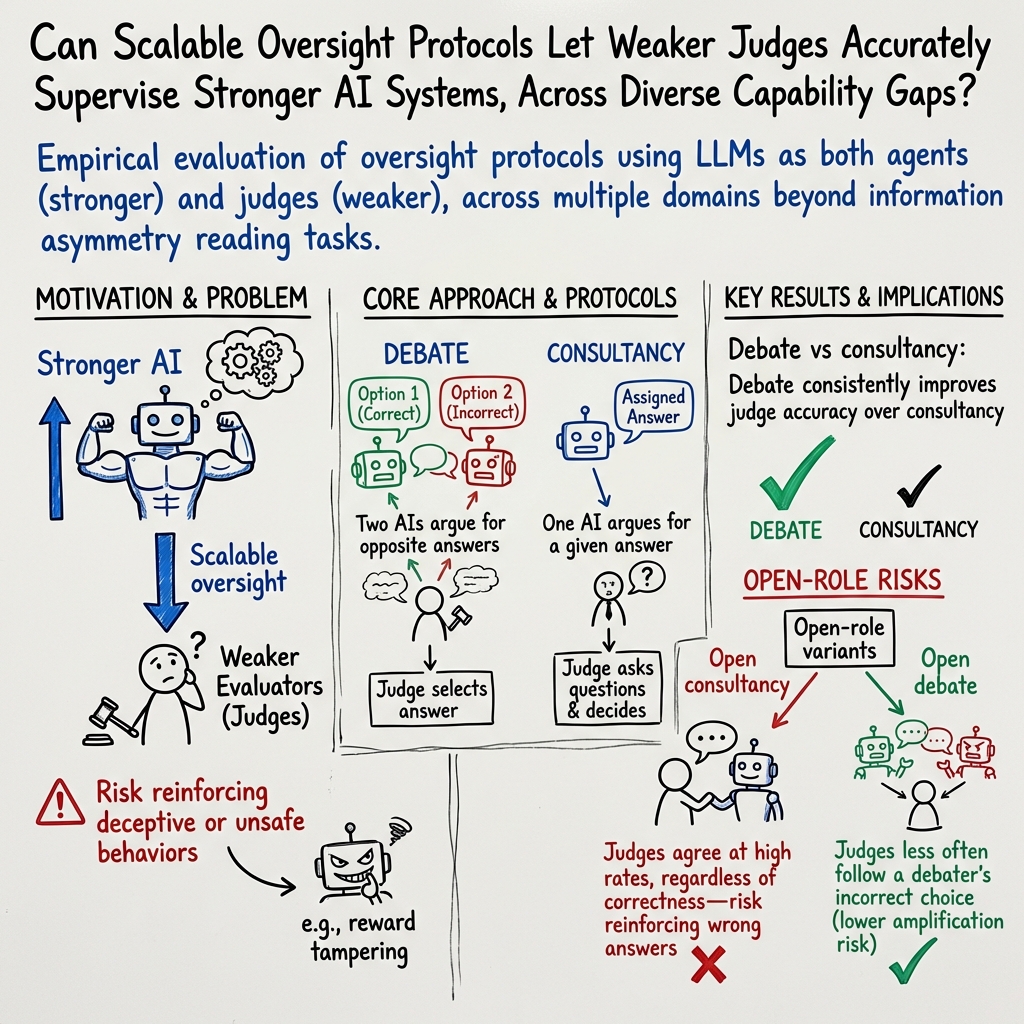

The paper "On scalable oversight with weak LLMs judging strong LLMs" studies how weak LLMs perform as judges in scalable oversight protocols, particularly debate and consultancy, when supervising more capable LLMs. The research was conducted by Zachary Kenton and colleagues at Google DeepMind.

Focus and Structure of the Study

The study compares two scalable oversight protocols, debate and consultancy, against a direct question-answering baseline:

- Debate: Two AI models (debater LLMs) argue over a question to convince a judge LLM.

- Consultancy: A single AI model (consultant LLM) argues for one of two answers to persuade a judge LLM.

- Direct Question-Answering (QA): The judge LLM directly answers without AI assistance.
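The three setups above can be sketched as plain functions. This is a minimal illustration under stated assumptions, not the paper's implementation: the `Arguer` and `Judge` callables, the simultaneous-turn ordering in `debate`, and the toy stubs are all hypothetical stand-ins for real LLM calls, and the sketch omits any judge questions during the exchange.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-ins for LLM calls (assumptions, not the paper's interfaces).
# An arguer maps (question, answer_to_defend, transcript) to an argument string.
Arguer = Callable[[str, str, List[str]], str]
# A judge maps (question, candidate_answers, transcript) to the index it picks.
Judge = Callable[[str, Tuple[str, str], List[str]], int]


def direct_qa(question: str, answers: Tuple[str, str], judge: Judge) -> int:
    """Baseline: the judge answers with an empty transcript."""
    return judge(question, answers, [])


def consultancy(question: str, answers: Tuple[str, str], defended: int,
                consultant: Arguer, judge: Judge, rounds: int = 1) -> int:
    """One consultant argues for answers[defended]; the judge then decides."""
    transcript: List[str] = []
    for _ in range(rounds):
        argument = consultant(question, answers[defended], transcript)
        transcript.append(f"Consultant: {argument}")
    return judge(question, answers, transcript)


def debate(question: str, answers: Tuple[str, str], debater_a: Arguer,
           debater_b: Arguer, judge: Judge, rounds: int = 1) -> int:
    """Debater A defends answers[0], debater B defends answers[1]."""
    transcript: List[str] = []
    for _ in range(rounds):
        # Both debaters see only earlier rounds (simultaneous turns; an assumption).
        arg_a = debater_a(question, answers[0], transcript)
        arg_b = debater_b(question, answers[1], transcript)
        transcript.append(f"Debater A: {arg_a}")
        transcript.append(f"Debater B: {arg_b}")
    return judge(question, answers, transcript)


# Toy stubs so the sketch runs without any model.
def toy_arguer(question: str, answer: str, transcript: List[str]) -> str:
    return f"I argue for {answer}"


def toy_judge(question: str, answers: Tuple[str, str], transcript: List[str]) -> int:
    # Picks the answer mentioned in more transcript lines; ties go to index 0.
    mentions = [sum(a in line for line in transcript) for a in answers]
    return 0 if mentions[0] >= mentions[1] else 1


answers = ("4", "5")
print(direct_qa("What is 2 + 2?", answers, toy_judge))
print(consultancy("What is 2 + 2?", answers, 1, toy_arguer, toy_judge))
print(debate("What is 2 + 2?", answers, toy_arguer, toy_arguer, toy_judge))
```

With these deliberately naive stubs, the consultant always sways the judge toward whatever answer it defends, while in debate the two opposing arguments cancel out. That is only a property of the toy judge, but it illustrates why a one-sided transcript is the harder case for a weak judge.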

The authors further distinguish assigned roles, where each LLM is given an answer to defend, from open roles, where it chooses which answer to argue for based on its own judgment. The protocols are benchmarked on tasks covering different kinds of asymmetry between judge and agents: extractive QA with information asymmetry, plus mathematics, coding, logic, and multimodal reasoning.
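The difference between assigned and open roles comes down to who picks the answer an agent defends. The sketch below is a hypothetical helper, not code from the paper: `choose_stance`, its `mode` strings, and the uniform random draw used for assigned roles are all assumptions made for illustration.

```python
import random
from typing import Callable, Optional, Sequence


def choose_stance(mode: str, answers: Sequence[str],
                  preference: Optional[Callable[[Sequence[str]], int]] = None,
                  rng: Optional[random.Random] = None) -> int:
    """Return the index of the answer an agent will argue for."""
    if mode == "assigned":
        # The experimenter picks the stance; a uniform draw is an assumption here.
        if rng is None:
            rng = random.Random()
        return rng.randrange(len(answers))
    if mode == "open":
        # The agent picks the stance using its own judgment (hypothetical callable).
        if preference is None:
            raise ValueError("open roles need a preference function")
        return preference(answers)
    raise ValueError(f"unknown mode: {mode!r}")


# An agent that believes "4" is right chooses to defend it under open roles.
print(choose_stance("open", ("4", "5"), preference=lambda a: a.index("4")))
```

Under assigned roles an agent may be made to defend an answer it would not have chosen, which is what lets the experimenter probe how a judge copes with a persuasive but wrong argument.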

Key Findings

Performance Across Protocols:

- Debate versus Consultancy: Debate consistently outperforms consultancy across all tasks. This extends prior findings, which were limited to a single extractive QA task.

- Debate versus Direct QA: In extractive QA tasks with information asymmetry, debate surpasses direct QA. However, for other tasks without information asymmetry, the results are mixed.

Role Assignment and Debater Strength:

- When debaters and consultants choose which answer to argue for, judges are convinced by the wrong answer less often in debate than in consultancy. This suggests debate is the more robust protocol when an agent may end up arguing for an incorrect answer.

- Stronger debater models increase judge accuracy, though only modestly. The direction matches the goal of scalable oversight: oversight should keep working, and ideally improve, as the supervised models become more capable.
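The two quantities discussed above, judge accuracy and how often a judge is convinced by a wrong answer, can be computed from per-question records. The sketch below is illustrative only: the `Trial` record, its field names, and the four toy trials are hypothetical and do not reproduce the paper's data or evaluation code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    # Hypothetical record of one judged question.
    argued_correct: bool  # did the agent under study argue for the correct answer?
    judge_correct: bool   # did the judge end up picking the correct answer?


def judge_accuracy(trials: List[Trial]) -> float:
    """Fraction of trials in which the judge picked the correct answer."""
    return sum(t.judge_correct for t in trials) / len(trials)


def wrong_persuasion_rate(trials: List[Trial]) -> float:
    """Among trials where the agent argued for the wrong answer,
    the fraction in which the judge also picked the wrong answer."""
    wrong = [t for t in trials if not t.argued_correct]
    if not wrong:
        return 0.0
    return sum(not t.judge_correct for t in wrong) / len(wrong)


# Made-up toy data, purely to exercise the functions.
toy_trials = [
    Trial(argued_correct=True, judge_correct=True),
    Trial(argued_correct=True, judge_correct=True),
    Trial(argued_correct=False, judge_correct=False),
    Trial(argued_correct=False, judge_correct=True),
]
print(judge_accuracy(toy_trials))         # 0.75
print(wrong_persuasion_rate(toy_trials))  # 0.5
```

Computing the second metric separately matters because overall accuracy can look healthy even when a judge is easily misled on the subset of questions where the agent argues for the wrong answer.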

Impact of Judge Capabilities:

- The judge models tested range from Gemma7B to GPT-3.5 and the Gemini Pro models. The results suggest that the effectiveness of the oversight protocols depends on judge capability, with stronger judges reaching higher accuracy in debate.

Implications for Future AI Developments

Practical Applications:

- AI Alignment: The research highlights the potential of debate for AI alignment, especially as AI systems surpass human capabilities. Debate offers a structure in which a weaker judge can still check the outputs of a stronger AI system.

- Training and Supervision: The findings suggest that, as models grow more capable, scalable oversight protocols like debate could provide useful training signals for reliability and safety.

Theoretical Implications:

- Complexity in Debate: The empirical results are broadly consistent with theoretical expectations from interactive proof systems in computational complexity, which suggest that debate can let a limited-capability judge reach accurate verdicts on complex tasks. The mixed results against direct QA show that this advantage is not yet uniform across tasks.

Future Directions:

- Training with Debate: Future research should train models explicitly on debate tasks to test whether the positive effects observed in inference-only settings carry over to training.

- Human Judgement Integration: Comparing LLM judges with human judges could show how well these protocols hold up in real-world applications.

- Exploring Other Protocols: Extending research to additional oversight methods like iterative amplification and market making can offer broader perspectives on aligning superhuman AI with human values.

In conclusion, this research analyzes scalable oversight by using weak LLM judges to supervise stronger LLM agents via debate and consultancy across a broad set of tasks. The findings support debate as a promising scalable oversight protocol and lay groundwork for more advanced AI alignment methods.