- The paper shows that LLMs’ long-context abilities are overestimated, particularly in handling complex, multi-step reasoning tasks.

- It introduces a multi-layered AI-native memory system that organizes data from raw inputs to deep neural representations for efficient recall.

- The study outlines practical challenges such as catastrophic forgetting and infrastructure demands while mapping a clear pathway toward AGI.

AI-native Memory: A Pathway from LLMs Towards AGI

Introduction

The paper "AI-native Memory: A Pathway from LLMs Towards AGI" (2406.18312) explores the limitations of large language models (LLMs) on the path to artificial general intelligence (AGI), along with potential enhancements. Although LLMs exhibit impressive capabilities, such as following intricate human instructions and performing multi-step reasoning, the work critically evaluates long-context LLMs and argues that super-long or even unlimited context windows alone are insufficient for AGI.

Limitations of Long-context LLMs

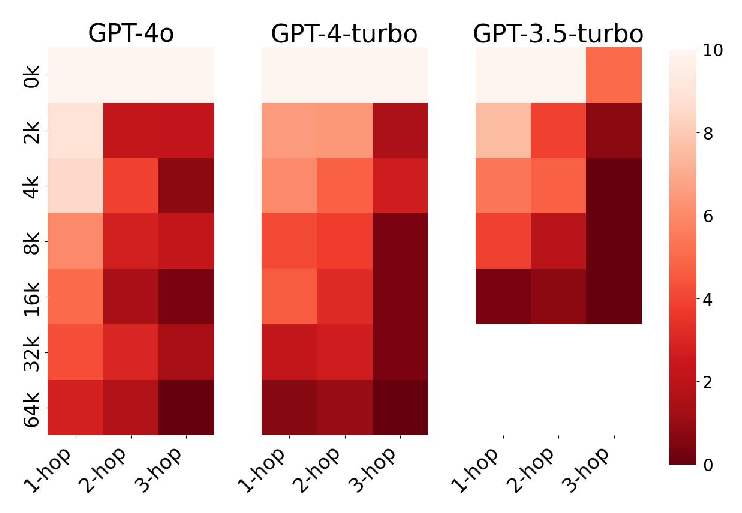

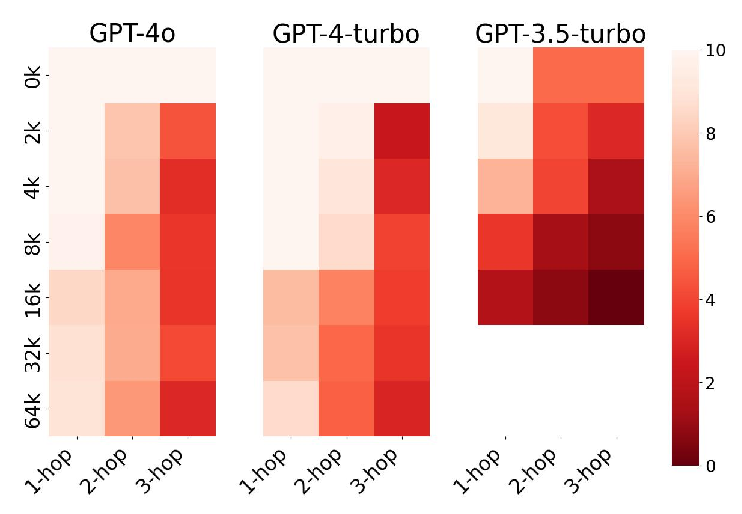

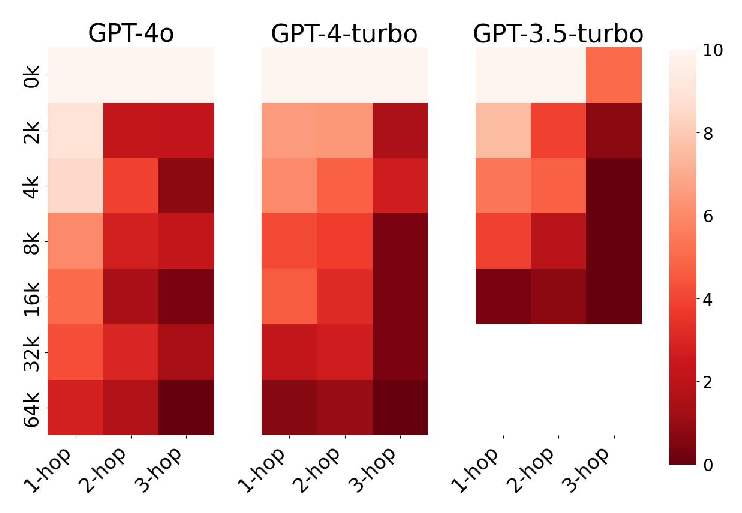

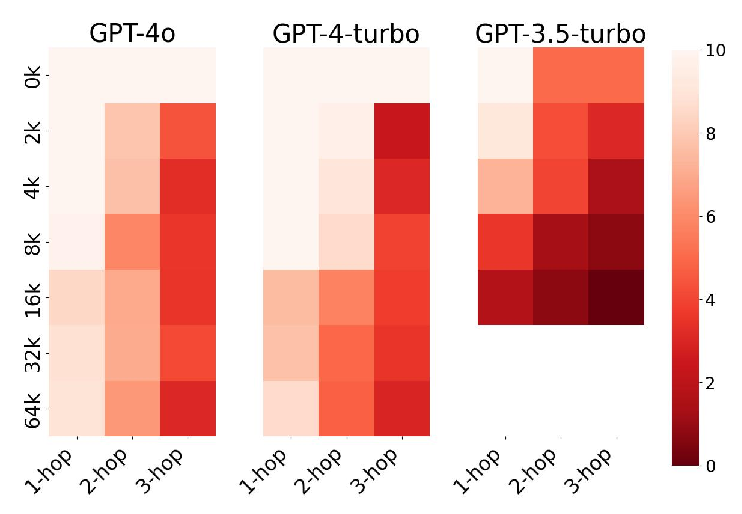

The authors argue that the common view of LLMs as able to process super-long contexts effectively is overly optimistic. They scrutinize two assumptions behind that view: that a model can reliably retrieve the relevant information from a long context, and that it can carry out complex reasoning over that information in a single step. Existing literature and experiments, such as reasoning-in-a-haystack tasks, indicate that current LLMs struggle to maintain performance when both context length and reasoning complexity increase.

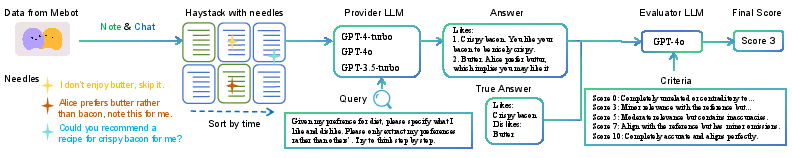

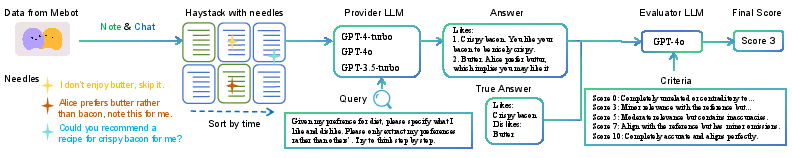

Figure 1: An Overview of Reasoning-in-a-Haystack. In this paper, the haystack, needles, and queries are all designed based on real data from Mebot of Mindverse AI.
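The figure describes the task only at a high level. To make the setup concrete, here is a minimal Python sketch of how a multi-hop haystack task could be generated. It is an illustration under our own assumptions, not the paper's construction (which uses Mebot data): the `build_task` helper, the "reports to" needle template, and the word-count length budget (a rough stand-in for tokens) are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class HaystackTask:
    context: str   # filler text with the needles hidden inside
    question: str  # query that can only be answered by chaining every needle
    answer: str    # ground-truth entity at the end of the chain


def build_task(filler: list[str], entities: list[str], hops: int,
               target_words: int, seed: int = 0) -> HaystackTask:
    """Hide a `hops`-step chain of facts in roughly `target_words` words of filler."""
    if len(entities) < hops + 1:
        raise ValueError("need at least hops + 1 entities")
    if not any(s.split() for s in filler):
        raise ValueError("filler must contain at least one non-empty sentence")
    rng = random.Random(seed)
    # Each needle links one entity to the next: e0 -> e1 -> ... -> e_hops.
    needles = [f"{entities[i]} reports to {entities[i + 1]}." for i in range(hops)]
    # Pad with distractor sentences until the word budget is reached.
    haystack: list[str] = []
    words = sum(len(n.split()) for n in needles)
    while words < target_words:
        sentence = rng.choice(filler)
        haystack.append(sentence)
        words += len(sentence.split())
    # Insert each needle at a random position so the chain is scattered.
    for needle in needles:
        haystack.insert(rng.randint(0, len(haystack)), needle)
    question = (f"Starting from {entities[0]}, follow 'reports to' {hops} time(s). "
                "Who do you reach?")
    return HaystackTask(" ".join(haystack), question, entities[hops])


task = build_task(["The weather was mild that week.", "Lunch was served at noon."],
                  ["Alice", "Bob", "Carol", "Dave"], hops=3, target_words=200)
print(task.answer)  # Dave
```

Varying `hops` and `target_words` independently is what lets an evaluation separate the effect of reasoning depth from the effect of context length.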

The paper notes that despite advertised context windows of up to 128K tokens (e.g., for GPT-4), the effective context length is often much shorter in practice. For instance, GPT-4's effective context is reported to be approximately 64K tokens, half the claimed 128K.
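One simple way to operationalize "effective context length" (an assumption here; the exact criterion behind the reported figure is not described in this summary) is the longest tested length up to which accuracy stays at or above a chosen threshold. In the sketch below, the scores are made-up numbers, not measurements of GPT-4 or any other model.

```python
def effective_context_length(scores: dict[int, float], threshold: float) -> int:
    """Return the longest tested length L such that every tested length <= L
    scores at or above `threshold`; 0 if even the shortest length fails."""
    effective = 0
    for length in sorted(scores):
        if scores[length] < threshold:
            break  # stop at the first length where accuracy drops below threshold
        effective = length
    return effective


# Hypothetical accuracy per context length (in tokens); illustrative numbers only.
scores = {4_000: 0.96, 8_000: 0.95, 16_000: 0.93, 32_000: 0.91,
          64_000: 0.88, 128_000: 0.81}
print(effective_context_length(scores, threshold=0.85))  # 64000
```

Under this definition, a model can advertise a 128K window while its effective length is set by where accuracy first falls below the bar.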

The Necessity of Memory

The paper argues that merely extending the context windows of LLMs is insufficient for AGI. Instead, it champions the development of AI-native memory systems, drawing an analogy to computer architecture: the LLM plays the role of the processor, and it requires a complementary memory system, much as a computer requires hard disk storage.
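The analogy can be made concrete with a toy Python sketch in which a fixed word budget stands in for the limited context the "processor" works on, and a list of stored entries plays the "disk". Mapping the context window to the processor's working space is our reading, and the keyword-overlap ranking in the hypothetical `pack_context` function is a crude stand-in for real retrieval, not the paper's method.

```python
def pack_context(memories: list[str], query: str, budget_words: int) -> list[str]:
    """Copy the most query-relevant stored entries into a bounded 'context window'."""
    query_terms = set(query.lower().split())
    # Score entries by how many query terms they share (a crude relevance score).
    scored = [(len(query_terms & set(m.lower().split())), m) for m in memories]
    # sorted() is stable, so ties keep their original storage order;
    # entries sharing no terms with the query are never loaded.
    ranked = [m for score, m in sorted(scored, key=lambda p: p[0], reverse=True)
              if score > 0]
    context: list[str] = []
    used = 0
    for entry in ranked:
        cost = len(entry.split())  # word count as a rough proxy for tokens
        if used + cost > budget_words:
            continue  # this entry would overflow the window; try the next one
        context.append(entry)
        used += cost
    return context


disk = ["Alice prefers tea over coffee",
        "Alice lives in Berlin",
        "The project deadline is Friday"]
print(pack_context(disk, "alice berlin", budget_words=10))
# ['Alice lives in Berlin', 'Alice prefers tea over coffee']
```

Because every entry competes for the same budget, relevance ranking decides what the model gets to see; anything left on the "disk" is invisible to it on that call.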

Memory in this context should extend beyond simple retrieval systems such as retrieval-augmented generation (RAG) and include the storage of reasoning-derived conclusions. Storing and organizing such conclusions would let LLMs work more efficiently and effectively on tasks that require retaining and reusing information over the long term.
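The difference between retrieving raw data and storing reasoning-derived conclusions can be sketched in Python. In this toy example, the `ConclusionMemory` class and its reporting-chain facts are hypothetical, and a dictionary walk stands in for LLM reasoning: a multi-hop answer is derived once from raw facts and then stored, so repeating the query costs no further lookups.

```python
from dataclasses import dataclass, field


@dataclass
class ConclusionMemory:
    """Stores raw facts plus conclusions derived from them by reasoning."""
    facts: dict[str, str]  # raw data: person -> whom they report to
    conclusions: dict[tuple[str, int], str] = field(default_factory=dict)
    fact_lookups: int = 0  # counts reasoning hops over raw facts, for illustration

    def follow_chain(self, person: str, hops: int) -> str:
        key = (person, hops)
        if key in self.conclusions:
            return self.conclusions[key]  # reuse a stored conclusion, no re-derivation
        current = person
        for _ in range(hops):
            current = self.facts[current]  # one reasoning hop over the raw facts
            self.fact_lookups += 1
        self.conclusions[key] = current   # persist the derived conclusion
        return current


memory = ConclusionMemory({"Alice": "Bob", "Bob": "Carol", "Carol": "Dave"})
print(memory.follow_chain("Alice", 3), memory.fact_lookups)  # Dave 3
print(memory.follow_chain("Alice", 3), memory.fact_lookups)  # Dave 3
```

A pure RAG setup would re-retrieve and re-chain the three facts on every query; storing the conclusion turns the repeat into a single lookup.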

Implementing AI-native Memory

The proposed AI-native memory can take several forms, ranging from raw data storage to deep neural networks that compress and parameterize information beyond what lexical descriptions can express. These forms can be organized into layers of increasing sophistication:

- L0: Raw Data - Analogous to applying RAG directly to raw data; the authors treat this only as an initial step.

- L1: Natural-language Memory - Leveraging natural language for data organization and facilitating user interactions.

- L2: AI-Native Memory - A deep neural network that encodes comprehensive memory in its parameters, enabling personalized interaction and applications through Large Personal Models (LPMs).
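As a rough illustration of how the three layers might sit side by side, the Python sketch below keeps L0 raw records, L1 natural-language notes grouped by topic, and an optional L2 model behind a hypothetical `PersonalModel` interface. The rule of answering from the deepest layer that has relevant content is our assumption, not something the paper specifies, and the L2 model is left abstract because training one is out of scope here.

```python
from dataclasses import dataclass, field
from typing import Optional, Protocol


class PersonalModel(Protocol):
    """Hypothetical interface for an L2 memory model (an LPM)."""
    def respond(self, query: str) -> str: ...


@dataclass
class LayeredMemory:
    raw: list[str] = field(default_factory=list)               # L0: raw records
    notes: dict[str, list[str]] = field(default_factory=dict)  # L1: topic -> statements
    model: Optional[PersonalModel] = None                      # L2: parameterized memory

    def recall(self, topic: str) -> tuple[str, list[str]]:
        """Answer from the deepest layer that has something on `topic`."""
        if self.model is not None:
            return "L2", [self.model.respond(topic)]
        if topic in self.notes:
            return "L1", self.notes[topic]
        # L0 fallback: naive keyword match over raw records, RAG-style.
        return "L0", [r for r in self.raw if topic.lower() in r.lower()]


memory = LayeredMemory(raw=["2024-05-01 chat: I switched to oat milk.",
                            "2024-05-03 chat: Booked a flight to Lisbon."])
print(memory.recall("lisbon"))
# ('L0', ['2024-05-03 chat: Booked a flight to Lisbon.'])
memory.notes["travel"] = ["The user is planning a trip to Lisbon."]
print(memory.recall("travel"))
# ('L1', ['The user is planning a trip to Lisbon.'])
```

Moving from L0 to L1 to L2, the memory becomes more organized and less literal: raw logs, then curated statements, then knowledge folded into model weights.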

The goal of AI-native memory is to build a "Memory Palace" for each user: a system that organizes the user's data so it can be recalled seamlessly during interactions and AI tasks.

Figure 2: Reasoning-in-a-haystack Comparison based on Mebot's Real Data across different context lengths and hop counts.

Challenges and Future Directions

While AI-native memory systems hold promise, they also raise challenges: training efficiency, serving infrastructure requirements, and avoiding catastrophic forgetting. Balancing memory organization with security and privacy remains paramount, especially as memory models become personalized. Proposed solutions, such as using LoRA (low-rank adaptation) for efficient memory processing and deployment, address some of these challenges but require further exploration.
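For readers unfamiliar with LoRA: it freezes a pretrained weight matrix W of shape d × k and learns a low-rank update, so the adapted weights are W + (α/r)·BA, with B of shape d × r and A of shape r × k. Because only B and A are trained and stored, a per-user adapter is small, which is one plausible reason it suits personalized memory models. The plain-Python sketch below shows the merge and the parameter arithmetic; the 4096 × 4096 layer and rank 8 are illustrative choices, not values from the paper.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: each row of a dotted with each column of b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merge(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A for B of shape d x r and A of shape r x k."""
    r = len(a)              # rank = number of rows of A
    delta = matmul(b, a)    # low-rank update, shape d x k
    scale = alpha / r
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable parameters for a full d x k update vs. a rank-r LoRA adapter."""
    return d * k, r * (d + k)


print(lora_merge([[1, 0], [0, 1]], [[1], [2]], [[3, 4]], alpha=1.0))
# [[4.0, 4.0], [6.0, 9.0]]
print(param_counts(4096, 4096, 8))  # (16777216, 65536)
```

For a 4096 × 4096 layer at rank 8, the adapter holds 1/256 of the parameters of a full update, so many users' adapters could share one frozen base model.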

Conclusions

This paper delineates the limitations of long-context LLMs and underscores the necessity of integrating memory systems to advance toward AGI. By transforming data into structured, retrievable memory and coupling it with LPMs, AI systems can become more efficient and effective at complex, personalized tasks. The authors outline a clear pathway forward, emphasizing the critical role of advanced memory models in realizing the AGI vision.