- The paper introduces a retrieval-style in-context learning framework for few-shot hierarchical text classification (HTC) that combines a retrieval database with iterative, layer-by-layer label inference.

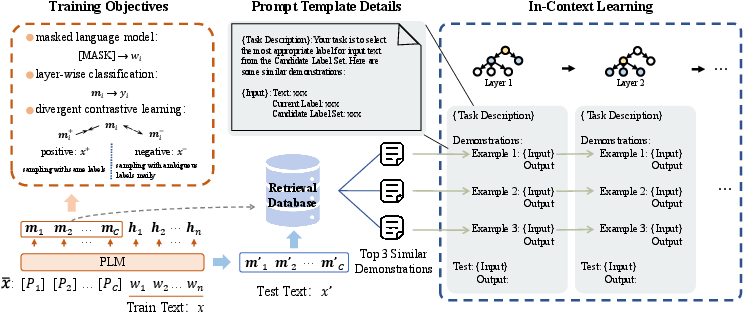

- It trains the retrieval indexer with three objectives (masked language modeling, layer-wise classification, and divergent contrastive learning) so that the resulting representations reflect the label hierarchy.

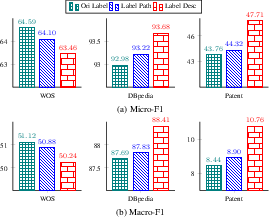

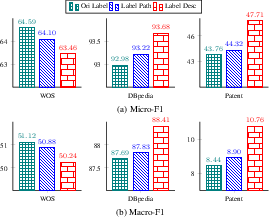

- Experiments on three datasets (Web-of-Science, DBpedia, and a private Chinese patent dataset) report higher Micro-F1 and Macro-F1 than existing HTC methods, particularly in few-shot settings.

Retrieval-style In-Context Learning for Few-shot Hierarchical Text Classification

Introduction

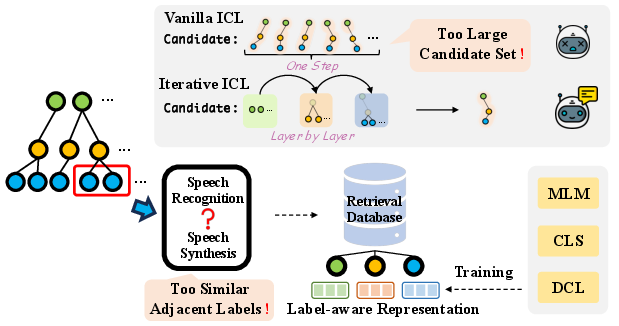

The paper addresses hierarchical text classification (HTC) in the few-shot setting and presents a retrieval-style in-context learning (ICL) framework built on large language models (LLMs). In HTC, labels are organized in a hierarchy that is often large and deeply layered. Standard ICL struggles here for two reasons: the label space is vast, and neighboring labels are semantically ambiguous. The framework uses a retrieval database and an iterative inference policy to mitigate both problems and improve few-shot performance.

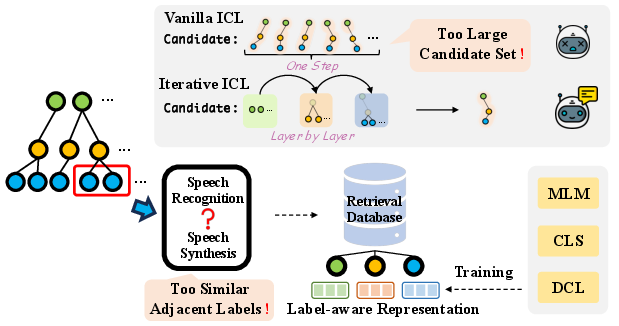

Figure 1: The problems of ICL-based few-shot HTC and our solutions. MLM, CLS and DCL denote Masked Language Modeling, Layer-wise CLaSsification and Divergent Contrastive Learning, which are the three objectives for indexer training.

Methodology

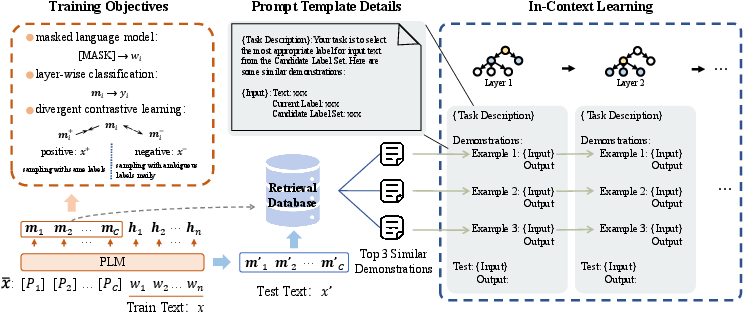

The method has two main components. First, it builds a retrieval database of HTC label-aware representations. These representations come from an indexer obtained by continual training of a pretrained language model with three objectives: masked language modeling (MLM), layer-wise classification (CLS), and divergent contrastive learning (DCL). DCL specifically targets adjacent labels that are semantically similar and therefore easy to confuse. Second, inference follows an iterative policy that predicts labels layer by layer, so the model is never asked to choose among the labels of a deep hierarchy all at once.
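This summary does not reproduce the paper's exact DCL formulation, so the following is only a generic InfoNCE-style contrastive loss, used as a stand-in to illustrate why semantically close labels matter. The function name `info_nce`, the `temperature` value, and the toy vectors are all assumptions made for illustration.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def info_nce(anchor: Vector, positive: Vector,
             negatives: List[Vector], temperature: float = 0.1) -> float:
    """Generic InfoNCE loss (NOT the paper's DCL): low when the anchor is
    closer to the positive than to every negative."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Toy vectors (hypothetical): a sample, another sample with the same label,
# a sample from a similar sibling label, and one from an unrelated label.
anchor = [1.0, 0.0]
same_label = [0.9, 0.1]
sibling_label = [0.8, 0.3]
unrelated_label = [0.0, 1.0]

loss_hard = info_nce(anchor, same_label, [sibling_label])
loss_easy = info_nce(anchor, same_label, [unrelated_label])
```

In this toy setup the sibling negative yields the larger loss, which is the general reason a contrastive objective puts most of its pressure on adjacent, similar labels.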

Figure 2: The architecture of retrieval-style in-context learning for HTC. The [P_j] term is a soft prompt template token to learn the j-th hierarchical layer label index representation.
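The retrieval step and the layer-by-layer inference policy described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the per-layer index vectors are given as plain lists rather than learned from [P_j] tokens, the hierarchy is a hypothetical `children` dictionary, and `nearest_label` stands in for the LLM call that would read the retrieved demonstrations.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]
# One database entry: (index vector, demonstration text, label at this layer)
Entry = Tuple[Vector, str, str]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: Vector, entries: List[Entry], k: int) -> List[Entry]:
    """Return the k entries whose index vectors are most similar to the query."""
    return sorted(entries, key=lambda e: cosine(query, e[0]), reverse=True)[:k]


def iterative_inference(layer_queries: List[Vector],
                        layer_dbs: List[List[Entry]],
                        children: Dict[str, List[str]],
                        predict: Callable[[List[Entry], List[str]], str],
                        k: int = 2, root: str = "ROOT") -> List[str]:
    """Predict one label per layer, restricting each layer to the children
    of the label chosen at the previous layer."""
    path: List[str] = []
    parent = root
    for query, db in zip(layer_queries, layer_dbs):
        allowed = children.get(parent, [])
        if not allowed:  # reached a leaf before running out of layers
            break
        candidates = [e for e in db if e[2] in allowed]
        demos = retrieve(query, candidates, k)
        parent = predict(demos, allowed)
        path.append(parent)
    return path


def nearest_label(demos: List[Entry], allowed: List[str]) -> str:
    """Stand-in for the LLM: return the label of the top-ranked demonstration."""
    return demos[0][2] if demos else allowed[0]


# Hypothetical two-layer hierarchy and toy databases.
children = {"ROOT": ["CS", "Medicine"], "CS": ["ML", "Networks"],
            "Medicine": ["Cardiology"]}
layer_dbs = [
    [([1.0, 0.0], "demo a", "CS"), ([0.0, 1.0], "demo b", "Medicine")],
    [([1.0, 0.1], "demo c", "ML"), ([0.1, 1.0], "demo d", "Networks"),
     ([1.0, 0.0], "demo e", "Cardiology")],
]
layer_queries = [[0.9, 0.1], [0.9, 0.2]]
path = iterative_inference(layer_queries, layer_dbs, children, nearest_label)
```

Filtering candidates by the parent's children keeps each prompt small: at the second layer the `Cardiology` demonstration is never retrieved, even though its vector is close to the query, because the first layer already chose `CS`.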

Experiments

The framework was evaluated on three datasets: Web-of-Science (WOS), DBpedia, and a private Chinese patent dataset. Performance was measured with Micro-F1 and Macro-F1, and the framework outperformed existing HTC methods, especially in few-shot settings. The experiments also indicate that it is robust across different data distributions and reaches state-of-the-art results on hierarchies with large label sets.
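For readers unfamiliar with the two metrics, here is a minimal sketch of how Micro-F1 and Macro-F1 differ when each document carries a set of labels (one per hierarchy layer). The function names and the toy gold and predicted label sets are illustrative assumptions, not data from the paper.

```python
from typing import Dict, List, Set, Tuple


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 from raw counts; defined as 0.0 when there is nothing to count."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(gold: List[Set[str]],
                   pred: List[Set[str]]) -> Tuple[float, float]:
    """Micro-F1 pools counts over all labels; Macro-F1 averages per-label F1."""
    labels = sorted(set().union(*gold, *pred))
    counts: Dict[str, List[int]] = {label: [0, 0, 0] for label in labels}
    for g, p in zip(gold, pred):
        for label in p & g:
            counts[label][0] += 1  # true positive
        for label in p - g:
            counts[label][1] += 1  # false positive
        for label in g - p:
            counts[label][2] += 1  # false negative
    micro = f1(sum(c[0] for c in counts.values()),
               sum(c[1] for c in counts.values()),
               sum(c[2] for c in counts.values()))
    macro = (sum(f1(*c) for c in counts.values()) / len(counts)
             if counts else 0.0)
    return micro, macro


# Toy example (hypothetical labels): the second document's leaf is wrong.
gold = [{"CS", "ML"}, {"CS", "Networks"}, {"Medicine", "Cardiology"}]
pred = [{"CS", "ML"}, {"CS", "ML"}, {"Medicine", "Cardiology"}]
micro, macro = micro_macro_f1(gold, pred)
```

Because Macro-F1 weights every label equally, a single missed rare label (`Networks` here) lowers it more than it lowers Micro-F1, which is why both are usually reported for hierarchies with many infrequent labels.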

Figure 3: Results of different label text types in the 1-shot setting. Ori Label means the original leaf label text, Label Path means all text on the label path, and Label Desc means the label description text generated by the LLM.

Analysis

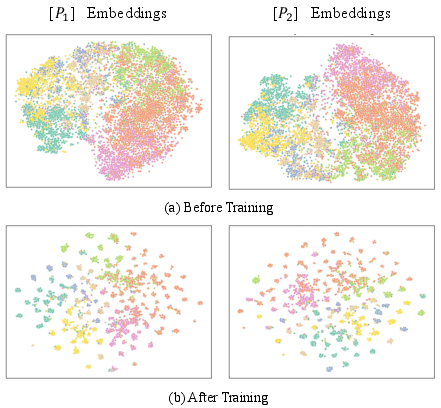

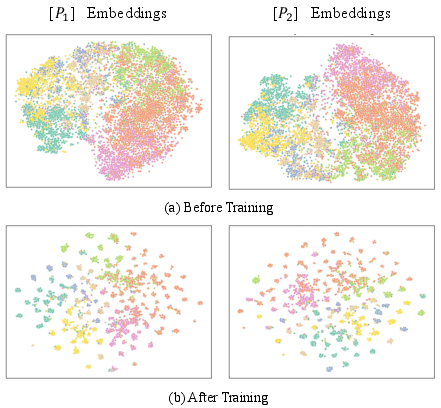

The analysis examines how hierarchical label structure and the retrieval strategy each contribute to ICL performance. In a human annotation study, retrieval-assisted annotation improved Micro-F1 substantially while reducing annotation time, which points to practical use beyond automatic classification. Visualization of the index vectors shows that they are much more clearly separated after training, indicating improved representation learning.

Figure 4: Visualization on the WOS test dataset. The top two figures show [P] embeddings obtained using the original BERT, while the bottom two figures show [P] embeddings obtained after training by our method.

Conclusion

The paper advances few-shot HTC through retrieval-style ICL. Combining a retrieval database with an iterative inference policy improves model performance and helps with the semantic ambiguity and large label space inherent to HTC. The framework does not resolve every challenge: further research could optimize LLM text expansion and decoding mechanisms to further enrich the retrieval database. The work also motivates future study of how retrieval and generative strategies can be combined in hierarchical modeling.