- The paper demonstrates that even the best LLM judges deviate by up to 5 points from human scores, exposing significant alignment gaps.

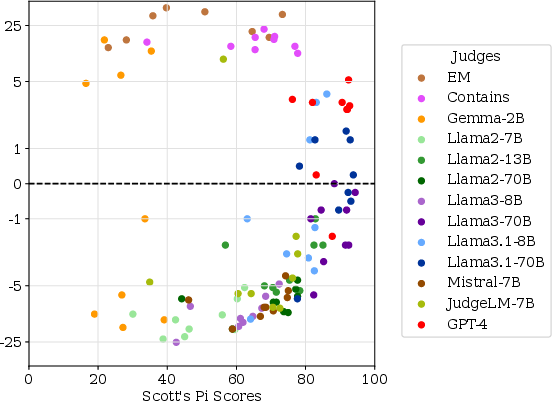

- It reveals that Scott’s Pi differentiates judge models more reliably than percent agreement does.

- The study uncovers biases such as leniency and sensitivity to answer formats, emphasizing the need for careful metric selection.

"Judging the Judges: Evaluating Alignment and Vulnerabilities in LLMs-as-Judges"

Introduction

The paper "Judging the Judges: Evaluating Alignment and Vulnerabilities in LLMs-as-Judges" (2406.12624) assesses the potential of using LLMs as judges to evaluate LLM outputs, given the increasing complexity and diversity of LLM capabilities. It addresses the limitations of human evaluations, which are often impractical due to cost and scalability issues, and investigates whether LLMs can be reliable substitutes, particularly focusing on their alignment with human judgments and potential vulnerabilities.

Methodology

The authors conduct an empirical study using thirteen judge models of varying sizes and architectures to evaluate the outputs of nine exam-taker models. The exam-taker models fall into two categories by training type: base and instruction-tuned. The evaluation uses the TriviaQA benchmark, a large dataset of question-answer pairs. The paper measures the alignment of LLM judgments with human evaluations using two metrics: percent agreement and Scott’s Pi. The latter corrects for agreement that would be expected by chance, which makes it the more robust of the two.
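To make the difference between the two metrics concrete, here is a minimal sketch of both, assuming binary correct/incorrect labels from a human annotator and a judge. The function names and the toy labels are illustrative assumptions, not taken from the paper. Scott's Pi is computed with its standard definition, (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the chance agreement derived from the pooled label proportions of both raters.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def percent_agreement(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Fraction of items on which the two raters give the same label."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("label sequences must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def scotts_pi(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Scott's Pi: (p_o - p_e) / (1 - p_e), with p_e from pooled label proportions."""
    p_o = percent_agreement(a, b)
    pooled = Counter(a) + Counter(b)  # label counts over both raters together
    total = 2 * len(a)
    p_e = sum((count / total) ** 2 for count in pooled.values())
    if math.isclose(p_e, 1.0):
        # Both raters used a single identical label; the statistic is undefined.
        return math.nan
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical toy data: 1 = "correct", 0 = "incorrect".
human = [1] * 90 + [0] * 10
lenient_judge = [1] * 100  # a judge that accepts every answer

print("percent agreement:", percent_agreement(human, lenient_judge))
print("Scott's Pi:", scotts_pi(human, lenient_judge))
```

In this toy case the judge that accepts everything still reaches 0.90 percent agreement, because most answers are correct anyway, while its Scott's Pi is slightly negative (about -0.05). This is the kind of separation a chance-corrected metric provides.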

In their setup, the exam-takers’ responses are judged on correctness, considering semantic equivalence with reference answers. Various judge models, including well-known architectures such as GPT-4 and Llama-3, are assessed for their ability to mirror human-like evaluation behaviors.

Key Findings

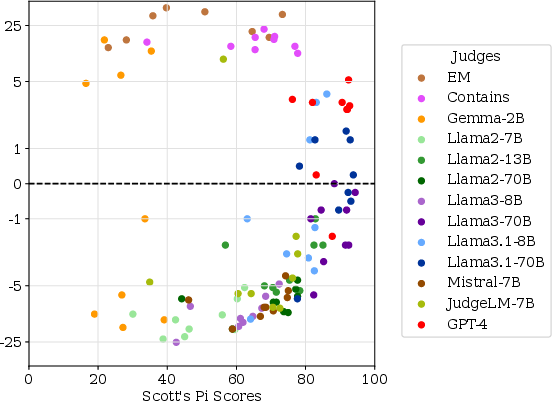

- Alignment with Human Judgments: The paper finds that even the best LLM judge models (such as GPT-4 and Llama-3.1 70B) fall short of human alignment, leaving a significant gap. The scores these judges assign can deviate by up to 5 points from human-assigned scores, which points to the risk of adopting LLM evaluations uncritically.

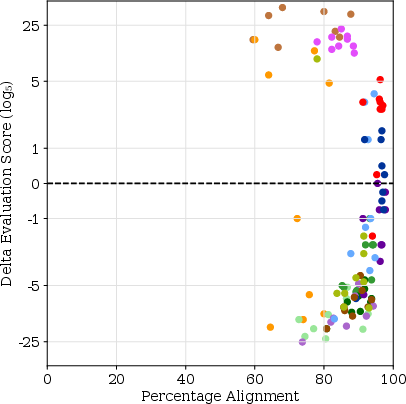

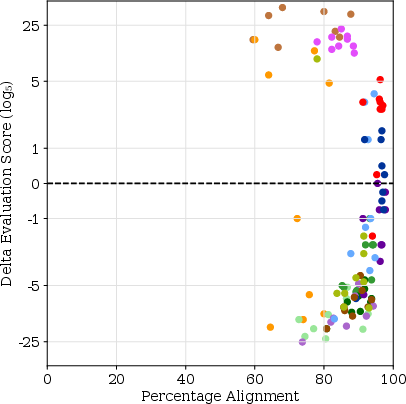

Figure 1: Difference with human evaluation scores versus alignment metric. The delta evaluation score is the difference between the judge and the human score.

- Metrics Comparison: Percent agreement is often high across all judge models, so it separates them less clearly than Scott's Pi does. The authors argue for using Scott's Pi, which shows the differences in how well models align with human judgments more distinctly.

- Evaluating Judgment Consistency: Judge models vary in how consistent their verdicts are when the same input is presented with its reference list shuffled, which indicates that their judgments are sensitive to reference order.

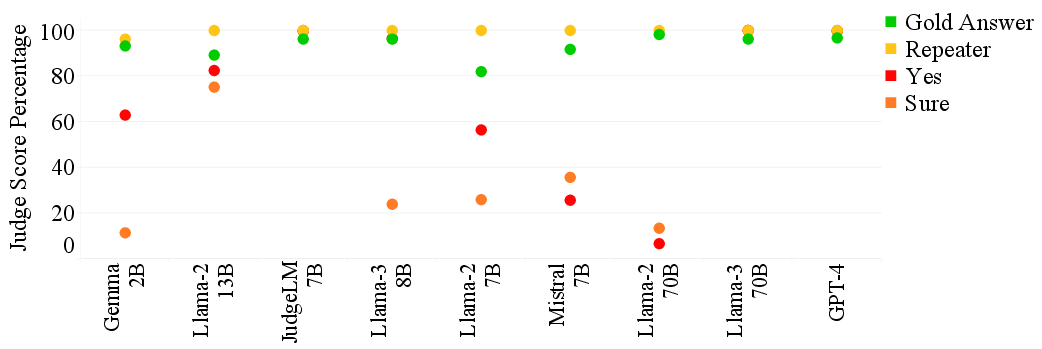

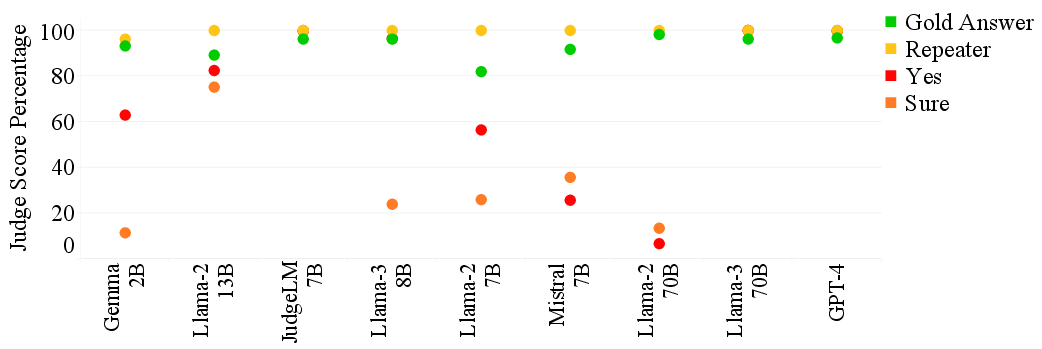

- Sensitivity to Answer Formats: Judge models are vulnerable to specific types of incorrect responses, particularly responses that contain plausible-seeming but incorrect entities. They are largely robust to dummy responses that simply repeat the question or give a generic confirmation such as “Yes” or “Sure,” although not uniformly: some judges still fail to mark these responses as incorrect.

Figure 2: Judge responses to dummy answers.

- Leniency and Bias: Smaller models, such as Mistral 7B, tend to be more lenient, judging ambiguous cases as correct more often than larger models do. The estimated leniency bias (P+) suggests that judges favor positive verdicts when they are unsure or when the task is under-specified.
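Two of the findings above, sensitivity to reference order and leniency, can be probed with simple checks. The sketch below is illustrative only and is not the paper's procedure. In particular, it does not implement the paper's P+ estimator; it uses the false-positive rate against human labels as a rough proxy for leniency. The `Judge` callable signature, the toy judges, and all names are assumptions introduced for the example.

```python
import math
import random
from typing import Callable, Sequence, Tuple

# Assumed interface: a judge takes (question, answer, references) and returns a verdict.
Judge = Callable[[str, str, Sequence[str]], bool]


def shuffle_consistency(judge: Judge, question: str, answer: str,
                        references: Sequence[str],
                        n_shuffles: int = 5, seed: int = 0) -> float:
    """Share of shuffled-reference runs that match the majority verdict."""
    rng = random.Random(seed)
    verdicts = []
    for _ in range(n_shuffles):
        refs = list(references)
        rng.shuffle(refs)  # same references, different order
        verdicts.append(bool(judge(question, answer, refs)))
    majority = 2 * sum(verdicts) >= len(verdicts)
    return sum(v == majority for v in verdicts) / len(verdicts)


def error_rates(judge_labels: Sequence[bool],
                human_labels: Sequence[bool]) -> Tuple[float, float]:
    """Return (false-positive rate, false-negative rate) relative to human labels."""
    pairs = [(bool(j), bool(h)) for j, h in zip(judge_labels, human_labels)]
    negatives = sum(not h for _, h in pairs)
    positives = sum(h for _, h in pairs)
    false_pos = sum(j and not h for j, h in pairs)
    false_neg = sum(h and not j for j, h in pairs)
    fpr = false_pos / negatives if negatives else math.nan
    fnr = false_neg / positives if positives else math.nan
    return fpr, fnr


# Hypothetical toy judges: one checks every reference, one only the first.
def any_ref_judge(question: str, answer: str, refs: Sequence[str]) -> bool:
    return any(answer.lower() == r.lower() for r in refs)


def first_ref_judge(question: str, answer: str, refs: Sequence[str]) -> bool:
    return answer.lower() == refs[0].lower()


refs = ["Paris", "City of Paris", "Paris, France"]
print(shuffle_consistency(any_ref_judge, "Capital of France?", "paris", refs))
print(shuffle_consistency(first_ref_judge, "Capital of France?", "paris", refs))
print(error_rates([True, True, True, False], [True, False, False, False]))
```

A judge whose false-positive rate is well above its false-negative rate errs toward accepting answers, which is the pattern the leniency finding describes. A consistency score below 1.0 indicates verdicts that change with reference order alone.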

Implications and Future Directions

The paper provides critical insights into the reliability and limitations of LLMs as judges. The findings emphasize the necessity for robust evaluation metrics like Scott's Pi over simpler metrics such as percent agreement to accurately quantify alignment with human judgment. The paper's results highlight the potential of LLMs to supplement human judgment, especially where resources are constrained, but also strongly advise caution due to their nuanced biases and tendency towards leniency.

Future research directions include refining LLM evaluation protocols and extending the analysis beyond trivia-style Q&A to more complex tasks, including the evaluation of creative and open-ended content. Further work on the cognitive and algorithmic modeling of judgment is also needed, both to anticipate biases and to bring LLM assessments closer to human expectations.

Conclusion

This paper makes a significant contribution to the domain of LLM evaluation by systematically analyzing more than a dozen models and setting a foundation for future frameworks that aim to incorporate AI models as evaluators. The detailed insights into model biases and alignment gaps provide a valuable reference for practitioners aiming to integrate LLM judges in practical settings, reinforcing the need for careful metric selection and model tuning to achieve dependable outcomes.