- The paper reports that LLM-guided self-reflection was associated with modest, not statistically significant gains in exam scores and with a significant increase in students' self-confidence.

- Methodologically, the study compares LLM-facilitated self-reflection with traditional methods using randomized controlled experiments in college courses.

- The research implies that personalized, real-time LLM interactions offer scalable reflective learning opportunities, warranting further investigation.

Supporting Self-Reflection at Scale with LLMs: Insights from Randomized Field Experiments in Classrooms

The paper "Supporting Self-Reflection at Scale with LLMs: Insights from Randomized Field Experiments in Classrooms" focuses on the integration of LLMs to facilitate self-reflection in educational contexts. The research is centered around two randomized field experiments in undergraduate computer science courses that evaluate the efficacy of LLM-guided reflection activities, as compared to traditional self-reflection methods.

Introduction

Self-reflection is a pivotal process in education, contributing significantly to knowledge consolidation and more effective learning. The difficulty of providing personalized, immediate feedback has been a limiting factor in current educational practice. Traditional methods, which are time-constrained and non-personalized, often fall short of realizing the full potential of reflective learning activities.

The emergence of LLMs such as GPT-4 provides a novel approach to address these limitations. These models, with their capacity for nuanced dialogue in real time, present an opportunity to facilitate personalized reflective learning experiences at scale. However, empirical research evaluating the role of LLMs in promoting self-reflection within educational settings remains limited.

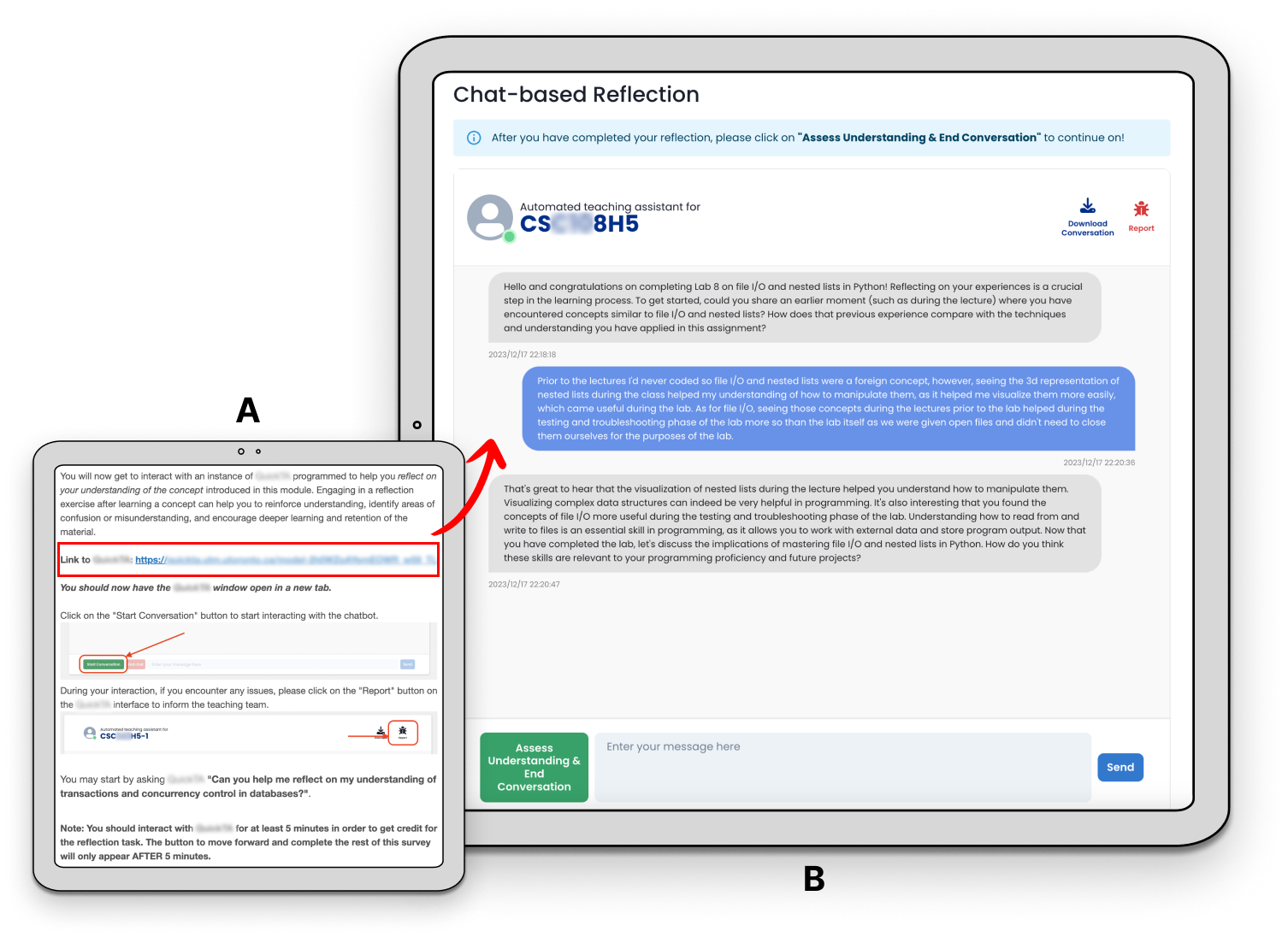

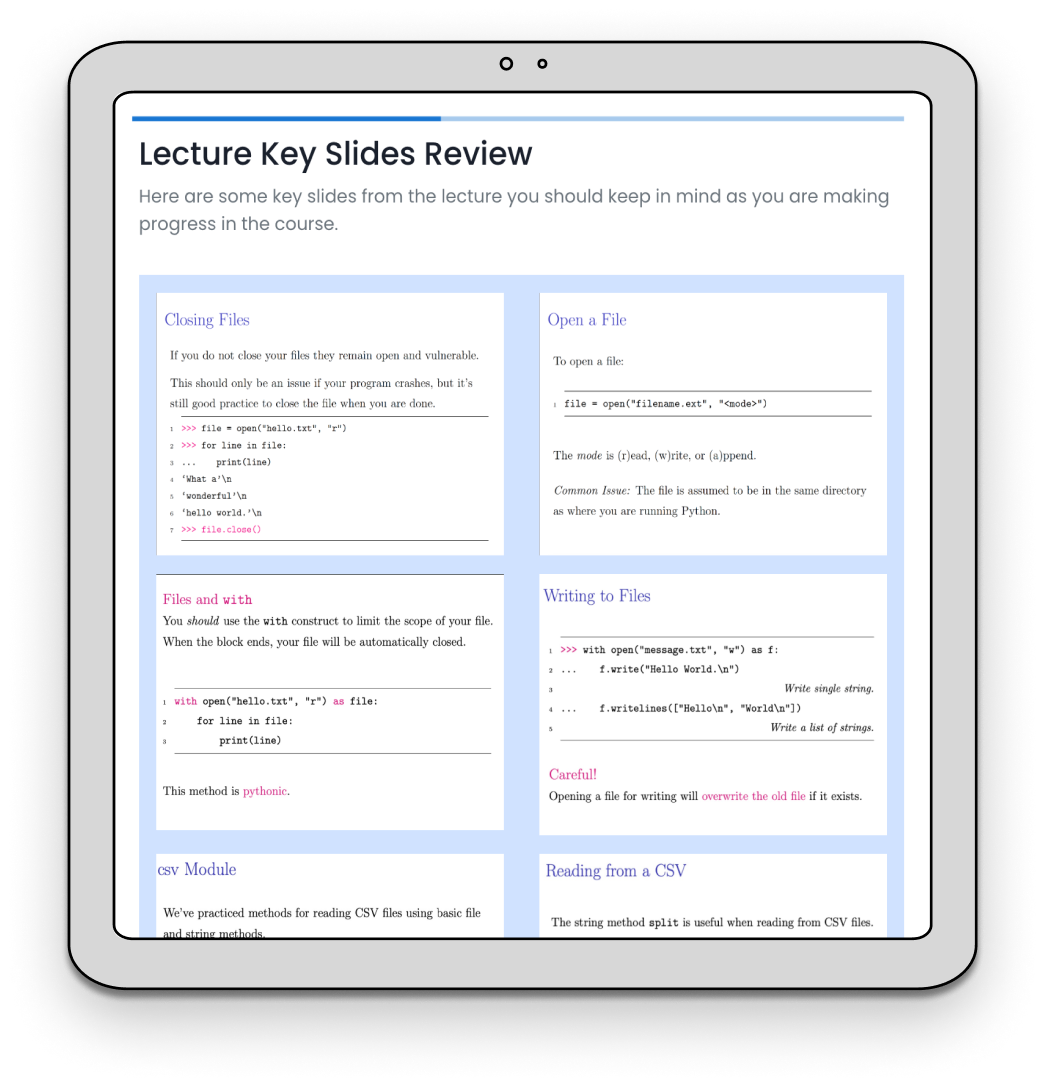

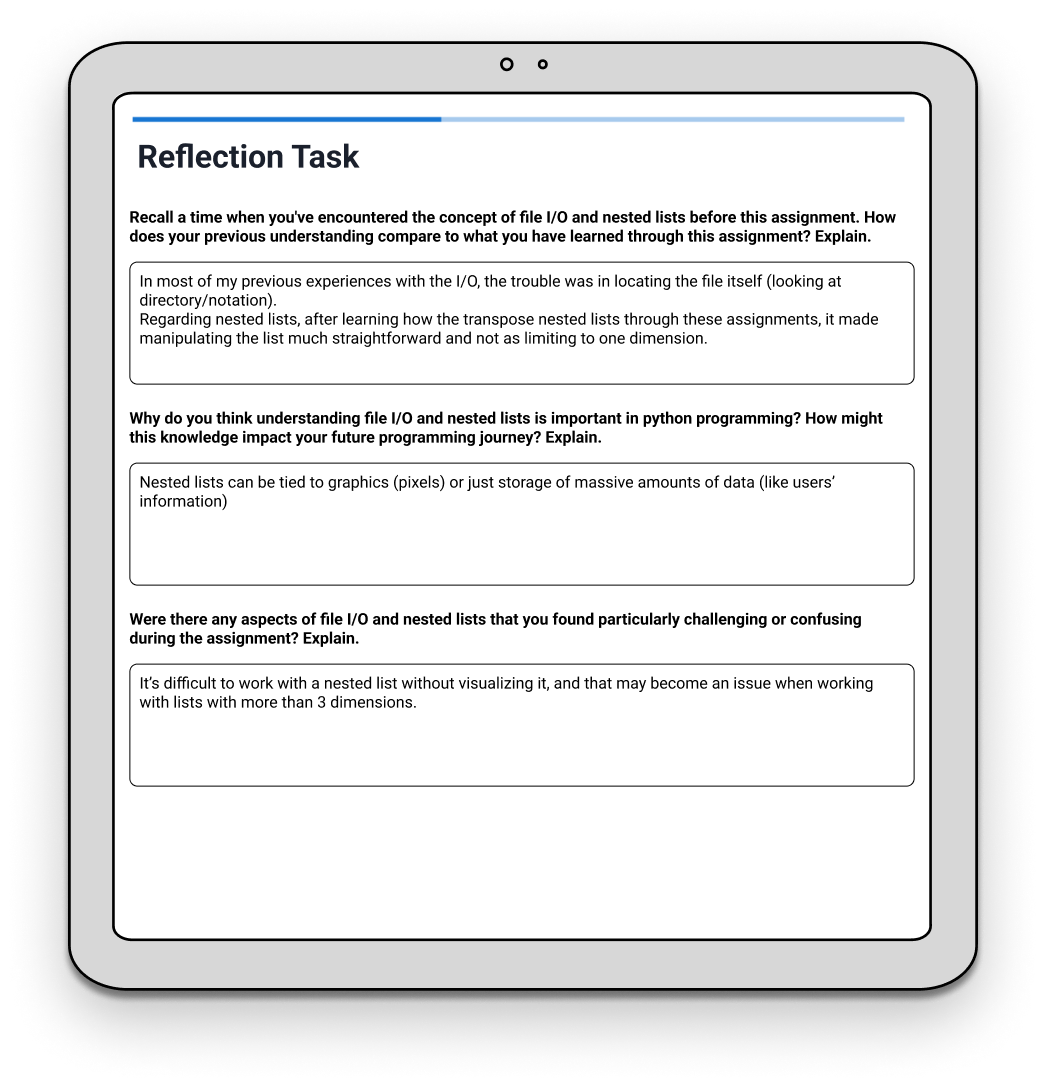

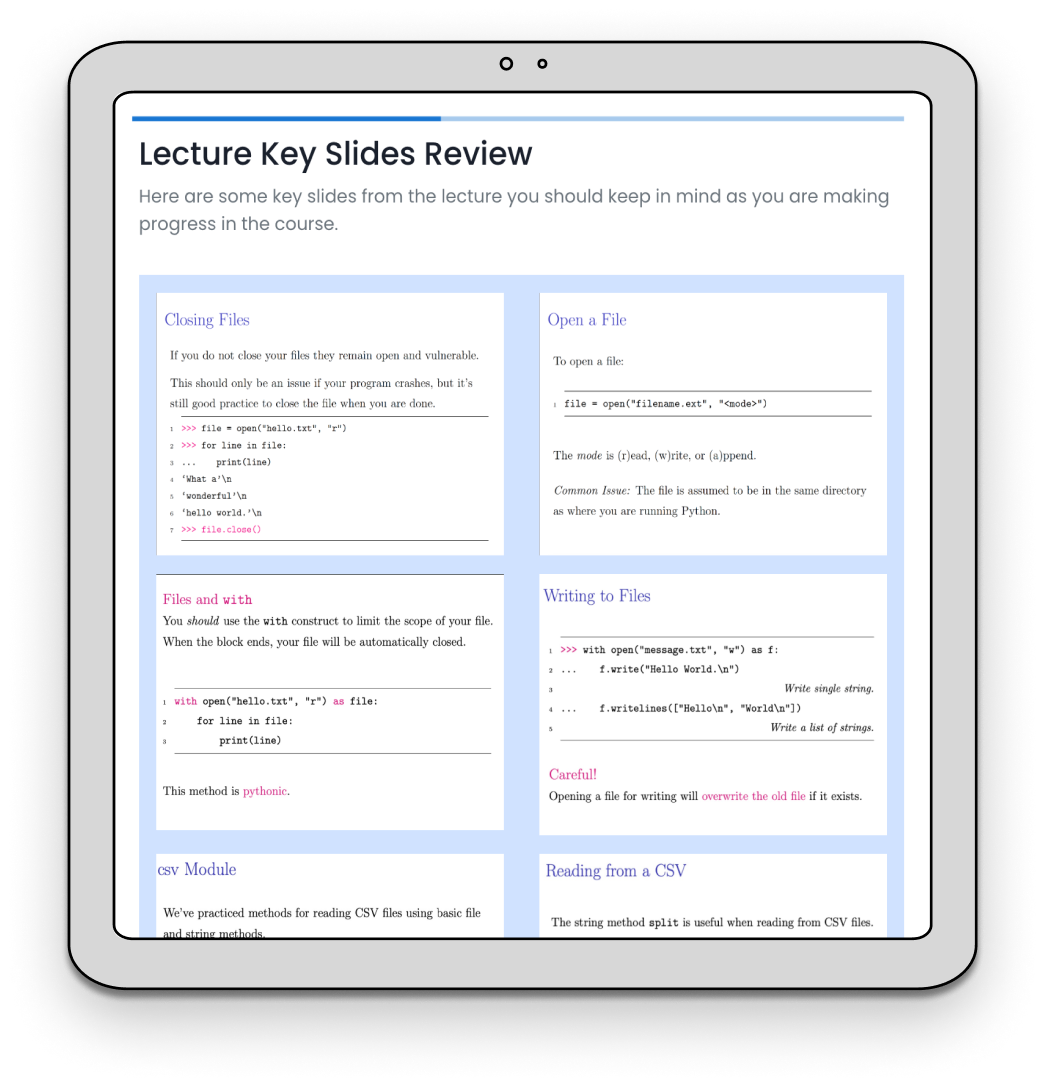

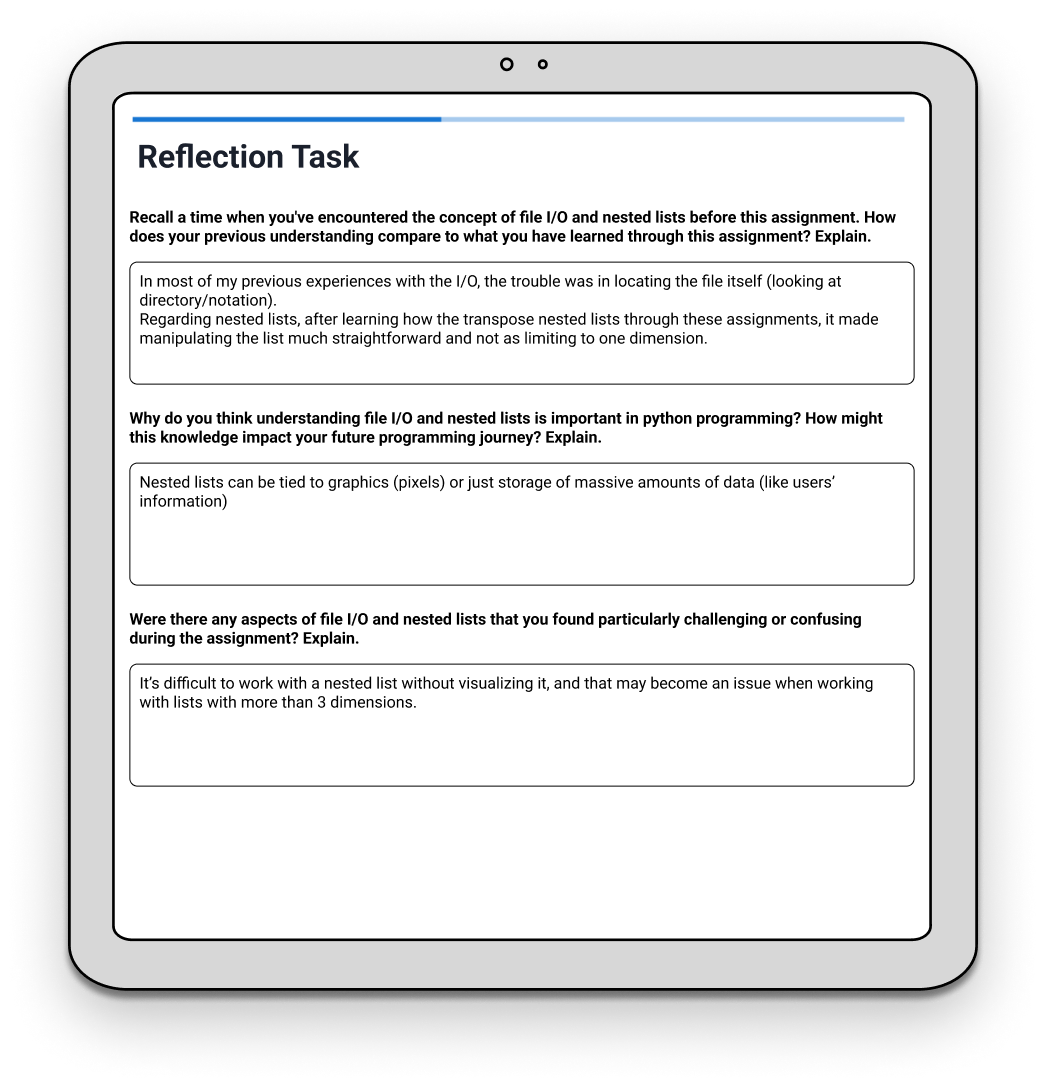

Figure 1: Stimuli for Study-1. A) Half of the students got a link to the reflection bot after completing their assignment. B) Example chat window for reflection.

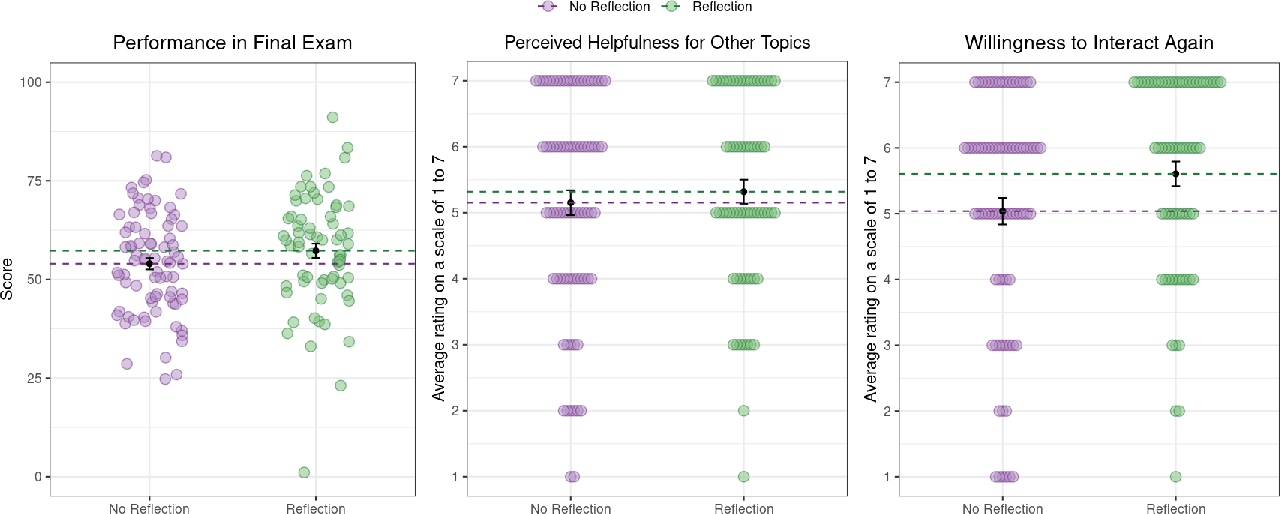

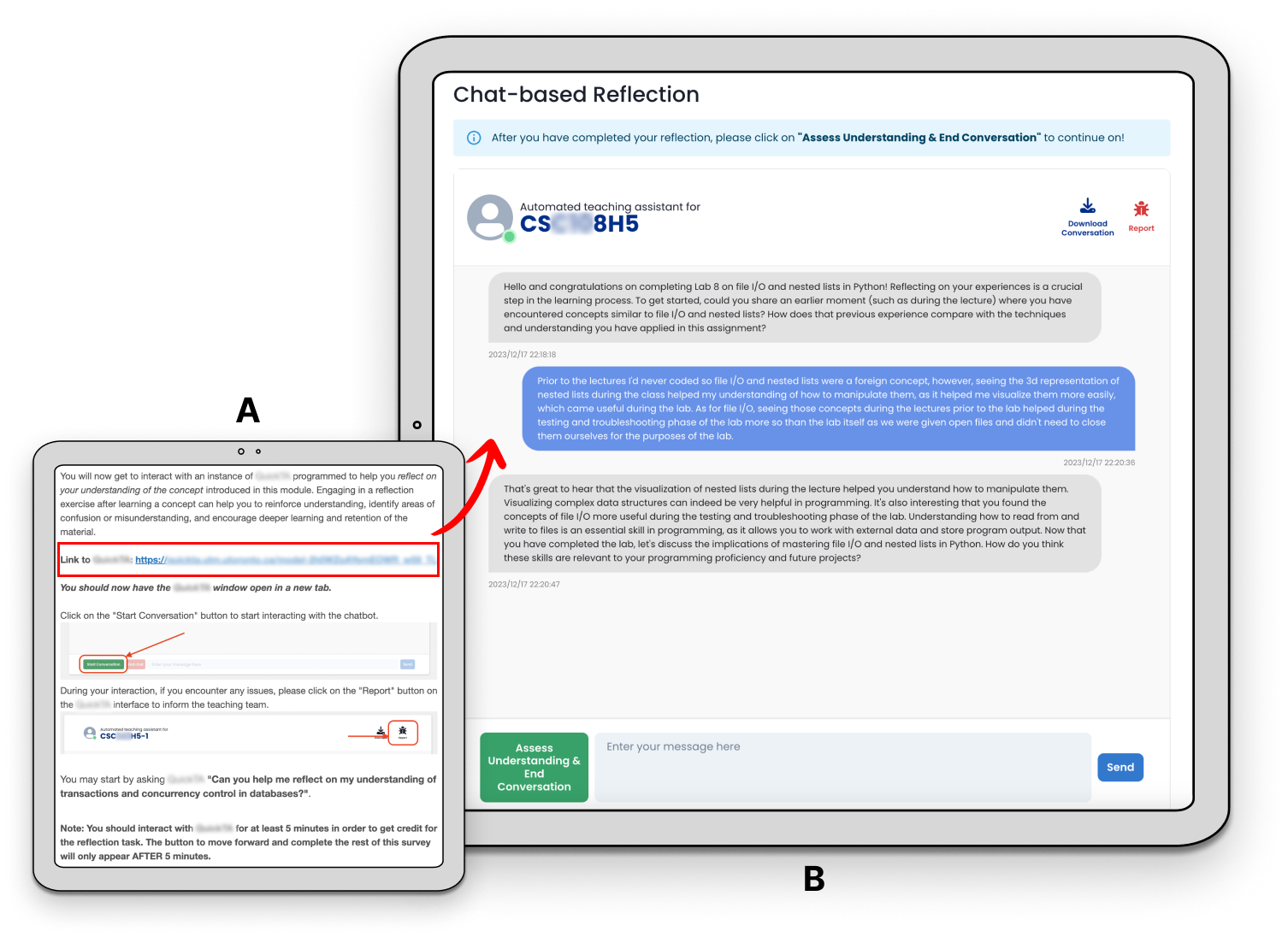

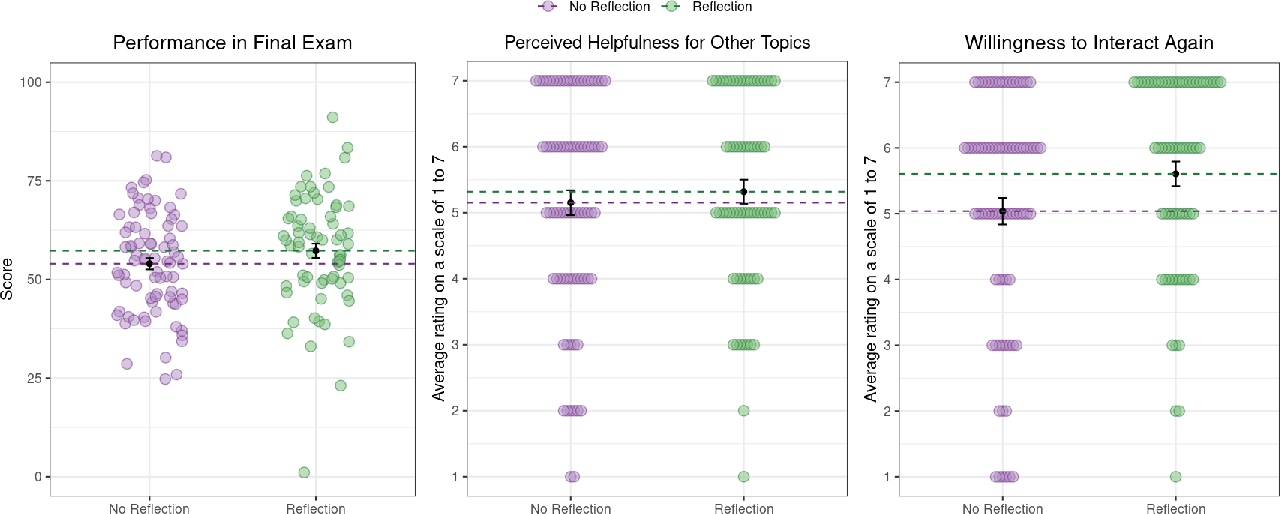

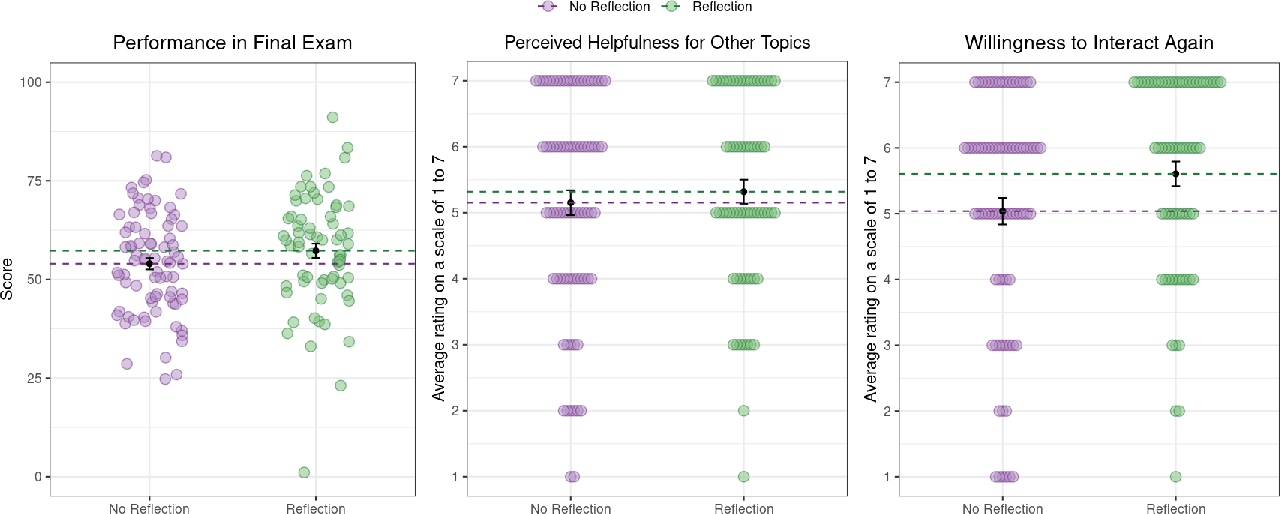

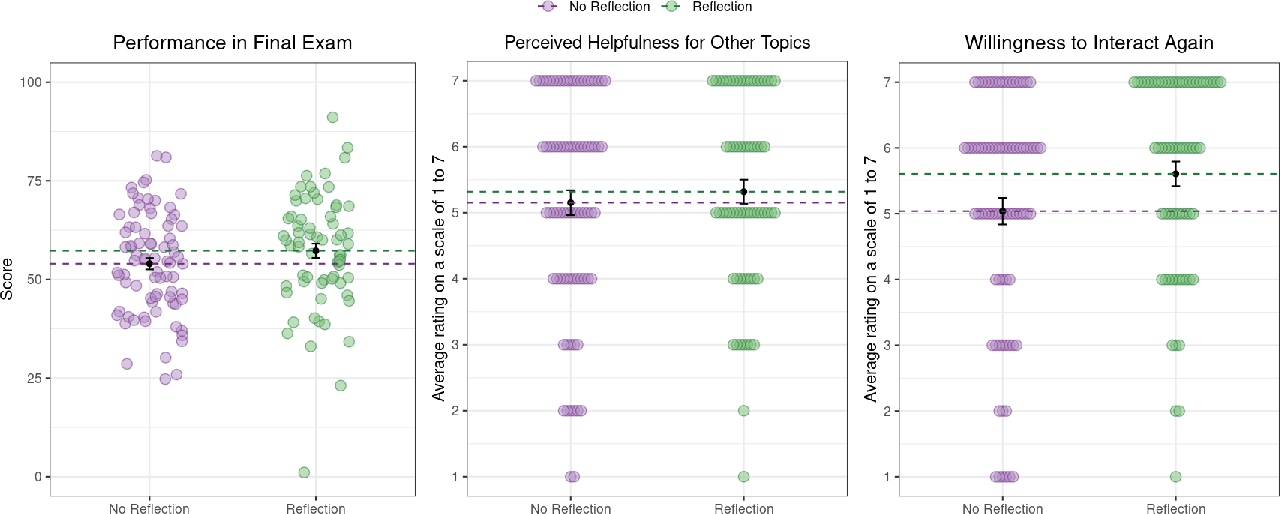

Figure 2: Comparative Analysis of Student Outcomes in Reflection vs. No-Reflection Conditions for Study 1. The left panel presents the mean final exam scores obtained two weeks post-assignment, indicating higher performance among students in the reflection group. The center panel assesses the perceived helpfulness of the LLM-tutor for other topics, as influenced by the assigned condition. Finally, the right panel evaluates the willingness of the students to interact again with the LLM tutor, highlighting a greater inclination among those in the reflection group to seek further interaction. Error bars represent standard errors.

Methodology

The first study aimed to establish the impact of LLM-guided reflection compared with no reflection activity. It was conducted in an undergraduate database course at a Canadian research institution and involved 145 students who completed a take-home assignment facilitated by a GPT-3-based LLM (Figure 1). After the assignment, one group interacted with a reflection bot while the control group had no such opportunity.

Performance and Learning Outcomes: The results suggest that LLM-guided reflection may improve scores on a subsequent exam held two weeks later. Students who engaged in LLM-guided reflection scored slightly higher (Mean=57.27) than the control group with no reflection (Mean=53.99) (Figure 2). The difference did not reach statistical significance, but the trend may warrant further investigation with larger samples.


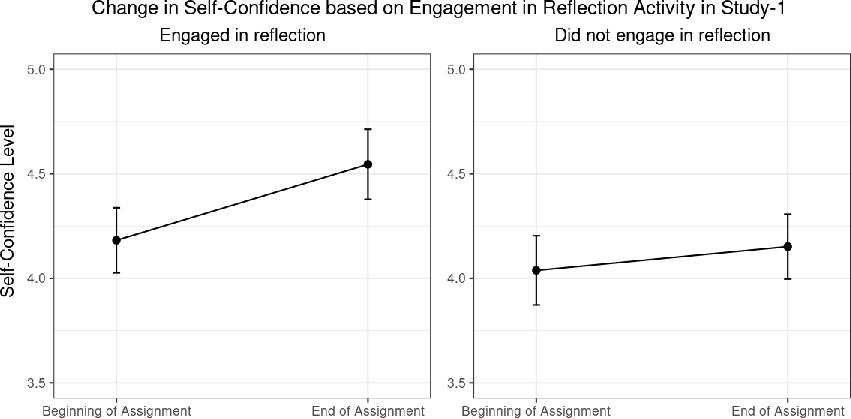

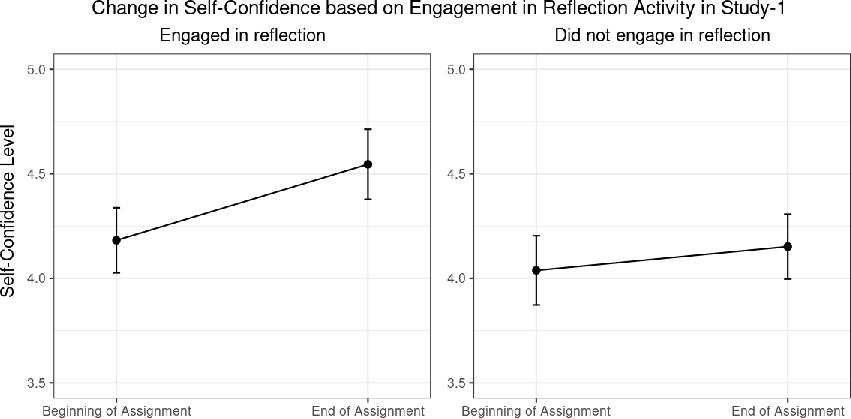

Self-Confidence: Students engaging in reflection with an LLM tutor exhibited increased self-confidence (Figure 3). The treatment group's self-confidence improved significantly from Mean=4.18 to Mean=4.56 (t = −2.03, df = 65, p = 0.046).
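As a rough consistency check on the reported statistic, the two-sided p-value can be recomputed from the t value and degrees of freedom alone. The sketch below is not the authors' analysis code: it uses only the Python standard library, integrates the Student's t density numerically with Simpson's rule, and the function names `t_pdf` and `two_sided_p` are illustrative. It assumes the reported test is two-sided.

```python
import math


def t_pdf(x: float, df: float) -> float:
    """Density of Student's t distribution with `df` degrees of freedom."""
    log_norm = (
        math.lgamma((df + 1) / 2)
        - math.lgamma(df / 2)
        - 0.5 * math.log(df * math.pi)
    )
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t_stat: float, df: float, steps: int = 2000) -> float:
    """Two-sided p-value, using Simpson's rule on [0, |t|] (steps must be even)."""
    upper = abs(t_stat)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_mass = total * h / 3  # P(0 <= T <= |t|)
    return 1.0 - 2.0 * central_mass


# Values taken from the text: t = -2.03, df = 65.
p_value = two_sided_p(-2.03, 65)
print(f"two-sided p = {p_value:.3f}")
```

Under these assumptions the result should land close to the reported p = 0.046, just under the conventional 0.05 threshold.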


Figure 3: Change in students' self-confidence from the beginning to the end of the assignment based on their engagement in reflection activities. Error bars represent standard error.

Subjective Assessment: Students rated the LLM tutor's helpfulness and their willingness to interact with it again in the future. Those in the reflection group expressed a higher willingness to interact again (W = 3097.00, p = 0.046) (Figure 2).


Qualitative analysis highlighted four main themes in LLM-student interactions: constant positive feedback, expansion on students' responses, adapting its questions to students' needs, and instant feedback.

Study-2 Methodology and Findings

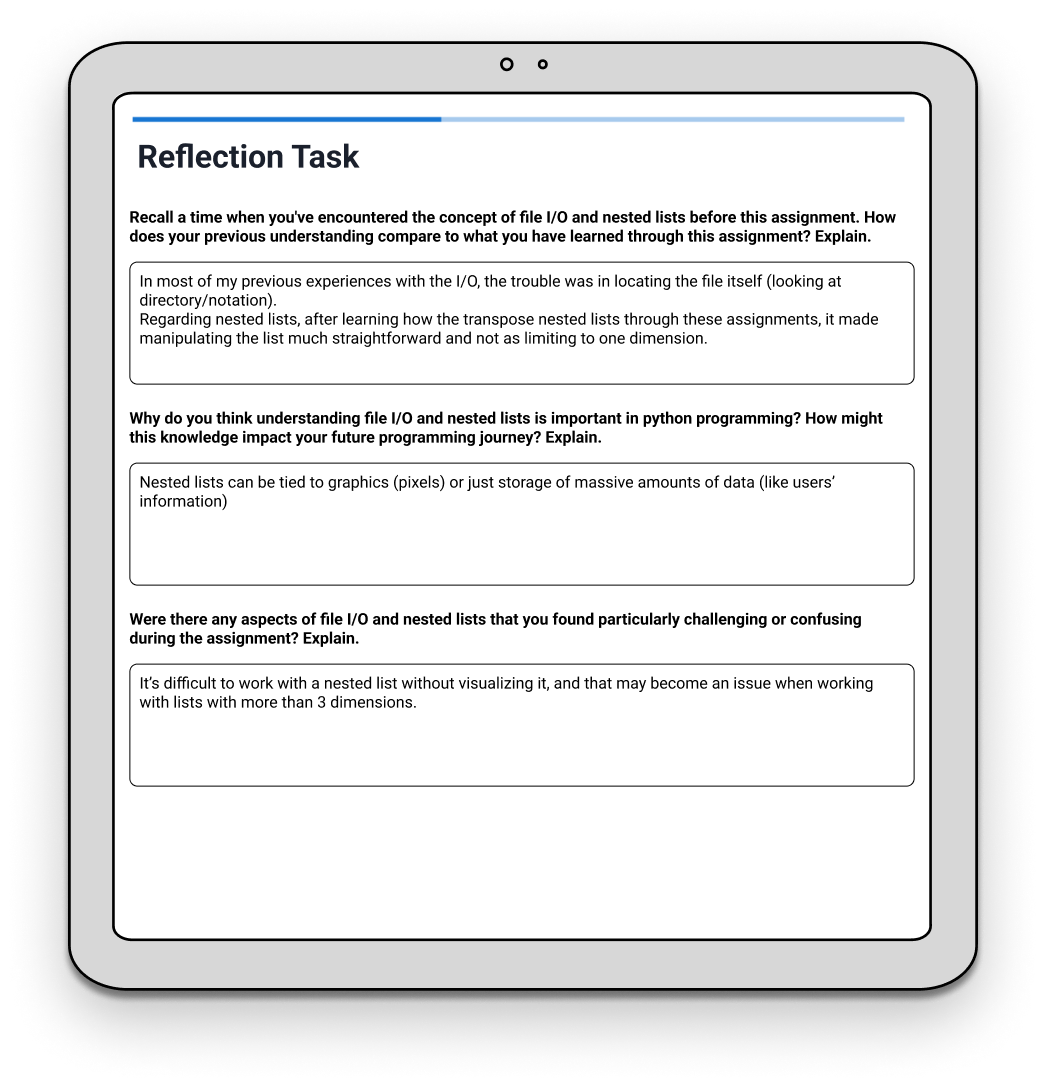

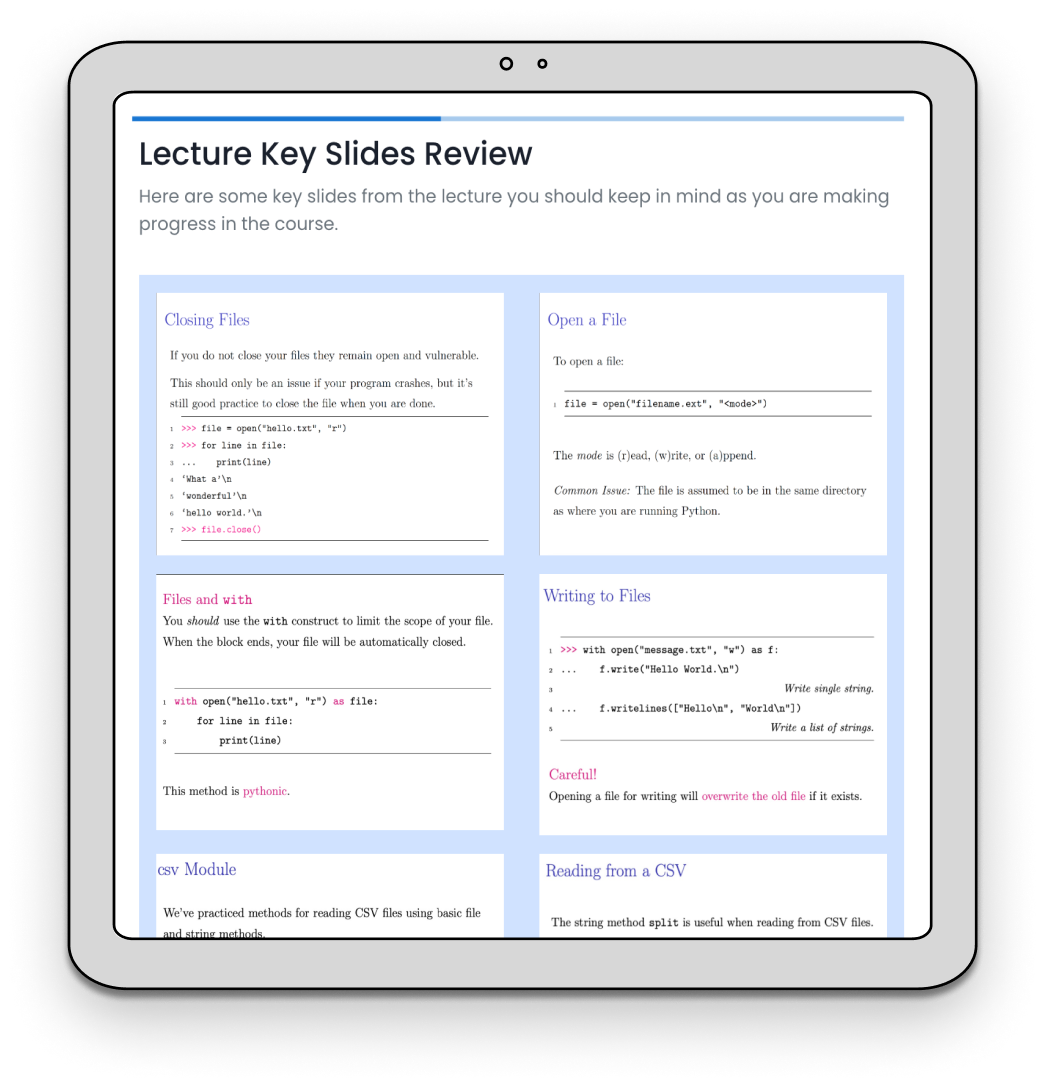

To further investigate RQ2, the second study was conducted in a first-year undergraduate computer programming course and comprised three experimental conditions: questionnaire-based reflection (Condition 1), LLM-based reflection (Condition 2), and revision of key lecture slides (Condition 3).
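The summary does not describe how students were allocated to the three conditions. As an illustration only, a balanced random assignment could look like the sketch below; the condition labels, the `assign_conditions` helper, the fixed seed, and the 12-student roster are all hypothetical and not taken from the paper.

```python
import random
from collections import Counter

# Hypothetical labels for the three Study-2 conditions.
CONDITIONS = ("questionnaire_reflection", "llm_reflection", "slide_revision")


def assign_conditions(student_ids, seed=0):
    """Shuffle the roster, then deal students to conditions round-robin.

    This keeps group sizes within one student of each other.
    """
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    return {
        sid: CONDITIONS[i % len(CONDITIONS)]
        for i, sid in enumerate(shuffled)
    }


# Hypothetical roster of 12 students.
roster = [f"s{n:03d}" for n in range(12)]
assignment = assign_conditions(roster)
print(Counter(assignment.values()))
```

Dealing from a shuffled roster is one simple way to get equal-sized groups; independent per-student coin flips would also be random but could leave the groups unbalanced in a small class.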


Figure 3: Change in Student Self-Confidence Pre- and Post-Reflection by Assigned Condition.

Study-2: RQ2 Analysis

Study-2 sought to assess the efficacy of LLM-facilitated self-reflection (Condition 2) relative to conventional alternatives: static questionnaire-based reflection (Condition 1) and review of key lecture slides (Condition 3).

As shown in Figure 5, exam performance did not differ significantly across the conditions (F(2, 105) = 0.94, p = 0.394). Mean scores were similar for the LLM (Mean=66.91) and questionnaire-based reflection (Mean=68.30) groups, and both were numerically higher than the mean for students who revised lecture slides (Condition 3, Mean=62.25).
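The reported F statistic can be checked by hand because the numerator has two degrees of freedom. In that special case the upper-tail probability of the F distribution has the closed form P(F > x) = (1 + 2x/d2)^(−d2/2), where d2 is the denominator degrees of freedom. The sketch below applies that formula; the function name is illustrative, and this is a consistency check rather than the authors' analysis.

```python
def f_upper_tail_two_numerator_df(f_stat: float, df_den: float) -> float:
    """P(F > f_stat) for an F distribution with (2, df_den) degrees of freedom.

    Valid only when the numerator degrees of freedom equal 2.
    """
    return (1.0 + 2.0 * f_stat / df_den) ** (-df_den / 2.0)


# Values taken from the text: F(2, 105) = 0.94.
p_value = f_upper_tail_two_numerator_df(0.94, 105)
print(f"p = {p_value:.3f}")
```

The result agrees with the reported p = 0.394 to three decimal places. If the test is a one-way ANOVA over the three conditions, 105 denominator degrees of freedom would correspond to 108 students in the analysis, though the summary does not state that count.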

Despite low participation in the optional reflection activity, students in Study 2 reported similar increases in self-confidence across conditions (Figure 6).

Discussion and Implications

The findings point to promising trends in the use of LLMs as educational facilitators. The small exam-score gains and the increase in self-confidence suggest potential applications within educational tools. Because the exam-score differences were not statistically significant, these possible benefits need confirmation in larger studies.

Learners' intrinsic motivation and metacognitive capacity likely play a critical role in how much they benefit from reflection. Such potential differential impacts highlight the importance of personalization in promoting engagement.

Empirical investigation, however, must extend beyond accuracy metrics. The tendency of LLMs to affirm student responses raises concerns and calls for future work on these conversational agents' fact-checking abilities during educational interventions. Newer models such as GPT-4 could also improve the effectiveness of LLM-guided reflective practice.

Conclusion

This research reveals the potential of integrating LLMs into educational settings as facilitators of reflection practices, offering nuanced opportunities for personalized, scalable, and immediate engagement. These findings suggest avenues for further exploration into the significance of reflective practices in learning outcomes and how advanced AI models can enhance these effects. Future work should continue to explore how LLM-based interactive tools can serve as a bridge to more effective pedagogical strategies, thereby advancing the integration of conversational AI in educational environments.