- The paper presents a novel LD-Agent framework that integrates event memory, persona extraction, and response generation to enable coherent and personalized long-term dialogue.

- It employs a topic-based retrieval mechanism to recall relevant historical events and LoRA-tuned persona extraction to keep personas consistent, supporting coherent and engaging conversations.

- Empirical evaluations using BLEU, ROUGE, and human assessments demonstrate that LD-Agent outperforms state-of-the-art models across multi-session and cross-domain applications.

Overview of "Hello Again! LLM-powered Personalized Agent for Long-term Dialogue"

The paper "Hello Again! LLM-powered Personalized Agent for Long-term Dialogue" (2406.05925) presents a novel framework termed LD-Agent designed to facilitate long-term, personalized interactions in open-domain dialogue systems. Recognizing the limitations of existing dialogue models, which typically focus on short, single-session interactions, the authors propose a model-agnostic framework integrating event perception, persona extraction, and response generation. This paper's insights advance the capabilities of dialogue agents by enabling them to remember and utilize historical events and personas to drive more coherent and meaningful conversations over extended periods.

Problem Definition

The research addresses key challenges in open-domain dialogue systems, including the need for maintaining long-term event memory and persona consistency. Traditional dialogue models often address these components separately, resulting in a lack of coherence and personalization across sessions. Furthermore, existing models heavily depend on specific architectures and often lack cross-domain adaptability, limiting their effectiveness in diverse real-world scenarios.

Methodology

LD-Agent Framework

The LD-Agent framework is a composite structure comprising three core modules:

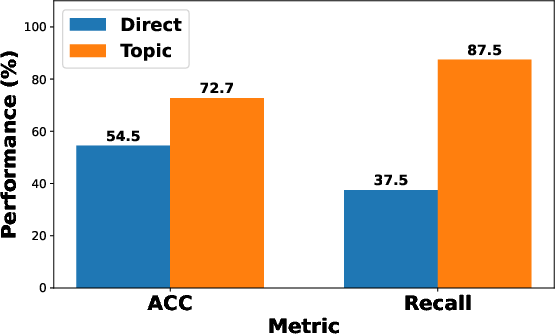

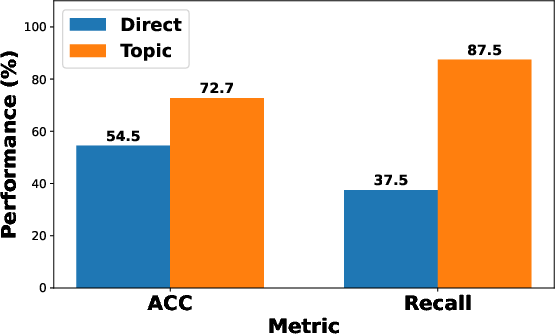

- Event Memory Module: It uses long-term and short-term memory banks to store and retrieve events. Event perception adopts a topic-based retrieval mechanism rather than relying on direct semantic (embedding-only) retrieval, which is intended to surface relevant historical events more accurately.

- Persona Extraction Module: This module focuses on dynamic persona modeling for both users and agents. A LoRA-based instruction tuning mechanism refines persona extraction, ensuring the agents maintain consistent personas. The extracted data guides the dialogue, allowing agents to generate responses that reflect remembered characteristics and previous interactions.

- Response Generation Module: This module conditions the generator on the retrieved memories and the extracted user and agent personas to produce coherent and contextually relevant responses.
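
The way the three modules hand data to each other can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the names `AgentState`, `observe`, `close_session`, and `build_prompt` are hypothetical, the rule that a finished session is summarized into long-term memory is one plausible reading of the two memory banks, and the persona extractor and summarizer are passed in as plain callables standing in for the tuned models described in the paper.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class AgentState:
    """Hypothetical container for the memories and personas of one agent."""
    short_term: List[str] = field(default_factory=list)     # utterances of the current session
    long_term: List[str] = field(default_factory=list)      # event summaries of past sessions
    user_persona: List[str] = field(default_factory=list)   # extracted user traits
    agent_persona: List[str] = field(default_factory=list)  # extracted agent traits


def observe(state: AgentState, speaker: str, utterance: str,
            extract_persona: Callable[[str], List[str]]) -> None:
    """Record an utterance and update the speaker's persona with new traits."""
    state.short_term.append(f"{speaker}: {utterance}")
    target = state.user_persona if speaker == "user" else state.agent_persona
    for trait in extract_persona(utterance):
        if trait not in target:
            target.append(trait)


def close_session(state: AgentState,
                  summarize: Callable[[List[str]], str]) -> None:
    """Assumed behavior: summarize the finished session into long-term memory."""
    if state.short_term:
        state.long_term.append(summarize(state.short_term))
        state.short_term.clear()


def build_prompt(state: AgentState, retrieved_event: Optional[str]) -> str:
    """Assemble the generator input from a retrieved event, personas, and context."""
    parts = [
        "Relevant past event: " + (retrieved_event or "none"),
        "User persona: " + "; ".join(state.user_persona),
        "Agent persona: " + "; ".join(state.agent_persona),
        "Current session:",
        *state.short_term,
    ]
    return "\n".join(parts)


# Toy stand-ins for the persona extractor and the event summarizer.
def toy_extract(text: str) -> List[str]:
    return ["owns a dog"] if "dog" in text else []


def toy_summarize(lines: List[str]) -> str:
    return f"Session with {len(lines)} turns"


demo = AgentState()
observe(demo, "user", "I walked my dog today.", toy_extract)
close_session(demo, toy_summarize)
observe(demo, "user", "Hello again!", toy_extract)
print(build_prompt(demo, demo.long_term[0]))
```

Passing the extractor and summarizer as callables keeps the sketch independent of any particular model, which mirrors the model-agnostic framing of the framework without claiming to reproduce its actual interfaces.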

Implementation Details

- Instruction Tuning: The event summarizer is instruction-tuned on a custom version of the DialogSum dataset to improve event summary quality.

- Memory Retrieval: The retrieval mechanism scores stored memories by semantic relevance, topic overlap, and time decay. Topic overlap is computed from nouns extracted from the text, which is intended to improve retrieval accuracy.

- Evaluation Strategy: Extensive experiments on the MSC (Multi-Session Chat) and CC (Conversation Chronicles) datasets support the framework's effectiveness, generality, and cross-domain adaptability. LD-Agent consistently outperforms other state-of-the-art models across multiple sessions.
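
A retrieval score that combines the three signals named above (semantic relevance, topic overlap, and time decay) could look like the following sketch. The summary does not state how the signals are combined, so the formula used here (an exponential decay multiplied by the sum of cosine similarity and a symmetric noun-overlap ratio) is an assumption, as are the names `MemoryRecord`, `topic_overlap`, `score`, and `retrieve`, the decay constant `tau`, and the `min_semantic` threshold. Embeddings and noun sets are supplied precomputed, standing in for whatever encoder and noun extractor are actually used.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class MemoryRecord:
    summary: str
    embedding: List[float]  # hypothetical: output of any sentence encoder
    nouns: Set[str]         # hypothetical: output of any noun extractor
    timestamp: float        # seconds


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity, returning 0.0 for zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def topic_overlap(query_nouns: Set[str], memory_nouns: Set[str]) -> float:
    """Average share of shared nouns, measured from both sides."""
    if not query_nouns or not memory_nouns:
        return 0.0
    shared = len(query_nouns & memory_nouns)
    return 0.5 * (shared / len(query_nouns) + shared / len(memory_nouns))


def score(query_emb: List[float], query_nouns: Set[str], now: float,
          record: MemoryRecord, tau: float = 1e7) -> float:
    """Assumed combination: decay * (semantic relevance + topic overlap)."""
    decay = math.exp(-(now - record.timestamp) / tau)
    semantic = cosine(query_emb, record.embedding)
    return decay * (semantic + topic_overlap(query_nouns, record.nouns))


def retrieve(query_emb: List[float], query_nouns: Set[str], now: float,
             bank: List[MemoryRecord],
             min_semantic: float = 0.5) -> Optional[MemoryRecord]:
    """Return the best-scoring record, or None if nothing is similar enough."""
    candidates = [r for r in bank
                  if cosine(query_emb, r.embedding) >= min_semantic]
    return max(candidates,
               key=lambda r: score(query_emb, query_nouns, now, r),
               default=None)


# Toy memory bank with hand-made 2-D "embeddings".
bank = [
    MemoryRecord("User took their dog to the park", [1.0, 0.0], {"dog", "park"}, 0.0),
    MemoryRecord("User had a job interview", [0.6, 0.8], {"job", "interview"}, 0.0),
]
best = retrieve([1.0, 0.0], {"dog"}, now=1000.0, bank=bank)
print(best.summary if best else "no relevant memory")
```

Returning `None` when no memory clears the similarity threshold lets the generator fall back to the current context alone instead of being conditioned on an irrelevant event.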

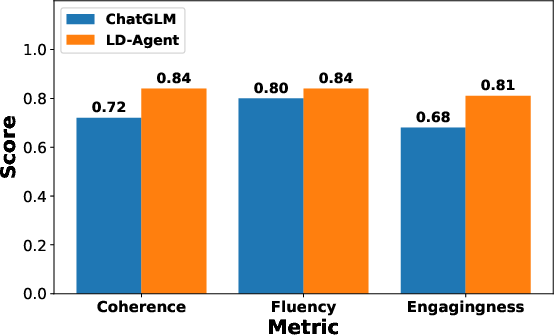

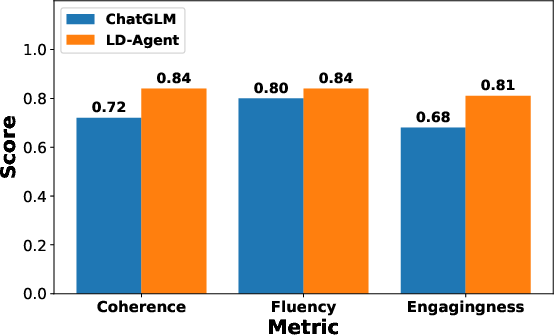

Figure 1: The results of human evaluation on retrieval mechanism and response generation. The topic-based retrieval approach significantly outpaces direct semantic retrieval in coherence, fluency, and engagingness.

Experimental Results

Empirical evaluations show that models employing the LD-Agent framework achieve state-of-the-art performance across various metrics. Notably, all tested models showed significant improvements in coherence, consistency, and overall dialogue quality when integrated with LD-Agent.

- Multi-session Improvement: Evaluations on multi-session datasets demonstrate LD-Agent's capability for supporting sustained and coherent dialogue spanning multiple sessions. Metrics including BLEU and ROUGE indicate superior performance for models utilizing LD-Agent.

- Generality and Transferability: The framework's model-agnostic nature is exemplified by its successful integration with diverse model architectures, including both LLMs and traditional (non-LLM) language models. Additionally, LD-Agent demonstrates strong cross-domain capabilities, maintaining robust performance when trained on one dataset and tested on another.

- Human Evaluation: Human evaluators confirmed that dialogues generated with the LD-Agent framework are more engaging and coherent than those of baseline models.

Conclusions

LD-Agent sets a new benchmark for long-term dialogue agents by successfully marrying historical events and dynamic personas into dialogue generation. The research illustrates the practical potential of model-agnostic frameworks in real-world applications, offering insights into future advancements in AI-based dialogue systems. Future work could explore the framework's application in scenarios with even longer dialogues and more complex interaction structures, further solidifying its utility in creating seamless, human-like conversational agents.