- The paper presents a secure multi-agent LLM framework for autonomous driving (AD) that addresses data leakage, regulatory compliance, and ethical alignment.

- It employs Behavior Expectation Bounds to quantitatively assess model outputs and the impact of sensitive data on driving decisions.

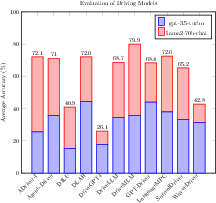

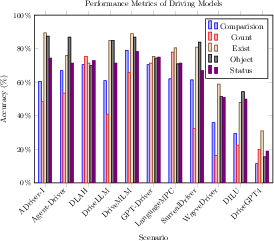

- Experimental results on the nuScenes-QA dataset show varied performance and safety measures across gpt-35-turbo and llama2-70b models.

A Superalignment Framework in Autonomous Driving with LLMs

The paper "A Superalignment Framework in Autonomous Driving with LLMs" presents a security-oriented framework employing LLMs in the context of autonomous driving (AD). The framework addresses significant risks associated with data security, model alignment, and decision-making in autonomous vehicles. It focuses on safeguarding sensitive information and ensuring compliance with human values and legal standards.

Introduction to LLM Safety in Autonomous Driving

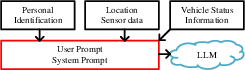

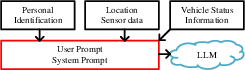

The proposed framework introduces a novel approach by utilizing a multi-agent LLM architecture. This design aims to secure sensitive vehicle-related data, such as precise locations and road conditions, from potential leaks. It also seeks to verify that the outputs generated by LLMs adhere to relevant regulations and align with societal values.
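As a rough illustration of the idea above, the following minimal sketch places two checking agents around a cloud LLM call: one masks sensitive values before the prompt leaves the vehicle, the other screens the response before it is acted on. Everything here is an assumption made for illustration and is not taken from the paper: the regular expressions, the banned phrases, the fallback message, and the names `privacy_agent`, `compliance_agent`, and `guarded_call` are all hypothetical.

```python
import re
from dataclasses import dataclass, field

# Hypothetical patterns for sensitive values (assumptions, not from the paper).
SENSITIVE_PATTERNS = {
    "location": re.compile(r"-?\d{1,3}\.\d{3,},\s*-?\d{1,3}\.\d{3,}"),
    "speed": re.compile(r"\b\d+(?:\.\d+)?\s*(?:km/h|mph|m/s)\b"),
}

# Hypothetical phrases that a compliant driving response must not contain.
BANNED_ACTIONS = ("run the red light", "exceed the speed limit")

FALLBACK = "FALLBACK: keep lane and slow down"


@dataclass
class GateResult:
    prompt: str                                   # prompt with sensitive values masked
    redacted: list = field(default_factory=list)  # names of the masked categories


def privacy_agent(prompt: str) -> GateResult:
    """Mask sensitive values before the prompt is sent to a cloud LLM."""
    redacted = []
    for name, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(prompt):
            prompt = pattern.sub(f"[{name.upper()}]", prompt)
            redacted.append(name)
    return GateResult(prompt, redacted)


def compliance_agent(response: str) -> bool:
    """Return True if the response contains none of the banned phrases."""
    lowered = response.lower()
    return not any(action in lowered for action in BANNED_ACTIONS)


def guarded_call(prompt: str, cloud_llm) -> str:
    """Route a prompt through both agents around a cloud LLM callable."""
    gate = privacy_agent(prompt)
    response = cloud_llm(gate.prompt)
    return response if compliance_agent(response) else FALLBACK
```

Here `cloud_llm` stands for any callable that maps a prompt string to a response string. A production system would need far stronger detection than keyword and pattern matching; the sketch only shows where such checks would sit in the data path.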

Risks in LLM-driven Autonomous Systems

One of the primary challenges highlighted is the risk of data leakage inherent in the cloud-based inference commonly used by LLM-driven systems. Such systems must transmit sensitive data off the vehicle, which introduces vulnerabilities. LLMs are also prone to bias and inaccurate outputs, which, if left unchecked, could lead to unsafe driving decisions.

Figure 1: LLM Safety-as-a-service autonomous driving framework.

Key Contributions

The research provides several major contributions to the field of LLM usage in autonomous vehicles, including:

- A secure interaction framework for LLMs, designed to act as a fail-safe against unintended data exchanges with cloud-based LLMs.

- An analysis of eleven autonomous driving methods driven by LLM technology, focusing on aspects such as safety, privacy, and alignment with human values.

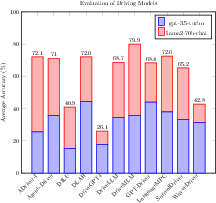

- Validation of driving prompts on a subset of the nuScenes-QA dataset, comparing outcomes between the gpt-35-turbo and llama2-70b LLM backbones.

Methodology

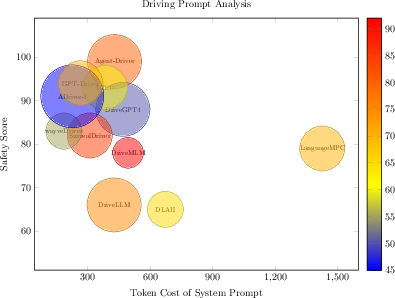

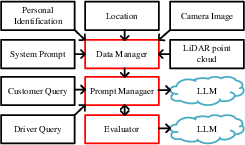

The methodology builds on Behavior Expectation Bounds (BEB), a formalism for quantifying how closely LLM behavior aligns with expected safety and ethical standards. LLM outputs are evaluated with a defined scoring function that measures adherence to safety and alignment requirements. Beyond safety, the framework also examines how sensitive data is used and how effective the vehicle command functions are.
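The summary does not spell out the scoring function, so the following is only a minimal sketch of how a behavior-expectation score could be computed. It assumes a scorer that maps each output to a value in [-1, 1] (negative meaning unsafe) and estimates the expectation as a sample mean. The keyword-based `toy_safety_score`, the unsafe phrases, and the threshold in `within_bound` are hypothetical stand-ins, not the paper's definitions.

```python
from statistics import mean
from typing import Callable, Iterable


def behavior_expectation(outputs: Iterable[str],
                         score: Callable[[str], float]) -> float:
    """Estimate the expected behavior score as the mean score over sampled outputs."""
    scores = [score(o) for o in outputs]
    if not scores:
        raise ValueError("at least one output is required")
    for s in scores:
        if not -1.0 <= s <= 1.0:
            raise ValueError(f"score {s} is outside the assumed range [-1, 1]")
    return mean(scores)


def toy_safety_score(output: str) -> float:
    """Hypothetical scorer: -1.0 if an unsafe phrase appears, otherwise +1.0."""
    unsafe_phrases = ("accelerate through", "ignore the pedestrian")
    lowered = output.lower()
    return -1.0 if any(p in lowered for p in unsafe_phrases) else 1.0


def within_bound(expectation: float, lower_bound: float = 0.0) -> bool:
    """Check the estimate against an assumed lower bound on acceptable behavior."""
    return expectation >= lower_bound


samples = [
    "Slow down and yield.",
    "Accelerate through the crossing.",
    "Stop at the line.",
    "Keep lane.",
]
estimate = behavior_expectation(samples, toy_safety_score)
```

In practice the scorer would more plausibly be a learned classifier or a judging LLM than a keyword match; the sketch only shows how per-output scores aggregate into a single quantity that can be compared against a bound.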

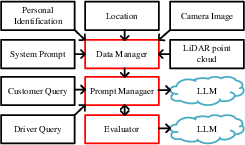

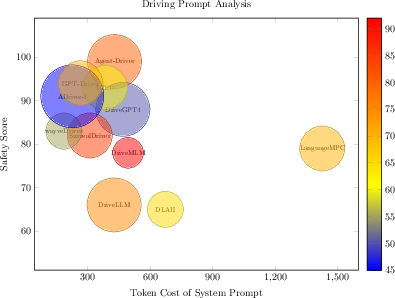

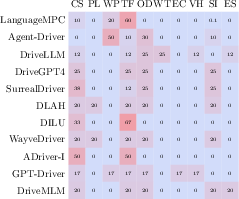

System Prompts and Data Sensitivity

The system prompts of the LLM-driven AD methods were examined for the sensitive data they incorporate. Sensitive data types such as vehicle speed and location were analyzed across different models to gauge how their inclusion affects decision-making accuracy.

Figure 2: LLM-AD system prompt analysis.
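One simple way to approximate the system prompt analysis described in this section is to check which sensitive data categories each prompt mentions. The sketch below does this with keyword matching. The category keywords, the method names, and the example prompts are all hypothetical, and the paper's actual procedure may differ.

```python
# Hypothetical keywords per sensitive category (assumptions, not from the paper).
SENSITIVE_KEYWORDS = {
    "speed": ("speed", "velocity"),
    "location": ("location", "gps", "coordinates"),
}


def sensitive_usage(system_prompt: str) -> dict:
    """Report, per category, whether the prompt mentions any of its keywords."""
    text = system_prompt.lower()
    return {
        category: any(keyword in text for keyword in keywords)
        for category, keywords in SENSITIVE_KEYWORDS.items()
    }


def usage_table(prompts: dict) -> dict:
    """Map each method name to its per-category usage flags."""
    return {name: sensitive_usage(prompt) for name, prompt in prompts.items()}


def category_counts(table: dict) -> dict:
    """Count how many methods use each sensitive category."""
    return {
        category: sum(flags[category] for flags in table.values())
        for category in SENSITIVE_KEYWORDS
    }


# Illustrative prompts for two made-up methods.
example_prompts = {
    "method_a": "You receive the ego vehicle speed and GPS coordinates.",
    "method_b": "Describe the objects in the scene.",
}
table = usage_table(example_prompts)
counts = category_counts(table)
```

Keyword matching will miss paraphrases and can flag harmless mentions, so a table like this is best treated as a first pass that still needs manual review.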

Experimental Results

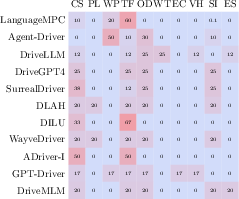

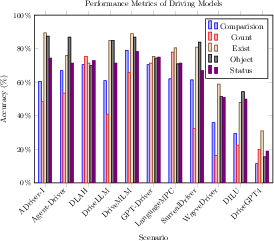

The framework was evaluated along three axes: system prompt effectiveness, safety metrics, and alignment scenarios, across a selection of LLM-driven autonomous driving methods. These assessments were performed using the gpt-35-turbo and llama2-70b-chat LLMs on the nuScenes-QA dataset, covering a range of environmental perception queries.
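Accuracy on a question-answering benchmark of this kind is typically reported both overall and per question type. The sketch below shows one way to compute both from a list of prediction records. The record schema (`type`, `answer`, `prediction`), the exact-match criterion, and the example type labels are assumptions for illustration, not the dataset's official evaluation code.

```python
from collections import defaultdict


def accuracy_by_type(records):
    """Return (per-type accuracy, overall accuracy) using case-insensitive exact match."""
    if not records:
        raise ValueError("at least one record is required")
    correct = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        qtype = record["type"]
        total[qtype] += 1
        # Normalize whitespace and case before comparing answers.
        if record["prediction"].strip().lower() == record["answer"].strip().lower():
            correct[qtype] += 1
    per_type = {qtype: correct[qtype] / total[qtype] for qtype in total}
    overall = sum(correct.values()) / sum(total.values())
    return per_type, overall


# Illustrative records; the type labels are hypothetical.
records = [
    {"type": "exist", "answer": "yes", "prediction": "Yes"},
    {"type": "exist", "answer": "no", "prediction": "yes"},
    {"type": "count", "answer": "2", "prediction": "2 "},
]
per_type, overall = accuracy_by_type(records)
```

Exact match is a strict criterion for free-form LLM output, so a real evaluation would likely need answer extraction or normalization before comparison.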

Results Overview

The experiments revealed notable differences in performance across different autonomous driving prompts. The analysis indicated variations in sensitive data usage and alignment with human values, which are crucial for evaluating vehicle safety and compliance.

Figure 3: LLM-AD system prompt analysis of sensitive data usage.

Figure 4: Overall accuracy in nuScenes-QA dataset.

Figure 5: Results of different models on five question types in nuScenes-QA dataset.

Conclusion

The paper proposes a security framework intended to make the use of LLMs in autonomous vehicle systems safer, emphasizing data safety and ethical alignment. The framework addresses existing vulnerabilities by adding a multi-agent safety assessment system on top of conventional LLM pipelines. The results demonstrate the effectiveness of the security measures in promoting safer and more reliable autonomous driving systems.

In summary, this framework provides a comprehensive solution for integrating LLMs into autonomous driving, balancing technological advancement with essential safety and ethical considerations. Future work could explore extending these principles to other high-risk applications of LLMs, ensuring broader applicability and safer AI deployments.