- The paper presents a novel framework integrating MPM simulations with video diffusion priors to refine dynamic 3D Gaussian scenes.

- It employs score distillation sampling with truncated back-propagation through time to optimize physical parameters and ensure stable convergence.

- Experimental results show competitive performance against PhysDreamer while highlighting challenges in simulating diverse 4D motion interactions.

DreamPhysics: Learning Physics-Based 3D Dynamics with Video Diffusion Priors

Introduction

"DreamPhysics" introduces a novel framework for learning physical properties of dynamic 3D Gaussians with video diffusion priors. Amidst rapidly expanding demand for realistic dynamic 3D interactions in applications such as VR and video gaming, this work aims to bridge the gap between static 3D assets and dynamic simulation by integrating physics-based simulation with video diffusion models. Unlike prior approaches that rely on manual parameter assignments and suffer from unrealistic simulations, DreamPhysics optimizes physical parameters through distillation of video generative models, yielding more realistic motions in 4D content.

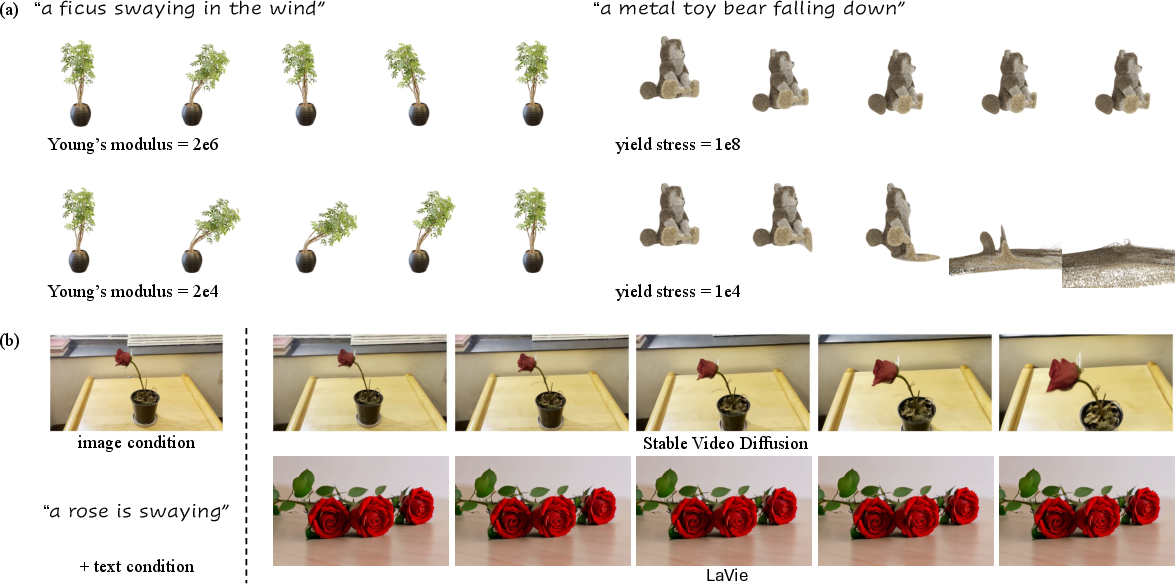

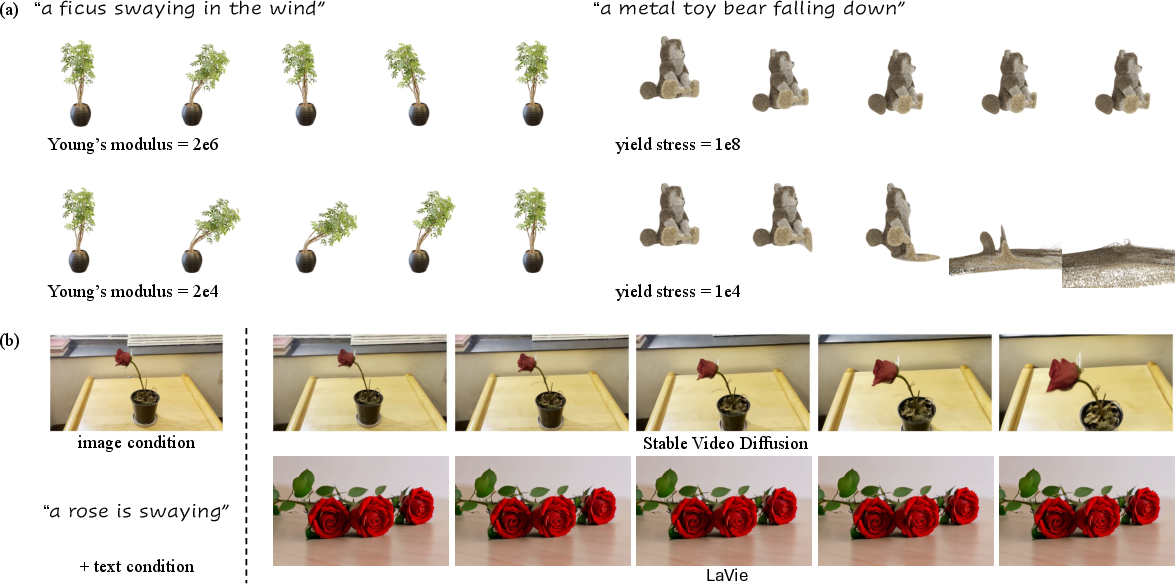

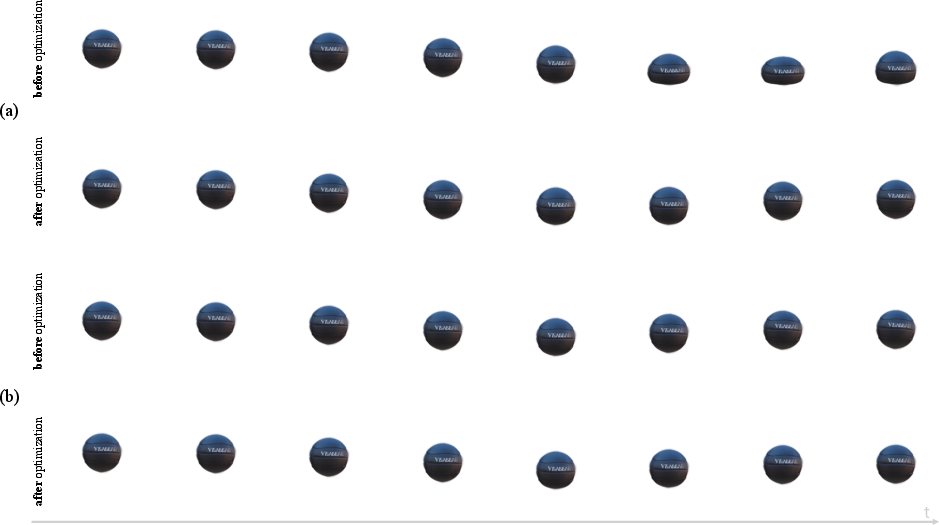

Figure 1: (a) The choice of physical properties significantly affects the quality of simulated videos; (b) current video diffusion models offer little control and struggle to generate the desired results.

Method Overview

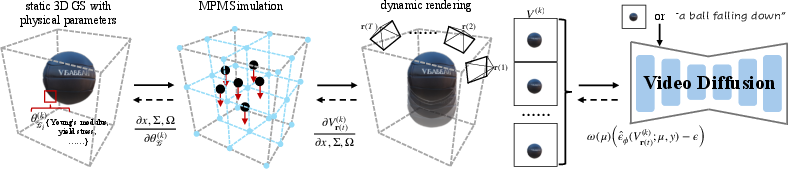

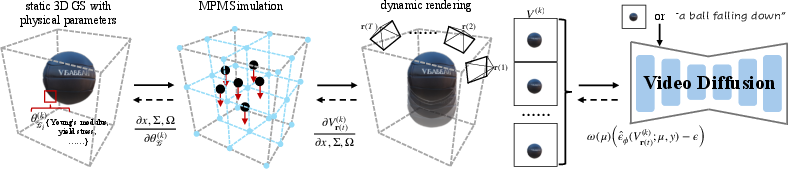

DreamPhysics combines a material point method (MPM) simulation with video diffusion guidance to refine the physical parameters of a static 3D Gaussian Splatting (3D GS) scene. Starting from an initial estimate of the parameters, the framework simulates the scene with MPM and renders the result as a 4D video. Errors caused by the initial settings are then corrected iteratively: score distillation sampling (SDS) is applied to the rendered videos, and the resulting gradients update the parameters. Refinement continues until the physical parameters converge and the simulated 4D scene moves realistically.
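The loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the authors' implementation: `simulate_and_render`, `guidance_grad`, and `refine` are hypothetical names, the "simulator" is a one-parameter linear function standing in for MPM plus 3D GS rendering, and the "guidance" is a fixed reference motion standing in for the video diffusion prior.

```python
def simulate_and_render(theta):
    """Toy stand-in for MPM + 3D GS rendering (assumption): frame t is theta * t."""
    frames = [theta * t for t in range(4)]
    jacobian = [float(t) for t in range(4)]  # d(frame_t) / d(theta)
    return frames, jacobian


def guidance_grad(frames):
    """Toy stand-in for SDS guidance (assumption): pull frames toward a reference."""
    reference = [2.0 * t for t in range(4)]
    return [f - r for f, r in zip(frames, reference)]


def refine(theta, lr=0.05, tol=1e-6, max_iters=1000):
    """Repeat simulate -> render -> guide -> update until the step is tiny."""
    for _ in range(max_iters):
        frames, jac = simulate_and_render(theta)
        g_frames = guidance_grad(frames)
        # Chain rule: per-frame guidance gradient times simulator Jacobian.
        grad_theta = sum(g * j for g, j in zip(g_frames, jac))
        step = lr * grad_theta
        theta -= step
        if abs(step) < tol:
            break
    return theta


theta_final = refine(0.5)
```

In this toy setting the only parameter value that reproduces the reference motion is 2.0, so the loop should settle there. The point of the sketch is the structure: the guidance produces a gradient on the rendered frames, and the differentiable simulator maps it back to the physical parameter.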

Figure 2: Overview of DreamPhysics. We first initialize a set of physical parameters for a static 3D GS scene; these parameters are then refined through a series of optimization steps based on the simulated results.

Parameter Optimization

The core of DreamPhysics is the differentiability of both the MPM simulation and the 3D GS rendering. Because gradients can propagate backward through these components, the physical parameters can be optimized directly: gradients computed with SDS flow back through the rendering and simulation steps into the physical properties, which are refined across epochs.
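For reference, the SDS gradient is usually written in the form introduced by DreamFusion. The notation below is an assumption made for illustration rather than the paper's own: $\theta$ denotes the physical parameters (for example Young's modulus), $s$ the simulated particle states, $x$ the rendered video, $x_t$ its noised version at diffusion timestep $t$, $y$ the text or image condition, $\epsilon$ the injected noise, $\epsilon_\phi$ the video diffusion model's noise prediction, and $w(t)$ a timestep weighting.

$$
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}
= \mathbb{E}_{t,\epsilon}\left[\, w(t)\,\bigl(\epsilon_\phi(x_t;\, y,\, t) - \epsilon\bigr)\,
\frac{\partial x}{\partial s}\,\frac{\partial s}{\partial \theta} \right]
$$

Read this way, the factor $\partial x / \partial s$ comes from the differentiable renderer and $\partial s / \partial \theta$ from the differentiable simulation, which is why both components need to be differentiable for the update to reach the physical properties.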

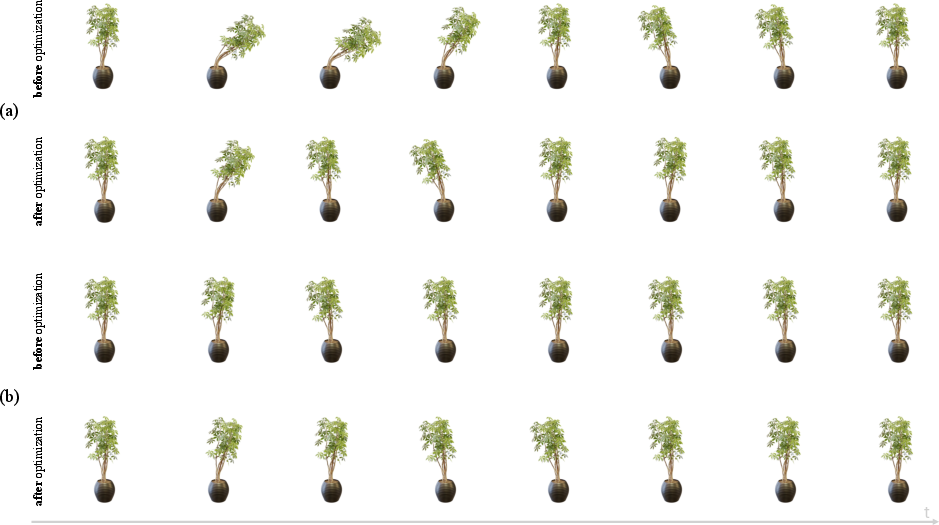

In addition, truncated back-propagation through time (BPTT), paired with frame interpolation, mitigates vanishing and exploding gradients and gives each training epoch a stable update path.
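To make the idea of truncated BPTT concrete, here is a minimal sketch on a toy problem. It is an assumption-laden illustration, not the paper's code: a 1D spring with stiffness `k` stands in for the MPM simulation, the loss depends only on the final position, and the names `simulate`, `loss`, and `truncated_bptt_grad` are hypothetical. The backward pass walks through at most `window` of the most recent steps and treats the earlier state as a constant.

```python
def simulate(k, steps, x0=1.0, v0=0.0, dt=0.01):
    """Symplectic-Euler spring (toy stand-in for MPM); returns all (x, v) states."""
    states = [(x0, v0)]
    x, v = x0, v0
    for _ in range(steps):
        v = v - dt * k * x
        x = x + dt * v
        states.append((x, v))
    return states


def loss(k, steps, target=0.0, dt=0.01):
    """Half squared error between the final position and a target."""
    x_final, _ = simulate(k, steps, dt=dt)[-1]
    return 0.5 * (x_final - target) ** 2


def truncated_bptt_grad(k, steps, window, target=0.0, dt=0.01):
    """dLoss/dk, back-propagated through only the last `window` steps."""
    states = simulate(k, steps, dt=dt)
    gx = states[-1][0] - target  # adjoint of the final position
    gv = 0.0                     # adjoint of the final velocity
    gk = 0.0
    first = max(0, steps - window)
    for t in range(steps - 1, first - 1, -1):
        x_t, _ = states[t]
        gv = gv + dt * gx      # through x_{t+1} = x_t + dt * v_{t+1}
        gk += -dt * x_t * gv   # through v_{t+1} = v_t - dt * k * x_t
        gx = gx - dt * k * gv  # x_t feeds both update equations
    return gk


full_grad = truncated_bptt_grad(50.0, steps=100, window=100)
short_grad = truncated_bptt_grad(50.0, steps=100, window=10)
```

With `window` equal to the number of steps, the result is the full BPTT gradient and can be checked against a finite difference of `loss`. With a shorter window the gradient is a cheaper approximation that ignores long-range dependencies, which is the trade-off that keeps the gradient path short.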

Frame Interpolation and Log Gradient

Frame interpolation strategies segment the video frames into multiple groups, providing a window for iterative optimization across diverse motion scenarios. Logarithmic gradient updates equalize the granularity of updates across physical parameters whose magnitudes vary widely, which helps properties such as Young’s modulus converge accurately.
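One common way to obtain updates of uniform granularity across orders of magnitude is to take gradient steps on the logarithm of a parameter rather than on the parameter itself. The sketch below illustrates that idea only; whether DreamPhysics uses exactly this parameterization is an assumption, and `log_space_step`, `toy_grad`, and the toy loss are hypothetical.

```python
import math


def log_space_step(E, grad_E, lr=0.1):
    """One gradient-descent step on theta = log10(E) instead of on E."""
    theta = math.log10(E)
    # Chain rule: dL/dtheta = dL/dE * dE/dtheta, with dE/dtheta = E * ln(10).
    grad_theta = grad_E * E * math.log(10.0)
    return 10.0 ** (theta - lr * grad_theta)


def toy_grad(E, E_target=1e6):
    """dL/dE for the toy loss L = (log10(E) - log10(E_target))**2."""
    return 2.0 * (math.log10(E) - math.log10(E_target)) / (E * math.log(10.0))


# Start two orders of magnitude below the toy target and iterate.
E = 1e4
for _ in range(100):
    E = log_space_step(E, toy_grad(E))
```

Each step here changes `E` by a multiplicative factor, so a value near 1e4 and a value near 1e8 are adjusted with comparable relative precision. A plain step on `E` with a single learning rate would be either negligible at the high end or unstable at the low end.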

Experiments

DreamPhysics is evaluated under both text-conditioned and image-conditioned optimization, demonstrating that it can adjust physical parameters to produce realistic simulations.

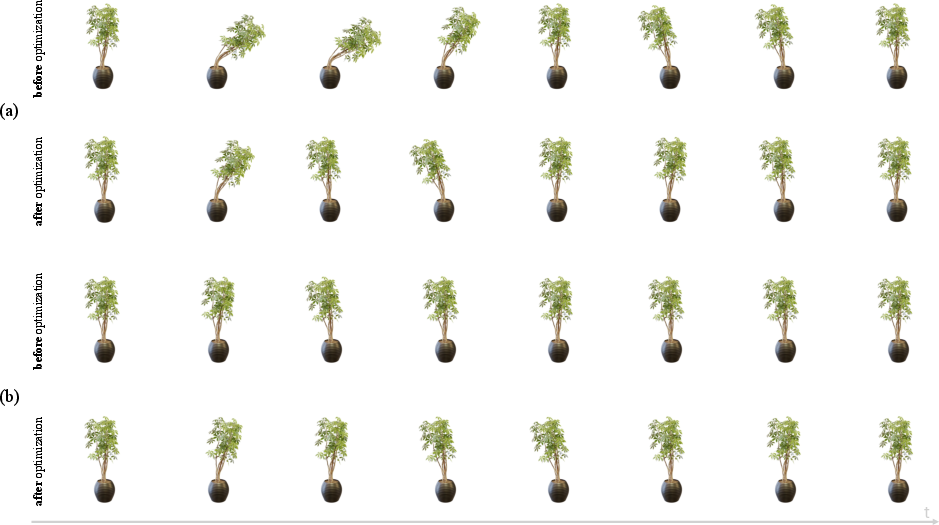

Figure 3: Text-conditioned optimization. (a) If Young's modulus is set too low, the ficus tilts excessively; (b) if it is set too high, the oscillation becomes indistinct.

Figure 4: Image-conditioned optimization. (a) With a low modulus, deformation is excessive; (b) with a high modulus, deformation is insufficient.

Comparison and Discussion

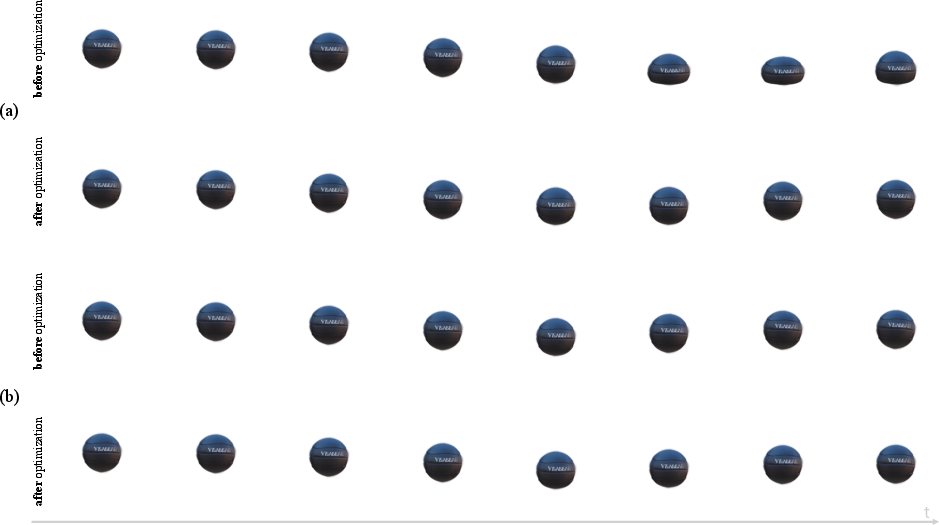

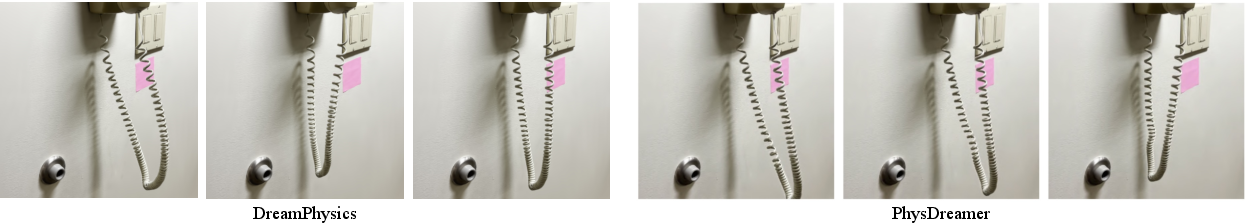

In comparative evaluations against PhysDreamer, DreamPhysics displays competitive performance by distilling video priors more effectively. Unlike PhysDreamer, which relies principally on a video generated by Stable Video Diffusion (SVD) as ground truth, DreamPhysics guides its optimization by distilling the video prior directly.

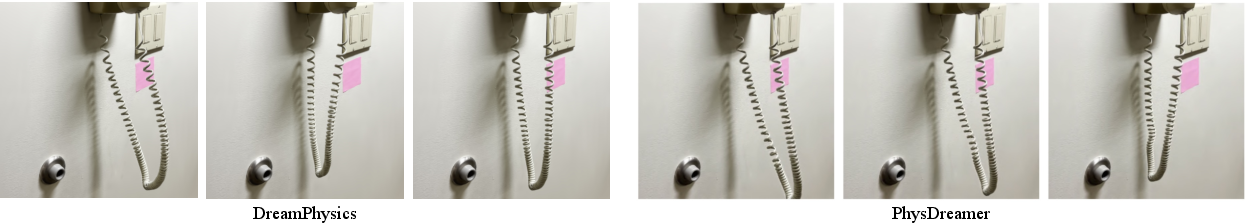

Figure 5: Comparison with our concurrent work PhysDreamer.

Nonetheless, challenges remain, such as expanding the diversity of simulated motion beyond collision events and swaying, and formulating physics-based metrics to better evaluate simulation quality. The limitations of current simulators in handling extensive scene interactions are another area for improvement.

Conclusion

DreamPhysics advances the learning and optimization of physical dynamics for 3D scenes. By combining video diffusion priors with physical property optimization, it generates more realistic motion. The work points toward future research on dynamic 3D scene simulation, in particular expanding the variety and complexity of simulated interactions in virtual environments.