LLMs for User Interest Exploration in Large-scale Recommendation Systems (2405.16363v2)

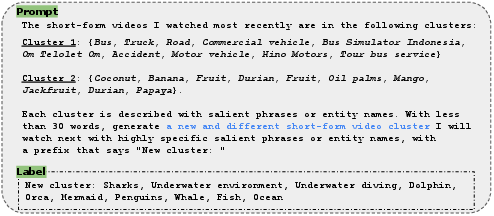

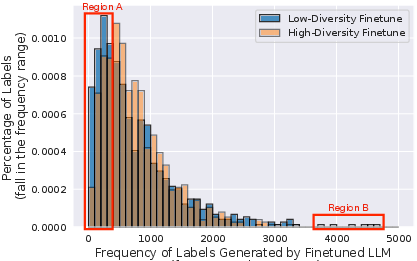

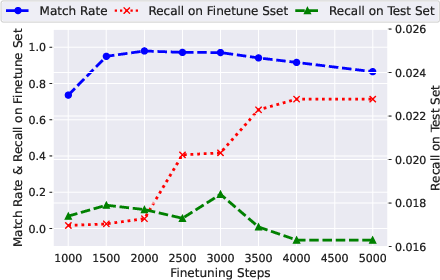

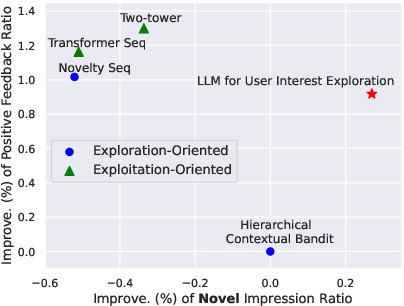

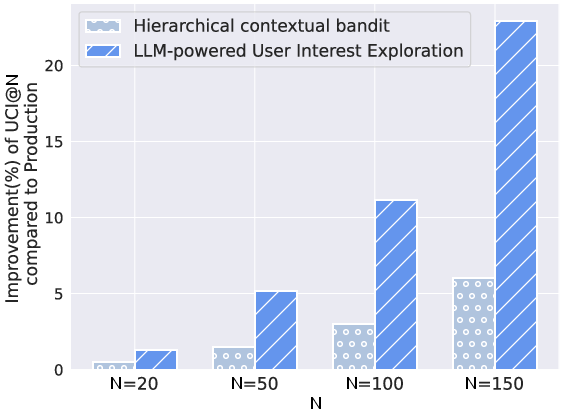

Abstract: Traditional recommendation systems are subject to a strong feedback loop by learning from and reinforcing past user-item interactions, which in turn limits the discovery of novel user interests. To address this, we introduce a hybrid hierarchical framework combining LLMs and classic recommendation models for user interest exploration. The framework controls the interfacing between the LLMs and the classic recommendation models through "interest clusters", the granularity of which can be explicitly determined by algorithm designers. It recommends the next novel interests by first representing "interest clusters" using language, and employs a fine-tuned LLM to generate novel interest descriptions that are strictly within these predefined clusters. At the low level, it grounds these generated interests to an item-level policy by restricting classic recommendation models, in this case a transformer-based sequence recommender to return items that fall within the novel clusters generated at the high level. We showcase the efficacy of this approach on an industrial-scale commercial platform serving billions of users. Live experiments show a significant increase in both exploration of novel interests and overall user enjoyment of the platform.

- Palm 2 technical report. arXiv preprint arXiv:2305.10403.

- Tallrec: An effective and efficient tuning framework to align large language model with recommendation. arXiv preprint arXiv:2305.00447.

- Youtube Official Blog. 2023. YouTube by the Number. Retrieved January, 2023 from https://blog.youtube/press/

- Language models are few-shot learners. In NeurIPS.

- How algorithmic confounding in recommendation systems increases homogeneity and decreases utility. In RecSys.

- Cluster Anchor Regularization to Alleviate Popularity Bias in Recommender Systems. In Companion Proceedings of the ACM on Web Conference 2024. 151–160.

- Minmin Chen. 2021. Exploration in recommender systems. In RecSys.

- Top-k off-policy correction for a REINFORCE recommender system. In WSDM.

- Values of user exploration in recommender systems. In RecSys.

- Large language models for user interest journeys. arXiv preprint arXiv:2305.15498 (2023).

- Uncovering ChatGPT’s Capabilities in Recommender Systems. arXiv preprint arXiv:2305.02182.

- Gemini Team Google. 2023. Gemini: A family of highly capable multimodal models. arXiv preprint arXiv:2312.11805 (2023).

- Recommendation as language processing (rlp): A unified pretrain, personalized prompt & predict paradigm (p5). In ResSys.

- VIP5: Towards Multimodal Foundation Models for Recommendation. arXiv preprint arXiv:2305.14302.

- James Hale. 2019. More Than 500 Hours Of Content Are Now Being Uploaded To YouTube Every Minute. Retrieved January, 2023 from https://www.tubefilter.com/2019/05/07/number-hours-video-uploaded-to-youtube-per-minute/

- Learning vector-quantized item representation for transferable sequential recommenders. In TheWebConf.

- Large language models are zero-shot rankers for recommender systems. ECIR.

- Tim Ingham. 2023. Over 60,000 Tracks are Now Uploaded to Spotify Every Day. That’s Nearly One per Second. Retrieved January, 2023 from https://www.musicbusinessworldwide.com/over-60000-tracks-are-now-uploaded-to-spotify-daily-thats-nearly-one-per-second/

- TagGPT: Large Language Models are Zero-shot Multimodal Taggers. arXiv preprint arXiv:2304.03022.

- Text Is All You Need: Learning Language Representations for Sequential Recommendation. In KDD.

- GPT4Rec: A generative framework for personalized recommendation and user interests interpretation. arXiv preprint arXiv:2304.03879.

- Rella: Retrieval-enhanced large language models for lifelong sequential behavior comprehension in recommendation. TheWebConf.

- Is chatgpt a good recommender? a preliminary study. arXiv preprint arXiv:2304.10149.

- A First Look at LLM-Powered Generative News Recommendation. arXiv preprint arXiv:2305.06566.

- PIE: Personalized Interest Exploration for Large-Scale Recommender Systems. In Companion Proceedings of the ACM Web Conference 2023. 508–512.

- Feedback loop and bias amplification in recommender systems. In CIKM.

- Self-attention with relative position representations. arXiv preprint arXiv:1803.02155 (2018).

- Show me the whole world: Towards entire item space exploration for interactive personalized recommendations. In WSDM.

- Long-Term Value of Exploration: Measurements, Findings and Algorithms. In WSDM.

- Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288.

- Large Language Models as Data Augmenters for Cold-Start Item Recommendation. arXiv preprint arXiv:2402.11724 (2024).

- Result Diversification in Search and Recommendation: A Survey. TKDE (2024).

- Towards Open-World Recommendation with Knowledge Augmentation from Large Language Models. arXiv preprint arXiv:2306.10933.

- Mixed negative sampling for learning two-tower neural networks in recommendations. In Companion Proceedings of the Web Conference 2020.

- Tiny-newsrec: Effective and efficient plm-based news recommendation. In EMNLP.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.