Adaptable and Reliable Text Classification using Large Language Models (2405.10523v3)

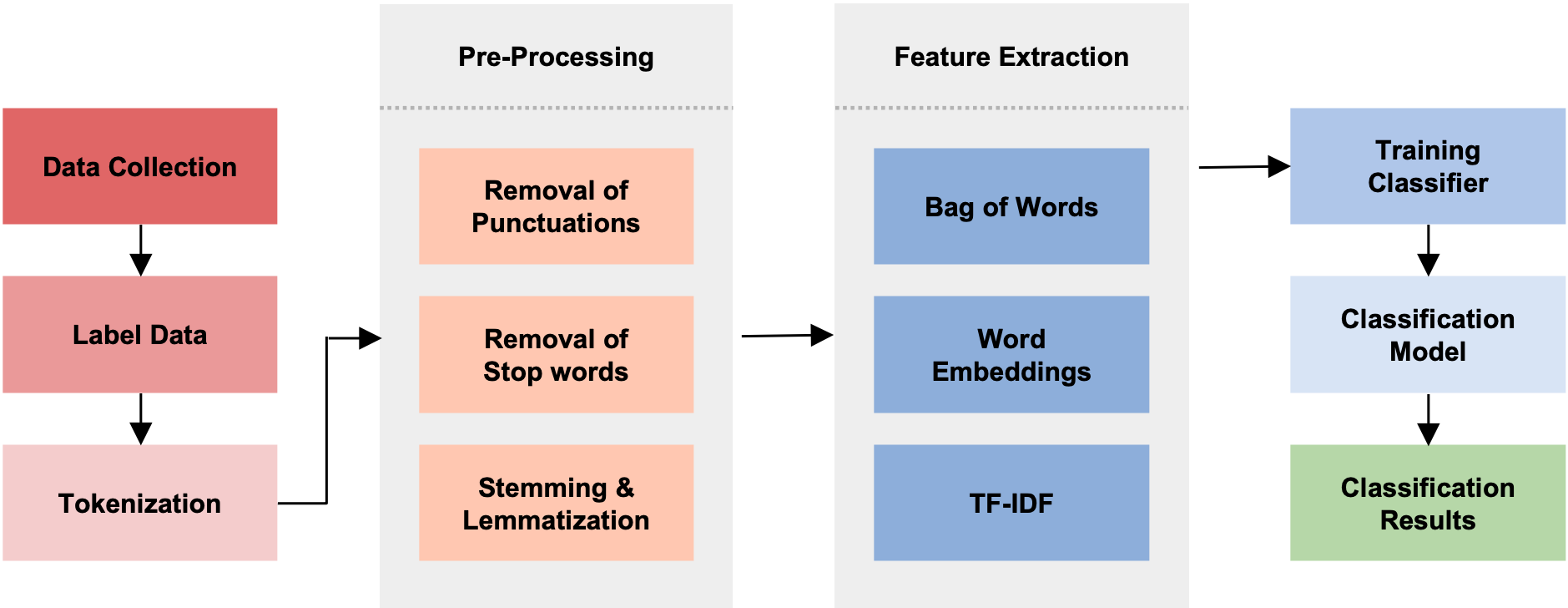

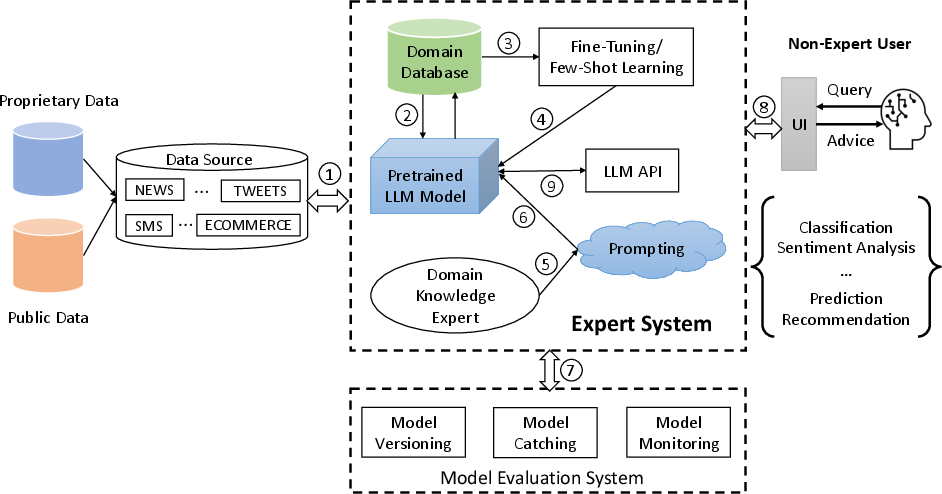

Abstract: Text classification is a fundamental NLP task, and the advent of large language models (LLMs) has reshaped how it is approached. This paper introduces an adaptable and reliable text classification paradigm that uses LLMs as its core component. The system simplifies the traditional text classification workflow, reducing the need for extensive preprocessing and domain-specific expertise. We evaluate several LLMs, machine learning algorithms, and neural network architectures on four diverse datasets. The results show that certain LLMs surpass traditional methods in sentiment analysis, spam SMS detection, and multi-label classification. Performance improves further with few-shot prompting or fine-tuning, and the fine-tuned model is the top performer across all datasets. Source code and datasets are available at https://github.com/yeyimilk/LLM-zero-shot-classifiers.
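To make the zero-shot and few-shot settings mentioned in the abstract concrete, here is a minimal Python sketch of prompt-based classification. It is not the authors' implementation: the prompt wording, the function names (`build_prompt`, `parse_label`, `classify`), and the `llm` callable are all hypothetical, and `fake_llm` is a stand-in so the example runs without any model. It also assumes single-label classification; the multi-label case would need a different parsing step.

```python
from typing import Callable, Optional, Sequence, Tuple


def build_prompt(
    text: str,
    labels: Sequence[str],
    examples: Sequence[Tuple[str, str]] = (),
) -> str:
    """Build a zero-shot prompt (no examples) or a few-shot prompt (with examples)."""
    parts = [
        "Classify the text into exactly one of these labels: "
        + ", ".join(labels)
        + ".\nAnswer with the label only."
    ]
    # Few-shot: each labeled example is shown in the same format as the query.
    for ex_text, ex_label in examples:
        parts.append(f"Text: {ex_text}\nLabel: {ex_label}")
    parts.append(f"Text: {text}\nLabel:")
    return "\n\n".join(parts)


def parse_label(response: str, labels: Sequence[str]) -> Optional[str]:
    """Map a free-form model reply onto one of the allowed labels, or None."""
    cleaned = response.strip().lower()
    # Check longer labels first so a label that contains another one wins.
    for label in sorted(labels, key=len, reverse=True):
        if label.lower() in cleaned:
            return label
    return None


def classify(
    text: str,
    labels: Sequence[str],
    llm: Callable[[str], str],
    examples: Sequence[Tuple[str, str]] = (),
) -> Optional[str]:
    """Send the prompt to any text-in, text-out model and parse its reply."""
    return parse_label(llm(build_prompt(text, labels, examples)), labels)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; always answers 'Spam.'."""
    return " Spam.\n"


if __name__ == "__main__":
    labels = ["ham", "spam"]
    shots = [("See you at lunch tomorrow?", "ham")]
    print(classify("WIN a free prize now!!!", labels, fake_llm, shots))
```

Passing an empty `examples` sequence gives the zero-shot setting, and supplying a few labeled pairs gives the few-shot one. Keeping the model behind a plain callable means the same code could wrap a hosted API or a locally fine-tuned model without changing the prompt or parsing logic.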
