Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems (2405.06624v3)

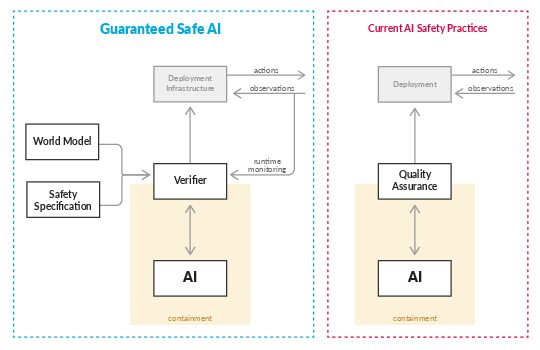

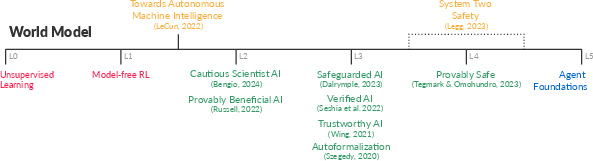

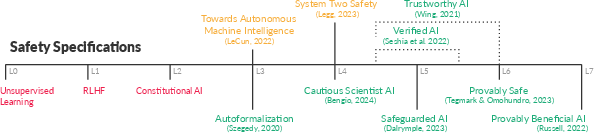

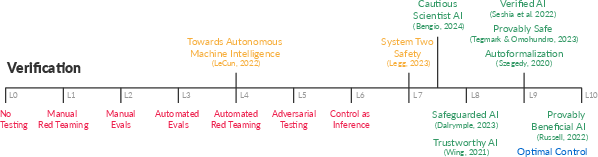

Abstract: Ensuring that AI systems reliably and robustly avoid harmful or dangerous behaviours is a crucial challenge, especially for AI systems with a high degree of autonomy and general intelligence, or systems used in safety-critical contexts. In this paper, we introduce and define a family of approaches to AI safety, which we refer to as guaranteed safe (GS) AI. The core feature of these approaches is that they aim to produce AI systems which are equipped with high-assurance quantitative safety guarantees. This is achieved through the interplay of three core components: a world model (which provides a mathematical description of how the AI system affects the outside world), a safety specification (which is a mathematical description of what effects are acceptable), and a verifier (which provides an auditable proof certificate that the AI satisfies the safety specification relative to the world model). We outline a number of approaches for creating each of these three core components, describe the main technical challenges, and suggest a number of potential solutions to them. We also argue for the necessity of this approach to AI safety, and for the inadequacy of the main alternative approaches.
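The interplay of world model, safety specification and verifier can be illustrated with a deliberately tiny sketch. It is not the paper's method. It makes three simplifying assumptions:

- The world model is a finite Markov chain describing how the world evolves under a fixed AI policy.
- The safety specification bounds the probability of visiting an "unsafe" state within a fixed horizon.
- The verifier returns, as its certificate, a table of per-state upper bounds on that probability.

A separate checker audits the certificate using only local inequalities. Because of this, the code that produced the certificate does not itself need to be trusted. All names (`SafetySpec`, `verify`, `check_certificate`) and the example states are hypothetical. Real GS AI world models and specifications are far richer than this.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Optional

# Hypothetical toy world model: a finite Markov chain describing how the world
# evolves under a fixed AI policy. Each state maps to (next_state, probability)
# pairs; probabilities are assumed non-negative and every successor is a key.
WorldModel = dict[str, list[tuple[str, Fraction]]]

# A certificate is a table risk[k][s]: a claimed upper bound on the probability
# of visiting an unsafe state within k steps when starting from state s.
Certificate = list[dict[str, Fraction]]


@dataclass(frozen=True)
class SafetySpec:
    unsafe: frozenset[str]  # states whose occurrence is unacceptable
    horizon: int            # number of time steps covered by the guarantee
    max_risk: Fraction      # tolerated probability of visiting an unsafe state


def verify(model: WorldModel, spec: SafetySpec, init: str) -> Optional[Certificate]:
    """Compute exact bounded-reachability risk by dynamic programming.

    Returns the full risk table as a certificate if the spec holds, else None.
    """
    def step(prev: dict[str, Fraction], s: str) -> Fraction:
        if s in spec.unsafe:
            return Fraction(1)
        return sum((p * prev[t] for t, p in model[s]), Fraction(0))

    risk: Certificate = [{s: Fraction(int(s in spec.unsafe)) for s in model}]
    for _ in range(spec.horizon):
        prev = risk[-1]
        risk.append({s: step(prev, s) for s in model})
    return risk if risk[-1][init] <= spec.max_risk else None


def check_certificate(model: WorldModel, spec: SafetySpec, init: str,
                      cert: Certificate) -> bool:
    """Audit a certificate using only local inequalities.

    Any table passing these checks upper-bounds the true risk (by induction on
    k), so acceptance implies the safety spec holds in this world model.
    """
    if len(cert) != spec.horizon + 1:
        return False
    for k, layer in enumerate(cert):
        for s in model:
            if s in spec.unsafe:
                needed = Fraction(1)
            elif k == 0:
                needed = Fraction(0)
            else:
                needed = sum((p * cert[k - 1][t] for t, p in model[s]), Fraction(0))
            if layer.get(s, Fraction(-1)) < needed:  # missing entries fail
                return False
    return cert[-1][init] <= spec.max_risk


# Usage: three hypothetical states, one of which is unsafe.
F = Fraction
model: WorldModel = {
    "ok":   [("ok", F(9, 10)), ("warn", F(1, 10))],
    "warn": [("ok", F(1, 2)), ("warn", F(2, 5)), ("bad", F(1, 10))],
    "bad":  [("bad", F(1))],
}
spec = SafetySpec(unsafe=frozenset({"bad"}), horizon=3, max_risk=F(1, 20))
cert = verify(model, spec, "ok")
print(cert[-1]["ok"])                              # 23/1000
print(check_certificate(model, spec, "ok", cert))  # True
```

Exact rational arithmetic (`fractions.Fraction`) is used so that the certificate check involves no floating-point rounding. The key design choice is the split between `verify` and `check_certificate`. The checker accepts any over-approximating table, so a faster or heuristic verifier could be swapped in without weakening the guarantee. The guarantee only ever holds *relative to* the world model, as the abstract stresses.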
