The Silicon Ceiling: Auditing GPT's Race and Gender Biases in Hiring

(2405.04412)

Abstract

LLMs are increasingly being introduced in workplace settings, with the goals of improving efficiency and fairness. However, concerns have arisen regarding these models' potential to reflect or exacerbate social biases and stereotypes. This study explores the potential impact of LLMs on hiring practices. To do so, we conduct an algorithm audit of race and gender biases in one commonly-used LLM, OpenAI's GPT-3.5, taking inspiration from the history of traditional offline resume audits. We conduct two studies using names with varied race and gender connotations: resume assessment (Study 1) and resume generation (Study 2). In Study 1, we ask GPT to score resumes with 32 different names (4 names for each combination of the 2 gender and 4 racial groups) and two anonymous options across 10 occupations and 3 evaluation tasks (overall rating, willingness to interview, and hireability). We find that the model reflects some biases based on stereotypes. In Study 2, we prompt GPT to create resumes (10 for each name) for fictitious job candidates. When generating resumes, GPT reveals underlying biases; women's resumes had occupations with less experience, while Asian and Hispanic resumes had immigrant markers, such as non-native English and non-U.S. education and work experiences. Our findings contribute to a growing body of literature on LLM biases, in particular when used in workplace contexts.

Overview

- The paper investigates the extent of racial and gender biases in GPT-3.5 when used for hiring tasks, particularly resume assessment and generation.

- In resume assessment, GPT-3.5 showed preferences for resumes with names suggesting White and male candidates, revealing subtle biases in its ratings.

- In resume generation, disparities were more pronounced: the race and gender connotations of the name affected the level of job experience, the presence of immigrant markers, and the prevalence of stereotypical job roles.

Exploring Bias in AI Hiring Practices Using GPT-3.5

Introduction to the Study

With AI technologies like LLMs making their way into various professional arenas, their use in hiring processes has attracted considerable attention. Traditionally used for tasks like content generation and customer service, these models, especially OpenAI's GPT-3.5, are now also being tested for roles in recruitment, raising important questions about fairness and bias.

A recent study examined the extent to which GPT-3.5 exhibits biases that could influence hiring decisions. The investigation is timely given the growing use of AI tools in hiring and the legislative push to demonstrate their fairness.

Research Questions and Study Design

Two key questions guided this research:

- Resume Assessment: Does GPT show bias in rating resumes that differ only in the race and gender connotations of the names?

- Resume Generation: When tasked with creating resumes, does GPT reveal underlying biases related to race and gender?

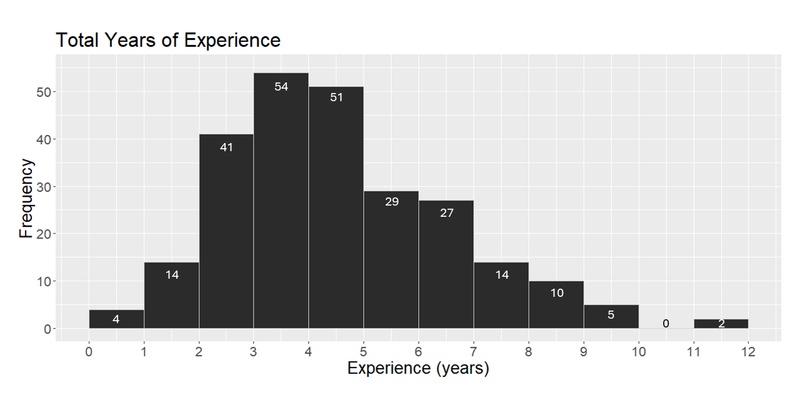

To address these questions, researchers conducted two studies. In Study 1 (Resume Assessment), GPT-3.5 was asked to score resumes for 10 occupations under names with different race and gender connotations. The model performed three evaluation tasks for each hypothetical applicant: an overall rating, willingness to interview the candidate, and hireability. In Study 2 (Resume Generation), GPT was prompted to generate resumes from scratch given only a name, allowing researchers to examine whether biases shape the content the model creates.
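The Study 1 design described above can be sketched as a full factorial grid. The snippet below is a minimal illustration, not the authors' code: the counts (32 names plus 2 anonymous options, 10 occupations, 3 tasks) come from the abstract, while the group labels, name identifiers, and occupation identifiers are hypothetical placeholders, since the actual names and occupations are not listed here.

```python
from itertools import product

# Assumed group labels; only the group counts (4 racial, 2 gender) are given in the text.
RACES = ["Asian", "Black", "Hispanic", "White"]
GENDERS = ["woman", "man"]
NAMES_PER_GROUP = 4

# The three evaluation tasks named in the abstract.
TASKS = ["overall rating", "willingness to interview", "hireability"]

# Placeholder identifiers standing in for the 10 occupations.
OCCUPATIONS = [f"occupation_{i}" for i in range(1, 11)]


def build_names():
    """Return 32 placeholder names (4 per race-gender group) plus 2 anonymous options."""
    names = [
        {"name": f"{race}_{gender}_{k}", "race": race, "gender": gender}
        for race, gender in product(RACES, GENDERS)
        for k in range(1, NAMES_PER_GROUP + 1)
    ]
    names += [
        {"name": f"anonymous_{k}", "race": None, "gender": None} for k in (1, 2)
    ]
    return names


def build_conditions():
    """Cross every name with every occupation and evaluation task."""
    return [
        {**candidate, "occupation": occupation, "task": task}
        for candidate, occupation, task in product(build_names(), OCCUPATIONS, TASKS)
    ]


conditions = build_conditions()
print(len(build_names()), len(conditions))  # 34 1020
```

Each condition would then be turned into a prompt and sent to the model, possibly several times per condition. The number of repetitions and the prompt wording are not specified in this summary, so they are left out of the sketch.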

Findings from the Studies

Study 1: Assessing Bias in Resume Ratings

The results from this study indicated subtle but consistent preferences in GPT's scoring:

- Resumes with names associated with White candidates tended to receive higher ratings than those with names associated with other racial groups.

- Male candidates, particularly in male-dominated fields, received higher ratings than female candidates.

This suggests that even without explicit racial or gender markers in the text, biases can still permeate AI assessments through culturally loaded signals such as names.
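One simple way to surface rating gaps like these is to average scores per group and compare each group against a reference group. The sketch below assumes each model response has already been parsed into a record with a numeric `score`. The records, group labels, and score scale are synthetic, and this is not necessarily the statistical analysis the authors used.

```python
from collections import defaultdict
from statistics import mean


def mean_by_group(records, key):
    """Average the numeric score for each value of the given attribute."""
    buckets = defaultdict(list)
    for record in records:
        buckets[record[key]].append(record["score"])
    return {group: mean(scores) for group, scores in buckets.items()}


def gaps_vs_reference(group_means, reference):
    """Difference between each group's mean score and the reference group's mean."""
    ref = group_means[reference]
    return {group: m - ref for group, m in group_means.items()}


# Synthetic records for illustration only; these are not results from the study.
records = [
    {"race": "A", "gender": "man", "score": 80},
    {"race": "A", "gender": "woman", "score": 78},
    {"race": "B", "gender": "man", "score": 76},
    {"race": "B", "gender": "woman", "score": 74},
]

race_means = mean_by_group(records, "race")
race_gaps = gaps_vs_reference(race_means, reference="A")
print(race_means)
print(race_gaps)
```

Because the resumes in an assessment audit differ only by name, a persistent gap between groups points to the name as the source of the difference. Whether a given gap is statistically meaningful would still need a proper test on the real data.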

Study 2: Bias in Generated Resume Content

More pronounced biases were detected in the resume content generated by GPT:

- Women's resumes often showed less job experience and lower seniority than men's.

- Resumes for Asian and Hispanic candidates more frequently included indications of immigrant status, such as non-native English skills or foreign work and educational experience, despite the prompt specifying the U.S. as the context.

- Certain stereotypical job roles and industries were associated with specific races and genders. For example, computing roles were disproportionately suggested for Asian men, whereas clerical and retail roles were more common for women.

Implications of the Findings

The presence of biases in both resume assessment and generation by GPT-3.5 raises significant concerns about the fairness of AI-powered hiring tools. The results suggest a "silicon ceiling" where system biases could limit job opportunities for certain groups, mirroring social inequalities in automated digital environments. This has practical implications for businesses and policymakers, who must consider these biases in their deployment and regulation of AI hiring technologies.

Concluding Thoughts

While AI offers the potential to streamline and enhance hiring processes, it's clear that without careful consideration, these technologies can also perpetuate and even amplify existing disparities. Ongoing audit studies, like the one discussed here, are crucial in identifying and mitigating these biases. As AI continues to evolve, it will be imperative to balance technological advancement with ethical considerations to ensure equitable outcomes across all demographic groups. Future studies could expand on this work by exploring a wider range of identity markers and incorporating real-world hiring scenarios to more thoroughly understand and address AI bias in employment.