Is Sora a World Simulator? A Comprehensive Survey on General World Models and Beyond

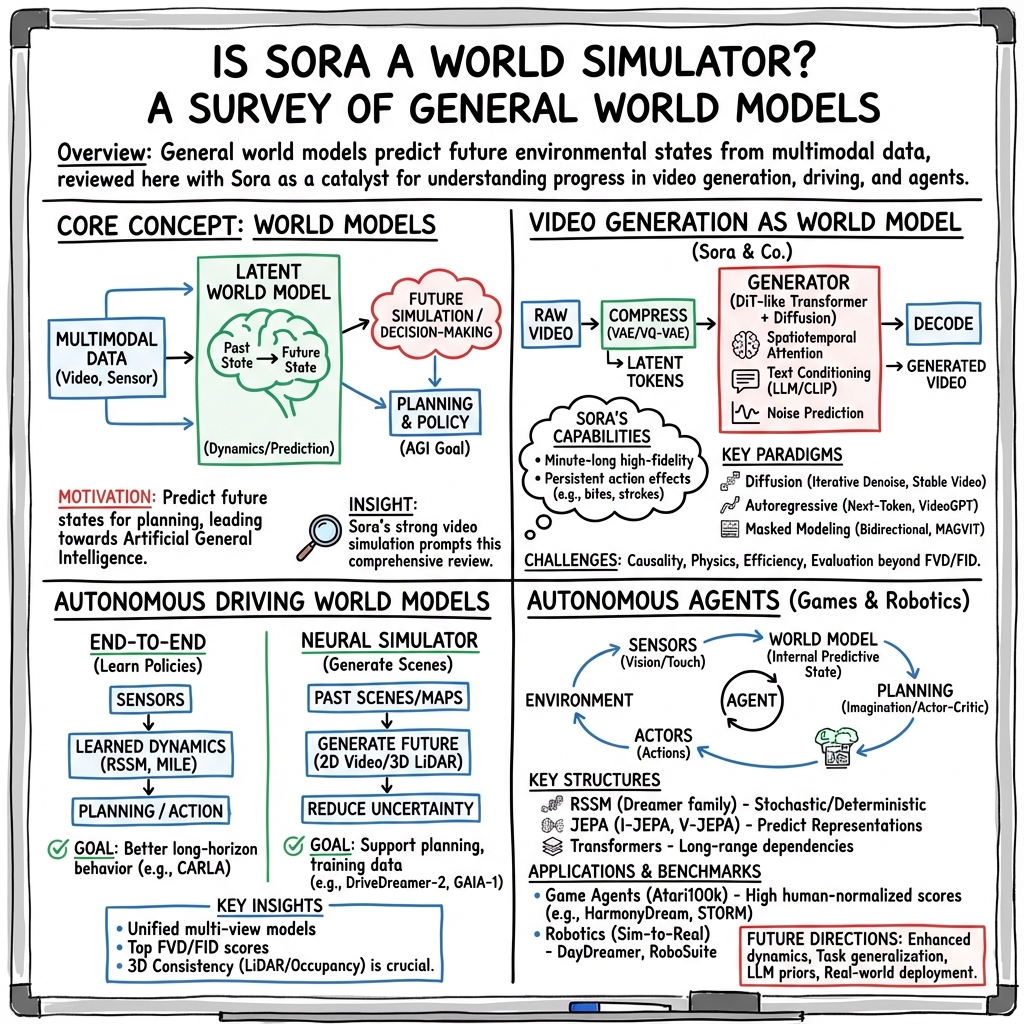

Abstract: General world models represent a crucial pathway toward achieving AGI, serving as the cornerstone for various applications ranging from virtual environments to decision-making systems. Recently, the emergence of the Sora model has attracted significant attention due to its remarkable simulation capabilities, which exhibit an incipient comprehension of physical laws. In this survey, we embark on a comprehensive exploration of the latest advancements in world models. Our analysis navigates through the forefront of generative methodologies in video generation, where world models stand as pivotal constructs facilitating the synthesis of highly realistic visual content. Additionally, we scrutinize the burgeoning field of autonomous-driving world models, meticulously delineating their indispensable role in reshaping transportation and urban mobility. Furthermore, we delve into the intricacies inherent in world models deployed within autonomous agents, shedding light on their profound significance in enabling intelligent interactions within dynamic environmental contexts. Finally, we examine the challenges and limitations of world models and discuss their potential future directions. We hope this survey can serve as a foundational reference for the research community and inspire continued innovation. This survey will be regularly updated at: https://github.com/GigaAI-research/General-World-Models-Survey.


Explain it Like I'm 14

What is this paper about?

This paper is a big review (a survey) of “world models” in artificial intelligence. A world model is like an AI’s inner video game engine: it tries to imagine how the world works and predict what will happen next. The authors focus on three areas where world models are most active today:

- Making videos (text-to-video generation)

- Helping self-driving cars

- Powering autonomous agents (like game AIs and robots)

They pay special attention to OpenAI’s Sora, a text-to-video system that many people think shows early signs of understanding real-world physics.

What questions does the paper ask?

In simple terms, the paper asks:

- What are world models and why do they matter for building general-purpose AI?

- Is Sora a step toward a true “world simulator,” not just a video generator?

- Which techniques are used to build modern world models?

- How are world models helping in driving, games, and robots?

- What challenges do these models face, and what’s next?

How did the authors study it?

This is a survey paper: the authors read and organize many recent research papers, explain the main ideas, compare approaches, and point out trends, challenges, and future directions. They also summarize how Sora likely works based on public info (since Sora is closed-source).

Explaining the core techniques with everyday analogies

To build world models that create or predict videos, researchers commonly use these building blocks:

- Generative Adversarial Networks (GANs): Imagine a forger (the generator) trying to paint a fake picture and a detective (the discriminator) trying to tell if it’s fake. They compete until the forger gets really good.

- Diffusion models: Start with TV static (pure noise) and learn to “denoise” it step by step into a clear picture or video, like sculpting a statue from a block of marble by removing randomness.

- Autoregressive models: Generate things one piece at a time, like writing a story word by word (or creating a video token by token), each step depending on what came before.

- Masked modeling: Give the model a “fill-in-the-blanks” puzzle. It learns to guess missing pieces of an image or video from the surrounding context.

- Transformers: These are powerful architectures that learn patterns and long-range relationships. Think of them as very skilled organizers that can understand how distant parts of a scene relate to each other over time.

- Text encoders (like CLIP or LLMs): These turn your prompt (“a cat surfing a wave at sunset”) into numbers the video model understands, improving how well videos match your instructions.
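The "start with static and denoise" analogy can be made concrete. The minimal NumPy sketch below implements the DDPM forward (noising) process in closed form, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. It uses the linear β schedule from the original DDPM paper (1e-4 to 0.02 over 1,000 steps). The toy clip shape is an arbitrary assumption, and no trained denoiser is included. A real model would learn to predict ε from x_t and t. Here the true noise stands in for that prediction, only to show how x_0 is recovered once ε is known.

```python
import numpy as np


def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Per-step noise variances, increasing linearly (DDPM-style schedule)."""
    return np.linspace(beta_start, beta_end, T)


def q_sample(x0: np.ndarray, t: int, alpha_bar: np.ndarray, rng: np.random.Generator):
    """Jump straight to step t of the forward process: x_t = sqrt(a_bar)*x0 + sqrt(1-a_bar)*eps."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return xt, eps


def predict_x0(xt: np.ndarray, eps_hat: np.ndarray, t: int, alpha_bar: np.ndarray) -> np.ndarray:
    """Invert the forward equation given a noise estimate (a trained network would supply eps_hat)."""
    return (xt - np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alpha_bar[t])


betas = linear_beta_schedule()
alpha_bar = np.cumprod(1.0 - betas)  # fraction of signal variance kept after each step

rng = np.random.default_rng(0)
clip = rng.uniform(-1.0, 1.0, size=(8, 3, 16, 16))  # toy "video": (frames, channels, H, W)

for t in (0, 250, 999):
    xt, eps = q_sample(clip, t, alpha_bar, rng)
    corr = np.corrcoef(clip.ravel(), xt.ravel())[0, 1]
    print(f"t={t:4d}  alpha_bar={alpha_bar[t]:.5f}  corr(x0, xt)={corr:.3f}")
```

Indices run from 0 to 999 here, so `alpha_bar[0]` corresponds to t = 1 in the DDPM paper's notation. As t grows, the correlation between the clean clip and its noised version falls toward zero. That is why generation can start from pure Gaussian noise.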

How Sora likely works (in simple terms)

Based on public reports:

- Compress first: Sora shrinks raw videos into a compact form (like turning a big movie file into a smaller set of chunks) so it’s easier to learn from and generate.

- Generate in the compressed space: A big Transformer-based diffusion model learns to “denoise” and assemble those chunks into consistent video over time.

- Understand prompts: An LLM helps Sora understand detailed text instructions.

- High-quality data matters: Sora likely uses “re-captioning,” where another model rewrites or enriches video descriptions so training data is more precise. At inference time, an LLM can rewrite short user prompts into detailed ones to get better results.
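The "compress, then chunk" idea can be illustrated with spacetime patches, which resemble the tubelet embedding used in ViViT. The NumPy sketch below cuts a latent video tensor into non-overlapping pt×ph×pw patches, flattens each patch into one token, and then inverts the operation. The latent shape (16 frames, 32×32, 4 channels) and the 2×4×4 patch size are illustrative assumptions only, because Sora's actual settings are undisclosed. In a real model, each flattened patch would also pass through a learned linear projection before reaching the Transformer.

```python
import numpy as np


def patchify(latent: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a (T, H, W, C) latent video into flattened spacetime patches of shape (N, pt*ph*pw*C)."""
    T, H, W, C = latent.shape
    if T % pt or H % ph or W % pw:
        raise ValueError("latent dimensions must be divisible by the patch size")
    x = latent.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)  # -> (nT, nH, nW, pt, ph, pw, C)
    return x.reshape(-1, pt * ph * pw * C)


def unpatchify(tokens: np.ndarray, shape: tuple, pt: int, ph: int, pw: int) -> np.ndarray:
    """Inverse of patchify: reassemble tokens into the original (T, H, W, C) tensor."""
    T, H, W, C = shape
    x = tokens.reshape(T // pt, H // ph, W // pw, pt, ph, pw, C)
    x = x.transpose(0, 3, 1, 4, 2, 5, 6)  # -> (nT, pt, nH, ph, nW, pw, C)
    return x.reshape(T, H, W, C)


rng = np.random.default_rng(0)
latent = rng.standard_normal((16, 32, 32, 4))  # hypothetical compressed video: (frames, H, W, channels)
tokens = patchify(latent, pt=2, ph=4, pw=4)
print(tokens.shape)  # (512, 128): 8*8*8 patches, each holding 2*4*4*4 values
```

The token count grows with clip duration and resolution rather than being fixed. In principle, the same Transformer can therefore consume clips of different lengths and aspect ratios, which is one reason patch-based tokenization is attractive for video.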

What did they find, and why is it important?

Here are the main takeaways the authors highlight:

- Sora shows early signs of physical understanding: It can keep paint strokes on a canvas over time and leave bite marks on a burger—suggesting it tracks cause and effect, not just pretty frames.

- Video generation and world modeling help each other: Better “understanding” of the world makes videos more realistic; generating realistic videos creates useful training data for other AI tasks.

- Self-driving benefits: World models can predict future traffic scenes (“what could happen next?”), helping cars plan safer moves. Some systems learn driving policies from video, and some even train end-to-end driving models on generated scenes.

- Agents and robots learn in imagination: Models like Dreamer learn by predicting future states, cutting down on real-world trial-and-error. Newer systems treat robot planning like “text-to-video,” using imagination to plan sequences of actions.

- Scaling works: Bigger models plus more and better data lead to surprising abilities (emergent behavior), including basic physical intuition in video.

- Language helps control: Combining video models with LLMs makes it easier to ask for specific scenes, rare events, or complex tasks using natural language.

- Data quality is crucial: Precise, rich captions and well-aligned video-text pairs make a big difference. The way data is prepared (like re-captioning) strongly affects results.

- Evaluation is hard: It’s tricky to score video realism, physics correctness, and instruction-following fairly. Better benchmarks and human preference studies are needed.
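"Learning in imagination" can be shown at toy scale. The sketch below is not Dreamer, which learns a latent dynamics model from pixels and trains an actor-critic on imagined rollouts. It substitutes the simplest version of the same principle. First, it fits a dynamics model from a few real transitions. Then it scores many candidate action sequences entirely inside that model (random-shooting planning) and executes only the best one in the real environment. The point-mass environment, planning horizon, and candidate count are all hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "real world": a 1-D point mass with state [position, velocity] and dt = 0.1.
A_TRUE = np.array([[1.0, 0.1], [0.0, 1.0]])
B_TRUE = np.array([[0.0], [0.1]])


def env_step(s: np.ndarray, a: np.ndarray) -> np.ndarray:
    return A_TRUE @ s + B_TRUE @ a


# 1) Collect a small batch of real transitions using random actions.
states, actions, next_states = [], [], []
s = np.zeros(2)
for _ in range(50):
    a = rng.uniform(-1.0, 1.0, size=1)
    s_next = env_step(s, a)
    states.append(s)
    actions.append(a)
    next_states.append(s_next)
    s = s_next

# 2) Fit a linear world model s' = [s, a] @ W by least squares.
X = np.hstack([np.array(states), np.array(actions)])  # (50, 3)
Y = np.array(next_states)  # (50, 2)
W, *_ = np.linalg.lstsq(X, Y, rcond=None)  # (3, 2)


def model_step(s: np.ndarray, a: np.ndarray) -> np.ndarray:
    return np.concatenate([s, a]) @ W


def imagine(s0: np.ndarray, seq: np.ndarray) -> np.ndarray:
    """Roll an action sequence forward inside the learned model only."""
    s = s0
    for a in seq:
        s = model_step(s, a)
    return s


# 3) Plan in imagination: score random action sequences and keep the cheapest.
def plan(s0, goal, horizon=20, n_candidates=500):
    candidates = rng.uniform(-1.0, 1.0, size=(n_candidates, horizon, 1))
    costs = [np.sum((imagine(s0, seq) - goal) ** 2) for seq in candidates]
    best = int(np.argmin(costs))
    return candidates[best], float(costs[best])


goal = np.array([0.3, 0.0])  # reach position 0.3 and come to rest
best_seq, best_cost = plan(np.zeros(2), goal)

# Only now touch the real environment, executing the imagined plan once.
s = np.zeros(2)
for a in best_seq:
    s = env_step(s, a)
print("imagined cost:", round(best_cost, 4), "real final state:", s.round(3))
```

The 500 candidate rollouts cost no real interactions. The real environment is stepped 50 times to collect data and once more for each executed plan. That saving in real trial-and-error is the sample-efficiency argument behind model-based agents.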

What does this mean for the future?

- Creative media: Easier, controllable video creation for movies, education, and art.

- Safer transport: Better prediction and planning for self-driving cars.

- Smarter robots and game AIs: More reliable behavior in complex, changing environments.

- Steps toward AGI: World models that learn general rules about reality move AI closer to broad, adaptable intelligence.

But there are challenges:

- Physics mistakes still happen, especially in long or complex scenes.

- Moving from simulation to the real world is tough (robots can fail in messy real settings).

- Training these models takes huge compute and massive datasets; data privacy and ethics matter.

- Safety and misuse: Realistic videos can be abused; responsible deployment is essential.

In short

This survey says that building AI “world models” is a key path toward general intelligence. Sora is a striking example from video generation that seems to capture bits of real-world physics. Together with progress in self-driving and autonomous agents, the field is rapidly advancing. With better data, smarter evaluation, and careful, safe scaling, world models could reshape creative tools, transportation, robotics, and more.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise list of concrete gaps and open questions the paper (and the field it surveys) leaves unresolved, intended to guide future research:

- Standardized, physics-grounded evaluation: No agreed-upon benchmarks to quantify physical plausibility, causality, conservation laws, contact dynamics, and object permanence in generated videos; need test suites with controlled interventions and counterfactuals.

- Long-horizon temporal coherence: Unclear how to maintain identity, layout, and dynamics consistency over minutes or hours; memory mechanisms, streaming generation, and evaluation for drift/brittleness are underexplored.

- Action-conditioned world modeling: Most text-to-video systems are passive; methods to condition on agent actions (and predict controllable, multi-step consequences) remain largely unaddressed.

- Causal reasoning and counterfactuals: How to endow video world models with explicit causal structure (interventions, invariances) versus purely correlational generation is unresolved.

- Uncertainty quantification: Lack of calibrated uncertainty estimates (epistemic/aleatoric) for generative world models and ways to use them in planning and safety-critical decisions.

- Closed-source Sora reproducibility: Architecture, training data, scaling, and ablations are undisclosed; independent replication, verification of “world simulator” claims, and component-wise causal attribution are missing.

- Data transparency and provenance: Sources, licensing, and demographics of large-scale video-text corpora (incl. game footage) are unclear; need transparent, legally compliant datasets with documented biases.

- Caption quality and recaptioning: Reliance on LLM-based recaptioning lacks validation; how caption richness, accuracy, and alignment quantitatively affect learned physics and controllability is not systematically studied.

- Open, high-quality datasets: Scarcity of large, public, richly annotated video-text datasets with diverse physical interactions, rare events, and long sequences; need standardized splits for training/validation/testing.

- Evaluation comparability: Reported metrics vary (FVD, CLIPSim, human preference scores), are seed-sensitive, and are dataset-dependent; protocols for reproducible evaluation, standard seeds, and robust statistical testing are needed.

- Linking generative fidelity to control: The relationship between video realism/physics metrics and downstream control performance (driving/robotics) is not established; need closed-loop, task-based benchmarks.

- Sim2real for robotics: Methods to bridge from learned visual world models to real, contact-rich manipulation (friction, compliance) with reliable transfer remain scarce.

- Autonomous driving world models: Safety under rare/long-tail scenarios, formal off-policy evaluation, closed-loop testing on public benchmarks, and handling multi-agent interactions are open.

- Multi-agent social dynamics: Modeling human-human and human-robot interactions (negotiation, intent, norms) with uncertainty and game-theoretic structure is largely unexplored in video world models.

- Partial observability and memory: Robust handling of occlusion, missing modalities, and long-term dependencies (with explicit memory/state representations) is not well addressed.

- Multimodal integration: How to fuse audio, depth, LiDAR, IMU, maps, and language in a unified, temporally consistent world model with aligned latent spaces is open.

- Compression/tokenization choices: Effects of spatial-only vs spatiotemporal compression (VAE/VQ-VAE/latent flows) on physics fidelity, editability, and efficiency need systematic ablation.

- Generation paradigm trade-offs: No unified comparison of diffusion, autoregressive, and masked modeling for world modeling (sample efficiency, controllability, OOD robustness, latency).

- Scaling laws for visual world models: Formal data–model–compute scaling relationships, sample complexity, and diminishing returns for physics understanding are not characterized.

- Efficient training/inference: Strategies for training with variable resolutions/aspect ratios and streaming long videos while controlling compute, memory, and latency remain underdeveloped.

- Continual and online learning: Mechanisms for life-long world model updates (without catastrophic forgetting), test-time adaptation, and safety guarantees during updates are missing.

- Interpretability and diagnostics: Tools to probe learned dynamics, detect hallucinated physics, attribute errors, and intervene to correct failure modes are lacking.

- Physical priors and hybrids: How to inject differentiable physics, constraints, or hybrid simulators into large generative models to improve faithfulness and data efficiency remains open.

- Robustness and distribution shift: Methods to detect and withstand shifts (weather, sensors, regions, cultural contexts) and to mitigate spurious correlations are not established.

- Safety and governance: Formal risk assessments, red-teaming, misuse prevention (deepfakes, deceptive simulations), and reporting of environmental/compute costs are absent.

- Language grounding and control: Reliability of LLM-based prompt rewriting and cross-attention grounding for precise spatiotemporal control is not quantified; failure modes under ambiguous/long prompts need study.

- Benchmarks for rare events: Lack of curated suites for edge cases (collisions, near misses, tool breakage) with measurable success criteria and safety margins.

- Memory and streaming design: How to architect and evaluate short-/long-term memory modules in streaming text-to-video (e.g., preventing drift and preserving state) remains unclear.

- Data curricula and sampling: Optimal curricula for durations, aspect ratios, motion complexity, and physics richness, and their effect on generalization and controllability, are not known.
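Several of the gaps above concern metrics such as FVD. FVD fits a Gaussian to feature vectors of real videos and another to those of generated videos. It then reports the Fréchet distance between the two Gaussians, d² = ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^{1/2}). The NumPy sketch below computes only that final distance. In actual FVD, the features come from a pretrained video network (I3D in the original FVD paper), and random arrays stand in for them here. Because μ and Σ are estimated from finite samples, the score shifts with sample size and random seed. This is part of the comparability problem noted above.

```python
import numpy as np


def _sqrtm_psd(m: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    m = 0.5 * (m + m.T)  # symmetrize against round-off
    w, v = np.linalg.eigh(m)
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T


def frechet_distance(feats_real: np.ndarray, feats_gen: np.ndarray) -> float:
    """Fréchet distance between Gaussians fitted to two (n_samples, dim) feature sets."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((S_r S_g)^{1/2}) equals Tr((S_r^{1/2} S_g S_r^{1/2})^{1/2}); the latter is symmetric PSD.
    root_r = _sqrtm_psd(cov_r)
    cross = _sqrtm_psd(root_r @ cov_g @ root_r)
    return float(np.sum((mu_r - mu_g) ** 2) + np.trace(cov_r) + np.trace(cov_g) - 2.0 * np.trace(cross))


rng = np.random.default_rng(0)
real = rng.standard_normal((2000, 16))  # stand-in for per-video features
for seed in (1, 2):
    gen = np.random.default_rng(seed).standard_normal((2000, 16)) * 1.1 + 0.05
    print(seed, round(frechet_distance(real, gen), 4))
```

The two seeds draw from the same "generator" distribution but give slightly different scores. This is why reported FVD values should state the sample count and, ideally, be averaged over several seeds.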

Glossary

- 3D convolution: Convolutional operators extended to three dimensions to jointly capture spatial and temporal patterns in video data. "Convolution-based models ... usually introduce 3D convolution layers to building the spatial-temporal relationships in video data."

- 3D-VQ tokenizer: A vector-quantization-based tokenization module that encodes videos into discrete latent tokens in 3D (spatiotemporal) space. "MAGVIT \cite{yu2023magvit} encodes videos into tokens through a 3D-VQ tokenizer and leverages a masked token modeling paradigm to accelerate the training."

- Adaptive layer norm: A conditioning mechanism that modulates layer normalization parameters using external signals (e.g., timesteps) to inject conditioning information. "We omit the injection of timestep information for brevity, which can be achieved with adaptive layer norm blocks \cite{perez2018film}."

- AGI (artificial general intelligence): A hypothetical form of AI capable of performing any intellectual task a human can. "In the pursuit of AGI, the development of general world models stands as a fundamental avenue."

- Autoregressive modeling: A generation approach that predicts each token conditioned on previously generated tokens in sequence. "Autoregressive modeling has been explored in both language generation methods \cite{radford2018improving,radford2019language,brown2020language} and image generation tasks \cite{chen2020generative,yu2021diverse,yu2022scaling,lee2022autoregressive}."

- Bidirectional attentions: Attention mechanisms that consider information in both forward and backward temporal directions to fill or refine sequences. "and then recursively interpolates frames with bidirectional attentions."

- Bidirectional Transformer: A Transformer that attends to context on both sides of a position (not strictly causal), enabling parallel reconstruction or masked prediction. "Then, a bidirectional Transformer is trained to refine the conditional tokens, predict masked tokens, and reconstruct target tokens."

- Cascaded sampling pipeline: A staged generation process where outputs are progressively refined by subsequent models (e.g., base model followed by upsamplers). "Imagen Video \cite{ho2022imagen} proposes a cascaded sampling pipeline for video generation."

- CLIP: A contrastively trained image–text model used to align visual and textual representations for conditioning and evaluation. "CLIP \cite{radford2021learning} is a typical pre-trained multi-modal model, which has been widely used in image/video generation models \cite{podell2023sdxl, ramesh2022hierarchical, rombach2022high, svd}."

- Codebook: The discrete set of prototype vectors used in vector quantization to map continuous embeddings to discrete tokens. "The authors design a lookup-free quantization method to build the codebook and propose a joint image-video tokenization model, enabling it can tackle image and video generation jointly."

- Contrastive learning: A self-supervised learning paradigm that brings semantically similar pairs closer and dissimilar pairs apart in embedding space. "It is pre-trained with large-scale image-text pairs through contrastive learning \cite{jaiswal2020survey} and demonstrates superior performance across various tasks."

- Cross-attention: An attention mechanism that conditions one modality or sequence on another (e.g., text conditioning for images/videos). "The text-to-image cross-attention block is a potential solution, whose effectiveness has been proven in \cite{chen2023pixart}."

- Denoising Diffusion Probabilistic Model (DDPM): A generative model that learns to reverse a gradual noising process to synthesize data. "Diffusion-based methods have started to dominate image generation since the Denoising Diffusion Probabilistic Model (DDPM) \cite{ho2020denoising}, which learns a reverse process to generate an image from a Gaussian distribution."

- Diffusion process: The forward noising process in diffusion models that gradually corrupts data with noise over timesteps. "It has two processes: the diffusion process (also known as a forward process) and the denoising process (also known as the reverse process)."

- Disentanglement of visual dynamics: Structuring models to separate underlying factors of motion and appearance to reduce complexity and improve generalization. "Early methods \cite{mile,isodream,sem2} attempt to address these challenges by reducing the search space and incorporating explicit disentanglement of visual dynamics."

- End-to-end autonomous driving: An approach that maps raw sensory inputs directly to driving actions using a single learned pipeline. "aiding in end-to-end autonomous driving."

- Fréchet Video Distance (FVD): A metric for comparing distributions of videos by measuring differences between feature statistics of generated and real videos. "measure the performance through Fréchet Video Distance (FVD) \cite{unterthiner2018towards}."

- Generative Adversarial Network (GAN): A framework with a generator and discriminator trained adversarially to synthesize realistic samples. "Before the success of diffusion-based methods, GAN introduced in \cite{goodfellow2014generative} have always been the mainstream methods in image generation."

- Joint-Embedding Predictive Architecture (JEPA): A predictive architecture that learns in a shared embedding space by forecasting representations rather than raw data. "LeCun's proposal of the Joint-Embedding Predictive Architecture (JEPA) \cite{lecun2022jepa} heralds a significant departure from traditional generative models."

- Joint image-video tokenization: A tokenization approach that unifies image and video data into a common discrete representation. "and propose a joint image-video tokenization model, enabling it can tackle image and video generation jointly."

- Latent space: A lower-dimensional representation space in which models operate to compress and generate data efficiently. "the generation model is built up on DiT \cite{peebles2023scalable}. Since the original DiT is designed for class-to-image generation, two modifications should be conducted on it... which is trained in the latent space."

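- Lookup-free quantization: A quantization method (used in MAGVIT-v2) that replaces the nearest-neighbor search over a learned embedding table with an implicit codebook, quantizing each latent dimension independently (for example, by its sign) so that large vocabularies remain cheap. "The authors design a lookup-free quantization method to build the codebook"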

- Masked modeling: A self-supervised learning and generation paradigm that masks parts of the input and trains models to reconstruct the missing content. "Masked modeling is first designed for self-supervised learning for LLMs \cite{devlin2018bert,liu2019roberta,jiao2019tinybert} and image models \cite{bao2021beit,he2022masked}."

- Multi-head self-attention: An attention mechanism that uses multiple attention heads to capture diverse relationships within a sequence. "then projected into tokens and finally processed by a series of multi-head self-attention and multi-layer perceptron blocks."

- Multi-layer perceptron (MLP): A feed-forward neural network block typically used within Transformer layers for non-linear feature transformation. "Since the self-attention blocks and MLP blocks in DiT are designed for spatial modeling, extra blocks for temporal modeling should be added."

- Policy networks: Neural networks that output actions (or action distributions) for agents based on observed states. "they build policy networks applicable to various contexts, either virtual (e.g., programs in games or simulated environments) or physical (e.g., robots)."

- Re-captioning: The process of regenerating or enriching captions for training data using a strong captioning model to improve alignment and detail. "Sora adopts the re-captioning technique proposed in DALL-E 3 \cite{betker2023improving}."

- Scaling laws: Empirical relationships showing how model performance scales with data, compute, and parameters. "This suggests that there also exsiting scaling laws in the visual field and directs a promising way to build large vision models or even world models."

- Self-supervised learning: Learning representations from unlabeled data by solving proxy tasks (e.g., predicting masked parts). "Masked modeling is first designed for self-supervised learning for LLMs \cite{devlin2018bert,liu2019roberta,jiao2019tinybert} and image models \cite{bao2021beit,he2022masked}."

- Spatial-temporal attention: Attention mechanisms that jointly model spatial and temporal relationships in video sequences. "where and denotes the spatial-temporal attention and text-to-image cross attention blocks, respectively."

- Super-resolution: Techniques that increase the spatial or temporal resolution of generated media via dedicated upsamplers. "the authors cascade spatial and temporal super-resolution models to progressively improve the resolution and frame rate of generated videos."

- Transformer: A neural architecture based on attention mechanisms for sequence modeling. "The Transformer is proposed in \cite{vaswani2017attention} for machine translation tasks and applied to vision recognition by ViT \cite{dosovitskiy2020image}."

- Tubelet embedding: A video tokenization technique that embeds spatiotemporal tubes (patches across frames) into vectors. "This operation is similar to the tubelet embedding technique in ViViT \cite{arnab2021vivit}."

- U-Net: An encoder–decoder architecture with skip connections widely used for pixel-wise prediction and generation. "Typically, U-Net \cite{ronneberger2015u} builds a U-shape architecture based on a backbone model for image segmentation tasks."

- Variational Autoencoder (VAE): A generative model that learns a probabilistic latent representation using variational inference. "the compression model is built based on VAE \cite{kingma2013auto} or VQ-VAE \cite{van2017neural}."

- Vector-Quantized Variational Autoencoder (VQ-VAE): A discrete variant of VAE that uses a codebook to quantize latent representations. "the compression model is built based on VAE \cite{kingma2013auto} or VQ-VAE \cite{van2017neural}."

- Video captioner: A model that generates textual descriptions for videos, often used to improve training data quality. "a video captioner is trained with high-quality video-text pairs, where the text is well-aligned with the corresponding video and contains diverse and descriptive information."

- Vision Transformer (ViT): A Transformer architecture applied to images by treating image patches as tokens. "The Transformer is proposed in \cite{vaswani2017attention} for machine translation tasks and applied to vision recognition by ViT \cite{dosovitskiy2020image}."

- Window-attention: An attention strategy that restricts attention computation within local windows to improve efficiency. "After that, distillation \cite{touvron2021training}, window-attention \cite{liu2021swin}, and mask image modeling \cite{bao2021beit, he2022masked} approaches are introduced to improve the training or inference efficiency of vision Transformers."

- World models: Models that learn predictive representations of environments to understand and simulate future states. "General world models represent a crucial pathway toward achieving AGI, serving as the cornerstone for various applications ranging from virtual environments to decision-making systems."

- World simulator: A system capable of simulating consistent, physically plausible interactions and outcomes within a generated environment. "Sora can work as a world simulator, since it can understand the result of an action."
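The Codebook and VQ-VAE entries describe mapping continuous encoder outputs to discrete tokens. Below is a minimal NumPy sketch of that lookup step. Each latent vector is replaced by its nearest codebook entry under squared Euclidean distance, and that entry's index becomes the token. The codebook size and dimension are arbitrary assumptions. Training is omitted, including the codebook and commitment losses and the straight-through gradient. This is the standard lookup-based variant, not the lookup-free quantization listed above.

```python
import numpy as np


def vector_quantize(z: np.ndarray, codebook: np.ndarray):
    """Map each row of z (n, d) to its nearest codebook row (K, d); return (token ids, quantized vectors)."""
    # ||z - e||^2 = ||z||^2 - 2 z.e + ||e||^2, computed for all (z, e) pairs at once.
    dists = (
        np.sum(z**2, axis=1, keepdims=True)
        - 2.0 * z @ codebook.T
        + np.sum(codebook**2, axis=1)
    )
    ids = np.argmin(dists, axis=1)
    return ids, codebook[ids]


rng = np.random.default_rng(0)
codebook = rng.standard_normal((16, 8))  # K = 16 prototypes of dimension 8 (illustrative sizes)
z = codebook[[3, 7, 7, 0]] + 1e-3 * rng.standard_normal((4, 8))  # encoder outputs near known entries
ids, z_q = vector_quantize(z, codebook)
print(ids)  # [3 7 7 0]
```

Expanding the squared distance avoids materializing an (n, K, d) difference tensor. This matters when a video tokenizer quantizes many thousands of latent vectors per clip.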

Practical Applications

Practical Applications of the Survey’s Findings, Methods, and Innovations

Below are actionable, real-world applications derived from the survey’s coverage of Sora, general world models, video generation techniques (diffusion, Transformer-based DiT/Latte, autoregressive, masked modeling), autonomous-driving world models (GAIA-1, DriveDreamer, Panacea, Drive-WM), and agent/robotics models (Dreamer, UniPi, UniSim, RoboDreamer, JEPA). They are grouped under Immediate Applications and Long-Term Applications and linked to relevant sectors. Each item notes likely tools/workflows and key assumptions or dependencies.

Immediate Applications

- Media and Entertainment: text-to-video content generation and editing

  - Sector: media, advertising, education

  - What: Rapid production of short-form videos from text prompts; previsualization (storyboards → animatics → shots); text-guided video editing (style, objects, motion) using diffusion/Transformer pipelines (e.g., Stable Video Diffusion, Latte-like DiT), masked modeling for fast iterations (MAGVIT/WorldDreamer), and LLM-based prompt rewriting/captioning.

  - Workflow/Tooling: LLM prompt enhancement; CLIP/T5 encoders; video tokenizer + latent diffusion; streaming generation for longer clips; captioners for dataset curation; human-in-the-loop review.

  - Dependencies/Assumptions: High-quality, aligned video-text data (re-captioning helps); compute and GPU availability for long videos; physical consistency still imperfect; copyright and content safety checks.

- E-commerce and Marketing: automated product demos and variants

  - Sector: retail, advertising

  - What: Controllably generate product explainer videos (usage scenarios, environment changes, language variants); A/B test multiple creative variants.

  - Workflow/Tooling: Text-to-video plus controllable conditioning (style, camera, object attributes); CLIP/LLM encoders to maintain prompt fidelity; batch variant generation; embedded review pipelines.

  - Dependencies/Assumptions: Accurate depiction of product function and safety; risk of hallucinations; brand and legal compliance.

- Synthetic data for computer vision and perception

  - Sector: software/AI tooling, autonomous systems

  - What: Controllable synthetic video datasets to augment training for detection/segmentation/forecasting; generate rare/long-tail edge cases; domain randomization with diffusion/Transformer world models.

  - Workflow/Tooling: DriveDreamer/Panacea-like controllable scene generation; MAGVIT/WorldDreamer for fast parallel generation; benchmark with FVD, CLIPSim, VBench/EvalCrafter/FETV; data curation with re-captioning; fine-tuning perception models.

  - Dependencies/Assumptions: Domain gap vs. real-world data; metrics sensitive to seeds; need for calibration/selection; regulatory constraints if used in safety-critical pipelines.

- Autonomous driving: simulation and perception training with generative world models

  - Sector: transportation, robotics

  - What: Generate diverse driving scenarios from text or structured prompts (weather, traffic, maneuvers) for simulation-based testing and perception training; early-stage policy training with synthetic data (Drive-WM shows feasibility).

  - Workflow/Tooling: GAIA-1/DriveDreamer-style world models; LLM-conditioned scene control (DriveDreamer2); integration with AD stacks and scenario libraries; robustness evaluation.

  - Dependencies/Assumptions: Physical and behavioral realism; sim-to-real transfer and distribution shift; safety validation; compliance with AV testing standards.

- Game development: learning in imagination and rapid content prototyping

  - Sector: gaming, simulation

  - What: NPC policy learning via world models to reduce required interactions (Dreamer series); quick generation of cutscenes/cinematics from scripts; environment previews for level design.

  - Workflow/Tooling: DreamerV3 for imagination-based RL; VideoPoet/MAGVIT-v2 for token-based video generation; LLM for narrative control; plug-ins for game engines.

  - Dependencies/Assumptions: Integration with physics/game engines; compute budgets; designer oversight to manage artifacts.

- Robotics and industrial manipulation: pre-training via generative simulators

  - Sector: robotics, manufacturing

  - What: Pre-train manipulation policies in generative simulators (UniSim) and with policy-as-video formulations (UniPi) for faster task generalization; visual planning (RoboDreamer) for novel tasks.

  - Workflow/Tooling: Text-to-video policies; scene/object libraries; sim pipelines with camera/sensor models; zero-/few-shot deployment to real robots with validation tasks.

  - Dependencies/Assumptions: Sim-to-real gap (friction, contact dynamics, compliance); safety procedures; calibration to hardware kinematics; multi-sensory fidelity.

- Education and training: visual instruction and lab simulations

  - Sector: education, corporate training

  - What: Generate tailored explanatory videos and virtual lab scenarios from curricula; multilingual variants; accessibility improvements via re-captioning.

  - Workflow/Tooling: Text-to-video with LLM-enhanced prompts; captioners for precise descriptions; streaming generation for longer modules; LMS integration.

  - Dependencies/Assumptions: Accuracy of conceptual content; teacher oversight; age-appropriate content safeguards.

- Accessibility: automated detailed captioning and alt-descriptions

  - Sector: public services, media

  - What: Improve video accessibility with rich, consistent captions via video captioners (GPT-4V-like) and re-captioning pipelines; augment descriptive content for the visually impaired.

  - Workflow/Tooling: Multi-modal LLMs for captioning; alignment checks; human review for correctness.

  - Dependencies/Assumptions: Captioner quality; domain coverage; ethical considerations.

- Evaluation and governance: benchmarking and synthetic data policy

  - Sector: academia, policy, compliance

  - What: Adopt standardized benchmarks (VBench, EvalCrafter, FETV) for fair comparisons; develop institutional policies for synthetic data usage in training/testing; auditing workflows for generative pipelines.

  - Workflow/Tooling: Public benchmarks; reproducible evaluation protocols; dataset documentation; synthetic data governance frameworks.

  - Dependencies/Assumptions: Community consensus; legal and ethical compliance; transparency in data provenance.
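Several workflows above benchmark text alignment with CLIPSim. As commonly described, CLIPSim averages the CLIP similarity between the prompt and each generated frame. The sketch below computes that average from precomputed embeddings. Producing real embeddings requires an actual CLIP model, which is out of scope here, so the vectors are hypothetical placeholders. Implementations differ in details (for example, some scale the cosine by 100 or clamp negative values to zero), so scores are only comparable under the same convention.

```python
import numpy as np


def clip_sim(text_emb: np.ndarray, frame_embs: np.ndarray) -> float:
    """Mean cosine similarity between one text embedding (d,) and per-frame image embeddings (n_frames, d)."""
    t = text_emb / np.linalg.norm(text_emb)
    f = frame_embs / np.linalg.norm(frame_embs, axis=1, keepdims=True)
    return float(np.mean(f @ t))


rng = np.random.default_rng(0)
text = rng.standard_normal(512)  # placeholder for a CLIP text embedding
aligned = text + 0.5 * rng.standard_normal((16, 512))  # frames that roughly match the prompt
unrelated = rng.standard_normal((16, 512))  # frames unrelated to the prompt
print(round(clip_sim(text, aligned), 3), round(clip_sim(text, unrelated), 3))
```

Averaging per frame rewards prompt alignment but says nothing about temporal coherence or physics. CLIPSim is therefore usually reported alongside FVD or human preference studies rather than on its own.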

Long-Term Applications

- General world simulators for planning and decision support

  - Sector: urban planning, disaster response, climate resilience, logistics

  - What: High-fidelity, long-horizon simulation environments where agents predict multiple futures under constraints; scenario planning for infrastructure, evacuation, congestion management.

  - Workflow/Tooling: DiT-scale world models with spatial-temporal compression; StreamingT2V for long sequences; multimodal inputs (text, maps, sensor data); interactive “what-if” interfaces.

  - Dependencies/Assumptions: Physics and sociobehavioral fidelity; scalable compute; robust validation; governance of synthetic simulations.

- End-to-end autonomous driving with language-conditioned world models

- Sector: transportation, mobility services

- What: Policies trained on richly simulated, controllable worlds; natural language control (e.g., “handle sudden cut-in ahead”); anticipatory multi-future planning.

- Workflow/Tooling: DriveDreamer2-like language conditioning; Drive-WM-style end-to-end training with synthetic augmentation; real-world validation loops; digital twins of cities.

- Dependencies/Assumptions: Regulatory approval; extensive real-world trials; safety certification; continuous monitoring and fail-safes.

- Household and service robots with policy-as-video generalization

- Sector: robotics, eldercare, hospitality, logistics

- What: Robots that learn broadly applicable skills from video-form policies (UniPi), plan with object-action sequences (RoboDreamer), and transfer to novel environments with minimal data.

- Workflow/Tooling: Generative simulators (UniSim) for dynamic interactions; JEPA-like semantic representations for multi-modal understanding; standardized teleoperation-to-policy pipelines.

- Dependencies/Assumptions: Rich multi-sensory datasets (vision, touch, audio); safe hardware; task generalization across homes; user acceptance and compliance.

- Healthcare simulation and training using world models

- Sector: healthcare, medical education

- What: Surgical training and clinical decision simulations with physically consistent dynamics; scenario generation for rare events; augmented patient trajectory prediction.

- Workflow/Tooling: Long-horizon video/simulation generation; integration with medical device data; curriculum-aligned scenarios; validation against clinical outcomes.

- Dependencies/Assumptions: High-stakes accuracy; rigorous oversight and certification; privacy-preserving data; interdisciplinary collaboration.

- Scientific discovery and digital twins for complex systems

- Sector: materials science, biology, energy systems

- What: World-model-based simulations to test hypotheses, accelerate experiment design, and operate digital twins of grids, factories, or ecosystems for optimization.

- Workflow/Tooling: JEPA for semantic representations; hybrid physics-informed generative models; multimodal experimentation assistants; collaborative notebooks.

- Dependencies/Assumptions: Reliable physics grounding; data availability across modalities; interpretability; alignment with domain standards.

- Finance and risk management: learned scenario generation

- Sector: finance, insurance

- What: Generate plausible multi-step macro and micro scenarios for stress testing, risk assessments, and strategy backtesting with agent-based world models.

- Workflow/Tooling: Multi-agent simulators; LLM-conditioned scenario narratives; compliance-reviewed model governance; human-in-the-loop oversight.

- Dependencies/Assumptions: Faithful causal structures; avoidance of spurious correlations; regulatory and auditability requirements.

- Fully synthetic long-form media with consistent physical dynamics

- Sector: film, TV, streaming

- What: Feature-length content produced with world-model-backed generators that maintain temporal and physical consistency; interactive, personalized narratives.

- Workflow/Tooling: StreamingT2V-class long video generation; high-capacity DiT-like models; robust asset libraries and control interfaces; rights management systems.

- Dependencies/Assumptions: Scale and cost of compute; creative direction; legal/IP frameworks; audience acceptance of synthetic media.

- Standards, certification, and governance for safety-critical world models

- Sector: policy, standards bodies, compliance

- What: Formal evaluation protocols, certification schemes, and lifecycle governance for world models used in autonomous vehicles (AVs), robotics, and healthcare; watermarking and provenance for synthetic data/videos.

- Workflow/Tooling: Cross-stakeholder standards; third-party audits; incident reporting; continuous validation; transparency documentation.

- Dependencies/Assumptions: Regulatory coordination; industry adoption; robust testing infrastructure; public trust.

Notes on cross-cutting dependencies:

- Scaling laws and compute: Many applications rely on large Transformer-based models (DiT/Latte-like) trained on massive datasets; compute availability and efficiency (compression, streaming memory, parallel masked decoding) are pivotal.

- Data quality: Re-captioning and high-quality video-text alignment are critical; synthetic data governance and provenance matter for trust and legal compliance.

- Evaluation: Fair benchmarking (controlling for seed sensitivity and dataset variability) and human-preference assessment remain open needs.

- Fidelity and safety: Physical plausibility, sim-to-real transfer, and robust validation are core assumptions before deployment in safety-critical domains.
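The note on compute says that spatial-temporal compression matters for efficiency. A worked token count shows why. The sketch below assumes a video autoencoder that downsamples time by `t_down` and each spatial side by `s_down`, followed by patchification with sizes `patch_t` and `patch_s`. The default factors (4×, 8×, 2×2 patches) are illustrative assumptions only. They are not the published settings of Sora, whose exact configuration is not disclosed, nor of any specific DiT/Latte model.

```python
def latent_token_count(
    frames: int,
    height: int,
    width: int,
    t_down: int = 4,   # assumed temporal downsampling of the video autoencoder
    s_down: int = 8,   # assumed spatial downsampling per side
    patch_t: int = 1,  # assumed temporal patch size of the Transformer
    patch_s: int = 2,  # assumed spatial patch size per side
) -> int:
    """Number of Transformer tokens for one clip after compression and patchify."""
    t_factor = t_down * patch_t
    s_factor = s_down * patch_s
    for name, size, factor in (
        ("frames", frames, t_factor),
        ("height", height, s_factor),
        ("width", width, s_factor),
    ):
        if size % factor:
            raise ValueError(f"{name}={size} is not divisible by {factor}")
    return (frames // t_factor) * (height // s_factor) * (width // s_factor)


# 16 frames at 256x256: with compression vs. patchifying raw pixels directly.
compressed = latent_token_count(16, 256, 256)               # 4 * 16 * 16 = 1024
raw = latent_token_count(16, 256, 256, t_down=1, s_down=1)  # 16 * 128 * 128 = 262144
ratio = raw // compressed                                   # 256x fewer tokens
print(compressed, raw, ratio, ratio ** 2)  # 1024 262144 256 65536
```

Full self-attention cost grows with the square of the token count. Under these assumed factors, a 256× reduction in tokens therefore cuts pairwise attention work by roughly 65,536×. This is why compression and streaming memory are pivotal at DiT scale.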
