- The paper introduces collaborative edge training that leverages distributed edge devices to sustainably train parameter-rich AI models.

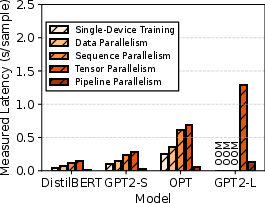

- The paper evaluates various parallelism strategies, showing that pipeline and data parallelism can reduce latency and energy consumption.

- The paper identifies key challenges in resource orchestration, participant incentives, and secure, efficient edge computing.

Implementation of Big AI Models for Wireless Networks with Collaborative Edge Computing

Introduction

The paper introduces collaborative edge training, an approach for training big AI models (such as Transformer-based architectures with millions or billions of parameters) on resource-constrained edge devices. Traditional centralized cloud-based training may not be sustainable: it relies on energy-hungry datacenters and raises privacy concerns because raw data must be transmitted. Collaborative edge training instead pools the distributed computational resources of trusted edge devices within wireless networks to balance sustainability, performance, and privacy.

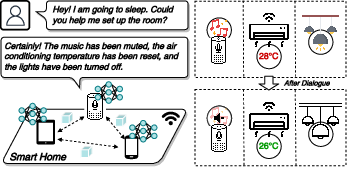

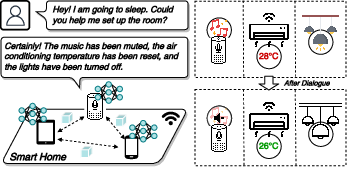

Figure 1: An example scenario of intelligent voice assistant in a smart home, which is driven by a collaboratively trained big AI model.

Background and Motivation

Big AI models are computationally intensive, largely because of the Transformer architecture. They have delivered significant performance gains across tasks such as NLP, computer vision, and robotics control, yet their training requires substantial resources. These computational demands heighten sustainability concerns in edge environments.

Limitations of Existing Training Mechanisms

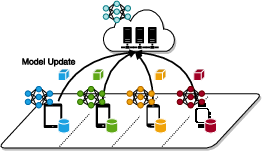

Existing mechanisms, namely centralized cloud training, on-device training, and federated learning, each excel in some respects but fall short when used alone: they suffer from cost inefficiency, privacy concerns, or constrained computational capacity. Collaborative edge training mitigates these limitations by harnessing dormant resources within edge networks, offering an efficient, private, and localized alternative to cloud-dependent training.

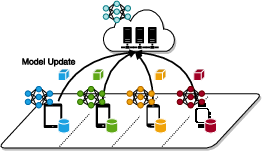

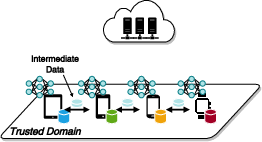

Figure 2: Existing AI model training mechanisms versus collaborative edge training.

Collaborative Edge Training Framework

Framework Overview

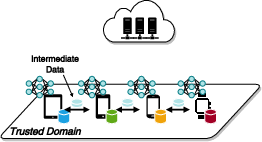

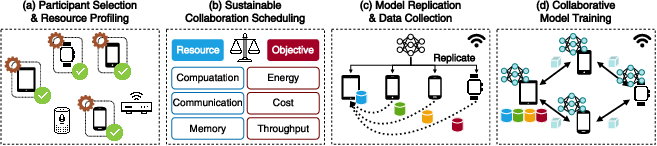

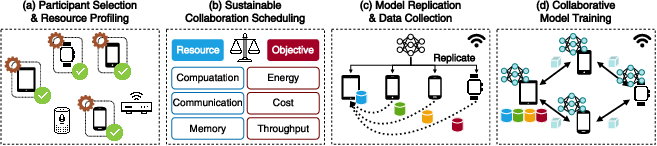

Collaborative edge training follows a four-phase workflow: device selection, orchestration of resource utilization, model replication across devices, and training execution.

Figure 3: Overview of collaborative edge training workflow.
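The four phases can be pictured as a small pipeline of functions. The following is a minimal sketch under stated assumptions, not the paper's implementation: the `Device` fields, the `min_tflops` threshold, and the compute-proportional batch split are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    tflops: float   # hypothetical compute capacity
    trusted: bool   # whether the device belongs to the trusted group


def select_devices(candidates, min_tflops):
    # Phase 1: keep only trusted devices with enough compute.
    return [d for d in candidates if d.trusted and d.tflops >= min_tflops]


def plan_orchestration(devices, global_batch):
    # Phase 2: split the global batch in proportion to device compute.
    # This is one possible plan (an assumption, not taken from the paper).
    if not devices:
        raise ValueError("no eligible devices")
    total = sum(d.tflops for d in devices)
    shares = [int(global_batch * d.tflops / total) for d in devices]
    shares[0] += global_batch - sum(shares)  # rounding remainder goes to the first device
    return dict(zip((d.name for d in devices), shares))


def replicate_model(weights, devices):
    # Phase 3: every selected device receives its own copy of the weights.
    return {d.name: list(weights) for d in devices}


def run_workflow(candidates, weights, global_batch, min_tflops=1.0):
    devices = select_devices(candidates, min_tflops)
    plan = plan_orchestration(devices, global_batch)
    replicas = replicate_model(weights, devices)
    # Phase 4: training execution would iterate over `plan` and `replicas`.
    # Here both are returned so the flow can be inspected.
    return plan, replicas


if __name__ == "__main__":
    candidates = [
        Device("phone", 2.0, True),
        Device("tv", 6.0, True),
        Device("guest-laptop", 8.0, False),  # untrusted, filtered out
        Device("watch", 0.5, True),          # too weak, filtered out
    ]
    plan, replicas = run_workflow(candidates, [0.1, 0.2], global_batch=32)
    print(plan)
```

In this sketch the untrusted and underpowered devices never receive a model replica, which mirrors the summary's emphasis on trusted devices within the edge network.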

Sustainable Orchestration Strategies

Collaborative edge training introduces scheduling choices that optimize training sustainability, emphasizing efficient resource allocation, fault tolerance, and participant incentivization across edge domains. These orchestration strategies are pivotal to achieving lower latency, lower energy consumption, and effective task distribution.

Case Study: Parallelism Impact Evaluation

This section explores parallelism strategies specific to Transformers to ascertain their effect on energy consumption and system latency within collaborative training setups.
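Data and pipeline parallelism, the two strategies this summary highlights, differ in what they split. Data parallelism splits the batch and keeps a full model replica per device; pipeline parallelism splits the model's layers into contiguous stages. The sketch below illustrates both with a toy one-parameter model and plain Python functions standing in for layers. All function names are hypothetical and chosen only for illustration.

```python
def grad_mse(w, xs, ys):
    # Gradient of mean((w * x - y) ** 2) with respect to w.
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w, xs, ys, num_devices):
    # Data parallelism: each device holds a full replica and a batch shard.
    # Shard gradients are combined with a size-weighted average.
    shards = [(xs[i::num_devices], ys[i::num_devices]) for i in range(num_devices)]
    shards = [(sx, sy) for sx, sy in shards if sx]  # drop empty shards
    return sum(grad_mse(w, sx, sy) * len(sx) for sx, sy in shards) / len(xs)


def split_into_stages(layers, num_stages):
    # Pipeline parallelism: contiguous groups of layers, one group per device.
    base, extra = divmod(len(layers), num_stages)
    stages, start = [], 0
    for s in range(num_stages):
        size = base + (1 if s < extra else 0)
        stages.append(layers[start:start + size])
        start += size
    return stages


def pipeline_forward(stages, x):
    # Activations flow from one stage (device) to the next.
    for stage in stages:
        for layer in stage:
            x = layer(x)
    return x


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
    print(grad_mse(1.5, xs, ys), data_parallel_grad(1.5, xs, ys, 2))

    layers = [lambda v: v + 1, lambda v: v * 2, lambda v: v - 3]
    print(pipeline_forward(split_into_stages(layers, 2), 5))
```

The size-weighted average makes the data-parallel gradient match the full-batch gradient, so the choice between the two strategies affects communication and device load rather than the mathematical result.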

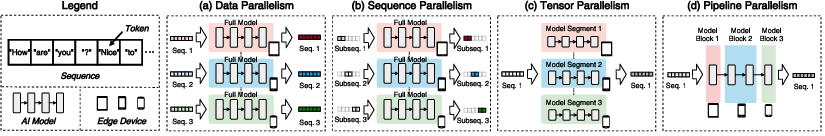

Types of Parallelism

Empirical Insights

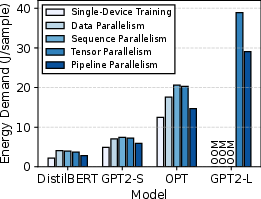

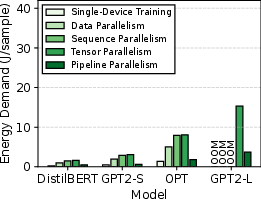

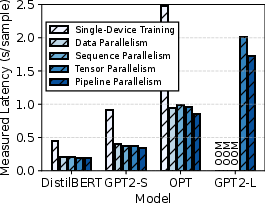

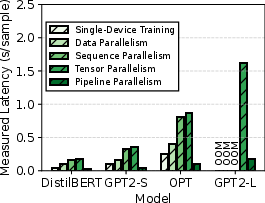

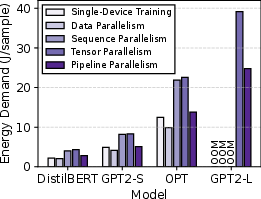

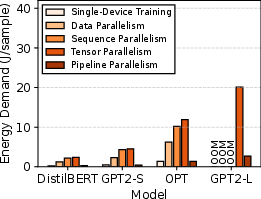

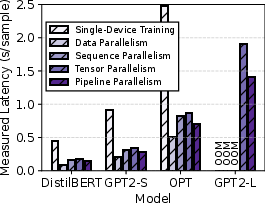

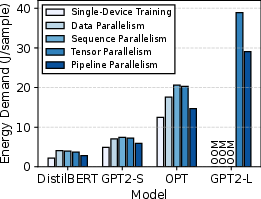

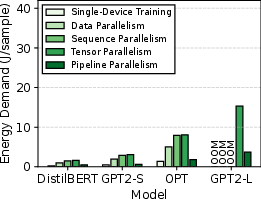

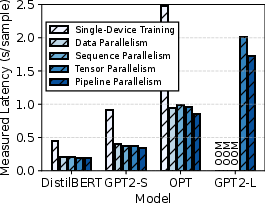

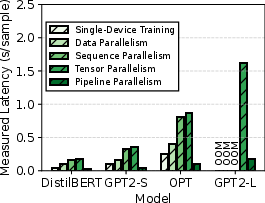

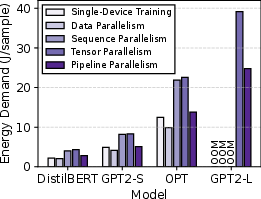

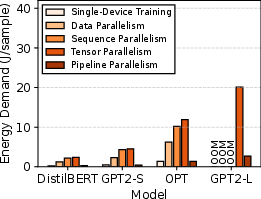

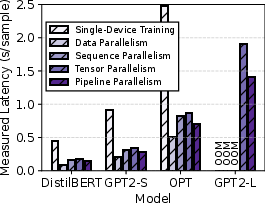

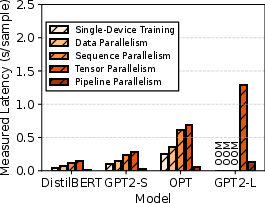

Measurements from edge testbeds indicate that pipeline and data parallelism offer the best balance between energy efficiency and latency. They show the strongest sustainability benefits, notably in computation-heavy environments where lower GPU utilization is pivotal.

Figure 5: Energy demand and measured latency per sample of collaborative edge training under different parallelism in a homogeneous testbed.

Figure 6: Energy demand and measured latency per sample of collaborative edge training under different parallelism in a heterogeneous testbed.
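Both figures report energy and latency on a per-sample basis. As a rough illustration of how such metrics can be derived from raw measurements, the sketch below assumes each device logs an average power draw and a busy time. This summary does not describe the paper's actual measurement procedure, so the `DeviceRun` record and both helper functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DeviceRun:
    avg_power_w: float   # assumed average power draw in watts
    busy_time_s: float   # assumed time the device spent training, in seconds


def energy_per_sample(runs, num_samples):
    # Energy (joules) = power (watts) * time (seconds), summed over devices.
    total_joules = sum(r.avg_power_w * r.busy_time_s for r in runs)
    return total_joules / num_samples


def latency_per_sample(wall_time_s, num_samples):
    # Wall-clock training time divided by the number of processed samples.
    return wall_time_s / num_samples


if __name__ == "__main__":
    # Illustrative numbers only, not results from the paper.
    runs = [DeviceRun(10.0, 20.0), DeviceRun(5.0, 20.0)]
    print(energy_per_sample(runs, num_samples=100))
    print(latency_per_sample(20.0, num_samples=100))
```

Normalizing by sample count lets configurations with different device counts and batch sizes be compared on the same axes.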

Open Challenges

Addressing open challenges such as efficient orchestration, participant incentivization, and AI-native wireless network design is critical to fostering long-term sustainable collaborative edge training.

- Sustainability Metrics: Parsing efficiency and environmental metrics.

- Efficient Orchestration: Resource utilization optimization strategies.

- Participant Incentives: Balancing contributions and rewards.

- AI-native Wireless Design: Enhancements for communication efficacy.

- Power-efficient Hardware: Minimizing computational footprint.

- Practical Privacy Measures: Ensuring privacy without performance loss.

Conclusion

Collaborative edge training is a promising paradigm for the sustainable deployment of big AI models within wireless networks. Its ability to harness scattered edge resources aligns with the goals of reduced energy consumption, improved performance, and enhanced privacy. Further research and development are needed to address the remaining challenges in both technology and implementation.