How LLMs Aid in UML Modeling: An Exploratory Study with Novice Analysts (2404.17739v2)

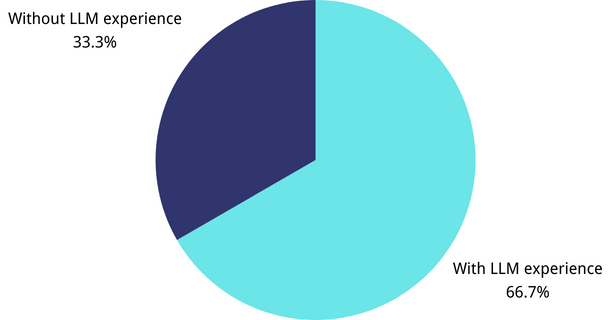

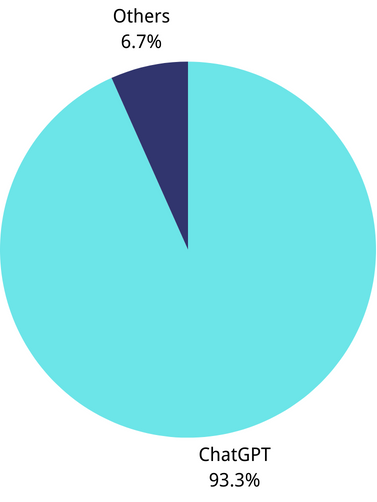

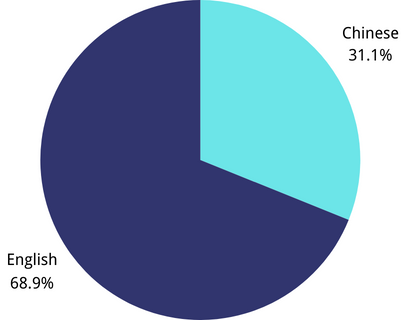

Abstract: Since the emergence of GPT-3, LLMs have caught the attention of researchers, practitioners, and educators in software engineering. However, relatively little work has examined how well LLMs assist with requirements analysis and UML modeling. This paper explores how LLMs can help novice analysts create three typical types of UML models: use case models, class diagrams, and sequence diagrams. For this purpose, we designed modeling tasks for these three UML models, to be completed with the help of LLMs by 45 undergraduate students enrolled in a requirements modeling course. By analyzing their project reports, we found that LLMs can assist undergraduate students, as novice analysts, in UML modeling tasks, but that LLMs also have shortcomings and limitations that should be considered when using them.



Practical Applications

Immediate Applications

The following applications could be deployed today. They build on the paper's findings that LLMs extract UML elements from natural-language requirements well but struggle with relationships. They also build on the finding that hybrid human-in-the-loop workflows and PlantUML-based outputs improve model quality.

Industry (Software/IT, product teams, consulting)

- UML copilot for early requirements modeling

- Use case: Prompt an LLM with domain text to draft use cases, classes, attributes/operations, and sequence-flow steps; a human modeler validates and finalizes relationships.

- Workflow/product: “UML Copilot” plugin for StarUML/Visual Studio Code that:

- Generates PlantUML code for use case/class/sequence diagrams from requirements text.

- Flags low-confidence relationships for manual review (focus on generalization/associations).

- Dependencies/assumptions: High-quality textual requirements; team uses PlantUML or compatible tooling; human reviewer signs off on relationships.

- Hybrid-created diagrams as a default modeling pattern

- Use case: Adopt the paper’s best-performing workflow: LLM textual suggestions → human refinement → diagramming in a tool (StarUML, PlantUML).

- Tools: Prompt templates for element extraction; pre-commit checklist for verifying relationships (inheritance, association, aggregation, composition).

- Dependencies: Basic UML skills among staff; access to an approved LLM (e.g., GPT-4 or an enterprise/private LLM).

- Rapid prototyping for sequence behaviors

- Use case: Generate initial sequence diagrams from user stories to facilitate design discussions, test planning, and stakeholder demos (LLMs were strongest on sequence diagram criteria).

- Tools: “Sequence Assistant” that turns user stories into PlantUML sequence diagrams.

- Assumptions: Stable user story format; acceptance that messages may need refinement.

- Model quality gate in CI/CD

- Use case: Add an automated step that uses LLMs to review UML artifacts for missing core elements and obvious contradictions, then require human validation of relationships.

- Tools: “Relationship Validator” script + LLM checker for completeness against a project-specific glossary.

- Dependencies: Modeling artifacts versioned as text (PlantUML/Mermaid); policy allowing LLM use in pipelines.

- Onboarding aids from models

- Use case: LLMs summarize existing UML diagrams and generate natural-language walkthroughs for new team members.

- Assumptions: Non-sensitive models; access controls to prevent leakage.
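The UML copilot and the relationship checklist above can be illustrated with a small renderer. This is a minimal sketch, not the paper's tooling. It assumes an upstream LLM step has already returned classes and candidate relationships in a hypothetical structured form; `ClassElement`, `Relationship`, and the per-relationship `confidence` score are invented for illustration.

The renderer does three things:

- It emits PlantUML class-diagram text.
- It rejects relationships whose endpoints were never declared.
- It marks low-confidence relationships with a PlantUML comment, so a human reviewer knows where to look.

```python
from dataclasses import dataclass, field


# Hypothetical intermediate format produced by an LLM extraction step.
@dataclass
class ClassElement:
    name: str
    attributes: list[str] = field(default_factory=list)
    operations: list[str] = field(default_factory=list)


@dataclass
class Relationship:
    kind: str          # "generalization", "association", "aggregation", "composition"
    a: str             # parent (generalization) or whole (aggregation/composition)
    b: str             # child (generalization) or part (aggregation/composition)
    confidence: float  # hypothetical score; values below the threshold go to review


# PlantUML link syntax, written as "a <arrow> b".
ARROWS = {
    "generalization": "<|--",  # "Parent <|-- Child"
    "association": "--",
    "aggregation": "o--",      # "Whole o-- Part"
    "composition": "*--",      # "Whole *-- Part"
}


def to_plantuml(classes, relationships, review_threshold=0.8):
    """Render classes and relationships as PlantUML text for human review."""
    declared = {c.name for c in classes}
    lines = ["@startuml"]
    for c in classes:
        lines.append(f"class {c.name} {{")
        lines.extend(f"  {attr}" for attr in c.attributes)
        lines.extend(f"  {op}" for op in c.operations)
        lines.append("}")
    for r in relationships:
        if r.kind not in ARROWS:
            raise ValueError(f"unknown relationship kind: {r.kind}")
        missing = {r.a, r.b} - declared
        if missing:
            raise ValueError(f"relationship refers to undeclared classes: {sorted(missing)}")
        if r.confidence < review_threshold:
            # PlantUML treats lines starting with ' as comments.
            lines.append(f"' REVIEW: low-confidence {r.kind} ({r.confidence:.2f})")
        lines.append(f"{r.a} {ARROWS[r.kind]} {r.b}")
    lines.append("@enduml")
    return "\n".join(lines)
```

The renderer raises an error on undeclared endpoints instead of silently adding the missing classes. This keeps the human reviewer in charge of the model's vocabulary, which fits the paper's observation that relationships are where LLM output most needs checking.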

Academia (Education, training, curriculum)

- LLM-assisted modeling exercises and formative feedback

- Use case: Assignments where students use LLMs to generate UML drafts and then improve them, guided by the paper’s rubric (elements vs. relationships).

- Tools: “ReqModel Coach” for rubric-aligned feedback (actors/use cases/classes/attributes/operations/messages/order).

- Dependencies: Clear academic integrity guidelines; curated prompts; instructor-provided evaluation criteria.

- Comparative labs on output formats

- Use case: Students compare Simple Wireframe, PlantUML-based, and Hybrid-created outputs to observe quality differences and discuss why the hybrid approach performed best.

- Assumptions: Access to StarUML/PlantUML; reproducible prompts.

- Prompt engineering as a modeling skill

- Use case: Teach prompt patterns that separate “element extraction” from “relationship validation,” reflecting LLM strengths/weaknesses.

- Dependencies: Up-to-date LLM access; example corpora.
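The rubric-aligned feedback described for the hypothetical “ReqModel Coach” could start from a simple comparison against an instructor's reference model. The sketch below assumes both models are given as dictionaries of element names per rubric category; this format is invented here. It matches names case-insensitively and reports coverage, missing elements, and extra elements for each category.

Exact string matching is a deliberate simplification. It does not handle synonyms ("Client" vs. "Customer") or message ordering; those would need a glossary or the instructor's judgment.

```python
# Rubric categories follow the element types listed above; the order of
# messages is not checked in this sketch.
CATEGORIES = ("actors", "use_cases", "classes", "attributes", "operations", "messages")


def _normalize(names):
    """Case- and whitespace-insensitive set of element names."""
    return {n.strip().lower() for n in names}


def rubric_feedback(student, reference):
    """Compare a student's model with a reference model, category by category."""
    report = {}
    for category in CATEGORIES:
        expected = _normalize(reference.get(category, []))
        if not expected:
            continue  # category not assessed in this exercise
        submitted = _normalize(student.get(category, []))
        report[category] = {
            "coverage": len(expected & submitted) / len(expected),
            "missing": sorted(expected - submitted),
            "extra": sorted(submitted - expected),
        }
    return report


feedback = rubric_feedback(
    {"actors": ["Customer"], "classes": ["order", "Invoice"]},
    {"actors": ["Customer", "Clerk"], "classes": ["Order"]},
)
# feedback["actors"] == {"coverage": 0.5, "missing": ["clerk"], "extra": []}
```

Reporting "extra" elements separately from "missing" ones lets an instructor distinguish an incomplete model from one padded with LLM-suggested elements that the case study never mentions.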

Policy and Governance (Org-level SDLC policy, compliance)

- Responsible-use guidelines for AI-assisted modeling

- Policy: Mandate human review for relationships; restrict sharing of sensitive requirements; log prompts/outputs as design artifacts.

- Tools: Lightweight “AI-in-the-loop” SOPs and checklists tied to modeling milestones.

- Dependencies: Organizational risk assessment; legal/privacy input; auditable LLM usage.

- Procurement and vendor RFP modeling support

- Use case: Teams use LLMs to rapidly produce standardized UML views for vendor briefings; vendors respond with LLM-assisted diagrams reviewed by humans.

- Assumptions: Contracts clarify AI use and IP; agreed modeling standards.

Daily Life and Individual Practitioners (Students, indie developers)

- Quick-start UML for side projects

- Use case: Generate initial use case/class/sequence diagrams from README or feature lists; refine manually.

- Tools: Prompt templates + PlantUML snippets.

- Dependencies: Basic UML literacy; acceptance of iteration.

- Study aid for understanding modeling

- Use case: Students paste a case study and get suggested UML plus explanations; compare with course solutions.

- Assumptions: Non-plagiarized usage; feedback from instructors.
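For quick-start diagrams and sequence prototyping, one practical pattern is to ask the LLM for one interaction step per line. Those lines are then converted to PlantUML deterministically, so the diagram skeleton is generated consistently even when the LLM's wording varies.

The step format `Sender -> Receiver: message` and the function name `steps_to_sequence` are assumptions for illustration. Only solid `->` arrows are recognized; any other line is rejected for manual correction.

```python
import re

# Hypothetical step format requested from the LLM: "Sender -> Receiver: message".
STEP = re.compile(r"^\s*(\w+)\s*->\s*(\w+)\s*:\s*(.+?)\s*$")


def steps_to_sequence(step_lines, actors=()):
    """Turn parsed interaction steps into a PlantUML sequence diagram."""
    participants, messages = [], []
    for raw in step_lines:
        match = STEP.match(raw)
        if match is None:
            raise ValueError(f"cannot parse step: {raw!r}")
        sender, receiver, text = match.groups()
        for name in (sender, receiver):
            if name not in participants:  # keep first-appearance order
                participants.append(name)
        messages.append(f"{sender} -> {receiver} : {text}")
    lines = ["@startuml", "autonumber"]
    for name in participants:
        kind = "actor" if name in actors else "participant"
        lines.append(f"{kind} {name}")
    lines.extend(messages)
    lines.append("@enduml")
    return "\n".join(lines)


print(steps_to_sequence(
    ["Customer -> WebShop: place order", "WebShop -> Payment: charge card"],
    actors={"Customer"},
))
```

Participants are declared in the order they first appear, which keeps lifelines in a stable left-to-right order. `autonumber` numbers the messages, which helps when reviewers discuss the order of steps.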

Long-Term Applications

These depend on further research, better models (especially for relationship reasoning), standard datasets, tighter tool integration, and maturing policy.

Industry (Software/IT, regulated sectors: healthcare, finance, energy)

- End-to-end modeling assistant integrated into ALM/IDE

- Vision: Multi-modal LLMs that co-create, validate, and repair UML with strong relationship reasoning; continuous synchronization between requirements, models, and code.

- Tools/products: “Requirements-to-Model-to-Code” assistants in Jira/Azure DevOps/IntelliJ; LLM-proposed model repairs checked against formal constraints (e.g., OCL invariants).

- Dependencies: Fine-tuning on large UML corpora; formal constraints; reliable on-prem LLMs.

- Domain-aware modeling copilots

- Vision: Sector-specific ontologies (HL7/FHIR for healthcare, FIX/ISO 20022 for finance) boost relationship accuracy and traceability.

- Tools: “Healthcare Model Copilot,” “Finance Model Copilot” with pre-trained vocabularies and compliance patterns.

- Dependencies: Curated, licensed domain datasets; governance for safety and bias.

- Continuous model-code traceability and conformance

- Vision: LLMs maintain bidirectional links between requirements, UML, and code/tests; detect drift and propose fixes.

- Tools: “TraceGuard” service monitoring repositories; sequence diagrams generated automatically from runtime traces and reconciled with the design.

- Dependencies: Stable trace frameworks; organization-wide modeling discipline.
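The drift detection envisioned for a tool like the hypothetical “TraceGuard” can already be approximated for the simplest case. The sketch below assumes the design model is a PlantUML class diagram and the code base is Python. It compares class names and direct generalization edges, and reports what appears on only one side. A model edge `Parent <|-- Child` is matched against a code declaration `class Child(Parent)`.

Several cases are out of scope:

- associations;
- qualified base classes such as `module.Base`;
- renamed elements, where an LLM-proposed fix would still need human confirmation.

```python
import ast
import re

# "class X", "abstract class X", "interface X", "enum X" declarations in PlantUML.
CLASS_DECL = re.compile(r"^\s*(?:abstract\s+class|class|interface|enum)\s+(\w+)", re.MULTILINE)
# "Parent <|-- Child" generalization links in PlantUML.
GENERALIZATION = re.compile(r"^\s*(\w+)\s*<\|--\s*(\w+)", re.MULTILINE)


def model_view(plantuml_text):
    """Class names and (child, parent) pairs declared in the PlantUML model."""
    classes = set(CLASS_DECL.findall(plantuml_text))
    inherits = {(child, parent) for parent, child in GENERALIZATION.findall(plantuml_text)}
    return classes, inherits


def code_view(python_source):
    """Class names and (child, parent) pairs declared in Python source."""
    classes, inherits = set(), set()
    for node in ast.walk(ast.parse(python_source)):
        if isinstance(node, ast.ClassDef):
            classes.add(node.name)
            for base in node.bases:
                if isinstance(base, ast.Name):  # skips qualified bases like module.Base
                    inherits.add((node.name, base.id))
    return classes, inherits


def drift_report(plantuml_text, python_source):
    """List model/code differences for a human (or LLM) to reconcile."""
    m_classes, m_inherits = model_view(plantuml_text)
    c_classes, c_inherits = code_view(python_source)
    return {
        "classes_in_model_only": sorted(m_classes - c_classes),
        "classes_in_code_only": sorted(c_classes - m_classes),
        "inheritance_in_model_only": sorted(m_inherits - c_inherits),
        "inheritance_in_code_only": sorted(c_inherits - m_inherits),
    }
```

Keeping detection deterministic and reserving the LLM for proposing fixes matches the human-in-the-loop pattern the paper recommends. The report says exactly what differs, and a person decides which side is correct.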

Academia (Education research, curriculum reform)

- Benchmarks and shared datasets for AI-in-modeling

- Vision: Public corpora of annotated UML and requirements; standardized metrics beyond binary scoring (continuous rubric scores).

- Tools: Open evaluation suites; leaderboards for “relationship extraction” and “model conformance.”

- Dependencies: Community curation; privacy-safe data; sponsorship.

- Competency-based curricula integrating AI modeling literacy

- Vision: Programs that formally teach “AI-assisted modeling” competencies, including risk management, verification, and human-in-the-loop design.

- Dependencies: Accreditation alignment; faculty development.
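One example of a continuous score for the relationship-extraction leaderboards mentioned above is to compare predicted relationships with an annotated gold model as `(kind, source, target)` triples. Both sides must use the same orientation convention.

The sketch computes two things:

- precision, recall, and F1 over exact triple matches;
- per-kind recall, to show which relationship kinds a model gets wrong.

Treating a correct pair with the wrong relationship kind as a full miss is a choice made here, not a standard. A benchmark might award partial credit instead.

```python
def relationship_scores(predicted, gold):
    """Precision, recall and F1 over exact (kind, source, target) matches."""
    pred, ref = set(predicted), set(gold)
    hits = len(pred & ref)
    precision = hits / len(pred) if pred else 0.0
    recall = hits / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def recall_by_kind(predicted, gold):
    """Share of gold relationships of each kind that were predicted exactly."""
    pred = set(predicted)
    result = {}
    for kind in sorted({k for k, _, _ in gold}):
        expected = {t for t in gold if t[0] == kind}
        result[kind] = len(expected & pred) / len(expected)
    return result


gold = [
    ("generalization", "Payment", "CardPayment"),
    ("composition", "Order", "OrderLine"),
    ("association", "Order", "Customer"),
]
predicted = [
    ("generalization", "Payment", "CardPayment"),
    ("aggregation", "Order", "OrderLine"),  # right pair, wrong kind: counted as a miss
]
print(relationship_scores(predicted, gold))  # precision 0.5, recall ~0.33, f1 ~0.4
print(recall_by_kind(predicted, gold))
```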

Policy and Standards (Standards bodies, regulators, enterprise governance)

- Standards for AI-assisted modeling artifacts and audits

- Vision: ISO/OMG guidance on provenance, review requirements, and auditability for AI-generated UML in safety/finance-critical systems.

- Tools: “AI Modeling Audit Pack” templates embedded in QMS.

- Dependencies: Multi-stakeholder consensus; regulator participation.

- Compliance automation for AI-in-the-loop modeling

- Vision: Automated evidence generation that shows human review of relationships and conformance to modeling standards during audits.

- Dependencies: Tool interoperability; tamper-evident logs.
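Tamper-evident logging of AI-assisted modeling can be sketched with a hash chain. Each record stores a SHA-256 hash of its content combined with the previous record's hash, so editing any earlier record breaks verification. The record fields (`type`, `artifact`, `approved`, and so on) are hypothetical.

A chain like this is only tamper-evident if the latest hash is also stored somewhere the editor cannot rewrite, such as a separate audit system. Otherwise the whole chain could simply be recomputed.

The `unreviewed_artifacts` helper shows the kind of audit evidence described above: which LLM-generated artifacts lack an approved relationship review.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash, entry):
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    body = json.dumps(entry, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log, entry):
    """Append an entry, chaining it to the hash of the previous record."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"entry": entry, "prev": prev_hash, "hash": _digest(prev_hash, entry)})


def verify(log):
    """Recompute the chain; False means some record was altered or reordered."""
    prev_hash = GENESIS
    for record in log:
        if record["prev"] != prev_hash or record["hash"] != _digest(prev_hash, record["entry"]):
            return False
        prev_hash = record["hash"]
    return True


def unreviewed_artifacts(log):
    """LLM-generated artifacts that have no approved relationship review."""
    generated = {r["entry"]["artifact"] for r in log if r["entry"]["type"] == "llm_output"}
    approved = {
        r["entry"]["artifact"]
        for r in log
        if r["entry"]["type"] == "relationship_review" and r["entry"].get("approved")
    }
    return sorted(generated - approved)
```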

Cross-cutting Tools and Methods

- Relationship-first modeling engines

- Vision: New LLM prompting and model-checking pipelines that explicitly reason about inheritance, associations, aggregations, and compositions, with constraint-based verification (e.g., OCL).

- Products: “Relationship Reviewer Pro” microservice integrated with modeling environments.

- Dependencies: Improved LLM reasoning; formal rule sets.

- Privacy-preserving, on-prem LLMs for modeling

- Vision: Secure deployment of LLMs behind the firewall to process sensitive requirements/models.

- Dependencies: Enterprise-grade LLM stacks; model governance.

- Multilingual modeling support

- Vision: Comparable accuracy across languages; robust performance on non-English requirements.

- Dependencies: Multilingual fine-tuning; localized datasets.
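A basic check that a relationship-first engine would run is that generalization is acyclic: UML does not allow a class to inherit, directly or indirectly, from itself. The sketch below runs a depth-first search with three-color marking over hypothetical `(child, parent)` pairs and returns one offending cycle, or `None`.

This is a standalone graph check rather than OCL. In a full pipeline, the same rule might be stated as an OCL invariant and checked by a modeling tool.

```python
WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished


def find_generalization_cycle(edges):
    """Return one inheritance cycle as a list of class names, or None."""
    parents = {}
    for child, parent in edges:
        parents.setdefault(child, []).append(parent)
    color = {}
    path = []

    def visit(node):
        color[node] = GREY
        path.append(node)
        for parent in parents.get(node, []):
            state = color.get(parent, WHITE)
            if state == GREY:  # parent is already on the path: cycle found
                return path[path.index(parent):] + [parent]
            if state == WHITE:
                cycle = visit(parent)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for node in list(parents):
        if color.get(node, WHITE) == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


print(find_generalization_cycle([
    ("CardPayment", "Payment"),
    ("Payment", "Transaction"),
    ("Transaction", "CardPayment"),  # e.g. an LLM-suggested edge that closes a loop
]))
```

Returning the concrete cycle, rather than only a boolean, gives the human reviewer the specific edges to inspect. This is where the paper's findings suggest LLM-generated relationships most need correction.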

Notes on feasibility and assumptions across applications:

- Current LLMs are strong at extracting elements but weaker on relationship accuracy; human-in-the-loop remains essential.

- Output format matters: hybrid workflows and PlantUML-based outputs outperform raw wireframes.

- Results come from novice analysts (45 undergraduate students); experts and other domains may see different outcomes.

- Data privacy, IP, and compliance constraints may limit prompt content; on-prem or privacy-preserving LLMs may be required.

- Because LLM outputs vary between runs, teams need reproducible prompts, logs, and review checkpoints.
