- The paper introduces PA-CoT, a novel method enhancing LLM reasoning by leveraging diverse rationale patterns to improve multi-step problem-solving.

- It utilizes rationale pattern embeddings and dynamic clustering to create balanced demonstration examples, reducing bias in inference.

- Experimental results on nine tasks show that PA-CoT consistently outperforms traditional chain-of-thought approaches and is more robust.

Enhancing Chain of Thought Prompting in LLMs via Reasoning Patterns

The paper "Enhancing Chain of Thought Prompting in LLMs via Reasoning Patterns" proposes a novel approach called Pattern-Aware Chain-of-Thought (PA-CoT) to improve the reasoning capabilities of LLMs. The central idea is that the diversity of reasoning patterns in the demonstrations provided to LLMs can significantly enhance their performance on complex reasoning tasks.

Introduction to Chain-of-Thought Prompting

Chain-of-Thought (CoT) prompting is well recognized as effective for tasks that involve multi-step reasoning. Traditional methods focus on providing accurate and semantically relevant demonstrations. The paper argues instead that prioritizing the diversity of reasoning patterns, rather than accuracy alone, can lead to better generalization and robustness in LLMs.

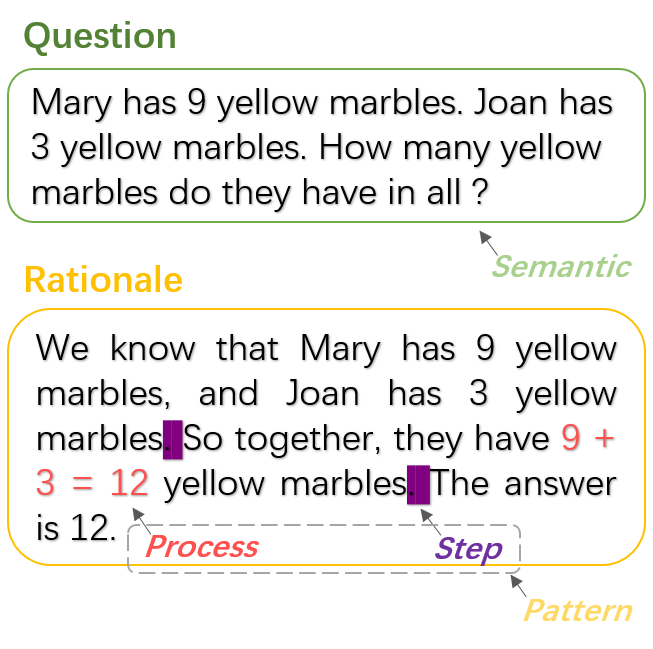

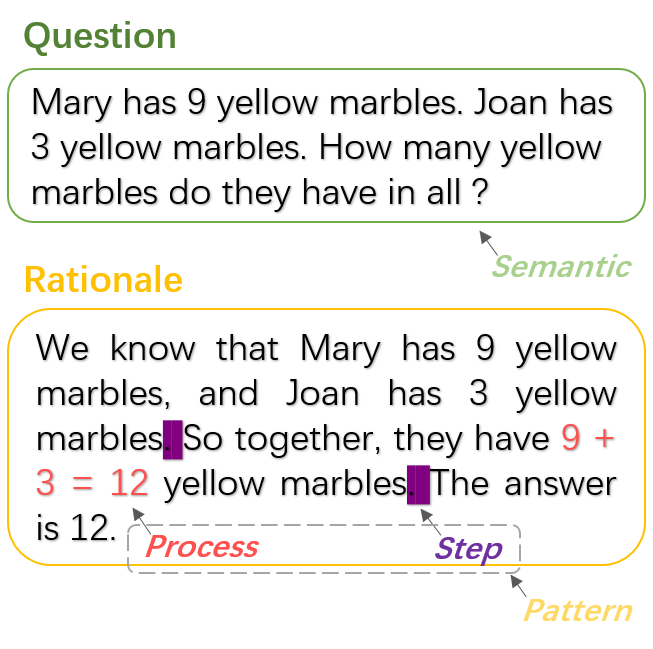

Figure 1: Example of the chain-of-thought reasoning process: This comprises a question accompanied by a rationale. The rationale serves as a depiction of how LLMs navigate the reasoning process to arrive at the answer to the given question.
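To make the question-plus-rationale format concrete, here is a minimal sketch of how a few-shot CoT prompt might be assembled. The `Demo` class, the `build_cot_prompt` helper, and the "Q:/A:" layout are illustrative assumptions, not the prompt template used in the paper.

```python
from dataclasses import dataclass


@dataclass
class Demo:
    """One demonstration: a question, its rationale, and the final answer."""
    question: str
    rationale: str
    answer: str


def build_cot_prompt(demos, question):
    """Concatenate demonstrations, then append the new question.

    The "Q:/A:" layout is an assumed convention, not the paper's template.
    """
    parts = [
        f"Q: {d.question}\nA: {d.rationale} The answer is {d.answer}."
        for d in demos
    ]
    # Leave the final answer slot open so the model writes its own rationale.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


demos = [
    Demo(
        question="Tom has 3 apples and buys 2 more. How many apples does he have?",
        rationale="Tom starts with 3 apples. 3 + 2 = 5.",
        answer="5",
    )
]
prompt = build_cot_prompt(demos, "A box holds 4 pens. How many pens are in 3 boxes?")
print(prompt)
```

Which demonstrations go into `demos` is exactly the choice that PA-CoT targets.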

Pattern-Aware Chain-of-Thought (PA-CoT)

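PA-CoT leverages the variety of reasoning patterns present in demonstration examples to guide LLMs during inference. It embeds the rationale pattern of each candidate demonstration, clusters those embeddings dynamically, and builds a demonstration set that varies in both step length and reasoning process, so that no single pattern dominates the prompt.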
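One way to picture pattern-based demonstration selection is the sketch below. It is a simplified stand-in rather than the paper's implementation: the pattern vector (operator counts plus step count), the plain k-means routine, and every function name are assumptions made for illustration, and the paper's actual embeddings and clustering procedure may differ.

```python
import re
from math import dist

OPERATORS = "+-*/"


def pattern_features(rationale):
    """Hypothetical pattern vector: one count per operator, plus the step count.

    Counting "-" also counts hyphens in prose; acceptable for a toy example.
    """
    steps = [s for s in re.split(r"(?<=[.!?])\s+", rationale.strip()) if s]
    return [float(rationale.count(op)) for op in OPERATORS] + [float(len(steps))]


def kmeans(points, k, iters=20):
    """Plain k-means with deterministic farthest-point initialisation."""
    centres = [points[0]]
    while len(centres) < k:
        centres.append(max(points, key=lambda p: min(dist(p, c) for c in centres)))
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centres[i]))
            groups[nearest].append(p)
        # Recompute each centre as the mean of its group; keep empty groups as-is.
        centres = [
            [sum(col) / len(g) for col in zip(*g)] if g else centres[i]
            for i, g in enumerate(groups)
        ]
    return centres


def select_demonstrations(rationales, k):
    """For each cluster centre, pick the index of the closest unused rationale.

    Assumes k <= len(rationales).
    """
    feats = [pattern_features(r) for r in rationales]
    chosen = []
    for centre in kmeans(feats, k):
        candidates = [i for i in range(len(feats)) if i not in chosen]
        chosen.append(min(candidates, key=lambda i: dist(feats[i], centre)))
    return chosen


pool = [
    "3 + 2 = 5.",
    "4 + 4 = 8.",
    "6 * 7 = 42.",
    "2 * 9 = 18.",
    "10 - 3 = 7. 7 / 7 = 1. 1 + 1 = 2.",
]
picked = select_demonstrations(pool, 3)
print([pool[i] for i in picked])
```

On this toy pool the selection keeps one addition-only rationale, one multiplication-only rationale, and the mixed three-step rationale, rather than several near-duplicates of the most common pattern.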

Experimental Evaluation

The experiments spanned nine reasoning tasks and employed two LLMs: LLaMA-2-7b-chat-hf and qwen-7b-chat. Key findings include:

- Performance Improvements: PA-CoT consistently outperformed the baseline methods. The integration of step length and process diversity led to superior outcomes, especially in scenarios requiring complex reasoning.

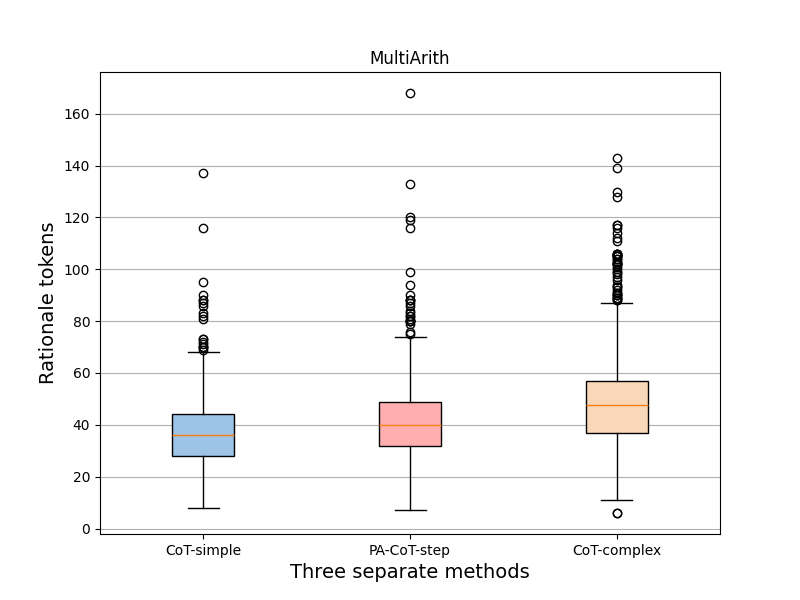

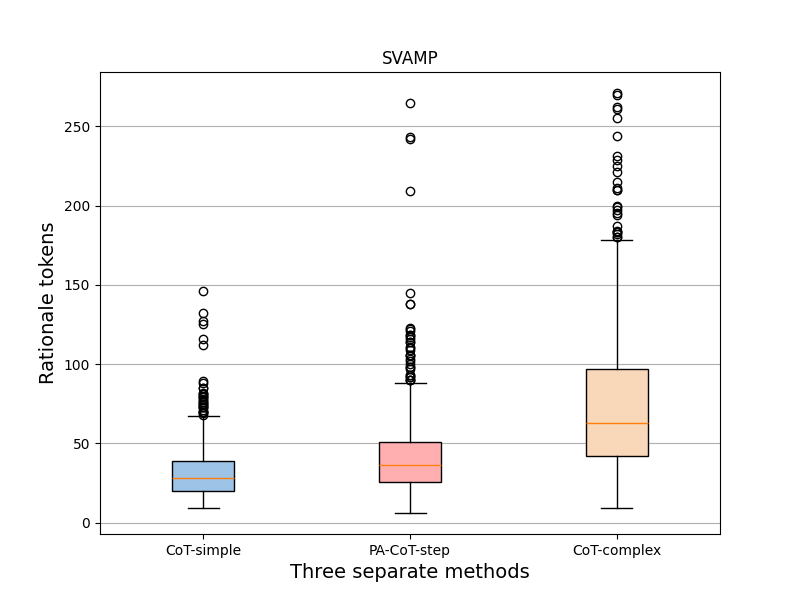

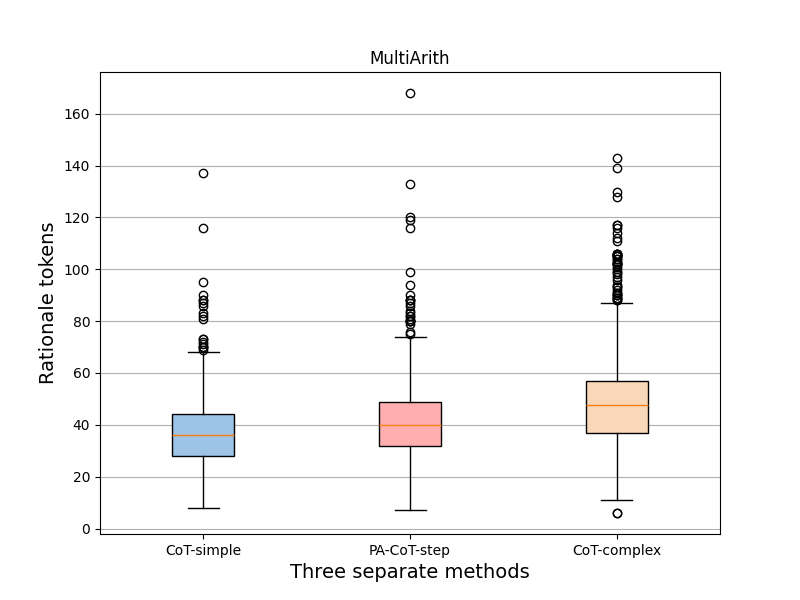

- Impact of Rationale Step Length: Demonstrations with diverse step lengths prevented bias towards overly simple or complex reasoning, promoting balanced inference paths.

- Reasoning Process Diversity: Using a range of reasoning processes reduced the risk of bias in problem-solving approaches and improved generalization.
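The step-length point can be illustrated with a small sketch that picks demonstrations spanning the pool from shortest to longest rationale. The sentence-based step counter and the even-spacing rule are assumptions for illustration only; the paper's own step definition and selection rule are not given in this summary.

```python
import re


def count_steps(rationale):
    """Approximate the number of reasoning steps by counting sentences."""
    return len([s for s in re.split(r"(?<=[.!?])\s+", rationale.strip()) if s])


def pick_by_step_spread(rationales, k):
    """Return k indices whose step counts are spread evenly over the pool.

    Assumes 1 <= k <= len(rationales).
    """
    order = sorted(range(len(rationales)), key=lambda i: count_steps(rationales[i]))
    if k == 1:
        return [order[len(order) // 2]]  # a single pick: take the median length
    positions = [round(j * (len(order) - 1) / (k - 1)) for j in range(k)]
    return [order[p] for p in positions]


pool = [
    "2 + 3 = 5.",
    "2 + 3 = 5. 5 * 2 = 10. 10 - 4 = 6.",
    "8 / 2 = 4. 4 + 1 = 5.",
    "1 + 1 = 2. 2 + 2 = 4. 4 + 4 = 8. 8 + 8 = 16. 16 + 16 = 32.",
    "9 - 3 = 6. 6 / 2 = 3. 3 * 3 = 9. 9 + 1 = 10.",
]
picked = pick_by_step_spread(pool, 3)
print([count_steps(pool[i]) for i in picked])
```

Mixing short, medium, and long rationales in this way avoids a prompt that contains only one-step or only five-step examples.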

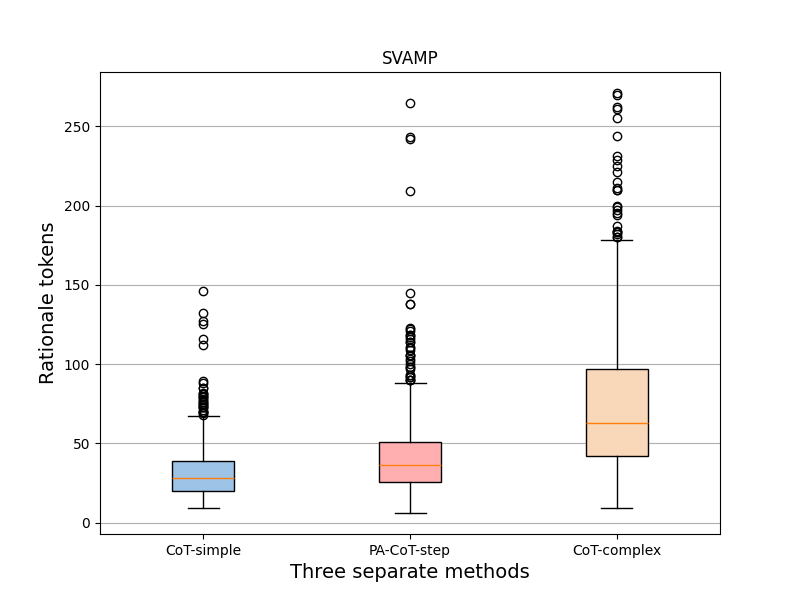

Figure 3: Box plot of generated rationale length for CoT-simple (pink), PA-CoT-step (blue), and CoT-complex (green). The x-axis represents method names, and the y-axis represents the number of sentence tokens.

Error Robustness and Clustering Analysis

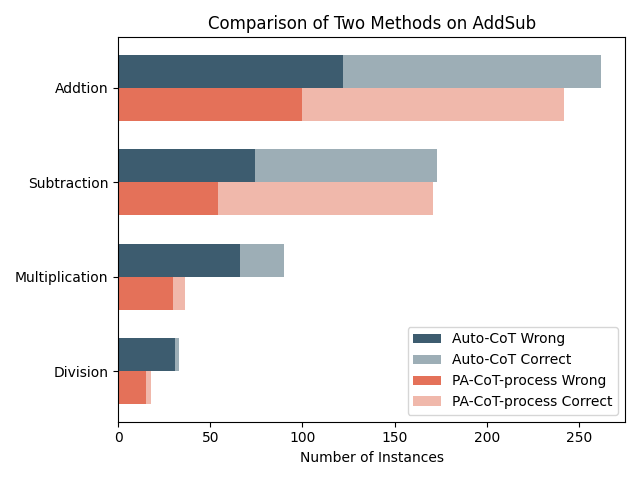

The paper also demonstrated the robustness of PA-CoT to errors in demonstration examples. Even when demonstrations contained incorrect rationales, PA-CoT maintained strong performance by focusing on the patterns rather than correctness.

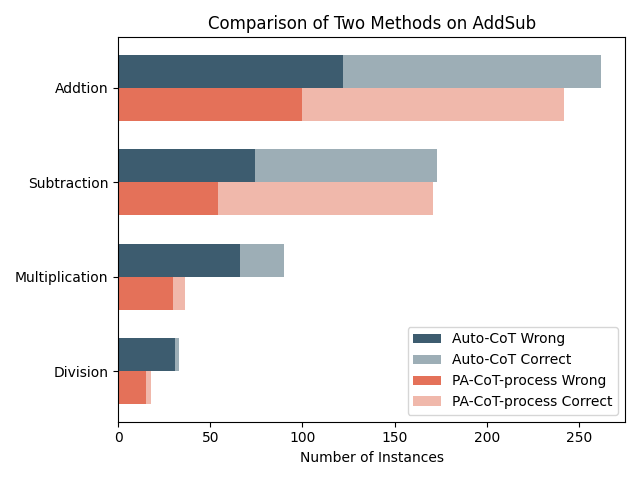

Figure 4: The distribution of the number of correct and wrong instances regarding different arithmetic symbols.

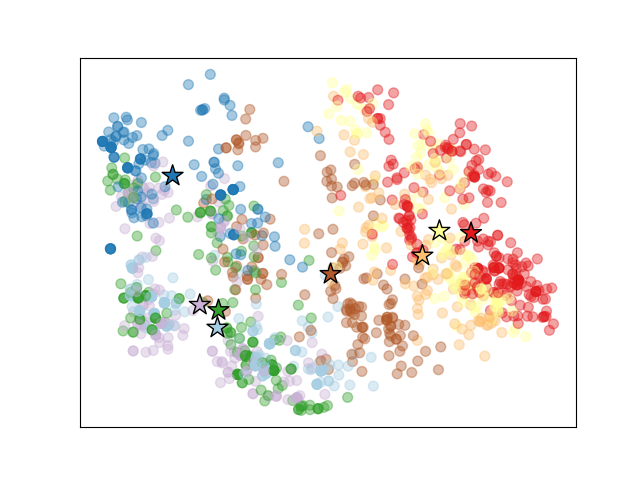

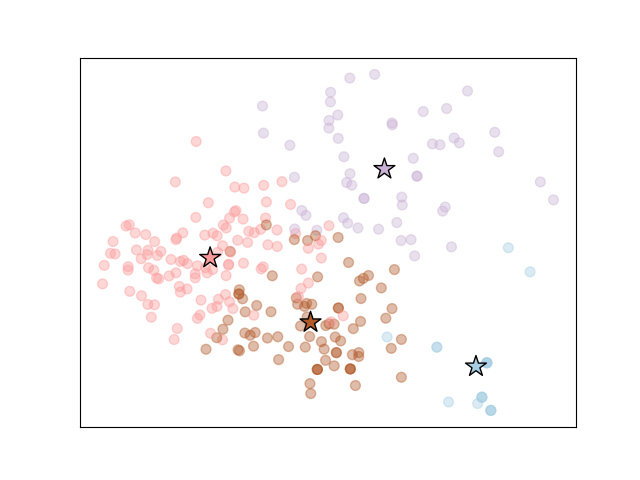

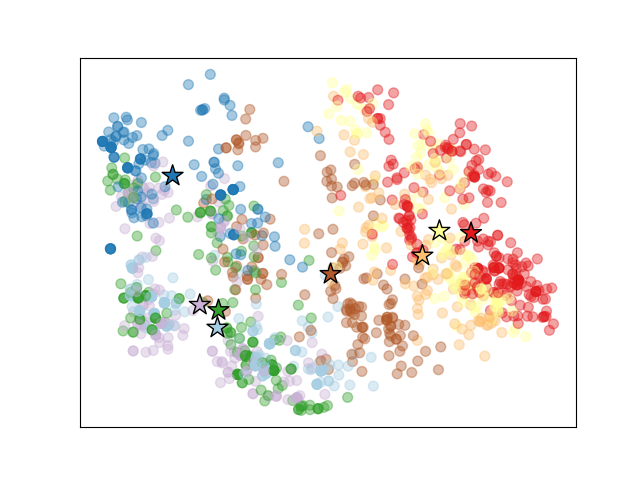

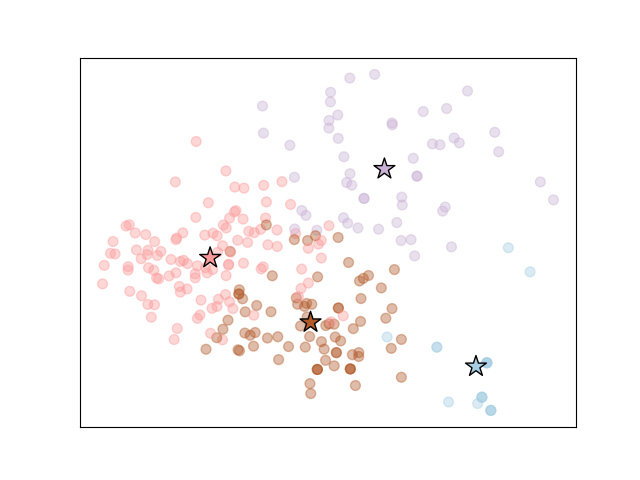

Figure 5: Visualization of clustering on six reasoning tasks. Cluster centres are marked as stars. The scatter of PA-CoT-concat clusters shows its superiority in example differentiation.

Conclusion

The research highlights the critical role of diverse reasoning patterns in enhancing the performance of LLMs under the CoT paradigm. By shifting focus from simply accurate demonstrations to demonstrations with varied rationale processes and lengths, PA-CoT offers a robust framework for improving LLM performance on reasoning tasks. For future work, the results suggest that harnessing pattern diversity could further improve model robustness and generalization across applications.