- The paper introduces i-Gaussian, a novel approach that leverages pre-trained video generation models to convert static 3D objects into interactive simulations.

- It simulates elastic material dynamics under various forces with a differentiable Material Point Method (MPM), using K-Means clustering to select a subset of driving particles for efficiency.

- In a user study, participants preferred the motion realism of i-Gaussian over existing methods, and an ablation study shows that multi-view supervision improves the synthesized dynamics of self-occluding objects.

"PhysDreamer: Physics-Based Interaction with 3D Objects via Video Generation"

Introduction

"PhysDreamer: Physics-Based Interaction with 3D Objects via Video Generation" introduces a novel approach, i-Gaussian, which aims to synthesize realistic, interactive 3D dynamics by converting static 3D objects into interactive simulations. This approach takes advantage of dynamics priors encoded in pre-trained video generation models to estimate physical material fields, thus enabling objects to respond realistically to various physical interactions.

Methodology

i-Gaussian Model

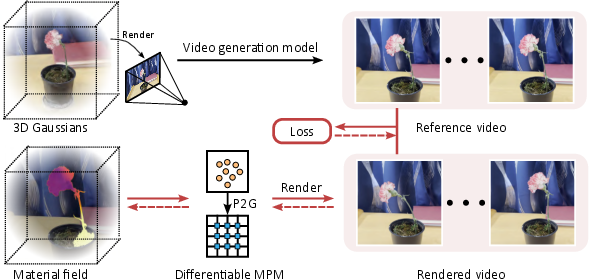

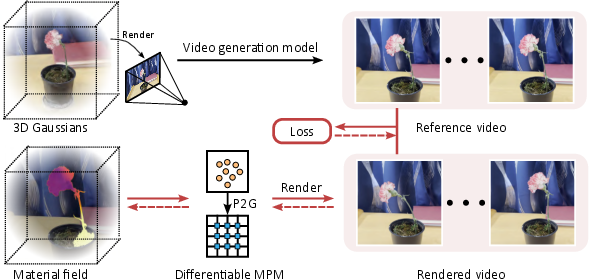

i-Gaussian represents objects as 3D Gaussians and simulates them with a differentiable Material Point Method (MPM). Its key idea is to use a video generation model as a source of prior knowledge about dynamics, from which it estimates the object's physical material properties. This estimate is critical because it determines how the object responds to external forces and manipulation. Concretely, the material parameters are optimized so that the simulated and rendered motion matches a reference video; the fitted model can then synthesize 3D responses to new interactions.
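To make the representation concrete, here is a minimal sketch of a 3D Gaussian that doubles as an MPM particle. It assumes, as PhysGaussian does, that each Gaussian's covariance is deformed by the local deformation gradient as F Σ Fᵀ. The class name `GaussianParticle`, the `advect` helper, and storing the material field as a per-particle log Young's modulus are hypothetical choices for illustration, not details taken from the paper.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class GaussianParticle:
    mean: np.ndarray      # (3,) Gaussian center, also used as the MPM particle position
    cov: np.ndarray       # (3, 3) covariance used when rendering the Gaussian
    velocity: np.ndarray  # (3,) simulation state
    log_E: float          # hypothetical material-field sample: log of Young's modulus

    @property
    def youngs_modulus(self) -> float:
        # exp() keeps the stiffness positive whatever value log_E takes
        return float(np.exp(self.log_E))


def advect(p: GaussianParticle, F: np.ndarray, dt: float) -> GaussianParticle:
    """Move the center with its velocity and deform the kernel as cov' = F cov F^T."""
    return GaussianParticle(
        mean=p.mean + dt * p.velocity,
        cov=F @ p.cov @ F.T,
        velocity=p.velocity,
        log_E=p.log_E,
    )


# Example: a 10% stretch along x applied to an isotropic Gaussian moving along x.
p = GaussianParticle(mean=np.zeros(3), cov=0.01 * np.eye(3),
                     velocity=np.array([1.0, 0.0, 0.0]), log_E=float(np.log(1e5)))
q = advect(p, F=np.diag([1.1, 1.0, 1.0]), dt=0.1)
```

Storing log E rather than E is a common way to keep a stiffness positive during unconstrained gradient-based optimization.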

Figure 1: Leveraging video generation models to estimate a physical material field for static 3D objects and synthesizing dynamics under arbitrary forces.

Simulation Process

The simulation uses MPM to solve for the dynamics of elastic materials, whose response is governed by a stress-strain relationship. To keep this efficient, i-Gaussian simulates only a subset of particles: driving particles are selected with K-Means clustering, which reduces the computational overhead.
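This summary does not say which constitutive model relates stress to strain. As an assumption, the sketch below uses the fixed-corotated hyperelastic model that is common in MPM codes. Young's modulus E and Poisson's ratio ν are converted to Lamé parameters, and the function returns the first Piola-Kirchhoff stress P = 2μ(F − R) + λ(J − 1)J F⁻ᵀ, where R is the rotation from the polar decomposition of the deformation gradient F and J = det F. The function names are hypothetical.

```python
import numpy as np


def lame_parameters(E, nu):
    """Convert Young's modulus E and Poisson's ratio nu to Lamé parameters (mu, lambda)."""
    mu = E / (2.0 * (1.0 + nu))
    lam = E * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))
    return mu, lam


def polar_rotation(F):
    """Rotation factor R of F = R S, computed from the SVD F = U diag(s) V^T."""
    U, _, Vt = np.linalg.svd(F)
    if np.linalg.det(U @ Vt) < 0:  # flip one axis so R is a proper rotation
        U[:, -1] *= -1.0
    return U @ Vt


def fixed_corotated_pk1(F, E, nu):
    """First Piola-Kirchhoff stress of the fixed-corotated model (an assumed choice)."""
    mu, lam = lame_parameters(E, nu)
    R = polar_rotation(F)
    J = np.linalg.det(F)
    return 2.0 * mu * (F - R) + lam * (J - 1.0) * J * np.linalg.inv(F).T


# Example: 10% stretch along x for a material with E = 1e5 Pa and nu = 0.3.
P = fixed_corotated_pk1(np.diag([1.1, 1.0, 1.0]), E=1e5, nu=0.3)
```

Because the model measures deformation relative to the rotation R rather than the identity, rigidly rotating an element produces no stress; only genuine stretching or compression does.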

Figure 2: Accelerated MPM simulation using K-Means to select driving particles at the initial timestep.
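A minimal sketch of the subsampling idea in plain Python: run K-Means once on the initial particle positions, take the particle nearest each centroid as a driving particle, and let every other particle follow its nearest driver. The nearest-driver binding and all helper names are our own simplifications for illustration; the paper's exact scheme for propagating motion from driving particles to the remaining particles may differ.

```python
import random


def dist2(a, b):
    """Squared Euclidean distance between two 3D points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's K-Means; returns k centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: dist2(p, centroids[c]))
            clusters[nearest].append(p)
        for j, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster empties out
                centroids[j] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids


def select_driving_particles(points, k):
    """Pick the particle nearest each centroid; done once, at the initial timestep."""
    centroids = kmeans(points, k)
    chosen = {min(range(len(points)), key=lambda i: dist2(points[i], c)) for c in centroids}
    return sorted(chosen)


def bind_to_drivers(points, drivers):
    """Hypothetical binding rule: each particle follows its nearest driving particle."""
    return [min(drivers, key=lambda d: dist2(p, points[d])) for p in points]


def propagate(points, binding, driver_disp):
    """Move each particle by the displacement of the driver it is bound to."""
    return [tuple(x + dx for x, dx in zip(p, driver_disp[b])) for p, b in zip(points, binding)]


rng = random.Random(42)
cloud = [(rng.random(), rng.random(), rng.random()) for _ in range(500)]
drivers = select_driving_particles(cloud, k=32)
binding = bind_to_drivers(cloud, drivers)
# In a full pipeline these displacements would come from simulating only the drivers.
disp = {d: (0.0, 0.0, 0.01) for d in drivers}
moved = propagate(cloud, binding, disp)
```

Only the drivers (at most 32 here, instead of 500) would enter the expensive simulation step, which is where the speedup comes from.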

This computational strategy is complemented by a pipeline that aligns a rendered video of object dynamics with a reference video to optimize the material field and initial velocity field iteratively.
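The fitting loop can be illustrated on a toy problem. The sketch below substitutes a unit-mass damped spring for the MPM simulation and a 1D trajectory for the rendered video, then recovers a stiffness (standing in for the material field, here a single log k) and an initial velocity by minimizing the squared error against a reference trajectory. Central finite differences replace the automatic differentiation a differentiable simulator would provide, and a backtracking step ensures the loss never increases. All names and constants are hypothetical.

```python
import math


def simulate(log_k, v0, damping=0.5, dt=0.01, steps=100):
    """Roll out a unit-mass damped spring with semi-implicit Euler; return positions."""
    k = math.exp(log_k)  # stiffness is optimized in log space so it stays positive
    x, v = 0.0, v0
    positions = []
    for _ in range(steps):
        a = -k * x - damping * v
        v += dt * a
        x += dt * v
        positions.append(x)
    return positions


def loss(params, reference):
    """Mean squared error between simulated and reference trajectories."""
    sim = simulate(*params)
    return sum((s - r) * (s - r) for s, r in zip(sim, reference)) / len(reference)


def finite_diff_grad(params, reference, eps=1e-6):
    """Central differences: a stand-in for autodiff through a differentiable simulator."""
    grad = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grad.append((loss(up, reference) - loss(down, reference)) / (2 * eps))
    return grad


def fit(reference, init, iters=300, lr=5.0):
    """Gradient descent with backtracking: a step is accepted only if it lowers the loss."""
    params = list(init)
    current = loss(params, reference)
    for _ in range(iters):
        grad = finite_diff_grad(params, reference)
        step = lr
        while step > 1e-10:
            trial = [p - step * g for p, g in zip(params, grad)]
            trial_loss = loss(trial, reference)
            if trial_loss < current:
                params, current = trial, trial_loss
                break
            step *= 0.5
        else:
            break  # no decreasing step found: treat as converged
    return params, current


# Reference trajectory from "true" parameters k = 20, v0 = 1.0.
reference = simulate(math.log(20.0), 1.0)
init = (math.log(15.0), 0.8)
initial_loss = loss(list(init), reference)
(fitted_log_k, fitted_v0), final_loss = fit(reference, init)
print(f"k = {math.exp(fitted_log_k):.2f}, v0 = {fitted_v0:.3f}, loss = {final_loss:.2e}")
```

The same structure (simulate, compare with the reference, update the parameters) carries over when the rollout is an MPM simulation, the comparison is made on rendered frames, and the gradients come from differentiating through simulation and rendering.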

Figure 3: Overview of i-Gaussian showing the rendering process, video generation, and material/velocity field optimization through gradient flow.

Results and Evaluation

The paper evaluates i-Gaussian on a range of elastic objects, including flowers and telephones, and compares it with existing methods such as PhysGaussian and DreamGaussian4D. In a user study, participants preferred the motion realism of i-Gaussian over these baselines, although its results were sometimes judged less realistic than real-world interactions because of lower-frequency motion patterns.

Figure 4: Comparison of synthesized dynamics with real captured videos and other baseline models.

Ablation Study

An ablation study assesses the impact of using single versus multi-view reference videos for self-occluding structures. Here, multi-view supervision significantly enhances the quality of synthesized dynamics, especially in complex scenes.
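The difference between single-view and multi-view supervision can be written as a change in how many views enter the fitting objective. Assuming a per-pixel squared photometric loss (the exact loss terms are an assumption here, not taken from the paper), let $\hat{I}^{(v)}_t$ be the frame rendered from view $v$ at time $t$ under material parameters $\theta_E$ and initial velocity field $\mathbf{v}_0$, and let $I^{(v)}_t$ be the corresponding reference frame:

```latex
\mathcal{L}(\theta_E, \mathbf{v}_0)
  = \sum_{v=1}^{V} \sum_{t=1}^{T}
    \left\| \hat{I}^{(v)}_t(\theta_E, \mathbf{v}_0) - I^{(v)}_t \right\|_2^2
```

Single-view supervision corresponds to $V = 1$ and the two-view setting in the ablation to $V = 2$; each added view constrains the motion of parts that are hidden in the others.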

Figure 5: Comparison between single-view and two-view supervision, showing that two-view supervision better recovers occluded parts and their dynamics.

Conclusion

i-Gaussian advances the integration of physical plausibility into synthetic 3D environments, offering a promising direction for creating more lifelike and immersive simulations. Its ability to simulate object interactions from video priors makes it a flexible tool for virtual experiences. Future work could improve efficiency and broaden applicability by handling collision dynamics and automating object separation when setting up simulations. Overall, i-Gaussian is a significant step toward bridging static 3D representations and interactive dynamics, with clear benefits for applications in virtual reality and interactive media.