An Overview of Diffusion Models: Applications, Guided Generation, Statistical Rates and Optimization (2404.07771v1)

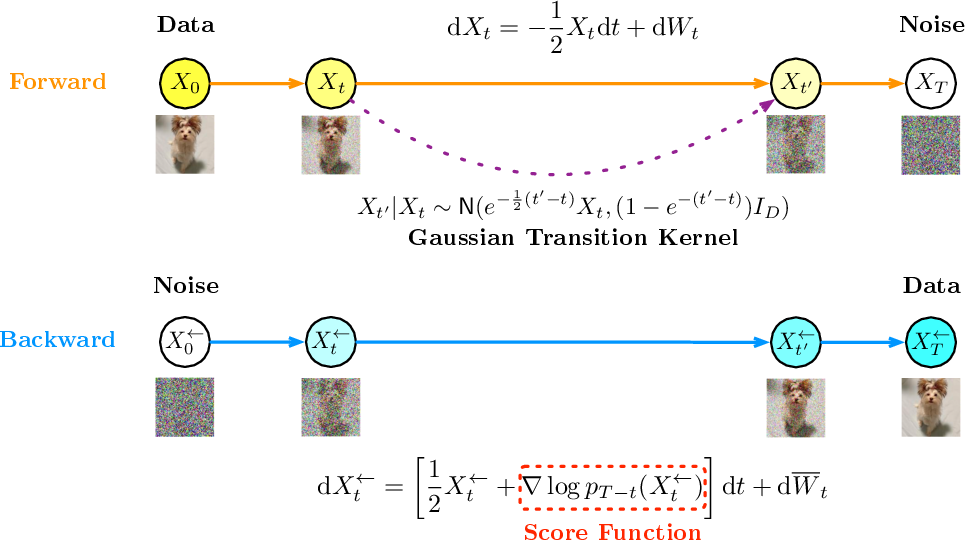

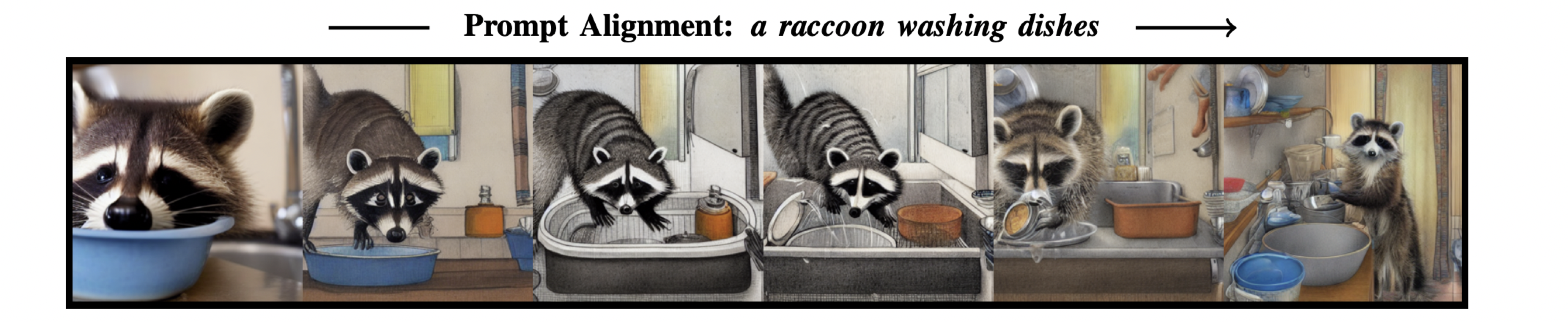

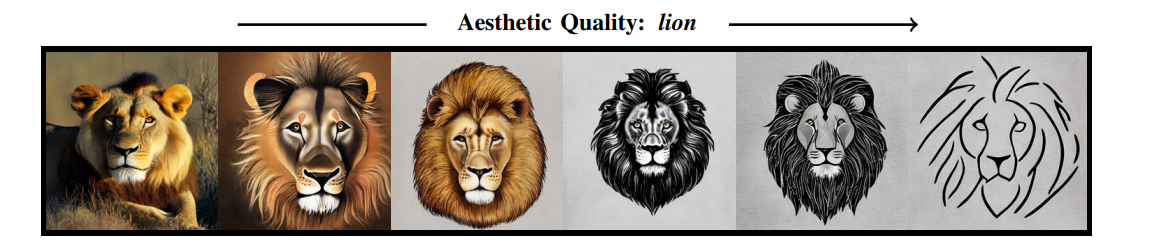

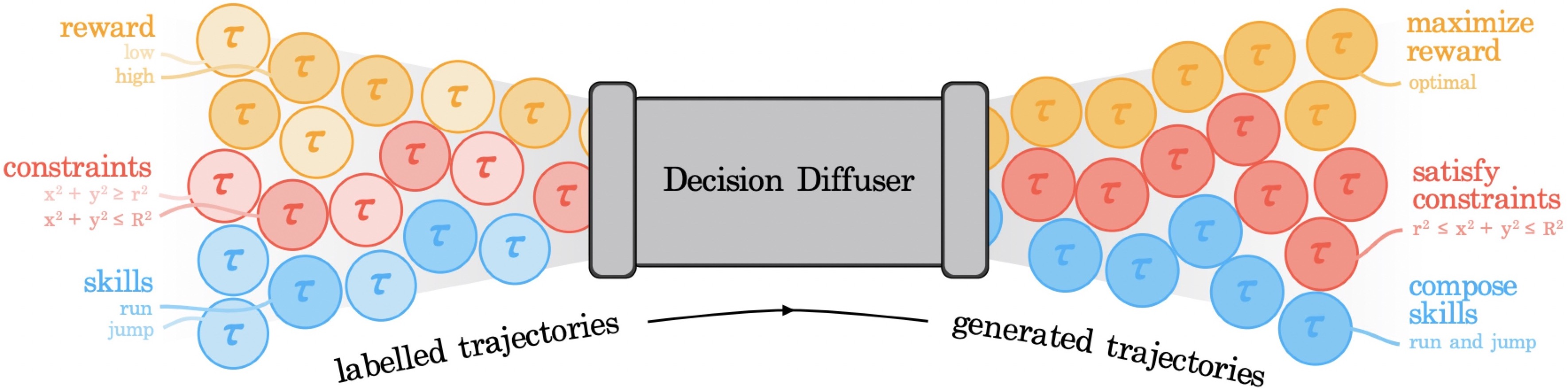

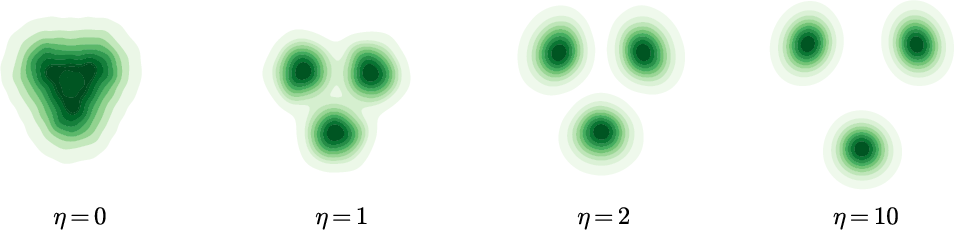

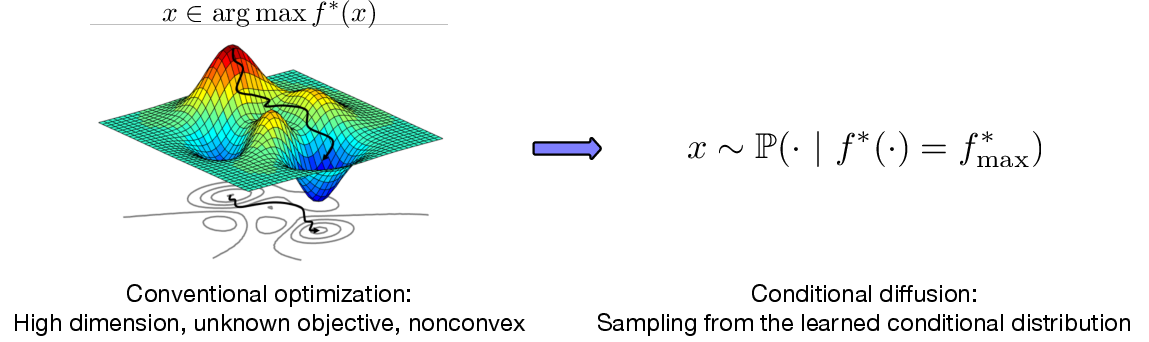

Abstract: Diffusion models, a powerful and universal generative AI technology, have achieved tremendous success in computer vision, audio, reinforcement learning, and computational biology. In these applications, diffusion models provide flexible high-dimensional data modeling and act as samplers that generate new samples under active guidance towards task-desired properties. Despite this significant empirical success, the theory of diffusion models remains very limited, potentially slowing down principled methodological innovations for further harnessing and improving diffusion models. In this paper, we review emerging applications of diffusion models, with a focus on how they generate samples under various controls. Next, we give an overview of the existing theories of diffusion models, covering their statistical properties and sampling capabilities. We proceed progressively, beginning with unconditional diffusion models and then connecting to their conditional counterparts. Further, we review a new avenue in high-dimensional structured optimization through conditional diffusion models, where searching for solutions is reformulated as a conditional sampling problem and solved by diffusion models. Lastly, we discuss future directions for diffusion models. The purpose of this paper is to provide a well-rounded theoretical exposure that stimulates forward-looking theories and methods for diffusion models.

- R. Bommasani, D. A. Hudson, E. Adeli, R. Altman, S. Arora, S. von Arx, M. S. Bernstein, J. Bohg, A. Bosselut, E. Brunskill et al., “On the opportunities and risks of foundation models,” arXiv preprint arXiv:2108.07258, 2021.

- J. Sohl-Dickstein, E. Weiss, N. Maheswaranathan, and S. Ganguli, “Deep unsupervised learning using nonequilibrium thermodynamics,” in Proceedings of the International conference on machine learning. PMLR, 2015, pp. 2256–2265.

- I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial networks,” Communications of the ACM, vol. 63, no. 11, pp. 139–144, 2020.

- A. Creswell, T. White, V. Dumoulin, K. Arulkumaran, B. Sengupta, and A. A. Bharath, “Generative adversarial networks: An overview,” IEEE signal processing magazine, vol. 35, no. 1, pp. 53–65, 2018.

- D. P. Kingma and M. Welling, “Auto-encoding variational bayes,” arXiv preprint arXiv:1312.6114, 2013.

- D. P. Kingma, M. Welling et al., “An introduction to variational autoencoders,” Foundations and Trends® in Machine Learning, vol. 12, no. 4, pp. 307–392, 2019.

- Y. Song and S. Ermon, “Generative modeling by estimating gradients of the data distribution,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- S. Dathathri, A. Madotto, J. Lan, J. Hung, E. Frank, P. Molino, J. Yosinski, and R. Liu, “Plug and play language models: A simple approach to controlled text generation,” arXiv preprint arXiv:1912.02164, 2019.

- J. Ho, A. Jain, and P. Abbeel, “Denoising diffusion probabilistic models,” Advances in Neural Information Processing Systems, vol. 33, pp. 6840–6851, 2020.

- Y. Song, J. Sohl-Dickstein, D. P. Kingma, A. Kumar, S. Ermon, and B. Poole, “Score-based generative modeling through stochastic differential equations,” arXiv preprint arXiv:2011.13456, 2020.

- Z. Kong, W. Ping, J. Huang, K. Zhao, and B. Catanzaro, “Diffwave: A versatile diffusion model for audio synthesis,” arXiv preprint arXiv:2009.09761, 2020.

- N. Chen, Y. Zhang, H. Zen, R. J. Weiss, M. Norouzi, and W. Chan, “Wavegrad: Estimating gradients for waveform generation,” arXiv preprint arXiv:2009.00713, 2020.

- G. Mittal, J. Engel, C. Hawthorne, and I. Simon, “Symbolic music generation with diffusion models,” arXiv preprint arXiv:2103.16091, 2021.

- R. Huang, Z. Zhao, H. Liu, J. Liu, C. Cui, and Y. Ren, “Prodiff: Progressive fast diffusion model for high-quality text-to-speech,” in Proceedings of the 30th ACM International Conference on Multimedia, 2022, pp. 2595–2605.

- M. Jeong, H. Kim, S. J. Cheon, B. J. Choi, and N. S. Kim, “Diff-tts: A denoising diffusion model for text-to-speech,” arXiv preprint arXiv:2104.01409, 2021.

- A. Ulhaq, N. Akhtar, and G. Pogrebna, “Efficient diffusion models for vision: A survey,” arXiv preprint arXiv:2210.09292, 2022.

- O. Avrahami, D. Lischinski, and O. Fried, “Blended diffusion for text-driven editing of natural images,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 18 208–18 218.

- G. Kim, T. Kwon, and J. C. Ye, “Diffusionclip: Text-guided diffusion models for robust image manipulation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 2426–2435.

- A. Bansal, H.-M. Chu, A. Schwarzschild, S. Sengupta, M. Goldblum, J. Geiping, and T. Goldstein, “Universal guidance for diffusion models,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 843–852.

- C. Saharia, W. Chan, S. Saxena, L. Li, J. Whang, E. L. Denton, K. Ghasemipour, R. Gontijo Lopes, B. Karagol Ayan, T. Salimans et al., “Photorealistic text-to-image diffusion models with deep language understanding,” Advances in Neural Information Processing Systems, vol. 35, pp. 36 479–36 494, 2022.

- R. Po, W. Yifan, V. Golyanik, K. Aberman, J. T. Barron, A. H. Bermano, E. R. Chan, T. Dekel, A. Holynski, A. Kanazawa et al., “State of the art on diffusion models for visual computing,” arXiv preprint arXiv:2310.07204, 2023.

- C. Zhang, C. Zhang, S. Zheng, M. Zhang, M. Qamar, S.-H. Bae, and I. S. Kweon, “A survey on audio diffusion models: Text to speech synthesis and enhancement in generative ai,” arXiv preprint arXiv:2303.13336, vol. 2, 2023.

- X. Li, J. Thickstun, I. Gulrajani, P. S. Liang, and T. B. Hashimoto, “Diffusion-lm improves controllable text generation,” Advances in Neural Information Processing Systems, vol. 35, pp. 4328–4343, 2022.

- P. Yu, S. Xie, X. Ma, B. Jia, B. Pang, R. Gao, Y. Zhu, S.-C. Zhu, and Y. N. Wu, “Latent diffusion energy-based model for interpretable text modeling,” arXiv preprint arXiv:2206.05895, 2022.

- J. Lovelace, V. Kishore, C. Wan, E. Shekhtman, and K. Weinberger, “Latent diffusion for language generation,” arXiv preprint arXiv:2212.09462, 2022.

- J. M. L. Alcaraz and N. Strodthoff, “Diffusion-based time series imputation and forecasting with structured state space models,” arXiv preprint arXiv:2208.09399, 2022.

- Y. Tashiro, J. Song, Y. Song, and S. Ermon, “CSDI: Conditional score-based diffusion models for probabilistic time series imputation,” Advances in Neural Information Processing Systems, vol. 34, pp. 24 804–24 816, 2021.

- G. Tevet, S. Raab, B. Gordon, Y. Shafir, D. Cohen-Or, and A. H. Bermano, “Human motion diffusion model,” arXiv preprint arXiv:2209.14916, 2022.

- M. Tian, B. Chen, A. Guo, S. Jiang, and A. R. Zhang, “Fast and reliable generation of ehr time series via diffusion models,” arXiv preprint arXiv:2310.15290, 2023.

- T. Pearce, T. Rashid, A. Kanervisto, D. Bignell, M. Sun, R. Georgescu, S. V. Macua, S. Z. Tan, I. Momennejad, K. Hofmann, and S. Devlin, “Imitating human behaviour with diffusion models,” arXiv preprint arXiv:2301.10677, 2023.

- C. Chi, S. Feng, Y. Du, Z. Xu, E. Cousineau, B. Burchfiel, and S. Song, “Diffusion Policy: Visuomotor policy learning via action diffusion,” arXiv preprint arXiv:2303.04137, 2023.

- P. Hansen-Estruch, I. Kostrikov, M. Janner, J. G. Kuba, and S. Levine, “IDQL: Implicit Q-learning as an actor-critic method with diffusion policies,” arXiv preprint arXiv:2304.10573, 2023.

- M. Reuss, M. Li, X. Jia, and R. Lioutikov, “Goal-conditioned imitation learning using score-based diffusion policies,” arXiv preprint arXiv:2304.02532, 2023.

- Z. Zhu, H. Zhao, H. He, Y. Zhong, S. Zhang, Y. Yu, and W. Zhang, “Diffusion models for reinforcement learning: A survey,” arXiv preprint arXiv:2311.01223, 2023.

- Z. Ding and C. Jin, “Consistency models as a rich and efficient policy class for reinforcement learning,” arXiv preprint arXiv:2309.16984, 2023.

- C. Cao, Z.-X. Cui, S. Liu, D. Liang, and Y. Zhu, “High-frequency space diffusion models for accelerated mri,” arXiv preprint arXiv:2208.05481, 2022.

- H. Chung, E. S. Lee, and J. C. Ye, “MR image denoising and super-resolution using regularized reverse diffusion,” IEEE Transactions on Medical Imaging, vol. 42, no. 4, pp. 922–934, 2022.

- H. Chung and J. C. Ye, “Score-based diffusion models for accelerated MRI,” Medical Image Analysis, vol. 80, p. 102479, 2022.

- A. Güngör, S. U. Dar, Ş. Öztürk, Y. Korkmaz, H. A. Bedel, G. Elmas, M. Ozbey, and T. Çukur, “Adaptive diffusion priors for accelerated MRI reconstruction,” Medical Image Analysis, p. 102872, 2023.

- B. Jing, G. Corso, J. Chang, R. Barzilay, and T. Jaakkola, “Torsional diffusion for molecular conformer generation,” Advances in Neural Information Processing Systems, vol. 35, pp. 24 240–24 253, 2022.

- N. Anand and T. Achim, “Protein structure and sequence generation with equivariant denoising diffusion probabilistic models,” arXiv preprint arXiv:2205.15019, 2022.

- J. S. Lee, J. Kim, and P. M. Kim, “Proteinsgm: Score-based generative modeling for de novo protein design,” bioRxiv, pp. 2022–07, 2022.

- S. Luo, Y. Su, X. Peng, S. Wang, J. Peng, and J. Ma, “Antigen-specific antibody design and optimization with diffusion-based generative models for protein structures,” Advances in Neural Information Processing Systems, vol. 35, pp. 9754–9767, 2022.

- S. Mei, F. Fan, and A. Maier, “Metal inpainting in CBCT projections using score-based generative model,” arXiv preprint arXiv:2209.09733, 2022.

- D. J. Waibel, E. Röoell, B. Rieck, R. Giryes, and C. Marr, “A diffusion model predicts 3d shapes from 2d microscopy images,” arXiv preprint arXiv:2208.14125, 2022.

- J. Ingraham, M. Baranov, Z. Costello, V. Frappier, A. Ismail, S. Tie, W. Wang, V. Xue, F. Obermeyer, A. Beam et al., “Illuminating protein space with a programmable generative model,” BioRxiv, pp. 2022–12, 2022.

- Y. Huang, X. Peng, J. Ma, and M. Zhang, “3DLinker: an E (3) equivariant variational autoencoder for molecular linker design,” arXiv preprint arXiv:2205.07309, 2022.

- A. Schneuing, Y. Du, C. Harris, A. Jamasb, I. Igashov, W. Du, T. Blundell, P. Lió, C. Gomes, M. Welling, M. Bronstein, and B. Correia, “Structure-based drug design with equivariant diffusion models,” arXiv preprint arXiv:2210.13695, 2022.

- L. Wu, C. Gong, X. Liu, M. Ye, and Q. Liu, “Diffusion-based molecule generation with informative prior bridges,” Advances in Neural Information Processing Systems, vol. 35, pp. 36 533–36 545, 2022.

- N. Gruver, S. Stanton, N. C. Frey, T. G. Rudner, I. Hotzel, J. Lafrance-Vanasse, A. Rajpal, K. Cho, and A. G. Wilson, “Protein design with guided discrete diffusion,” arXiv preprint arXiv:2305.20009, 2023.

- T. Weiss, E. Mayo Yanes, S. Chakraborty, L. Cosmo, A. M. Bronstein, and R. Gershoni-Poranne, “Guided diffusion for inverse molecular design,” Nature Computational Science, pp. 1–10, 2023.

- M. Xu, L. Yu, Y. Song, C. Shi, S. Ermon, and J. Tang, “Geodiff: A geometric diffusion model for molecular conformation generation,” arXiv preprint arXiv:2203.02923, 2022.

- Y. Song, L. Shen, L. Xing, and S. Ermon, “Solving inverse problems in medical imaging with score-based generative models,” arXiv preprint arXiv:2111.08005, 2021.

- J. L. Watson, D. Juergens, N. R. Bennett, B. L. Trippe, J. Yim, H. E. Eisenach, W. Ahern, A. J. Borst, R. J. Ragotte, and L. F. Milles, “De novo design of protein structure and function with rfdiffusion,” Nature, vol. 620, no. 7976, pp. 1089–1100, 2023.

- L. Yang, Z. Zhang, Y. Song, S. Hong, R. Xu, Y. Zhao, Y. Shao, W. Zhang, B. Cui, and M.-H. Yang, “Diffusion models: A comprehensive survey of methods and applications,” arXiv preprint arXiv:2209.00796, 2022.

- X. Li, Y. Ren, X. Jin, C. Lan, X. Wang, W. Zeng, X. Wang, and Z. Chen, “Diffusion models for image restoration and enhancement–a comprehensive survey,” arXiv preprint arXiv:2308.09388, 2023.

- Z. Guo, J. Liu, Y. Wang, M. Chen, D. Wang, D. Xu, and J. Cheng, “Diffusion models in bioinformatics: A new wave of deep learning revolution in action,” arXiv preprint arXiv:2302.10907, 2023.

- H. Cao, C. Tan, Z. Gao, Y. Xu, G. Chen, P.-A. Heng, and S. Z. Li, “A survey on generative diffusion model,” arXiv preprint arXiv:2209.02646, 2022.

- F.-A. Croitoru, V. Hondru, R. T. Ionescu, and M. Shah, “Diffusion models in vision: A survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- E. Hoogeboom, D. Nielsen, P. Jaini, P. Forré, and M. Welling, “Argmax flows and multinomial diffusion: Learning categorical distributions,” Advances in Neural Information Processing Systems, vol. 34, pp. 12 454–12 465, 2021.

- J. Austin, D. D. Johnson, J. Ho, D. Tarlow, and R. Van Den Berg, “Structured denoising diffusion models in discrete state-spaces,” Advances in Neural Information Processing Systems, vol. 34, pp. 17 981–17 993, 2021.

- S. Gu, D. Chen, J. Bao, F. Wen, B. Zhang, D. Chen, L. Yuan, and B. Guo, “Vector quantized diffusion model for text-to-image synthesis,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 10 696–10 706.

- Y. Ouyang, L. Xie, C. Li, and G. Cheng, “Missdiff: Training diffusion models on tabular data with missing values,” arXiv preprint arXiv:2307.00467, 2023.

- Y. Song and S. Ermon, “Improved techniques for training score-based generative models,” Advances in neural information processing systems, vol. 33, pp. 12 438–12 448, 2020.

- J. Song, C. Meng, and S. Ermon, “Denoising diffusion implicit models,” arXiv preprint arXiv:2010.02502, 2020.

- R. Rombach, A. Blattmann, D. Lorenz, P. Esser, and B. Ommer, “High-resolution image synthesis with latent diffusion models,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 10 684–10 695.

- Y. Song, P. Dhariwal, M. Chen, and I. Sutskever, “Consistency models,” arXiv preprint arXiv:2303.01469, 2023.

- C. Lu, Y. Zhou, F. Bao, J. Chen, C. Li, and J. Zhu, “Dpm-solver: A fast ode solver for diffusion probabilistic model sampling in around 10 steps,” Advances in Neural Information Processing Systems, vol. 35, pp. 5775–5787, 2022.

- T. Salimans and J. Ho, “Progressive distillation for fast sampling of diffusion models,” arXiv preprint arXiv:2202.00512, 2022.

- T. Karras, M. Aittala, T. Aila, and S. Laine, “Elucidating the design space of diffusion-based generative models,” Advances in Neural Information Processing Systems, vol. 35, pp. 26 565–26 577, 2022.

- Q. Zhang, M. Tao, and Y. Chen, “gddim: Generalized denoising diffusion implicit models,” arXiv preprint arXiv:2206.05564, 2022.

- F. Bao, C. Li, J. Zhu, and B. Zhang, “Analytic-dpm: an analytic estimate of the optimal reverse variance in diffusion probabilistic models,” arXiv preprint arXiv:2201.06503, 2022.

- X. Liu, X. Zhang, J. Ma, J. Peng, and Q. Liu, “Instaflow: One step is enough for high-quality diffusion-based text-to-image generation,” arXiv preprint arXiv:2309.06380, 2023.

- Q. Zhang and Y. Chen, “Fast sampling of diffusion models with exponential integrator,” arXiv preprint arXiv:2204.13902, 2022.

- K. Clark, P. Vicol, K. Swersky, and D. J. Fleet, “Directly fine-tuning diffusion models on differentiable rewards,” arXiv preprint arXiv:2309.17400, 2023.

- K. Lee, H. Liu, M. Ryu, O. Watkins, Y. Du, C. Boutilier, P. Abbeel, M. Ghavamzadeh, and S. S. Gu, “Aligning text-to-image models using human feedback,” arXiv preprint arXiv:2302.12192, 2023.

- X. Wu, K. Sun, F. Zhu, R. Zhao, and H. Li, “Better aligning text-to-image models with human preference,” arXiv preprint arXiv:2303.14420, 2023.

- K. Black, M. Janner, Y. Du, I. Kostrikov, and S. Levine, “Training diffusion models with reinforcement learning,” arXiv preprint arXiv:2305.13301, 2023.

- Y. Fan, O. Watkins, Y. Du, H. Liu, M. Ryu, C. Boutilier, P. Abbeel, M. Ghavamzadeh, K. Lee, and K. Lee, “DPOK: Reinforcement learning for fine-tuning text-to-image diffusion models,” arXiv preprint arXiv:2305.16381, 2023.

- J. Xu, X. Liu, Y. Wu, Y. Tong, Q. Li, M. Ding, J. Tang, and Y. Dong, “Imagereward: Learning and evaluating human preferences for text-to-image generation,” arXiv preprint arXiv:2304.05977, 2023.

- Y. Hao, Z. Chi, L. Dong, and F. Wei, “Optimizing prompts for text-to-image generation,” arXiv preprint arXiv:2212.09611, 2022.

- D. Watson, W. Chan, J. Ho, and M. Norouzi, “Learning fast samplers for diffusion models by differentiating through sample quality,” in International Conference on Learning Representations, 2021.

- B. Wallace, A. Gokul, S. Ermon, and N. Naik, “End-to-end diffusion latent optimization improves classifier guidance,” arXiv preprint arXiv:2303.13703, 2023.

- A. Block, Y. Mroueh, and A. Rakhlin, “Generative modeling with denoising auto-encoders and langevin sampling,” arXiv preprint arXiv:2002.00107, 2020.

- H. Lee, J. Lu, and Y. Tan, “Convergence for score-based generative modeling with polynomial complexity,” arXiv preprint arXiv:2206.06227, 2022.

- S. Chen, S. Chewi, J. Li, Y. Li, A. Salim, and A. R. Zhang, “Sampling is as easy as learning the score: theory for diffusion models with minimal data assumptions,” arXiv preprint arXiv:2209.11215, 2022.

- H. Lee, J. Lu, and Y. Tan, “Convergence of score-based generative modeling for general data distributions,” arXiv preprint arXiv:2209.12381, 2022.

- S. Chen, S. Chewi, H. Lee, Y. Li, J. Lu, and A. Salim, “The probability flow ode is provably fast,” arXiv preprint arXiv:2305.11798, 2023.

- J. Benton, V. De Bortoli, A. Doucet, and G. Deligiannidis, “Linear convergence bounds for diffusion models via stochastic localization,” arXiv preprint arXiv:2308.03686, 2023.

- K. Oko, S. Akiyama, and T. Suzuki, “Diffusion models are minimax optimal distribution estimators,” arXiv preprint arXiv:2303.01861, 2023.

- M. Chen, K. Huang, T. Zhao, and M. Wang, “Score approximation, estimation and distribution recovery of diffusion models on low-dimensional data,” arXiv preprint arXiv:2302.07194, 2023.

- S. Mei and Y. Wu, “Deep networks as denoising algorithms: Sample-efficient learning of diffusion models in high-dimensional graphical models,” arXiv preprint arXiv:2309.11420, 2023.

- W. Tang and H. Zhao, “Score-based diffusion models via stochastic differential equations–a technical tutorial,” arXiv preprint arXiv:2402.07487, 2024.

- A. Q. Nichol and P. Dhariwal, “Improved denoising diffusion probabilistic models,” in Proceedings of the International Conference on Machine Learning. PMLR, 2021, pp. 8162–8171.

- B. D. Anderson, “Reverse-time diffusion equation models,” Stochastic Processes and their Applications, vol. 12, no. 3, pp. 313–326, 1982.

- U. G. Haussmann and E. Pardoux, “Time reversal of diffusions,” The Annals of Probability, pp. 1188–1205, 1986.

- S. Luo, Y. Tan, S. Patil, D. Gu, P. von Platen, A. Passos, L. Huang, J. Li, and H. Zhao, “Lcm-lora: A universal stable-diffusion acceleration module,” arXiv preprint arXiv:2311.05556, 2023.

- Y. Liu, K. Zhang, Y. Li, Z. Yan, C. Gao, R. Chen, Z. Yuan, Y. Huang, H. Sun, and J. Gao, “Sora: A review on background, technology, limitations, and opportunities of large vision models,” arXiv preprint arXiv:2402.17177, 2024.

- P. Esser, S. Kulal, A. Blattmann, R. Entezari, J. Müller, H. Saini, Y. Levi, D. Lorenz, A. Sauer, and F. Boesel, “Scaling rectified flow transformers for high-resolution image synthesis,” arXiv preprint arXiv:2403.03206, 2024.

- J. Ho and T. Salimans, “Classifier-free diffusion guidance,” arXiv preprint arXiv:2207.12598, 2022.

- A. Ramesh, P. Dhariwal, A. Nichol, C. Chu, and M. Chen, “Hierarchical text-conditional image generation with clip latents,” arXiv preprint arXiv:2204.06125, 2022.

- Y. Wang, J. Yu, and J. Zhang, “Zero-shot image restoration using denoising diffusion null-space model,” arXiv preprint arXiv:2212.00490, 2022.

- B. Kawar, M. Elad, S. Ermon, and J. Song, “Denoising diffusion restoration models,” Advances in Neural Information Processing Systems, vol. 35, pp. 23 593–23 606, 2022.

- J. Choi, S. Kim, Y. Jeong, Y. Gwon, and S. Yoon, “ILVR: Conditioning method for denoising diffusion probabilistic models,” arXiv preprint arXiv:2108.02938, 2021.

- C. Saharia, J. Ho, W. Chan, T. Salimans, D. J. Fleet, and M. Norouzi, “Image super-resolution via iterative refinement,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 4, pp. 4713–4726, 2022.

- C. Saharia, W. Chan, H. Chang, C. Lee, J. Ho, T. Salimans, D. Fleet, and M. Norouzi, “Palette: Image-to-image diffusion models,” in ACM SIGGRAPH 2022 Conference Proceedings, 2022, pp. 1–10.

- A. Lugmayr, M. Danelljan, A. Romero, F. Yu, R. Timofte, and L. Van Gool, “Repaint: Inpainting using denoising diffusion probabilistic models,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 11 461–11 471.

- H. Li, Y. Yang, M. Chang, S. Chen, H. Feng, Z. Xu, Q. Li, and Y. Chen, “Srdiff: Single image super-resolution with diffusion probabilistic models,” Neurocomputing, vol. 479, pp. 47–59, 2022.

- J. Whang, M. Delbracio, H. Talebi, C. Saharia, A. G. Dimakis, and P. Milanfar, “Deblurring via stochastic refinement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 16 293–16 303.

- Y.-J. Lu, Y. Tsao, and S. Watanabe, “A study on speech enhancement based on diffusion probabilistic model,” in 2021 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC). IEEE, 2021, pp. 659–666.

- S. Welker, J. Richter, and T. Gerkmann, “Speech enhancement with score-based generative models in the complex stft domain,” arXiv preprint arXiv:2203.17004, 2022.

- J. Richter, S. Welker, J.-M. Lemercier, B. Lay, and T. Gerkmann, “Speech enhancement and dereverberation with diffusion-based generative models,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2023.

- C.-Y. Yu, S.-L. Yeh, G. Fazekas, and H. Tang, “Conditioning and sampling in variational diffusion models for speech super-resolution,” in ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023, pp. 1–5.

- Z. Wang, J. J. Hunt, and M. Zhou, “Diffusion policies as an expressive policy class for offline reinforcement learning,” arXiv preprint arXiv:2208.06193, 2022.

- M. Janner, Q. Li, and S. Levine, “Offline reinforcement learning as one big sequence modeling problem,” Advances in Neural Information Processing Systems, 2021.

- L. Chen, K. Lu, A. Rajeswaran, K. Lee, A. Grover, M. Laskin, P. Abbeel, A. Srinivas, and I. Mordatch, “Decision transformer: Reinforcement learning via sequence modeling,” Advances in neural information processing systems, vol. 34, pp. 15 084–15 097, 2021.

- M. Janner, Y. Du, J. B. Tenenbaum, and S. Levine, “Planning with diffusion for flexible behavior synthesis,” arXiv preprint arXiv:2205.09991, 2022.

- A. Ajay, Y. Du, A. Gupta, J. Tenenbaum, T. Jaakkola, and P. Agrawal, “Is conditional generative modeling all you need for decision-making?” arXiv preprint arXiv:2211.15657, 2022.

- P. Agrawal, A. V. Nair, P. Abbeel, J. Malik, and S. Levine, “Learning to poke by poking: Experiential learning of intuitive physics,” Advances in neural information processing systems, vol. 29, 2016.

- Z. Liang, Y. Mu, M. Ding, F. Ni, M. Tomizuka, and P. Luo, “AdaptDiffuser: Diffusion models as adaptive self-evolving planners,” arXiv preprint arXiv:2302.01877, 2023.

- E. D. Zhong, T. Bepler, J. H. Davis, and B. Berger, “Reconstructing continuous distributions of 3d protein structure from cryo-em images,” arXiv preprint arXiv:1909.05215, 2019.

- E. D. Zhong, T. Bepler, B. Berger, and J. H. Davis, “CryoDRGN: reconstruction of heterogeneous cryo-EM structures using neural networks,” Nature Methods, vol. 18, no. 2, pp. 176–185, 2021.

- J.-E. Shin, A. J. Riesselman, A. W. Kollasch, C. McMahon, E. Simon, C. Sander, A. Manglik, A. C. Kruse, and D. S. Marks, “Protein design and variant prediction using autoregressive generative models,” Nature Communications, vol. 12, no. 1, p. 2403, 2021.

- A. Strokach and P. M. Kim, “Deep generative modeling for protein design,” Current Opinion in Structural Biology, vol. 72, pp. 226–236, 2022.

- B. Trabucco, X. Geng, A. Kumar, and S. Levine, “Design-bench: Benchmarks for data-driven offline model-based optimization,” in Proceedings of the International Conference on Machine Learning. PMLR, 2022, pp. 21 658–21 676.

- A. Kumar and S. Levine, “Model inversion networks for model-based optimization,” Advances in Neural Information Processing Systems, vol. 33, pp. 5126–5137, 2020.

- S. Krishnamoorthy, S. M. Mashkaria, and A. Grover, “Diffusion models for black-box optimization,” arXiv preprint arXiv:2306.07180, 2023.

- H. Yuan, K. Huang, C. Ni, M. Chen, and M. Wang, “Reward-directed conditional diffusion: Provable distribution estimation and reward improvement,” arXiv preprint arXiv:2307.07055, 2023.

- A. Hyvärinen and P. Dayan, “Estimation of non-normalized statistical models by score matching.” Journal of Machine Learning Research, vol. 6, no. 4, 2005.

- P. Vincent, “A connection between score matching and denoising autoencoders,” Neural computation, vol. 23, no. 7, pp. 1661–1674, 2011.

- C.-W. Huang, J. H. Lim, and A. C. Courville, “A variational perspective on diffusion-based generative models and score matching,” Advances in Neural Information Processing Systems, vol. 34, pp. 22 863–22 876, 2021.

- C. Luo, “Understanding diffusion models: A unified perspective,” arXiv preprint arXiv:2208.11970, 2022.

- A. Vahdat, K. Kreis, and J. Kautz, “Score-based generative modeling in latent space,” Advances in Neural Information Processing Systems, vol. 34, pp. 11 287–11 302, 2021.

- O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18. Springer, 2015, pp. 234–241.

- W. Peebles and S. Xie, “Scalable diffusion models with transformers,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 4195–4205.

- A. Gupta, L. Yu, K. Sohn, X. Gu, M. Hahn, L. Fei-Fei, I. Essa, L. Jiang, and J. Lezama, “Photorealistic video generation with diffusion models,” arXiv preprint arXiv:2312.06662, 2023.

- G. Cybenko, “Approximation by superpositions of a sigmoidal function,” Mathematics of Control, Signals and Systems, vol. 2, no. 4, pp. 303–314, 1989.

- K. Hornik, M. Stinchcombe, and H. White, “Universal approximation of an unknown mapping and its derivatives using multilayer feedforward networks,” Neural Networks, vol. 3, no. 5, pp. 551–560, 1990.

- A. R. Barron, “Approximation and estimation bounds for artificial neural networks,” Machine Learning, vol. 14, pp. 115–133, 1994.

- I. Gühring, G. Kutyniok, and P. Petersen, “Error bounds for approximations with deep relu neural networks in w s, p norms,” Analysis and Applications, vol. 18, no. 05, pp. 803–859, 2020.

- D. Yarotsky, “Optimal approximation of continuous functions by very deep relu networks,” in Conference on learning theory. PMLR, 2018, pp. 639–649.

- J. Lu, Z. Shen, H. Yang, and S. Zhang, “Deep network approximation for smooth functions,” SIAM Journal on Mathematical Analysis, vol. 53, no. 5, pp. 5465–5506, 2021.

- A. J. Schmidt-Hieber, “Nonparametric regression using deep neural networks with relu activation function,” Annals of Statistics, vol. 48, no. 4, pp. 1875–1897, 2020.

- M. Chen, H. Jiang, W. Liao, and T. Zhao, “Efficient approximation of deep relu networks for functions on low dimensional manifolds,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- K. Y. Yang and A. Wibisono, “Convergence in kl and rényi divergence of the unadjusted langevin algorithm using estimated score,” in NeurIPS 2022 Workshop on Score-Based Methods, 2022.

- A. Wibisono, Y. Wu, and K. Y. Yang, “Optimal score estimation via empirical bayes smoothing,” arXiv preprint arXiv:2402.07747, 2024.

- K. Shah, S. Chen, and A. Klivans, “Learning mixtures of gaussians using the ddpm objective,” arXiv preprint arXiv:2307.01178, 2023.

- Y. Han, M. Razaviyayn, and R. Xu, “Neural network-based score estimation in diffusion models: Optimization and generalization,” arXiv preprint arXiv:2401.15604, 2024.

- S. Geman and C. Graffigne, “Markov random field image models and their applications to computer vision,” in Proceedings of the international congress of mathematicians, vol. 1. Berkeley, CA, 1986, p. 2.

- M. Ranzato, A. Krizhevsky, and G. Hinton, “Factored 3-way restricted boltzmann machines for modeling natural images,” in Proceedings of the thirteenth international conference on artificial intelligence and statistics. JMLR Workshop and Conference Proceedings, 2010, pp. 621–628.

- R. Eldan, “Gaussian-width gradient complexity, reverse log-sobolev inequalities and nonlinear large deviations,” Geometric and Functional Analysis, vol. 28, no. 6, pp. 1548–1596, 2018.

- M. Celentano, Z. Fan, and S. Mei, “Local convexity of the tap free energy and amp convergence for z 2-synchronization,” The Annals of Statistics, vol. 51, no. 2, pp. 519–546, 2023.

- V. De Bortoli, J. Thornton, J. Heng, and A. Doucet, “Diffusion schrödinger bridge with applications to score-based generative modeling,” Advances in Neural Information Processing Systems, vol. 34, pp. 17 695–17 709, 2021.

- M. S. Albergo, N. M. Boffi, and E. Vanden-Eijnden, “Stochastic interpolants: A unifying framework for flows and diffusions,” arXiv preprint arXiv:2303.08797, 2023.

- J. Benton, V. De Bortoli, A. Doucet, and G. Deligiannidis, “Nearly d𝑑ditalic_d-linear convergence bounds for diffusion models via stochastic localization,” in Proceedings of the International Conference on Learning Representations, 2024.

- S. Chen, G. Daras, and A. Dimakis, “Restoration-degradation beyond linear diffusions: A non-asymptotic analysis for ddim-type samplers,” in International Conference on Machine Learning. PMLR, 2023, pp. 4462–4484.

- G. Li, Y. Huang, T. Efimov, Y. Wei, Y. Chi, and Y. Chen, “Accelerating convergence of score-based diffusion models, provably,” arXiv preprint arXiv:2403.03852, 2024.

- G. Li, Y. Wei, Y. Chen, and Y. Chi, “Towards faster non-asymptotic convergence for diffusion-based generative models,” arXiv preprint arXiv:2306.09251, 2023.

- V. De Bortoli, “Convergence of denoising diffusion models under the manifold hypothesis,” arXiv preprint arXiv:2208.05314, 2022.

- A. Montanari and Y. Wu, “Posterior sampling from the spiked models via diffusion processes,” arXiv preprint arXiv:2304.11449, 2023.

- A. El Alaoui, A. Montanari, and M. Sellke, “Sampling from mean-field gibbs measures via diffusion processes,” arXiv preprint arXiv:2310.08912, 2023.

- R. Eldan, “Thin shell implies spectral gap up to polylog via a stochastic localization scheme,” Geometric and Functional Analysis, vol. 23, no. 2, pp. 532–569, 2013.

- A. Montanari, “Sampling, diffusions, and stochastic localization,” arXiv preprint arXiv:2305.10690, 2023.

- Y. Chen and R. Eldan, “Localization schemes: A framework for proving mixing bounds for markov chains,” in 2022 IEEE 63rd Annual Symposium on Foundations of Computer Science (FOCS). IEEE, 2022, pp. 110–122.

- A. El Alaoui and A. Montanari, “An information-theoretic view of stochastic localization,” IEEE Transactions on Information Theory, vol. 68, no. 11, pp. 7423–7426, 2022.

- A. El Alaoui, A. Montanari, and M. Sellke, “Sampling from the sherrington-kirkpatrick gibbs measure via algorithmic stochastic localization,” in 2022 IEEE 63rd Annual Symposium on Foundations of Computer Science (FOCS). IEEE, 2022, pp. 323–334.

- D. Ghio, Y. Dandi, F. Krzakala, and L. Zdeborova, “Sampling with flows, diffusion and autoregressive neural networks: A spin-glass perspective,” arXiv preprint arXiv:2308.14085, 2023.

- Y. Song, S. Garg, J. Shi, and S. Ermon, “Sliced score matching: A scalable approach to density and score estimation,” in Uncertainty in Artificial Intelligence. PMLR, 2020, pp. 574–584.

- X. Liu, L. Wu, M. Ye, and Q. Liu, “Let us build bridges: Understanding and extending diffusion generative models,” arXiv preprint arXiv:2208.14699, 2022.

- R. Eldan, “Taming correlations through entropy-efficient measure decompositions with applications to mean-field approximation,” Probability Theory and Related Fields, vol. 176, no. 3, pp. 737–755, 2020.

- ——, “Analysis of high-dimensional distributions using pathwise methods,” in Proc. Int. Cong. Math, vol. 6, 2022, pp. 4246–4270.

- Y. T. Lee and S. S. Vempala, “Eldan’s stochastic localization and the KLS conjecture: Isoperimetry, concentration and mixing,” arXiv preprint arXiv:1612.01507, 2016.

- Y. Chen, “An almost constant lower bound of the isoperimetric coefficient in the KLS conjecture,” Geometric and Functional Analysis, vol. 31, pp. 34–61, 2021.

- Z. Li, H. Yuan, K. Huang, C. Ni, Y. Ye, M. Chen, and M. Wang, “Diffusion model for data-driven black-box optimization,” arXiv preprint arXiv:2403.13219, 2024.

- P. Dhariwal and A. Nichol, “Diffusion models beat GANs on image synthesis,” Advances in Neural Information Processing Systems, vol. 34, pp. 8780–8794, 2021.

- A. Brock, J. Donahue, and K. Simonyan, “Large scale GAN training for high fidelity natural image synthesis,” arXiv preprint arXiv:1809.11096, 2018.

- D. P. Kingma and P. Dhariwal, “Glow: Generative flow with invertible 1x1 convolutions,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- Y. Wu, M. Chen, Z. Li, M. Wang, and Y. Wei, “Theoretical insights for diffusion guidance: A case study for Gaussian mixture models,” arXiv preprint arXiv:2403.01639, 2024.

- M. Uehara, Y. Zhao, K. Black, E. Hajiramezanali, G. Scalia, N. L. Diamant, A. M. Tseng, S. Levine, and T. Biancalani, “Feedback efficient online fine-tuning of diffusion models,” arXiv preprint arXiv:2402.16359, 2024.

- W. Tang, “Fine-tuning of diffusion models via stochastic control: entropy regularization and beyond,” arXiv preprint arXiv:2403.06279, 2024.

- M. Uehara, Y. Zhao, K. Black, E. Hajiramezanali, G. Scalia, N. L. Diamant, A. M. Tseng, T. Biancalani, and S. Levine, “Fine-tuning of continuous-time diffusion models as entropy-regularized control,” arXiv preprint arXiv:2402.15194, 2024.

- H. Fu, Z. Yang, M. Wang, and M. Chen, “Unveil conditional diffusion models with classifier-free guidance: A sharp statistical theory,” arXiv preprint arXiv:2403.11968, 2024.

- H. Chung, J. Kim, M. T. Mccann, M. L. Klasky, and J. C. Ye, “Diffusion posterior sampling for general noisy inverse problems,” arXiv preprint arXiv:2209.14687, 2022.

- L. Rout, N. Raoof, G. Daras, C. Caramanis, A. Dimakis, and S. Shakkottai, “Solving linear inverse problems provably via posterior sampling with latent diffusion models,” Advances in Neural Information Processing Systems, vol. 36, 2024.

- H. Chung, B. Sim, D. Ryu, and J. C. Ye, “Improving diffusion models for inverse problems using manifold constraints,” Advances in Neural Information Processing Systems, vol. 35, pp. 25683–25696, 2022.

- Y. Pan and D. Wu, “A novel recommendation model for online-to-offline service based on the customer network and service location,” Journal of Management Information Systems, vol. 37, no. 2, pp. 563–593, 2020.

- Y. Pan, D. Wu, C. Luo, and A. Dolgui, “User activity measurement in rating-based online-to-offline (O2O) service recommendation,” Information Sciences, vol. 479, pp. 180–196, 2019.

- J. Fu and S. Levine, “Offline model-based optimization via normalized maximum likelihood estimation,” arXiv preprint arXiv:2102.07970, 2021.

- J. Bu, D. Simchi-Levi, and L. Wang, “Offline pricing and demand learning with censored data,” Management Science, vol. 69, no. 2, pp. 885–903, 2023.

- T. Nguyen-Tang, S. Gupta, A. T. Nguyen, and S. Venkatesh, “Offline neural contextual bandits: Pessimism, optimization and generalization,” arXiv preprint arXiv:2111.13807, 2021.

- Y. Jin, Z. Yang, and Z. Wang, “Is pessimism provably efficient for offline RL?” in International Conference on Machine Learning. PMLR, 2021, pp. 5084–5096.

- R. A. Bradley and M. E. Terry, “Rank analysis of incomplete block designs: I. the method of paired comparisons,” Biometrika, vol. 39, no. 3/4, pp. 324–345, 1952.

- B. Zhu, M. Jordan, and J. Jiao, “Principled reinforcement learning with human feedback from pairwise or k-wise comparisons,” in Proceedings of the International Conference on Machine Learning. PMLR, 2023, pp. 43037–43067.

- A. Lou and S. Ermon, “Reflected diffusion models,” arXiv preprint arXiv:2304.04740, 2023.

- W. Nie, B. Guo, Y. Huang, C. Xiao, A. Vahdat, and A. Anandkumar, “Diffusion models for adversarial purification,” arXiv preprint arXiv:2205.07460, 2022.

- Q. Wu, H. Ye, and Y. Gu, “Guided diffusion model for adversarial purification from random noise,” arXiv preprint arXiv:2206.10875, 2022.

- N. Carlini, F. Tramer, K. D. Dvijotham, L. Rice, M. Sun, and J. Z. Kolter, “(certified!!) adversarial robustness for free!” arXiv preprint arXiv:2206.10550, 2022.

- C. Xiao, Z. Chen, K. Jin, J. Wang, W. Nie, M. Liu, A. Anandkumar, B. Li, and D. Song, “Densepure: Understanding diffusion models for adversarial robustness,” in The Eleventh International Conference on Learning Representations, 2022.

- E. Delage and Y. Ye, “Distributionally robust optimization under moment uncertainty with application to data-driven problems,” Operations Research, vol. 58, no. 3, pp. 595–612, 2010.

- D. Kuhn, P. M. Esfahani, V. A. Nguyen, and S. Shafieezadeh-Abadeh, “Wasserstein distributionally robust optimization: Theory and applications in machine learning,” in Operations Research & Management Science in the Age of Analytics. INFORMS, 2019, pp. 130–166.

- J. Goh and M. Sim, “Distributionally robust optimization and its tractable approximations,” Operations Research, vol. 58, no. 4-part-1, pp. 902–917, 2010.

- H. Rahimian and S. Mehrotra, “Distributionally robust optimization: A review,” arXiv preprint arXiv:1908.05659, 2019.

- C. Xu, J. Lee, X. Cheng, and Y. Xie, “Flow-based distributionally robust optimization,” IEEE Journal on Selected Areas in Information Theory, 2024.

- C. Meng, K. Choi, J. Song, and S. Ermon, “Concrete score matching: Generalized score matching for discrete data,” Advances in Neural Information Processing Systems, vol. 35, pp. 34532–34545, 2022.

- A. Campbell, J. Benton, V. De Bortoli, T. Rainforth, G. Deligiannidis, and A. Doucet, “A continuous time framework for discrete denoising models,” Advances in Neural Information Processing Systems, vol. 35, pp. 28266–28279, 2022.

- J. Benton, Y. Shi, V. De Bortoli, G. Deligiannidis, and A. Doucet, “From denoising diffusions to denoising Markov models,” arXiv preprint arXiv:2211.03595, 2022.

- J. E. Santos, Z. R. Fox, N. Lubbers, and Y. T. Lin, “Blackout diffusion: generative diffusion models in discrete-state spaces,” in Proceedings of the International Conference on Machine Learning. PMLR, 2023, pp. 9034–9059.

- A. Lou, C. Meng, and S. Ermon, “Discrete diffusion language modeling by estimating the ratios of the data distribution,” arXiv preprint arXiv:2310.16834, 2023.

- H. Sun, L. Yu, B. Dai, D. Schuurmans, and H. Dai, “Score-based continuous-time discrete diffusion models,” arXiv preprint arXiv:2211.16750, 2022.

- Y. Li, J. Guo, R. Wang, and J. Yan, “From distribution learning in training to gradient search in testing for combinatorial optimization,” Advances in Neural Information Processing Systems, vol. 36, 2024.

- H. Chen and L. Ying, “Convergence analysis of discrete diffusion model: Exact implementation through uniformization,” arXiv preprint arXiv:2402.08095, 2024.
