RiskLabs: Predicting Financial Risk Using Large Language Model based on Multimodal and Multi-Sources Data (2404.07452v2)

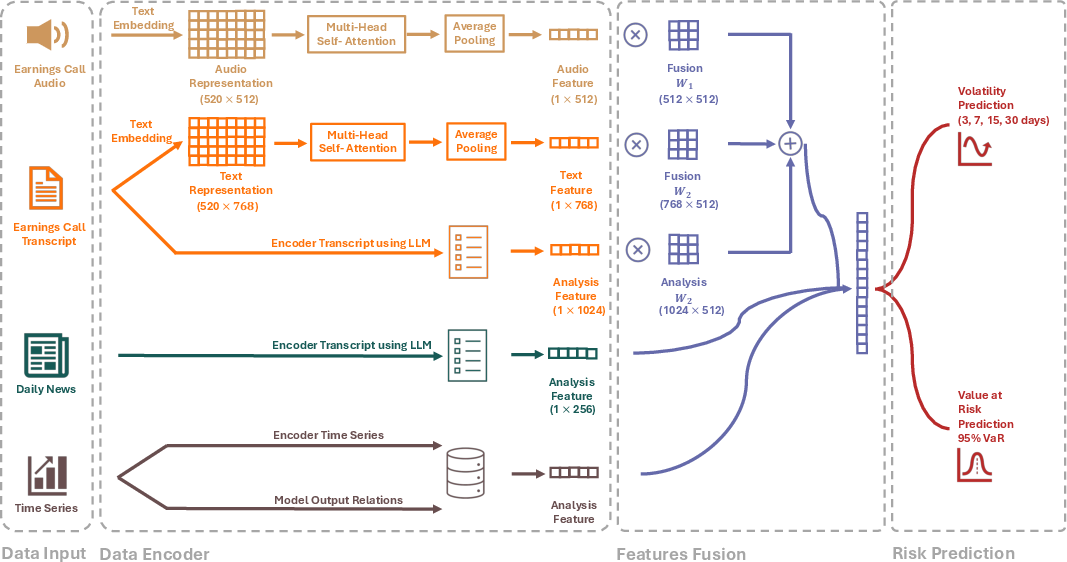

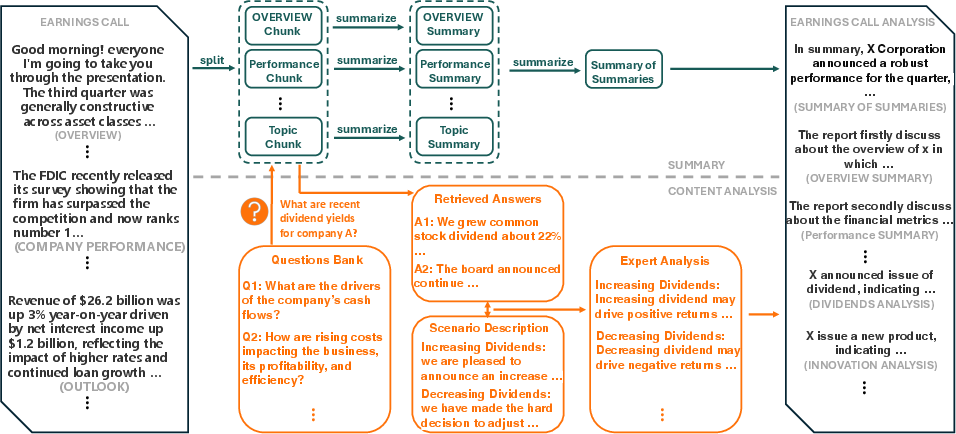

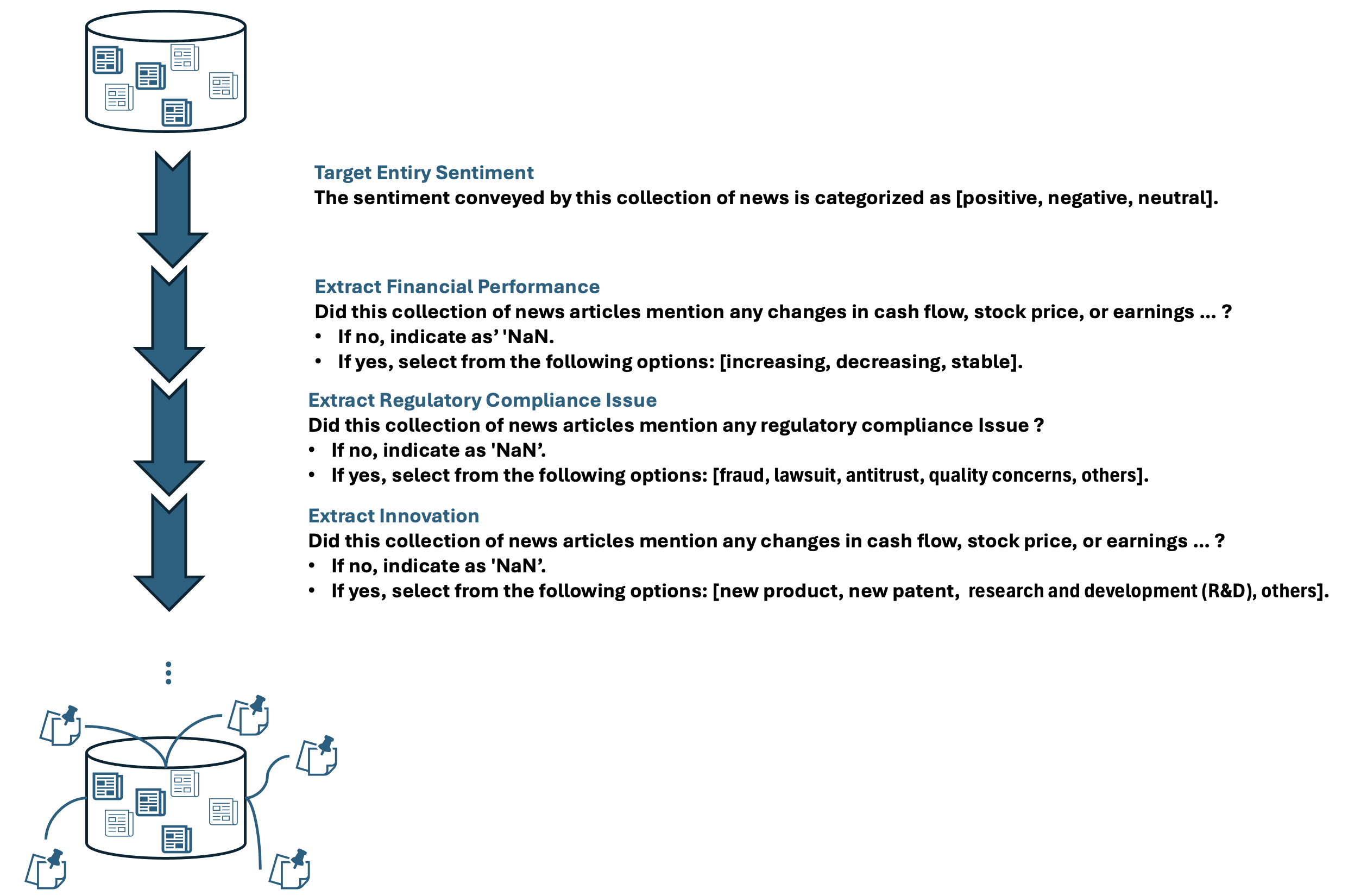

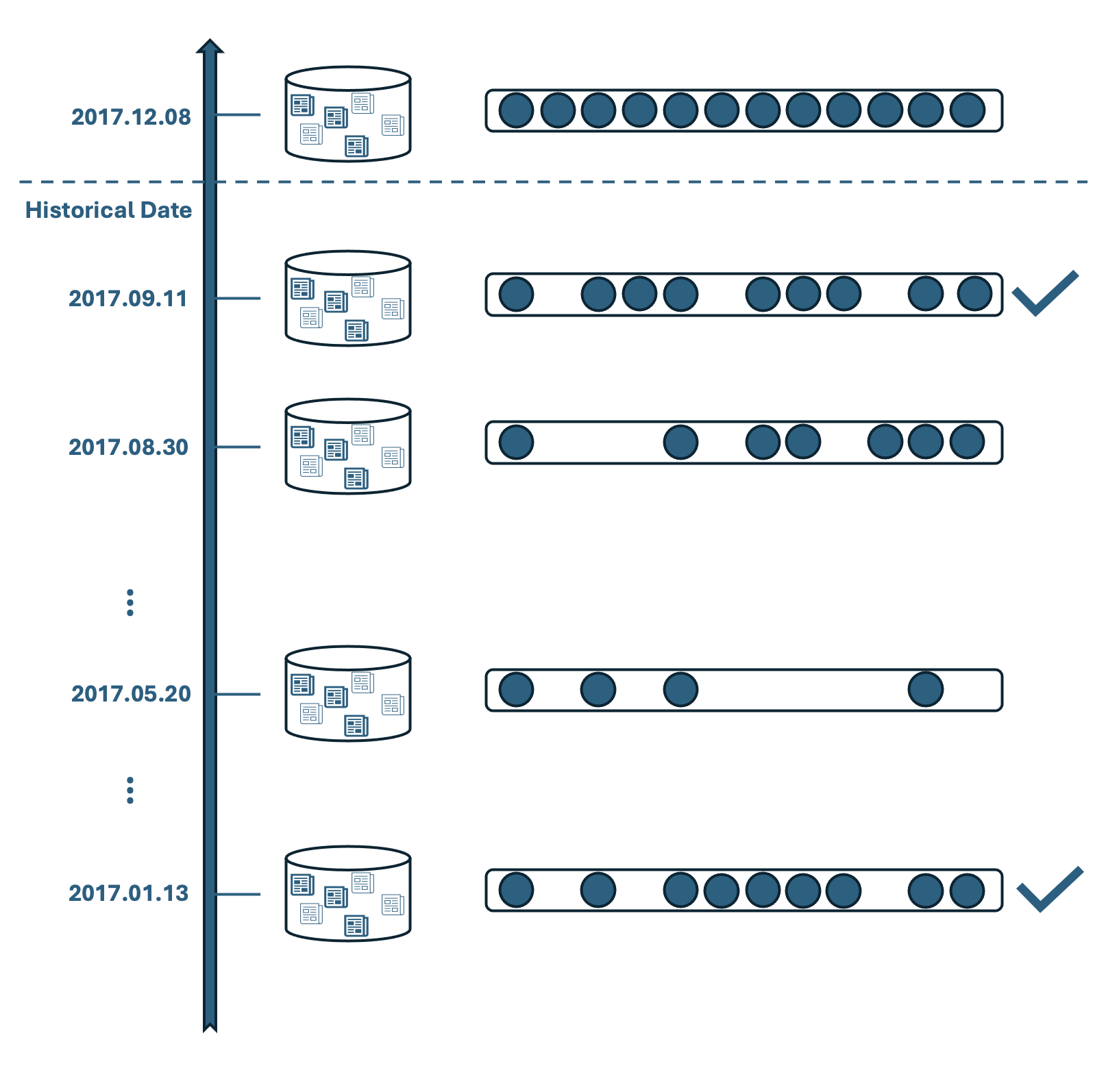

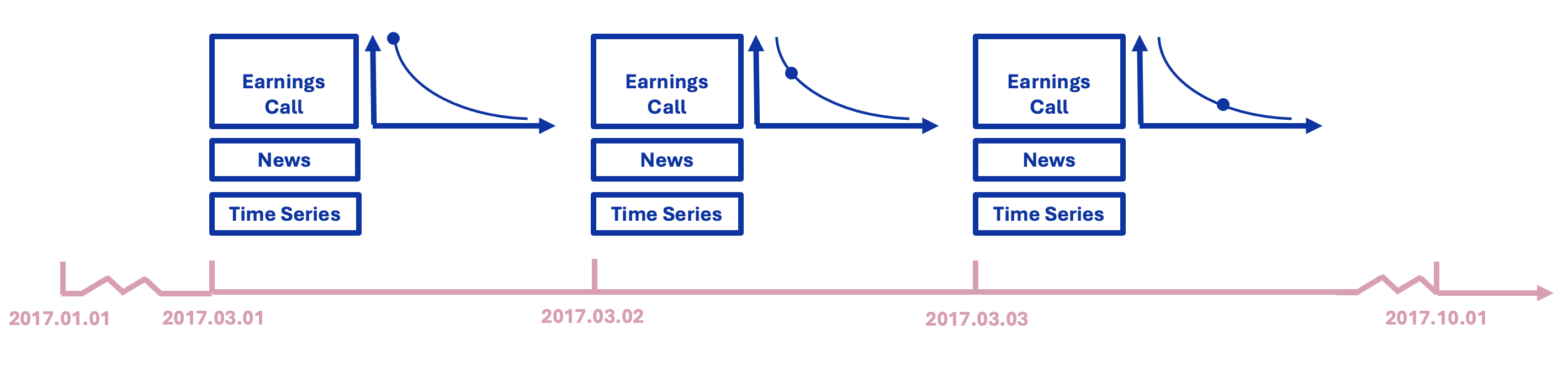

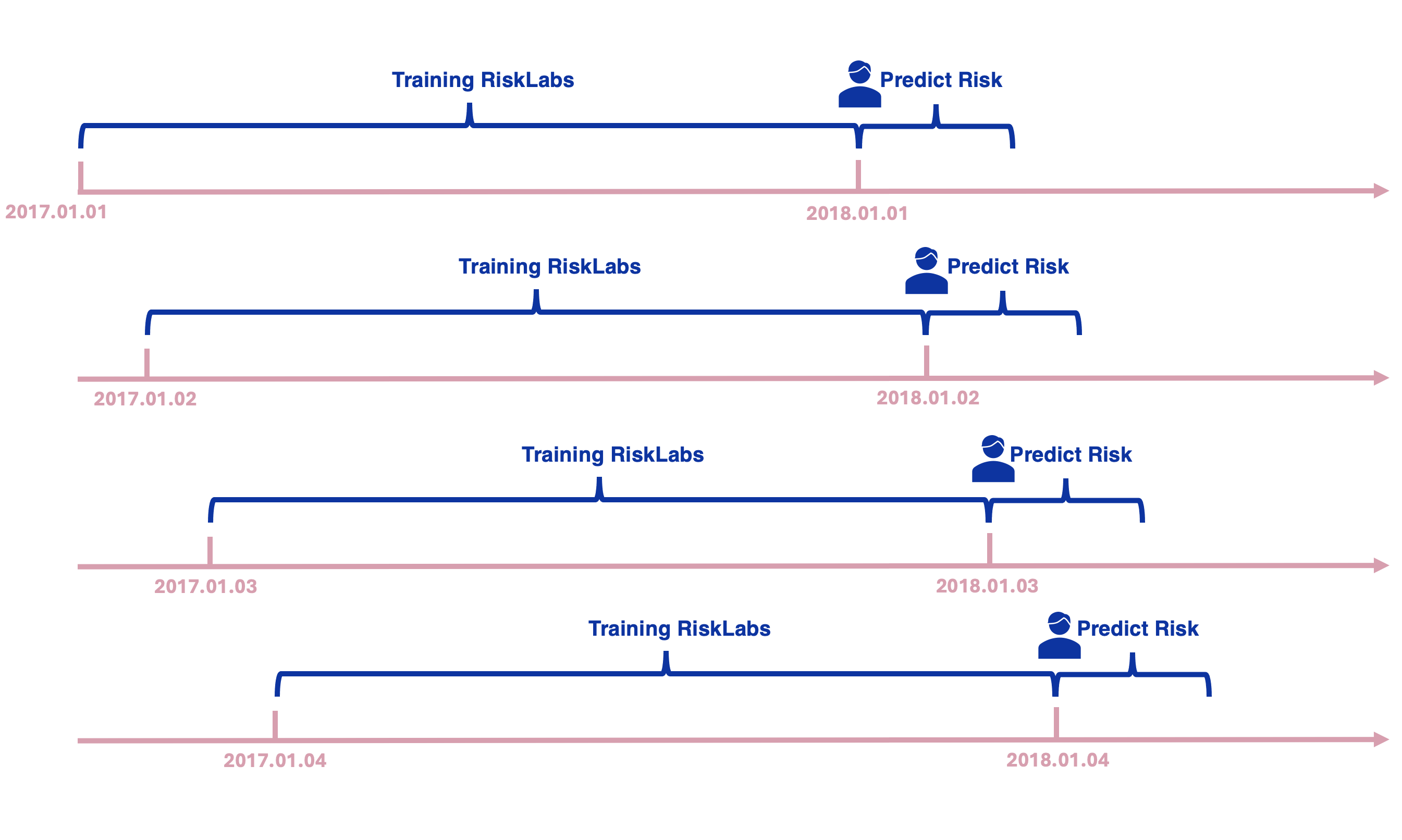

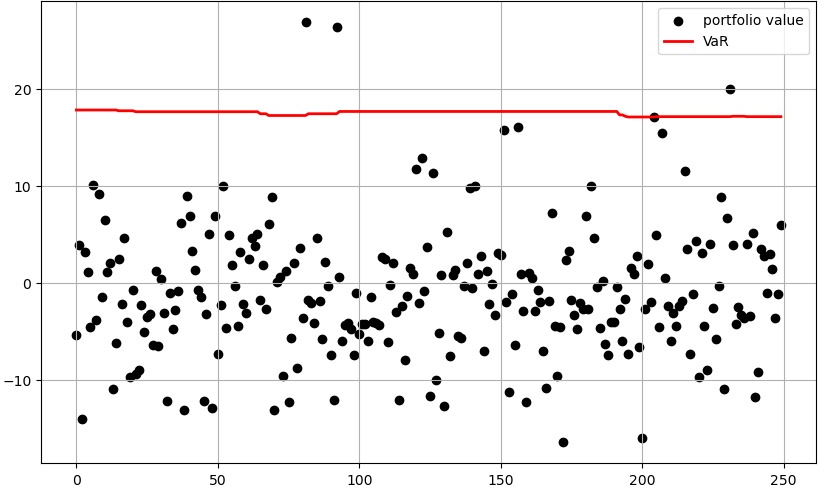

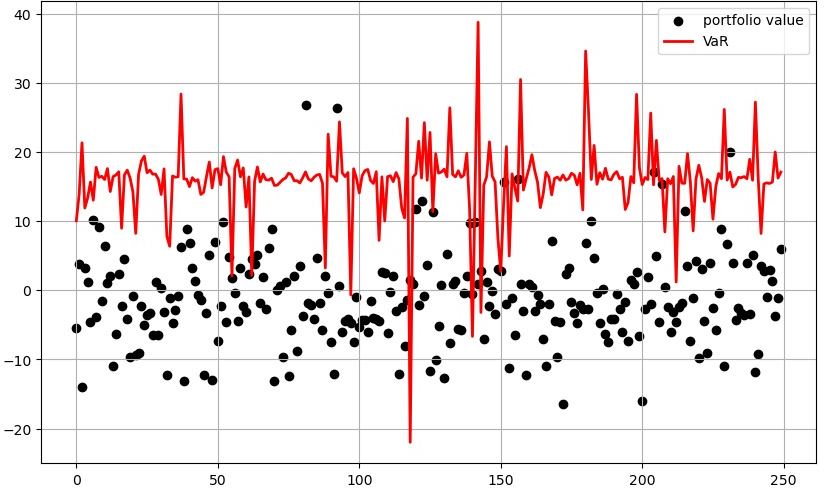

Abstract: The integration of AI techniques, particularly LLMs, in finance has garnered increasing academic attention. Despite this progress, existing studies predominantly focus on tasks such as financial text summarization, question answering, and stock movement prediction (binary classification), while the application of LLMs to financial risk prediction remains underexplored. To address this gap, we introduce RiskLabs, a novel framework that leverages LLMs to analyze and predict financial risks. RiskLabs integrates multimodal financial data, including textual and vocal information from Earnings Conference Calls (ECCs), market-related time series data, and contextual news data, to improve financial risk prediction. Empirical results demonstrate RiskLabs' effectiveness in forecasting both market volatility and variance. Through comparative experiments, we examine the contributions of different data sources to financial risk assessment and highlight the crucial role of LLMs in this process. We also discuss the challenges of using LLMs for financial risk prediction and explore the potential of combining them with multimodal data for this purpose.

