- The paper introduces RoT, enhancing LLMs by using reflections on search trees to prevent repeated errors in multi-step reasoning.

- RoT employs a state selection strategy to identify critical experience states that inform guideline summarization for refined decision-making.

- Experiments in Blocksworld and CraigslistBargain show RoT improves search efficiency and strategic planning, outperforming non-tree-search methods.

Reflection on Search Trees: Enhancing LLMs

This essay dissects the approach and findings presented in the paper "RoT: Enhancing LLMs with Reflection on Search Trees" (2404.05449). It describes Reflection on search Trees (RoT), a framework that draws on past experiences from tree-search-based prompting methods to improve the reasoning and planning capabilities of LLMs.

Introduction

Recent work has shown that tree-search-based prompting methods significantly enhance LLMs on complex tasks that require multi-step reasoning and planning. These methods decompose a problem into sequential steps, each consisting of an action and the resulting state transition. However, conventional tree-search methods do not leverage past search experiences, so they tend to repeat the same mistakes. RoT addresses this limitation by reflecting on previous search processes to prevent such errors and improve accuracy.

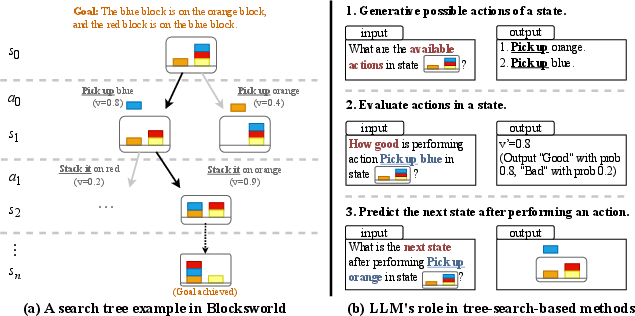

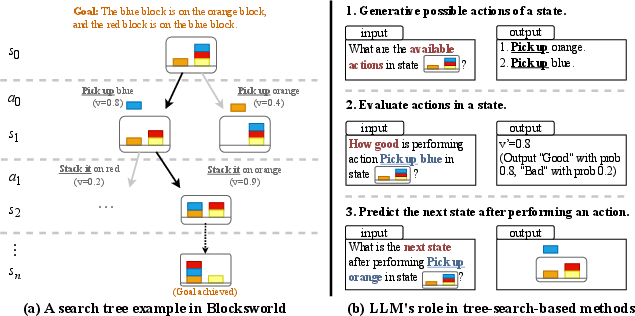

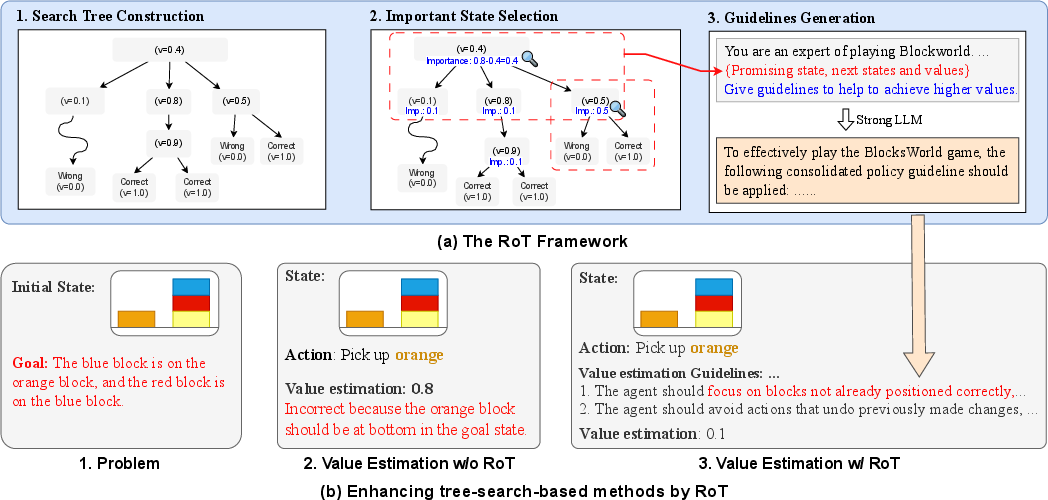

Figure 1: An illustration of a tree-search-based prompting method in Blocksworld. a_i and s_i denote the action and state at depth i. v is the estimated value of an action by the tree search algorithm (the value estimate in BFS, and the average estimated value of its children in MCTS).
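To make the two value conventions in the caption concrete, here is a minimal Python sketch. The `SearchNode` class and `mcts_value` function are hypothetical names, and averaging recursively over the subtree is only one literal reading of "average estimated value of children", not necessarily how the paper's MCTS backs up values. In BFS, the evaluator's estimate would be used directly.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SearchNode:
    """Hypothetical tree node: one action and the subtree it leads to."""
    action: str
    estimate: float = 0.0  # evaluator's value estimate, used directly at leaves (and in BFS)
    children: List["SearchNode"] = field(default_factory=list)


def mcts_value(node: SearchNode) -> float:
    """Assumed MCTS-style value: a leaf keeps its estimate, an inner node
    takes the mean of its children's values (computed recursively)."""
    if not node.children:
        return node.estimate
    return sum(mcts_value(c) for c in node.children) / len(node.children)


# Toy tree: action a1 has two explored follow-up actions.
a1 = SearchNode("a1", children=[SearchNode("a2", 0.2), SearchNode("a2_alt", 0.6)])
v_a1 = mcts_value(a1)  # mean of 0.2 and 0.6
```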

Core Framework: Reflection on Search Trees

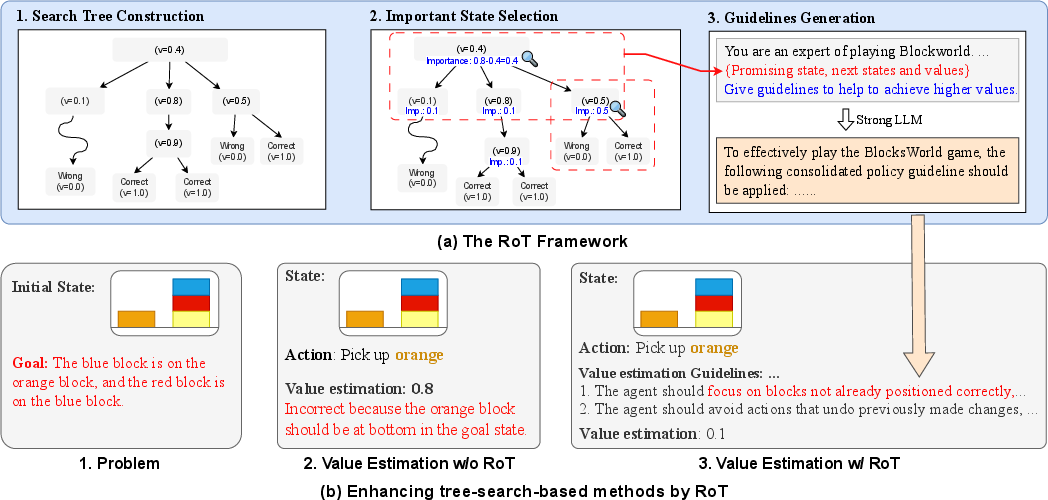

RoT integrates past search experiences to form guidelines from which LLMs can learn, thereby enhancing future decisions. The framework operates by identifying critical states from historical search processes and summarizing these into actionable insights for subsequent tasks.
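The overall loop can be sketched in Python as below. This is a minimal sketch under stated assumptions: `search`, `select_states`, and `summarize` are hypothetical callables standing in for the tree search, the state selection step, and the LLM that writes guidelines, and we assume the summarizer sees the previous guidelines so that repeated rounds refine rather than replace them.

```python
from typing import Any, Callable, List, Tuple

# Hypothetical signatures for the three stages of one RoT round.
SearchFn = Callable[[str, str], Any]           # (task, guidelines) -> search tree
SelectFn = Callable[[Any], List[Any]]          # search tree -> important states
SummarizeFn = Callable[[List[Any], str], str]  # (states, old guidelines) -> new guidelines


def reflect_on_search_trees(task: str,
                            search: SearchFn,
                            select_states: SelectFn,
                            summarize: SummarizeFn,
                            rounds: int = 3) -> Tuple[str, List[str]]:
    """Alternate between guided search and reflection for a fixed number of rounds."""
    guidelines = ""  # the first search runs without guidelines
    history: List[str] = []
    for _ in range(rounds):
        tree = search(task, guidelines)                # search, prompted with current guidelines
        important = select_states(tree)                # keep only the pivotal states
        guidelines = summarize(important, guidelines)  # reflect and refine the guidelines
        history.append(guidelines)
    return guidelines, history


# Toy stand-ins: each reflection round appends one "lesson" to the guidelines.
final, history = reflect_on_search_trees(
    "blocksworld instance",
    search=lambda task, g: {"task": task, "guidelines": g},
    select_states=lambda tree: [tree],
    summarize=lambda states, old: old + "L",
)
```

Returning `history` is a design choice for this sketch; it makes it easy to inspect how the guidelines change from round to round.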

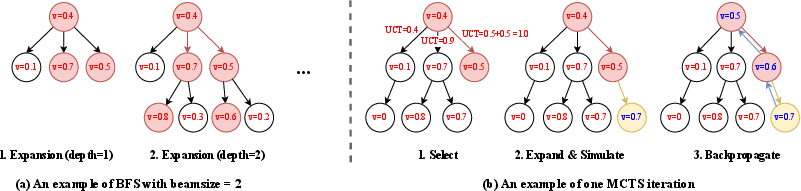

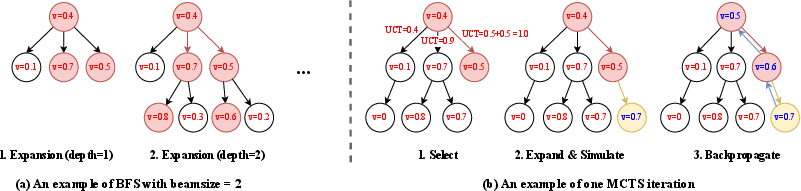

Tree-Search-Based Methods

Tree-search methods such as BFS and MCTS are used to explore paths toward the best solution in reasoning tasks. They use LLMs to generate candidate actions, predict the resulting states, and estimate the value of each action. Combining tree search with LLMs in this way helps on multi-step tasks such as Blocksworld and GSM8k.
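The three roles the LLM plays can be seen in a minimal beam-limited BFS sketch. This is a simplification, not the paper's implementation: `propose`, `transition`, and `value` are hypothetical stand-ins for LLM calls, and here they are replaced by deterministic rules on a toy arithmetic puzzle so the code runs offline.

```python
from typing import Callable, List, Tuple

State = int
Action = str


def bfs_search(start: State,
               propose: Callable[[State], List[Action]],
               transition: Callable[[State, Action], State],
               value: Callable[[State, Action], float],
               is_goal: Callable[[State], bool],
               beam_width: int = 2,
               max_depth: int = 6) -> List[Action]:
    """Breadth-first search limited to the top `beam_width` nodes per depth."""
    frontier: List[Tuple[State, List[Action]]] = [(start, [])]
    for _ in range(max_depth):
        candidates = []
        for state, plan in frontier:
            for action in propose(state):        # role 1: generate actions
                nxt = transition(state, action)  # role 2: predict the next state
                score = value(state, action)     # role 3: estimate the action's value
                candidates.append((score, nxt, plan + [action]))
        candidates.sort(key=lambda c: c[0], reverse=True)  # stable, so ties keep order
        frontier = [(s, p) for _, s, p in candidates[:beam_width]]
        for state, plan in frontier:
            if is_goal(state):
                return plan
    return []


# Toy task in place of an LLM: reach 10 from 1 using "+1" and "*2".
def step(s: State, a: Action) -> State:
    return s + 1 if a == "+1" else s * 2


plan = bfs_search(
    start=1,
    propose=lambda s: ["+1", "*2"],
    transition=step,
    value=lambda s, a: -abs(10 - step(s, a)),
    is_goal=lambda s: s == 10,
)
```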

State Selection Strategy

The framework introduces a state selection mechanism that identifies the states most pivotal to the search outcome. A state's importance is computed from how much the estimated value changes with the actions taken from it. Focusing the reflection on these significant experiences keeps the process efficient.
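One way to turn "changes in state values resulting from specific actions" into code is sketched below. The importance score (the largest absolute gap between the value of the action that reached a state and the values of the actions taken from it), the `threshold`, and the top-k cut-off are all assumptions for illustration; the paper's exact definition may differ.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    state: str
    value: float  # estimated value of the action that led to this state
    children: List["Node"] = field(default_factory=list)


def importance(node: Node) -> float:
    """Assumed score: the largest value change between the action into this
    state and any action taken from it. Leaves score 0."""
    if not node.children:
        return 0.0
    return max(abs(child.value - node.value) for child in node.children)


def select_important_states(root: Node, k: int = 2, threshold: float = 0.1) -> List[Node]:
    """Visit every node, keep those scoring above `threshold`, return the top k."""
    stack, nodes = [root], []
    while stack:
        node = stack.pop()
        nodes.append(node)
        stack.extend(node.children)
    kept = [n for n in nodes if importance(n) > threshold]
    kept.sort(key=importance, reverse=True)
    return kept[:k]


# Toy tree: s0's two actions diverge sharply; s1 has one clearly better action.
tree = Node("s0", 0.5, [
    Node("s1", 0.55, [Node("s3", 0.9), Node("s4", 0.6)]),
    Node("s2", 0.1, [Node("s5", 0.12)]),
])
picked = [n.state for n in select_important_states(tree)]
```

Here s2 is dropped because its only action barely changes the value, which is the kind of uninformative experience the selection step is meant to filter out.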

Figure 2: The RoT framework.

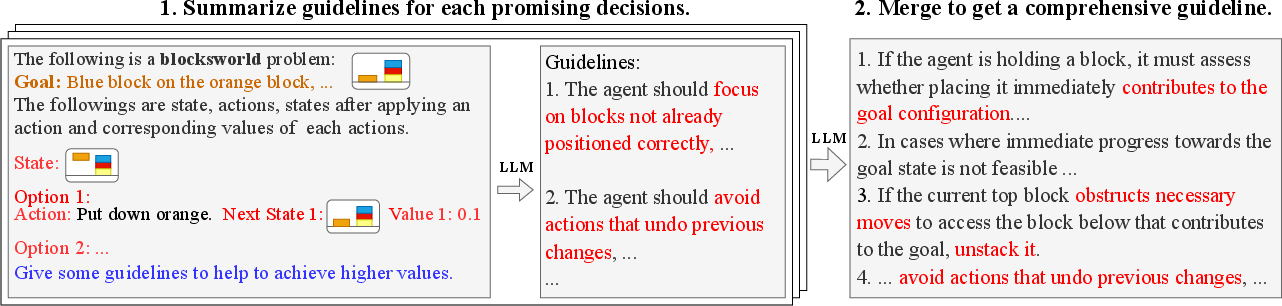

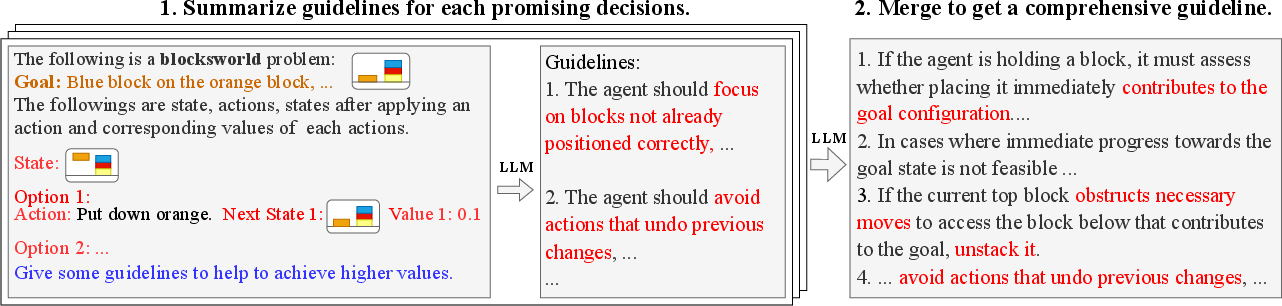

Guideline Summarization

Guidelines are generated by contrasting the actions taken at an important state and comparing the values of the states they lead to. These guidelines can improve later searches with tree-search-based prompting methods and can also be supplied to non-tree-search-based prompting methods. The paper further applies RoT iteratively, refining the strategy in a manner inspired by expert iteration algorithms: guidelines from one round guide the next search, whose tree is then reflected on in turn.
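A hypothetical sketch of how the contrast at one important state could be packaged for the summarizing LLM follows. The prompt wording, the `build_reflection_prompt` name, and the optional `previous_guidelines` argument (which supports the iterative use described above) are assumptions, not the paper's actual prompt.

```python
from typing import List, Tuple


def build_reflection_prompt(state: str,
                            outcomes: List[Tuple[str, float]],
                            previous_guidelines: str = "") -> str:
    """Format one important state and its contrasting actions for a summarizer LLM."""
    if not outcomes:
        raise ValueError("need at least one (action, value) pair to contrast")
    ranked = sorted(outcomes, key=lambda o: o[1], reverse=True)  # best action first
    lines = [f"State: {state}",
             "Actions tried from this state, with estimated values:"]
    lines += [f"- {action}: {value:.2f}" for action, value in ranked]
    lines.append(f"Best action: {ranked[0][0]}. Worst action: {ranked[-1][0]}.")
    if previous_guidelines:
        lines.append(f"Current guidelines:\n{previous_guidelines}")
    lines.append("Write brief, general guidelines that lead to the better choice.")
    return "\n".join(lines)


prompt = build_reflection_prompt(
    "blue block on red block, red block on table, hand empty",
    [("pick up red block", 0.10), ("unstack blue block from red block", 0.80)],
)
```

Ranking the actions before listing them puts the contrast the summarizer should explain at the top of the prompt.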

Figure 3: Guideline Summarization.

Experiments

RoT showcases significant improvements across diverse tasks:

- Blocksworld: RoT enhances traditional tree-search methods like BFS and MCTS, achieving substantial performance gains even in complex multi-step tasks.

- GSM8k: Improvements are smaller here. RoT helps with the logical reasoning steps, but arithmetic accuracy remains a bottleneck.

- CraigslistBargain: RoT markedly improves negotiation success rates, demonstrating its utility in strategic decision-making tasks requiring adaptive planning.

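Across these domains, RoT outperforms non-tree-search-based baselines, and its guidelines can also be given to non-tree-search-based prompting methods such as Chain-of-Thought (CoT), which benefit from the task-specific knowledge collected during search.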

Figure 4: Examples of BFS and MCTS.

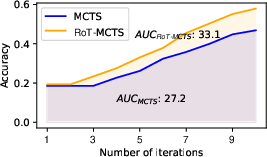

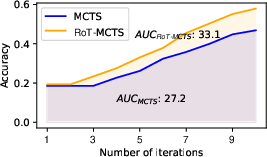

Figure 5: AUC on the step-6 split of Blocksworld using phi-2.

Search Efficiency and Analysis

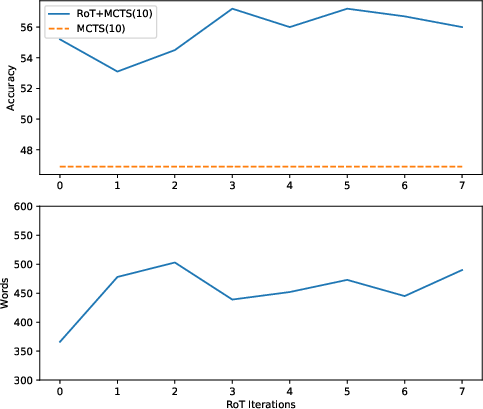

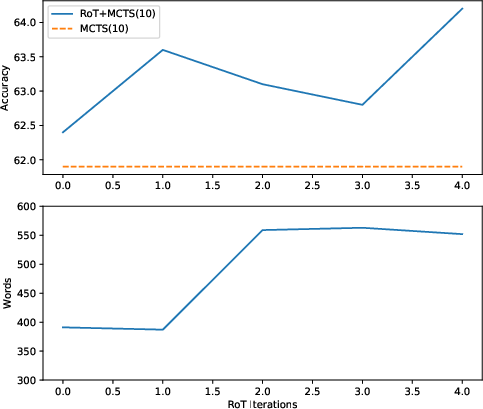

The area-under-the-curve (AUC) metric shows that RoT improves search efficiency, particularly on more challenging tasks. Guided by reflections, the search spends less effort on redundant exploration and plans more strategically.
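Assuming the curve is accuracy plotted against search budget (for example, the number of MCTS iterations), the AUC can be computed with the trapezoidal rule as below. The normalization by the budget range and the `normalized_auc` name are choices made for this sketch, and the numbers are invented for illustration, not results from the paper.

```python
from typing import Sequence


def normalized_auc(budgets: Sequence[float], accuracies: Sequence[float]) -> float:
    """Trapezoidal area under an accuracy-vs-budget curve, divided by the
    budget range so the result stays on the same 0-1 scale as accuracy."""
    if len(budgets) != len(accuracies) or len(budgets) < 2:
        raise ValueError("need at least two (budget, accuracy) points")
    area = 0.0
    for i in range(1, len(budgets)):
        width = budgets[i] - budgets[i - 1]
        area += width * (accuracies[i] + accuracies[i - 1]) / 2
    return area / (budgets[-1] - budgets[0])


# Invented numbers: both curves end at 0.5 accuracy, but one gets there sooner.
budgets = [1, 2, 3, 4]
slow = normalized_auc(budgets, [0.2, 0.3, 0.4, 0.5])   # about 0.35
fast = normalized_auc(budgets, [0.3, 0.45, 0.5, 0.5])  # about 0.45
```

Because both made-up curves end at the same accuracy, only the AUC separates them: it rewards reaching good accuracy with a smaller budget, which is the sense in which it measures search efficiency.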

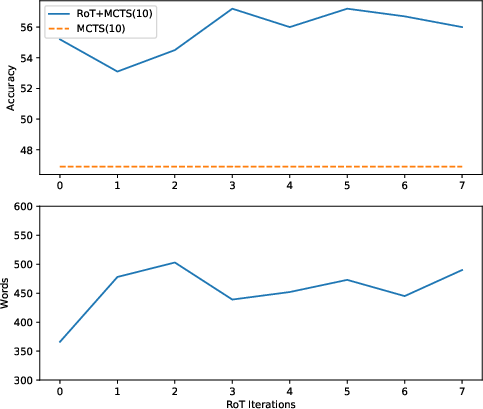

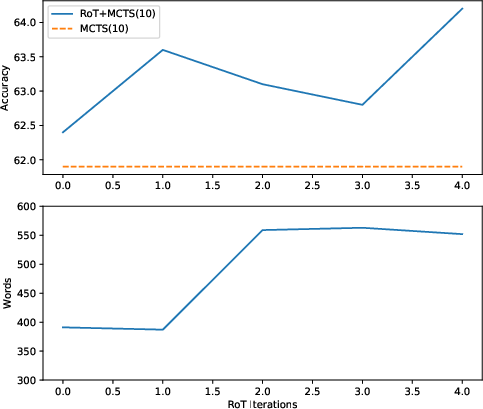

Figure 6: Word count of summarized guidelines and accuracy when iteratively applying RoT to MCTS(10).

Conclusion

The RoT framework improves the reasoning capabilities of LLMs by learning from tree-search experience. By reflecting on past actions, RoT improves both decision accuracy and search efficiency in complex tasks. More broadly, it points toward AI systems that become more capable and adaptive by integrating their past experiences.

The implications of RoT extend to both academic research and practical applications, wherever LLMs are paired with search to solve complex multi-step problems.