"I'm categorizing LLM as a productivity tool": Examining ethics of LLM use in HCI research practices (2403.19876v1)

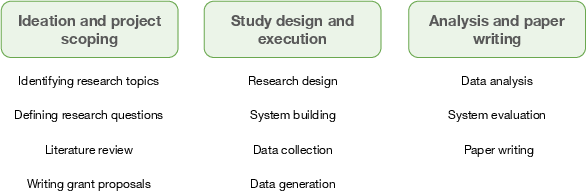

Abstract: LLMs are increasingly applied in real-world scenarios, including research and education. These models, however, come with well-known ethical issues, which may manifest in unexpected ways in human-computer interaction research due to the extensive engagement with human subjects. This paper reports on research practices related to LLM use, drawing on 16 semi-structured interviews and a survey conducted with 50 HCI researchers. We discuss the ways in which LLMs are already being utilized throughout the entire HCI research pipeline, from ideation to system development and paper writing. While researchers described nuanced understandings of ethical issues, they were rarely or only partially able to identify and address those ethical concerns in their own projects. Researchers explained this lack of action and reliance on workarounds through the perceived lack of control and distributed responsibility in the LLM supply chain, the conditional nature of engaging with ethics, and competing priorities. Finally, we reflect on the implications of our findings and present opportunities to shape emerging norms of engaging with LLMs in HCI research.

