LLMSense: Harnessing LLMs for High-level Reasoning Over Spatiotemporal Sensor Traces (2403.19857v1)

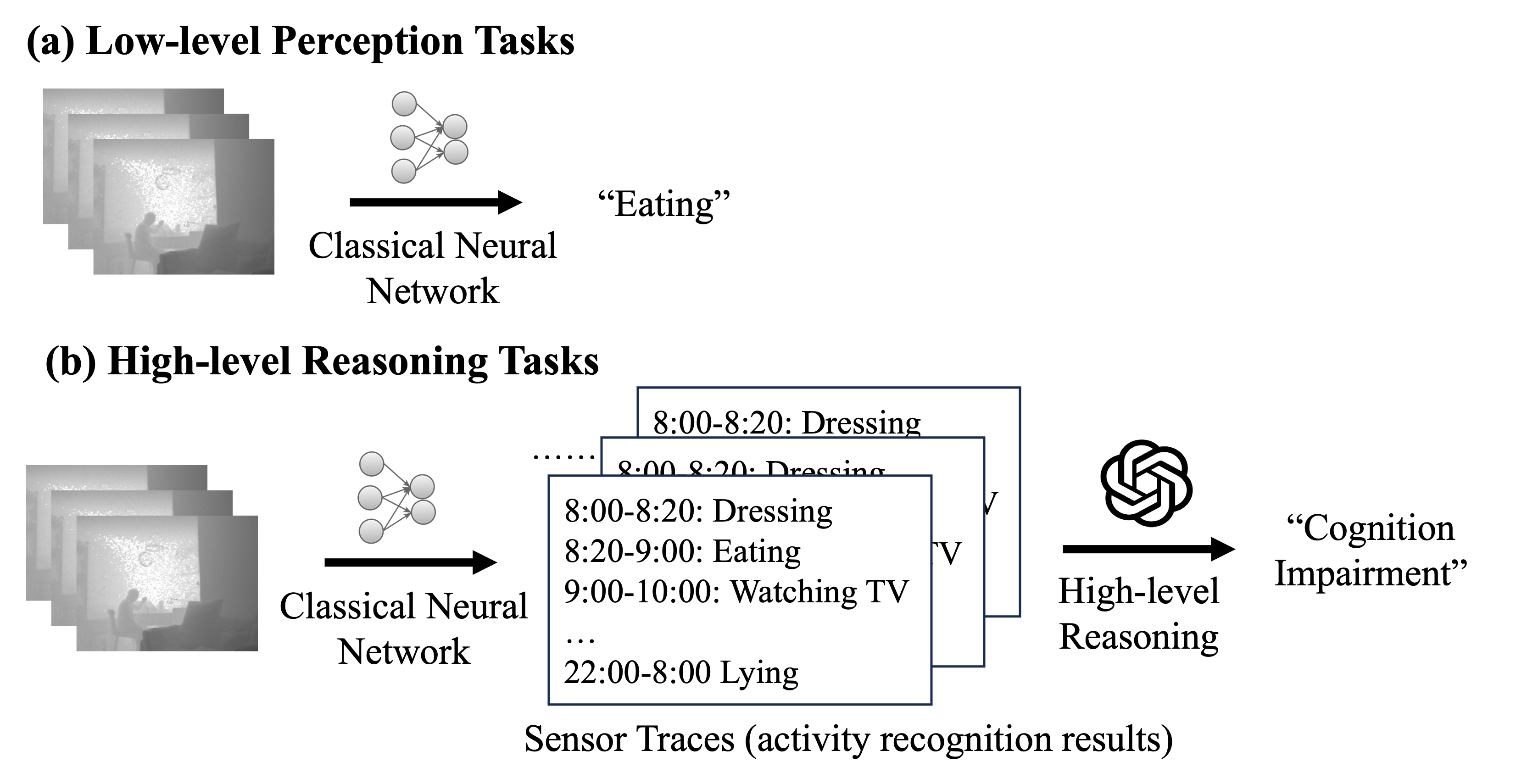

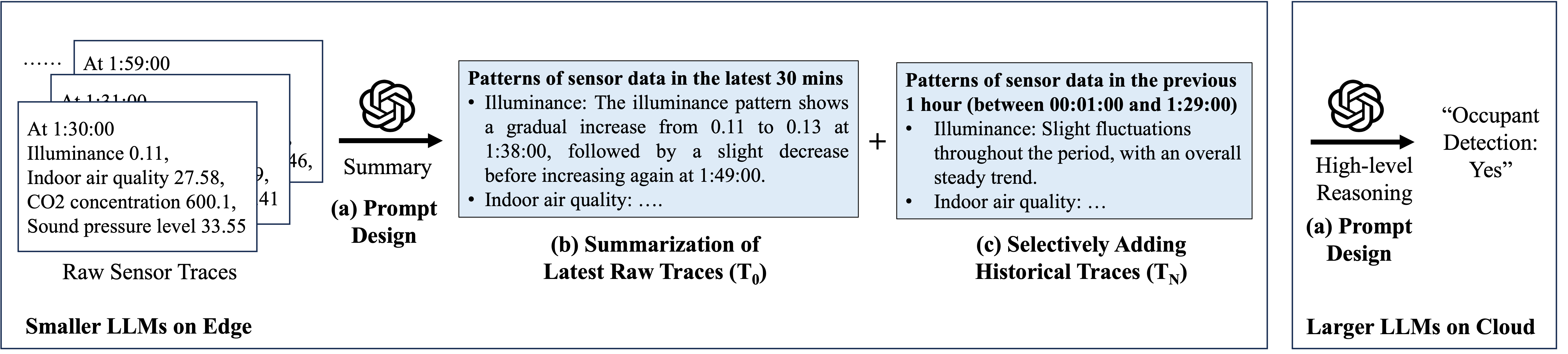

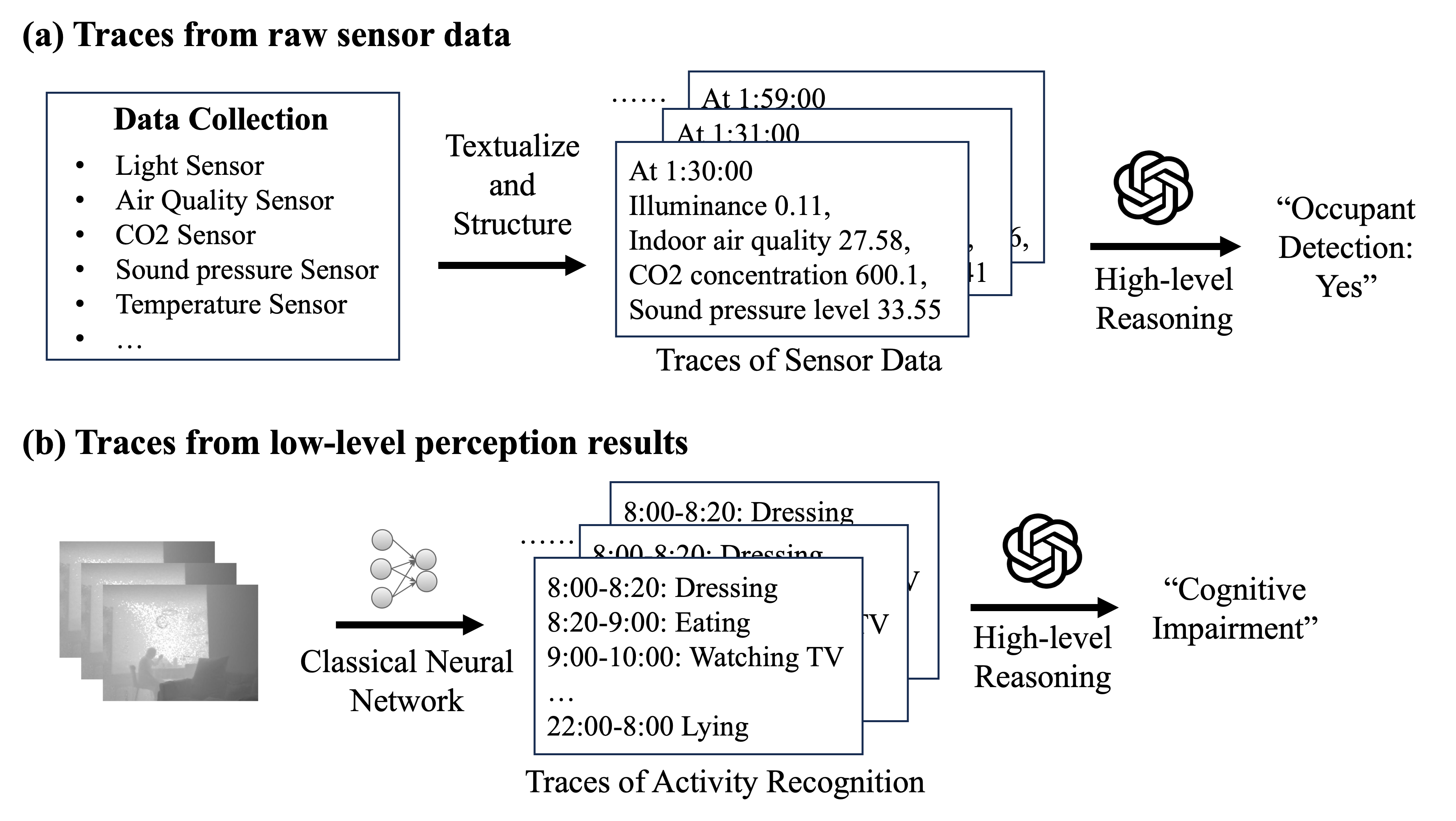

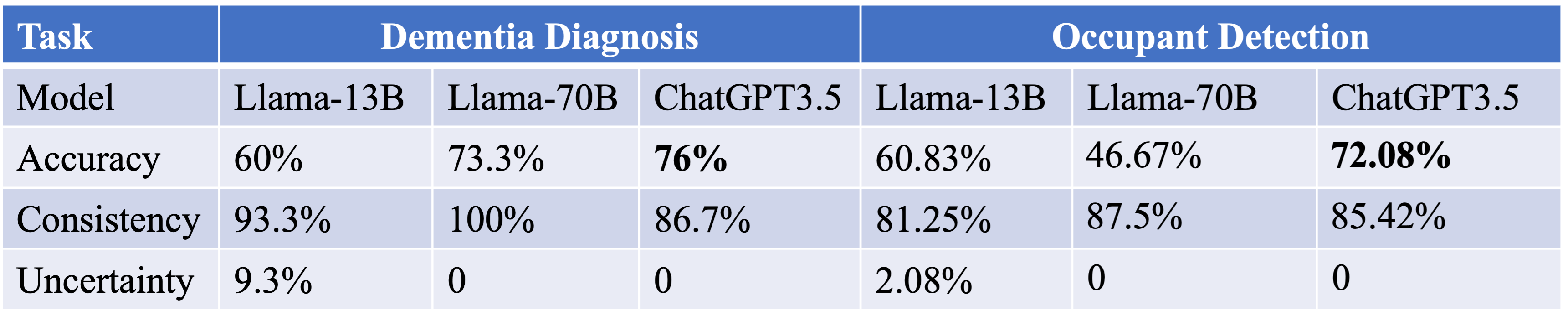

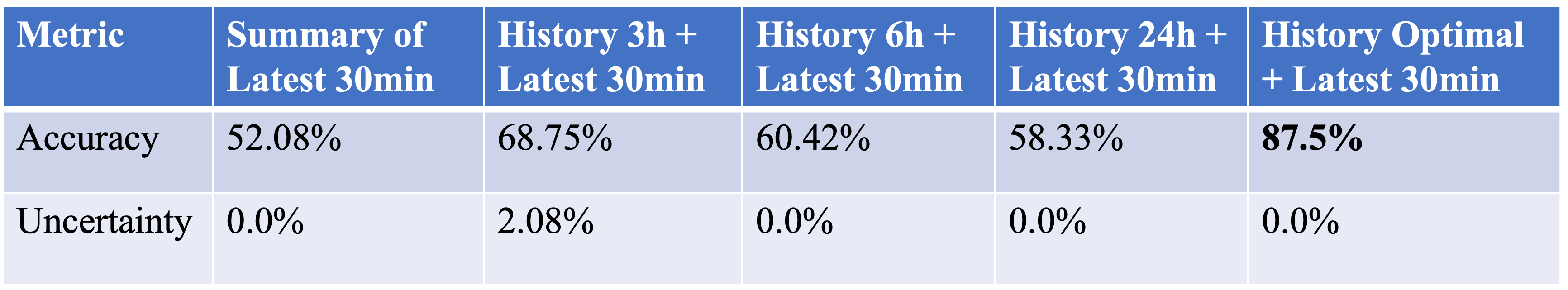

Abstract: Most studies on machine learning in sensing systems focus on low-level perception tasks that process raw sensory data within a short time window. However, many practical applications, such as human routine modeling and occupancy tracking, require high-level reasoning abilities to comprehend concepts and make inferences based on long-term sensor traces. Existing machine learning-based approaches to such complex tasks struggle to generalize because of limited training samples and the high dimensionality of sensor traces, which makes it necessary to integrate human knowledge by designing first-principle models or logic reasoning methods. We pose a fundamental question: Can we harness the reasoning capabilities and world knowledge of LLMs to recognize complex events from long-term spatiotemporal sensor traces? To answer this question, we design an effective prompting framework for LLMs on high-level reasoning tasks, which can handle traces of raw sensor data as well as low-level perception results. We also design two strategies to improve performance on long sensor traces: summarization before reasoning and selective inclusion of historical traces. Our framework can be implemented in an edge-cloud setup, running small LLMs on the edge for data summarization and performing high-level reasoning in the cloud, to help preserve privacy. The results show that LLMSense achieves over 80% accuracy on two high-level reasoning tasks: dementia diagnosis with behavior traces and occupancy tracking with environmental sensor traces. This paper provides insights and guidelines for leveraging LLMs for high-level reasoning on sensor traces and highlights several directions for future work.
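The two long-trace strategies named in the abstract (summarization before reasoning and selective inclusion of historical traces), together with the edge-cloud split, can be illustrated with a minimal Python sketch. This is not the authors' implementation. The fixed-length time windows, the count-based `count_summarizer` (a stand-in for a small edge LLM), the "keep the most recent windows" selection policy, and all function names are hypothetical. Under these assumptions, only the assembled prompt would be sent to a cloud LLM, and it contains window summaries rather than raw readings.

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical trace record: (timestamp in seconds, low-level perception label).
Record = Tuple[int, str]


def split_windows(trace: List[Record], window_s: int) -> List[List[Record]]:
    """Group time-ordered records into consecutive fixed-length windows."""
    windows: List[List[Record]] = []
    if not trace:
        return windows
    start = trace[0][0]
    current: List[Record] = []
    for ts, label in trace:
        # Close every window that ends before this record (may emit empty ones).
        while ts >= start + window_s:
            windows.append(current)
            current = []
            start += window_s
        current.append((ts, label))
    windows.append(current)
    return windows


def count_summarizer(window: List[Record]) -> str:
    """Hypothetical stand-in for a small edge LLM: summarize by event counts."""
    if not window:
        return "no events"
    counts = Counter(label for _, label in window)
    return ", ".join(f"{label} x{n}" for label, n in sorted(counts.items()))


def build_reasoning_prompt(
    trace: List[Record],
    task: str,
    window_s: int,
    keep_last: int,
    summarize: Callable[[List[Record]], str] = count_summarizer,
) -> str:
    """Summarize on the 'edge', keep only recent summaries, build a cloud prompt."""
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    summaries = [summarize(w) for w in split_windows(trace, window_s)]
    # Selective inclusion (assumed policy): keep only the most recent windows.
    kept = summaries[-keep_last:]
    first_idx = len(summaries) - len(kept)
    lines = [f"Task: {task}", "Summaries of the sensor trace, oldest first:"]
    for i, summary in enumerate(kept, start=first_idx):
        lines.append(f"Window {i}: {summary}")
    lines.append("Explain your reasoning, then give a final label.")
    return "\n".join(lines)


if __name__ == "__main__":
    trace = [(0, "walk"), (10, "sit"), (70, "sit"), (130, "walk"), (135, "walk")]
    print(build_reasoning_prompt(trace, "Is the room occupied?", window_s=60, keep_last=2))
```

With 60-second windows this trace yields three windows, and `keep_last=2` drops window 0 from the prompt. In a real deployment the summarizer would itself prompt a locally hosted model, and the selection policy would be chosen per task rather than fixed to recency.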
