A Diffusion-Based Generative Equalizer for Music Restoration

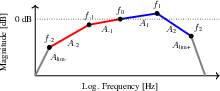

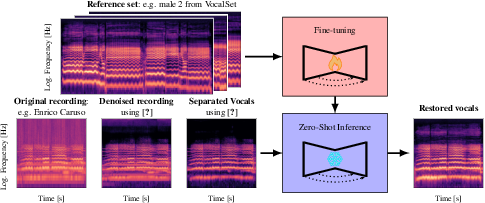

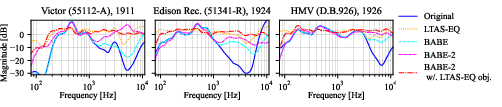

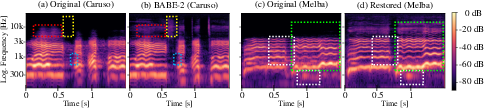

Abstract: This paper presents a novel approach to audio restoration, focusing on the enhancement of low-quality music recordings, and in particular historical ones. Building upon a previous algorithm called BABE, or Blind Audio Bandwidth Extension, we introduce BABE-2, which presents a series of improvements. This research broadens the concept of bandwidth extension to \emph{generative equalization}, a novel task that, to the best of our knowledge, has not been explicitly addressed in previous studies. BABE-2 is built around an optimization algorithm utilizing priors from diffusion models, which are trained or fine-tuned using a curated set of high-quality music tracks. The algorithm simultaneously performs two critical tasks: estimation of the filter degradation magnitude response and hallucination of the restored audio. The proposed method is objectively evaluated on historical piano recordings, showing an enhancement over the prior version. The method yields similarly impressive results in rejuvenating the works of renowned vocalists Enrico Caruso and Nellie Melba. This research represents an advancement in the practical restoration of historical music.

- Digital Audio Restoration—A Statistical Model Based Approach, Springer, 1998.

- M. Kob and T. A. Weege, “How to interpret early recordings? Artefacts and resonances in recording and reproduction of singing voices,” Computational Phonogram Archiving, pp. 335–350, 2019.

- “Digital audio antiquing—Signal processing methods for imitating the sound quality of historical recordings,” J. Audio Eng. Soc., vol. 56, no. 3, pp. 115–139, Mar. 2008.

- “Blind deconvolution through digital signal processing,” Proc. IEEE, vol. 63, no. 4, pp. 678–692, 1975.

- E. Moliner and V. Välimäki, “A two-stage U-net for high-fidelity denoising of historical recordings,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), Singapore, May 2022, pp. 841–845.

- “Diffusion models for audio restoration,” arXiv preprint arXiv:2402.09821, 2024.

- “Blind audio bandwidth extension: A diffusion-based zero-shot approach,” arXiv, 2024.

- “Score-based generative modeling through stochastic differential equations,” in Proc. Int. Conf. Learning Representations (ICLR), May 2021.

- “Elucidating the design space of diffusion-based generative models,” Adv. Neural Inf. Process. Syst. (NeurIPS), Dec. 2022.

- “Solving audio inverse problems with a diffusion model,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), Rhodes, Greece, Jun. 2023.

- “Diffusion posterior sampling for general noisy inverse problems,” in Proc. Int. Conf. Learning Representations (ICLR), Kigali, Rwanda, May 2023.

- “Parallel diffusion models of operator and image for blind inverse problems,” in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. (CVPR), Vancouver, BC, Canada, Jun. 2023, pp. 6059–6069.

- “CADS: Unleashing the diversity of diffusion models through condition-annealed sampling,” in Proc. Int. Conf. Learning Representations (ICLR), 2024.

- D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in Proc. Int. Conf. Learn. Represent. (ICLR), San Diego, CA, May 2015.

- A. Wright and V. Välimäki, “Perceptual loss function for neural modeling of audio systems,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process., 2020, pp. 251–255.

- E. Moliner and V. Välimäki, “Diffusion-based audio inpainting,” J. Audio Eng. Soc., vol. 72, Mar. 2024.

- “Enabling factorized piano music modeling and generation with the MAESTRO dataset,” in Proc. Int. Conf. Learning Representations (ICLR), May 2019.

- “Fréchet audio distance: A reference-free metric for evaluating music enhancement algorithms,” in Proc. Interspeech, Aug. 2019, pp. 2350–2354.

- “Adapting Frechet audio distance for generative music evaluation,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), 2024.

- “Large-scale contrastive language-audio pretraining with feature fusion and keyword-to-caption augmentation,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), 2023.

- “High fidelity neural audio compression,” Transactions on Machine Learning Research, 2023.

- E. Moliner and V. Välimäki, “BEHM-GAN: Bandwidth extension of historical music using generative adversarial networks,” IEEE/ACM Trans. Audio Speech Lang. Process., vol. 31, pp. 943–956, Jul. 2023.

- “Vocalset: A singing voice dataset,” in ISMIR, 2018, pp. 468–474.

- “Hybrid transformers for music source separation,” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), 2023.

- J.B Steane, “Fach,” Groove Music Online, 2002.

- J. Freestone, Enrico Caruso: His Recorded Legacy, TS Denison, Minneapolis, 1961.

- B. Gentili, “The birth of ‘modern’ vocalism: The paradigmatic case of Enrico Caruso,” J. Royal Musical Assoc., vol. 146, no. 2, pp. 425–453, 2021.

- R. Celletti and A. Blyth, “Caruso, Enrico,” Groove Music Online, 2013.

- D. Shawe-Taylor and A. Blyth, “Gigli, Beniamino,” Groove Music Online, 2001.

- L. A. G. Strong, “John McCormack: The story of a singer,” The Macmillan Company, 1941.

- D. Shawe-Taylor, “Melba, Dame Nellie,” Groove Music Online, May 2009.

- E. Forbes, “Patti, Adelina,” Groove Music Online, 2001.

- “Multi-singer: Fast multi-singer singing voice vocoder with a large-scale corpus,” in Proceedings of the 29th ACM International Conference on Multimedia, 2021, pp. 3945–3954.

- “Opencpop: A high-quality open source chinese popular song corpus for singing voice synthesis,” in Interspeech, 2022.

- “The NUS sung and spoken lyrics corpus: A quantitative comparison of singing and speech,” in Proc. Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, 2013, pp. 1–9.

- “PJS: Phoneme-balanced japanese singing-voice corpus,” in Proc. Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, 2020, pp. 487–491.

- “M4singer: A multi-style, multi-singer and musical score provided mandarin singing corpus,” Adv. Neural Inf. Process. Syst. (NeurIPS) Datasets and Benchmarks Track, vol. 35, pp. 6914–6926, 2022.

- “Children’s song dataset for singing voice research,” in Proc. ISMIR, 2020, vol. 4.

- “Guidance with spherical gaussian constraint for conditional diffusion,” arXiv preprint arXiv:2402.03201, 2024.

- “Video diffusion models,” Adv. Neural Inf. Process. Syst. (NeurIPS), Dec. 2022.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.