Towards Human-AI Deliberation: Design and Evaluation of LLM-Empowered Deliberative AI for AI-Assisted Decision-Making (2403.16812v2)

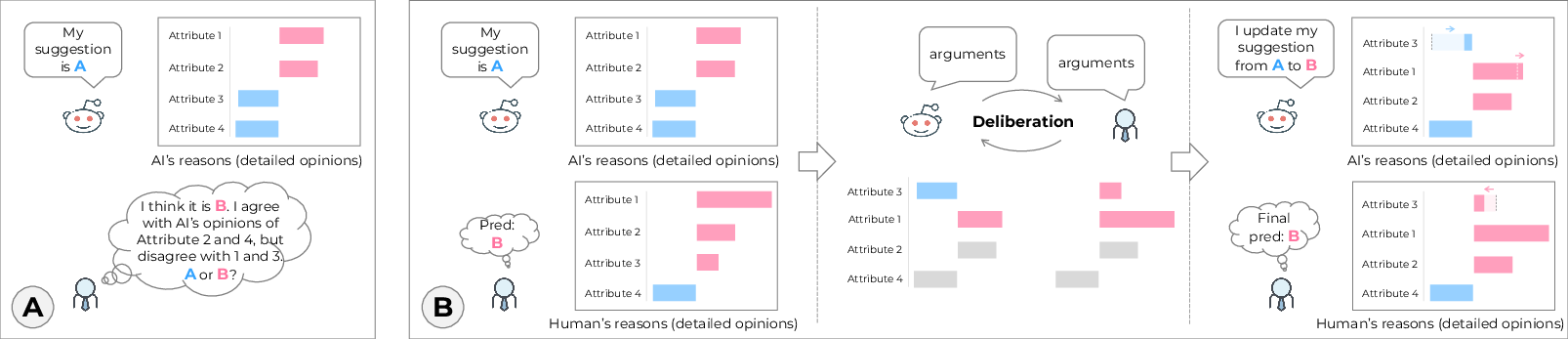

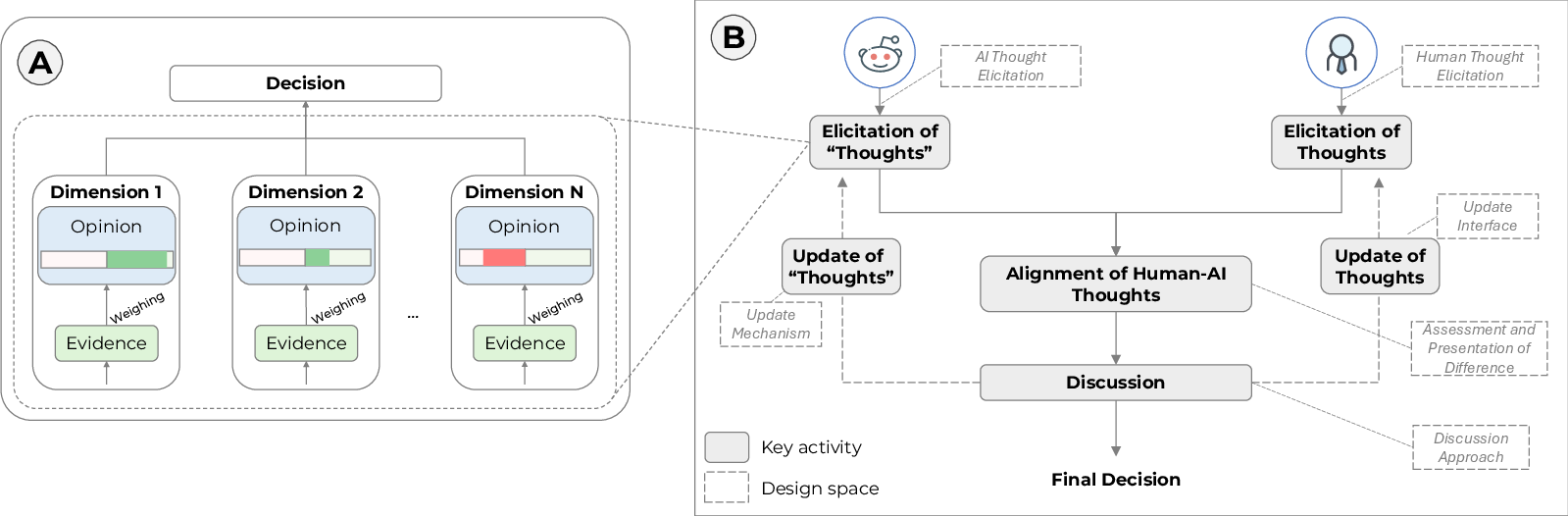

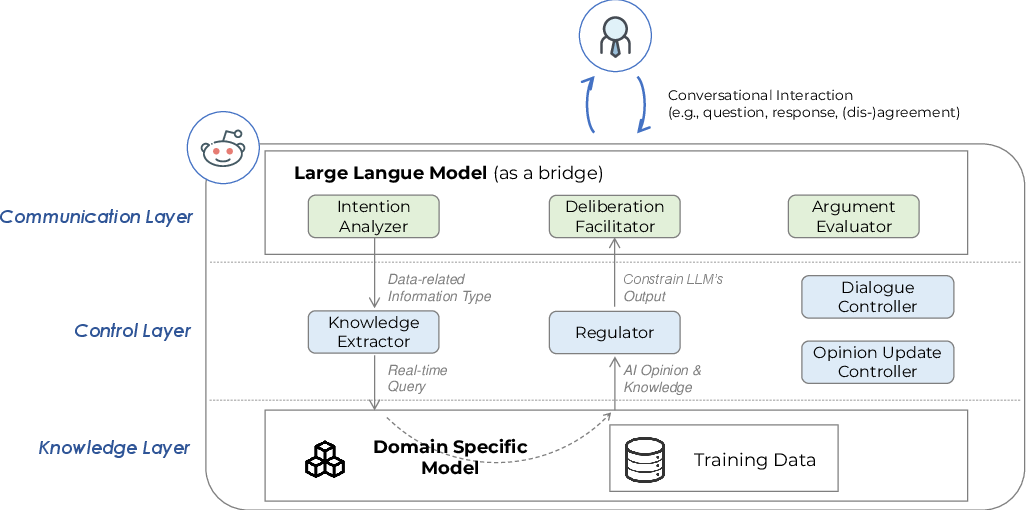

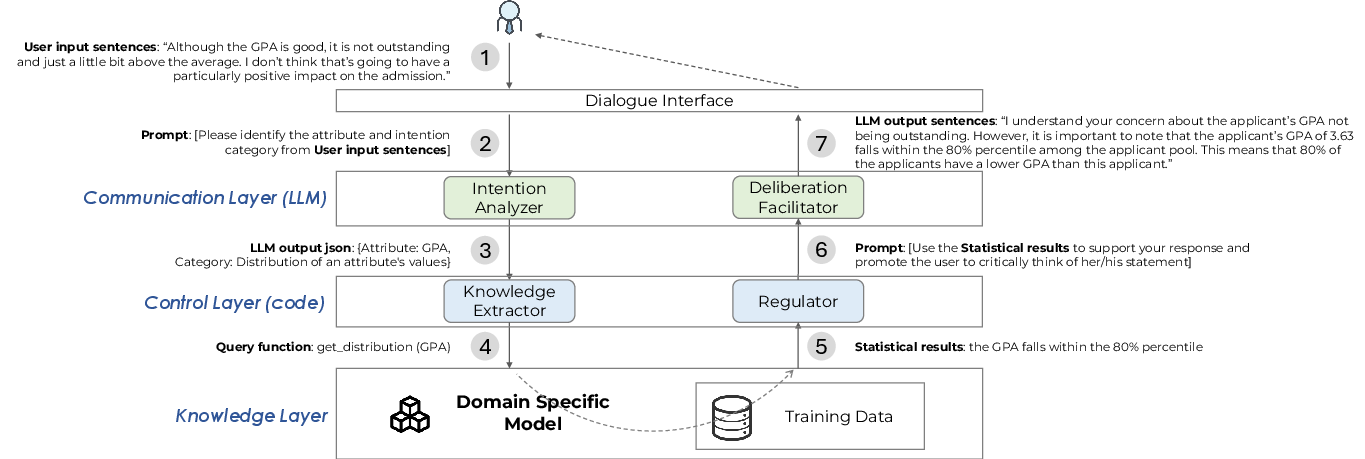

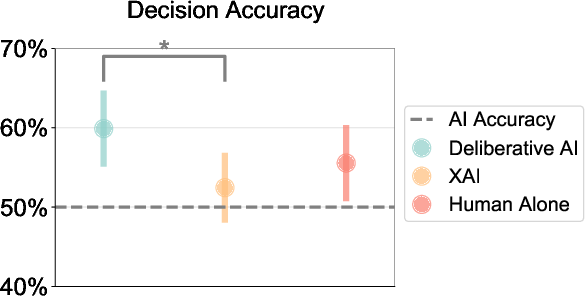

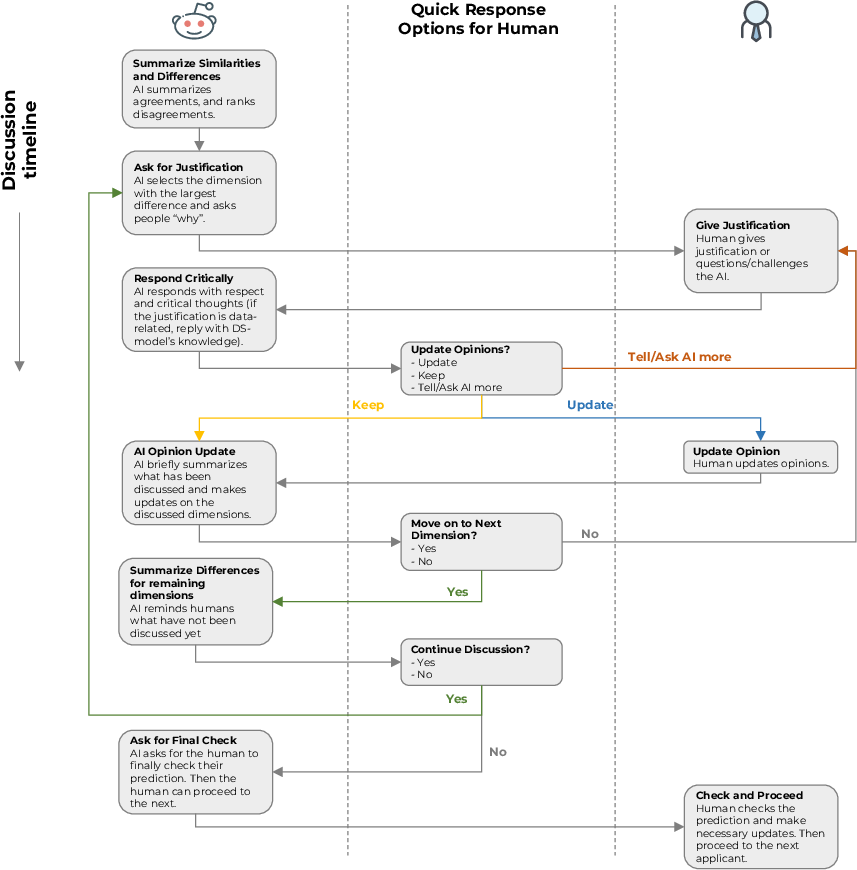

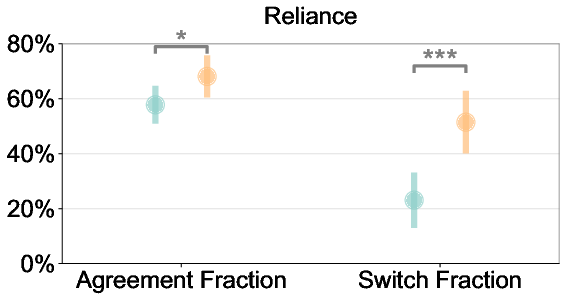

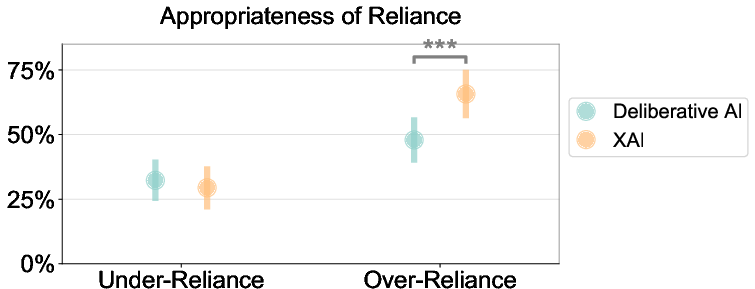

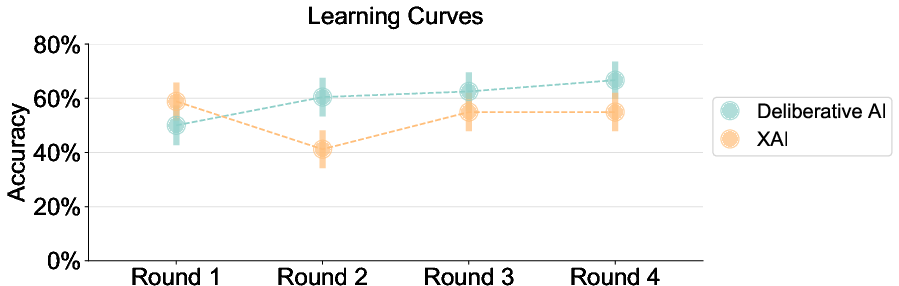

Abstract: In AI-assisted decision-making, humans often passively review the AI's suggestion and decide whether to accept or reject it as a whole. In such a paradigm, humans rarely engage in analytical thinking and have difficulty communicating the nuances of conflicting opinions to the AI when disagreements occur. To tackle this challenge, we propose Human-AI Deliberation, a novel framework to promote human reflection and discussion on conflicting human-AI opinions in decision-making. Based on theories of human deliberation, this framework engages humans and AI in dimension-level opinion elicitation, deliberative discussion, and decision updates. To empower AI with deliberative capabilities, we designed Deliberative AI, which leverages LLMs as a bridge between humans and domain-specific models to enable flexible conversational interactions and faithful information provision. An exploratory evaluation on a graduate admissions task shows that Deliberative AI outperforms conventional explainable AI (XAI) assistants in improving humans' appropriate reliance and task performance. Based on a mixed-methods analysis of participant behavior, perception, user experience, and open-ended feedback, we draw implications for future AI-assisted decision tool design.
