AutoDev: Automated AI-Driven Development

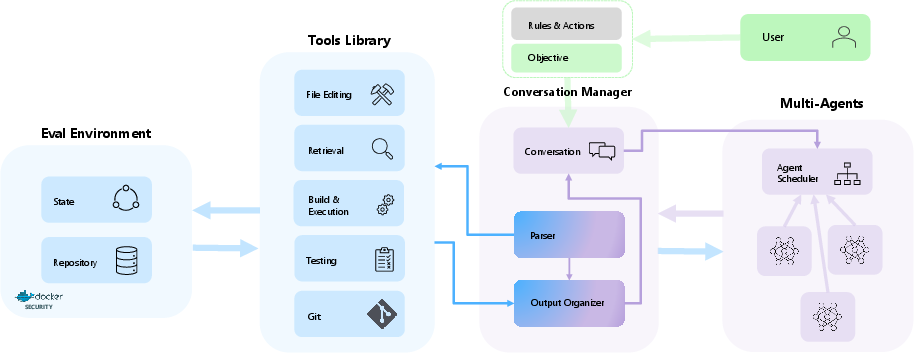

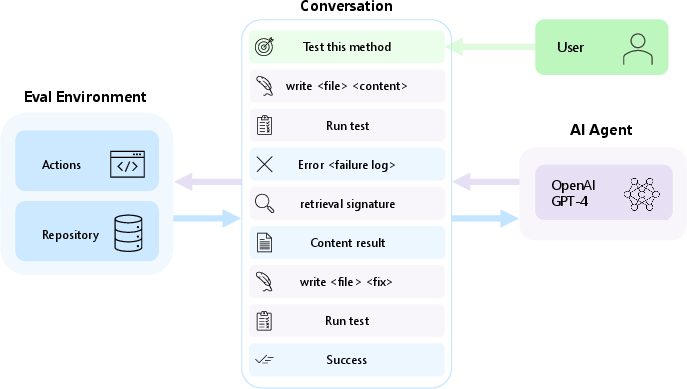

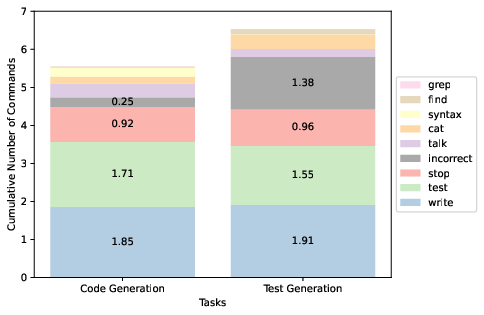

Abstract: The landscape of software development has witnessed a paradigm shift with the advent of AI-powered assistants, exemplified by GitHub Copilot. However, existing solutions do not leverage all the capabilities available in an IDE, such as building, testing, executing code, and git operations. They are therefore constrained, focusing primarily on suggesting code snippets and manipulating files within a chat-based interface. To fill this gap, we present AutoDev, a fully automated AI-driven software development framework designed for autonomous planning and execution of intricate software engineering tasks. AutoDev enables users to define complex software engineering objectives, which are assigned to AutoDev's autonomous AI agents to achieve. These AI agents can perform diverse operations on a codebase, including file editing, retrieval, build processes, execution, testing, and git operations. They also have access to files, compiler output, build and testing logs, static analysis tools, and more. This enables the AI agents to execute tasks in a fully automated manner with a comprehensive understanding of the contextual information required. Furthermore, AutoDev establishes a secure development environment by confining all operations within Docker containers. The framework incorporates guardrails to ensure user privacy and file security, allowing users to define specific permitted or restricted commands and operations within AutoDev. In our evaluation, we tested AutoDev on the HumanEval dataset, obtaining promising results: Pass@1 scores of 91.5% for code generation and 87.8% for test generation. These results demonstrate its effectiveness in automating software engineering tasks while maintaining a secure and user-controlled development environment.
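
The guardrails mentioned in the abstract, where users define permitted or restricted commands, might be sketched roughly as follows. This is only an illustration under stated assumptions: the `CommandPolicy` class, its field names, and the rule that restrictions override permissions are hypothetical and are not taken from AutoDev itself. The sketch checks a command line against the user's rules before an agent would be allowed to run it.

```python
import shlex
from dataclasses import dataclass, field


@dataclass
class CommandPolicy:
    """Hypothetical allow/deny rules; not AutoDev's actual configuration format."""

    # Executables the agent may invoke (assumed to match on the first token).
    permitted: set[str] = field(default_factory=set)
    # Command prefixes that are always blocked, e.g. "git push".
    restricted: set[str] = field(default_factory=set)

    def is_allowed(self, command_line: str) -> bool:
        tokens = shlex.split(command_line)
        if not tokens:
            return False  # nothing to run
        # Assumption: restrictions take precedence over permissions.
        for rule in self.restricted:
            rule_tokens = shlex.split(rule)
            if tokens[: len(rule_tokens)] == rule_tokens:
                return False
        # Otherwise the executable itself must be explicitly permitted.
        return tokens[0] in self.permitted


# Example policy: allow git, pytest, and python, but never "git push".
policy = CommandPolicy(
    permitted={"git", "pytest", "python"},
    restricted={"git push"},
)

for cmd in ["pytest tests/", "git status", "git push origin main", "rm -rf /"]:
    print(f"{cmd!r}: {'allowed' if policy.is_allowed(cmd) else 'blocked'}")
```

In this sketch a command must be both on the permitted list and free of any restricted prefix, so an unlisted command such as `rm` is blocked by default. A real deployment would combine such a check with the container isolation the abstract describes rather than rely on string matching alone.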

