Chatbot Arena: An Open Platform for Evaluating LLMs by Human Preference (2403.04132v1)

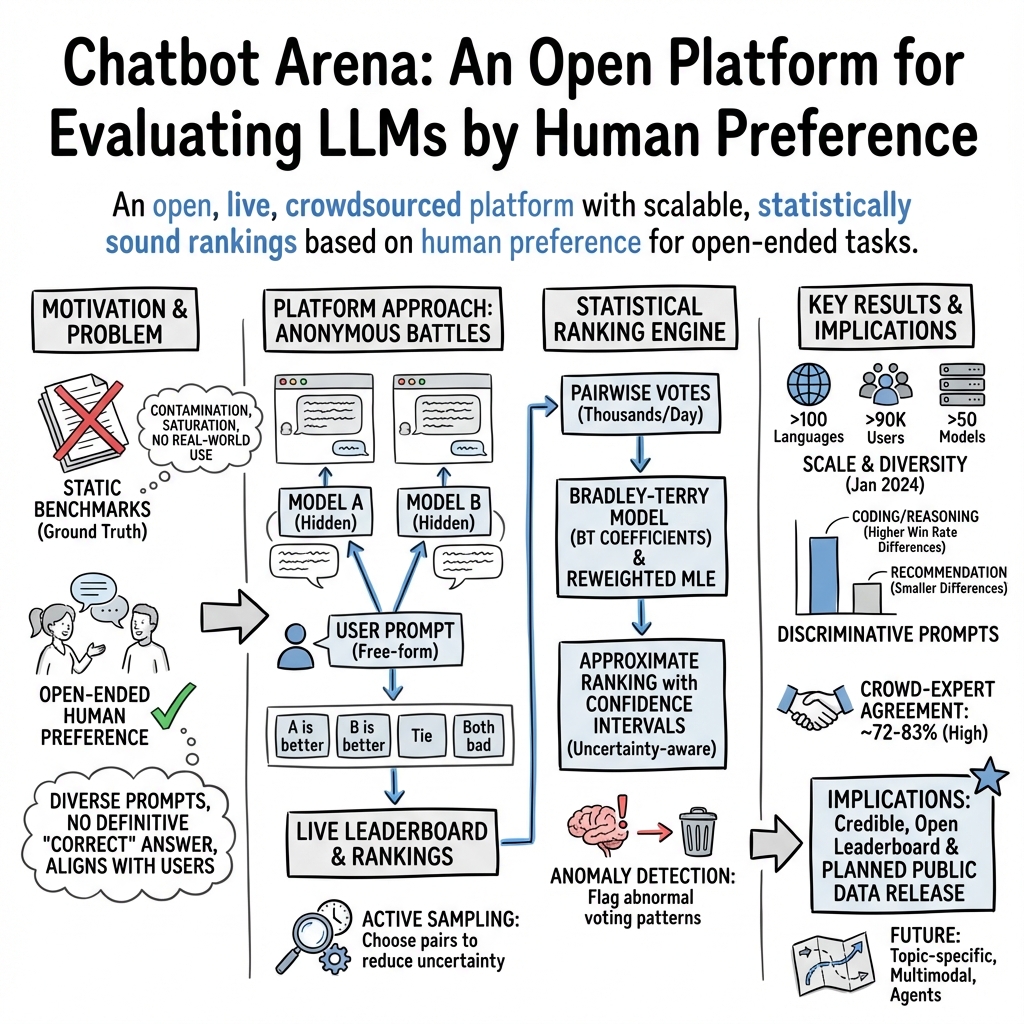

Abstract: LLMs have unlocked new capabilities and applications; however, evaluating the alignment with human preferences still poses significant challenges. To address this issue, we introduce Chatbot Arena, an open platform for evaluating LLMs based on human preferences. Our methodology employs a pairwise comparison approach and leverages input from a diverse user base through crowdsourcing. The platform has been operational for several months, amassing over 240K votes. This paper describes the platform, analyzes the data we have collected so far, and explains the tried-and-true statistical methods we are using for efficient and accurate evaluation and ranking of models. We confirm that the crowdsourced questions are sufficiently diverse and discriminating and that the crowdsourced human votes are in good agreement with those of expert raters. These analyses collectively establish a robust foundation for the credibility of Chatbot Arena. Because of its unique value and openness, Chatbot Arena has emerged as one of the most referenced LLM leaderboards, widely cited by leading LLM developers and companies. Our demo is publicly available at https://chat.lmsys.org.

- Training a helpful and harmless assistant with reinforcement learning from human feedback. arXiv preprint arXiv:2204.05862, 2022.

- Elo uncovered: Robustness and best practices in language model evaluation, 2023.

- Rank analysis of incomplete block designs: I. the method of paired comparisons. Biometrika, 39(3/4):324–345, 1952.

- Preference-based rank elicitation using statistical models: The case of Mallows. In Xing, E. P. and Jebara, T. (eds.), Proceedings of the 31st International Conference on Machine Learning, volume 32 of Proceedings of Machine Learning Research, pp. 1071–1079, Beijing, China, 22–24 Jun 2014a. PMLR. URL https://proceedings.mlr.press/v32/busa-fekete14.html.

- Preference-based rank elicitation using statistical models: The case of Mallows. In Xing, E. P. and Jebara, T. (eds.), Proceedings of the 31st International Conference on Machine Learning, volume 32 of Proceedings of Machine Learning Research, pp. 1071–1079, Beijing, China, 22–24 Jun 2014b. PMLR. URL https://proceedings.mlr.press/v32/busa-fekete14.html.

- Evaluating large language models trained on code. arXiv preprint arXiv:2107.03374, 2021.

- Chernoff, H. Sequential Design of Experiments, pp. 345–360. Springer New York, New York, NY, 1992. ISBN 978-1-4612-4380-9. doi: 10.1007/978-1-4612-4380-9_27. URL https://doi.org/10.1007/978-1-4612-4380-9_27.

- Can large language models be an alternative to human evaluations? In Rogers, A., Boyd-Graber, J., and Okazaki, N. (eds.), Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 15607–15631, Toronto, Canada, July 2023. Association for Computational Linguistics. doi: 10.18653/v1/2023.acl-long.870. URL https://aclanthology.org/2023.acl-long.870.

- Training verifiers to solve math word problems. arXiv preprint arXiv:2110.14168, 2021.

- Ultrafeedback: Boosting language models with high-quality feedback, 2023.

- Bootstrap confidence intervals. Statistical science, 11(3):189–228, 1996.

- Durrett, R. Probability: theory and examples, volume 49. Cambridge university press, 2019.

- Elo, A. E. The proposed uscf rating system, its development, theory, and applications. Chess Life, 22(8):242–247, 1967.

- Fisher, R. A. Statistical methods for research workers. Number 5. Oliver and Boyd, 1928.

- Freedman, D. A. On the so-called “Huber sandwich estimator” and “robust standard errors”. The American Statistician, 60(4):299–302, 2006.

- Gemini: a family of highly capable multimodal models. arXiv preprint arXiv:2312.11805, 2023.

- Koala: A dialogue model for academic research. Blog post, April 2023. URL https://bair.berkeley.edu/blog/2023/04/03/koala/.

- Grootendorst, M. Bertopic: Neural topic modeling with a class-based tf-idf procedure. arXiv preprint arXiv:2203.05794, 2022.

- Measuring massive multitask language understanding. In International Conference on Learning Representations, 2020.

- Time-uniform chernoff bounds via nonnegative supermartingales. 2020.

- Competition-level problems are effective llm evaluators. arXiv preprint arXiv:2312.02143, 2023.

- Huber, P. J. et al. The behavior of maximum likelihood estimates under nonstandard conditions. In Proceedings of the fifth Berkeley symposium on mathematical statistics and probability, volume 1, pp. 221–233. Berkeley, CA: University of California Press, 1967.

- Hunter, D. R. MM algorithms for generalized Bradley-Terry models. The Annals of Statistics, 32(1):384–406, 2004. doi: 10.1214/aos/1079120141. URL https://doi.org/10.1214/aos/1079120141.

- Online active model selection for pre-trained classifiers. In International Conference on Artificial Intelligence and Statistics, pp. 307–315. PMLR, 2021.

- The perils of using Mechanical Turk to evaluate open-ended text generation. In Moens, M.-F., Huang, X., Specia, L., and Yih, S. W.-t. (eds.), Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pp. 1265–1285, Online and Punta Cana, Dominican Republic, November 2021. Association for Computational Linguistics. doi: 10.18653/v1/2021.emnlp-main.97. URL https://aclanthology.org/2021.emnlp-main.97.

- Dynabench: Rethinking benchmarking in nlp. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 4110–4124, 2021.

- Openassistant conversations–democratizing large language model alignment. arXiv preprint arXiv:2304.07327, 2023.

- Langley, P. Crafting papers on machine learning. In Langley, P. (ed.), Proceedings of the 17th International Conference on Machine Learning (ICML 2000), pp. 1207–1216, Stanford, CA, 2000. Morgan Kaufmann.

- Alpacaeval: An automatic evaluator of instruction-following models. https://github.com/tatsu-lab/alpaca_eval, 2023.

- Competition-level code generation with alphacode. Science, 378(6624):1092–1097, 2022.

- Holistic evaluation of language models. arXiv preprint arXiv:2211.09110, 2022.

- ToxicChat: Unveiling hidden challenges of toxicity detection in real-world user-AI conversation. In Bouamor, H., Pino, J., and Bali, K. (eds.), Findings of the Association for Computational Linguistics: EMNLP 2023, pp. 4694–4702, Singapore, December 2023. Association for Computational Linguistics. doi: 10.18653/v1/2023.findings-emnlp.311. URL https://aclanthology.org/2023.findings-emnlp.311.

- Liu, T.-Y. et al. Learning to rank for information retrieval. Foundations and Trends® in Information Retrieval, 3(3):225–331, 2009.

- Umap: Uniform manifold approximation and projection for dimension reduction, 2020.

- OpenAI. Gpt-4 technical report. arXiv preprint arXiv:2303.08774, 2023.

- Proving test set contamination in black box language models. arXiv preprint arXiv:2310.17623, 2023.

- Training language models to follow instructions with human feedback, 2022.

- Game-theoretic statistics and safe anytime-valid inference. Statistical Science, 38(4):576–601, 2023.

- Ties in paired-comparison experiments: A generalization of the bradley-terry model. Journal of the American Statistical Association, 62(317):194–204, 1967. doi: 10.1080/01621459.1967.10482901.

- Beyond the imitation game: Quantifying and extrapolating the capabilities of language models. Transactions on Machine Learning Research, 2023.

- Online rank elicitation for plackett-luce: A dueling bandits approach. In Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., and Garnett, R. (eds.), Advances in Neural Information Processing Systems, volume 28. Curran Associates, Inc., 2015. URL https://proceedings.neurips.cc/paper_files/paper/2015/file/7eacb532570ff6858afd2723755ff790-Paper.pdf.

- Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288, 2023.

- E-values: Calibration, combination and applications. The Annals of Statistics, 49(3):1736–1754, 2021.

- Self-instruct: Aligning language models with self-generated instructions. In Rogers, A., Boyd-Graber, J., and Okazaki, N. (eds.), Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 13484–13508, Toronto, Canada, July 2023. Association for Computational Linguistics. doi: 10.18653/v1/2023.acl-long.754. URL https://aclanthology.org/2023.acl-long.754.

- Estimating means of bounded random variables by betting. arXiv preprint arXiv:2010.09686, 2020.

- White, H. Maximum likelihood estimation of misspecified models. Econometrica: Journal of the econometric society, pp. 1–25, 1982.

- Rethinking benchmark and contamination for language models with rephrased samples. arXiv preprint arXiv:2311.04850, 2023.

- Hellaswag: Can a machine really finish your sentence? In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pp. 4791–4800, 2019.

- Lmsys-chat-1m: A large-scale real-world llm conversation dataset, 2023a.

- Judging LLM-as-a-judge with MT-bench and chatbot arena. In Thirty-seventh Conference on Neural Information Processing Systems Datasets and Benchmarks Track, 2023b. URL https://openreview.net/forum?id=uccHPGDlao.

- Agieval: A human-centric benchmark for evaluating foundation models. arXiv preprint arXiv:2304.06364, 2023.

- Starling-7b: Improving llm helpfulness & harmlessness with rlaif, November 2023.

Glossary

- Active sampling rule: A strategy for selecting which model pair to compare next to most efficiently reduce uncertainty in estimates. "Active sampling rule. Our sampling rule was to choose the model pair $a \in \mathcal{A}$ proportionally to the reduction in confidence interval size by sampling that pair:"

- AlpacaEval: A benchmark that approximates human preference evaluation using LLMs as judges for open-ended tasks. "MT-Bench (Zheng et al., 2023b), and AlpacaEval (Li et al., 2023) are common examples of static benchmarks."

- Approximate ranking: A ranking procedure that incorporates statistical uncertainty via confidence sets to avoid overstating or understating model performance. "Approximate rankings. Finally, we report an approximate ranking for each model that accounts for the uncertainty in the estimation of the score."

- BERTopic: A topic modeling framework that uses transformer embeddings and clustering to discover topics. "To study the prompt diversity, we build a topic modeling pipeline with BERTopic (Grootendorst, 2022)."

- Beta distribution: A continuous probability distribution on [0, 1] used here to simulate BT coefficients in experiments. "A vector of BT coefficients is drawn, with each coordinate sampled i.i.d. from a distribution beta(1/7, 1/7);"

- BigBench: A large, diverse benchmark suite for evaluating LLM capabilities. "Prominent examples in this category are MMLU (Hendrycks et al., 2020), HellaSwag (Zellers et al., 2019), GSM-8K (Cobbe et al., 2021), BigBench (Srivastava et al., 2023), AGIEval (Zhong et al., 2023), and HumanEval (Chen et al., 2021)."

- Bonferroni correction: A multiple-testing adjustment method to control the family-wise error rate when making sequential inferences. "Along with a variant of the Bonferroni correction."

- Bradley–Terry (BT) coefficients: Parameters that quantify model strengths in the Bradley–Terry paired-comparison framework. "A standard score function in this setting is the vector of Bradley-Terry (BT) coefficients (Bradley & Terry, 1952)."

- Bradley–Terry model: A probabilistic paired-comparison model where win probabilities follow a logistic function of latent strengths. "In the Bradley-Terry model, $H_t \in \{0, 1\}$, and the probability model $m$ beats model $m'$ is modeled via a logistic relationship:"

- Chi-squared interval: A confidence interval constructed using the chi-squared distribution under asymptotic normality to provide uniform coverage. "To get the uniform confidence set, we construct the chi-squared interval implied by the central limit theorem using the sandwich estimate of the variance."

- Confidence set: A set of parameter values that, with high probability, contains the true parameter vector. "Given an M-dimensional confidence set C satisfying"

- E-values: Evidence measures for sequential testing that support anytime-valid inference and combination. "Armed with this data, we employ a suite of powerful statistical techniques, ranging from the statistical model of Bradley & Terry (1952) to the E-values of Vovk & Wang (2021), to estimate the ranking over models as reliably and sample-efficiently as possible."

- Elo rating system: A rating method originally for games that updates competitor scores based on match outcomes. "The Elo rating system has also been used for LLMs (Bai et al., 2022; Boubdir et al., 2023)."

- Exchangeability: A property indicating that observations can be permuted without changing their joint distribution, used to justify p-value validity. "Under the null hypothesis that $\mathcal{H}_{A'_i}$ is exchangeable with $H'_i$, $p_i$ is a valid p-value (see Appendix C for a proof)."

- Fisher’s combination test: A classical method for combining multiple p-values into a single test statistic. "We can test against this null hypothesis sequentially by using Fisher's combination test (Fisher, 1928) along with a variant of the Bonferroni correction."

- HDBSCAN: A hierarchical density-based clustering algorithm that discovers clusters of varying densities. "We then use the hierarchical density-based clustering algorithm, HDBSCAN, to identify topic clusters with minimum cluster size 32."

- HELM: A holistic evaluation suite for LLMs covering diverse axes like accuracy, robustness, and fairness. "Benchmarks focusing on safety, such as ToxicChat (Lin et al., 2023), and comprehensive suites like HELM (Liang et al., 2022), also exist."

- HumanEval: A benchmark for evaluating code generation and problem-solving abilities of LLMs. "Prominent examples in this category are MMLU (Hendrycks et al., 2020), HellaSwag (Zellers et al., 2019), GSM-8K (Cobbe et al., 2021), BigBench (Srivastava et al., 2023), AGIEval (Zhong et al., 2023), and HumanEval (Chen et al., 2021)."

- Human-in-the-loop: An evaluation or training paradigm where human feedback is integrated into the process. "DynaBench (Kiela et al., 2021) identifies these challenges and recommends the use of a live benchmark that incorporates a human-in-the-loop approach"

- Inverse weighting: A reweighting technique that adjusts likelihood contributions by the inverse of sampling probabilities. "We perform the inverse weighting by P(At) because this allows us to target a score with a uniform distribution over A."

- Learning to rank: A field of machine learning focused on ranking items based on pairwise or listwise feedback. "This is a well-studied topic in the literature on learning to rank (Liu et al., 2009), and we present our perspective here."

- LLM-as-judge: An evaluation approach where an LLM acts as the judge to compare other models’ outputs. "We factor out user votes and consider LLM-as-judge (Zheng et al., 2023b) to evaluate model response."

- Maximum likelihood estimator (MLE): An estimator that maximizes the likelihood of observed data under a parametric model. "Where $\hat{\xi}$ is our MLE of the BT coefficients and $\hat{V}_{\xi}$ is the sandwich variance of the logistic regression."

- MT-Bench: A benchmark that uses GPT-4 as a judge to evaluate instruction-following capabilities of LLMs. "We compare Arena bench against a widely used LLM benchmark, MT-Bench (Zheng et al., 2023b)."

- Multiplicity correction: Adjustments to inference that account for estimating or testing many parameters simultaneously. "The multiplicity correction, in this case a chi-square CLT interval, is technically required for the purpose of calculating the ranking, because it ensures all scores are simultaneously contained in their intervals (and the ranking is a function of all the scores)."

- Nonnegative supermartingales: Stochastic processes used to create time-uniform guarantees in sequential analysis. "We also believe our approach to detecting harmful users could be improved and made more formally rigorous by using the theory of nonnegative supermartingales and E-values"

- Pivot bootstrap: A bootstrap method that uses pivotal quantities to improve confidence interval accuracy. "To compute confidence intervals on the BT coefficients, we employ two strategies: (1) the pivot bootstrap (DiCiccio & Efron, 1996)"

- Rank elicitation: Methods for inferring rankings from preferences or comparisons, often using statistical models. "Related topics include probability models (Hunter, 2004; Rao & Kupper, 1967), rank elicitation (Szörényi et al., 2015; Busa-Fekete et al., 2014a;b), and online experiment design (Chernoff, 1992; Karimi et al., 2021)."

- Sandwich estimator (robust standard errors): A robust variance estimator for MLEs that remains valid under model misspecification. "So-called 'sandwich' covariance matrix is used; see Section 5 for details, and see Appendix B for a nonparametric extension of the Bradley-Terry model."

- UMAP: A manifold learning method for dimensionality reduction that preserves local and global structure. "To mitigate the curse of dimensionality for data clustering, we employ UMAP (Uniform Manifold Approximation and Projection) (McInnes et al., 2020) to reduce the embedding dimension from 1,536 to 5."

- Uniform validity: A property where confidence sets or intervals are simultaneously valid across all parameters. "The uniform validity of C directly implies that $\mathbb{P}(\exists m : R_m > \mathrm{rank}(P)_m) \le \alpha$, i.e., with high probability, no model's performance is understated."

- Win matrix: A matrix of pairwise win probabilities indicating how often one model is preferred over another. "One critical goal is to estimate the win matrix: $\theta^*(a) = \mathbb{E}[H_t \mid A_t = a]$, for all $a \in \mathcal{A}$; see the left panel of Figure 1 for an illustration of the (empirical) win matrix."
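
To make the Bradley–Terry and inverse-weighting entries above more concrete, here is a minimal sketch of fitting BT coefficients from pairwise votes. It is not the paper's implementation: it uses the MM iteration described in Hunter (2004) rather than a logistic-regression solver, it ignores ties, and it assumes every model has at least one win. The function names `fit_bradley_terry` and `win_probability`, the `(winner, loser, weight)` record format, and the example votes are all hypothetical.

```python
import math
from collections import defaultdict


def fit_bradley_terry(battles, n_iter=1000, tol=1e-12):
    """Estimate BT coefficients from (winner, loser, weight) records.

    `weight` is a hypothetical per-battle weight, e.g. 1 / P(A_t) for
    inverse weighting; pass 1.0 for an unweighted fit.
    """
    wins = defaultdict(float)    # weighted number of wins per model
    games = defaultdict(float)   # weighted number of battles per unordered pair
    for winner, loser, weight in battles:
        wins[winner] += weight
        games[frozenset((winner, loser))] += weight

    models = sorted({m for pair in games for m in pair})
    if any(wins[m] == 0.0 for m in models):
        raise ValueError("every model needs at least one win for a finite estimate")

    strength = {m: 1.0 for m in models}
    for _ in range(n_iter):
        updated = {}
        for m in models:
            # MM update: weighted wins divided by a sum over opponents.
            denom = sum(
                games[frozenset((m, other))] / (strength[m] + strength[other])
                for other in models
                if other != m and frozenset((m, other)) in games
            )
            updated[m] = wins[m] / denom
        # Fix the scale: strengths are only identified up to a constant factor.
        log_mean = sum(math.log(v) for v in updated.values()) / len(models)
        updated = {m: v / math.exp(log_mean) for m, v in updated.items()}
        change = max(abs(updated[m] - strength[m]) for m in models)
        strength = updated
        if change < tol:
            break

    # Coefficients on the log scale, centered at zero.
    return {m: math.log(s) for m, s in strength.items()}


def win_probability(scores, a, b):
    """Logistic relationship: P(a beats b) = 1 / (1 + exp(score_b - score_a))."""
    return 1.0 / (1.0 + math.exp(scores[b] - scores[a]))


# Hypothetical votes: model "A" beats model "B" in 3 of 4 battles.
votes = [("A", "B", 1.0)] * 3 + [("B", "A", 1.0)]
scores = fit_bradley_terry(votes)
print(round(win_probability(scores, "A", "B"), 3))
```

With only two models, the fitted win probability reduces to the empirical win rate, which is a quick sanity check on the fit. Confidence intervals (bootstrap or sandwich-based) would be computed on top of these point estimates and are not shown here.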
