Towards AI Accountability Infrastructure: Gaps and Opportunities in AI Audit Tooling (2402.17861v3)

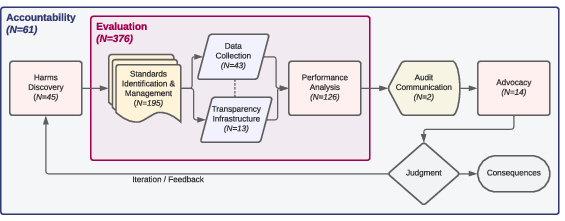

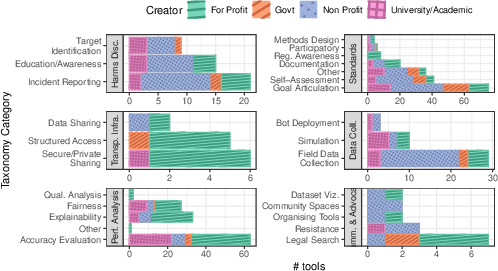

Abstract: Audits are critical mechanisms for identifying the risks and limitations of deployed AI systems. However, the effective execution of AI audits remains incredibly difficult, and practitioners often need to make use of various tools to support their efforts. Drawing on interviews with 35 AI audit practitioners and a landscape analysis of 435 tools, we compare the current ecosystem of AI audit tooling to practitioner needs. While many tools are designed to help set standards and evaluate AI systems, they often fall short in supporting accountability. We outline challenges practitioners faced in their efforts to use AI audit tools and highlight areas for future tool development beyond evaluation -- from harms discovery to advocacy. We conclude that the available resources do not currently support the full scope of AI audit practitioners' needs and recommend that the field move beyond tools for just evaluation and towards more comprehensive infrastructure for AI accountability.

