Leveraging Large Language Models for Learning Complex Legal Concepts through Storytelling (2402.17019v4)

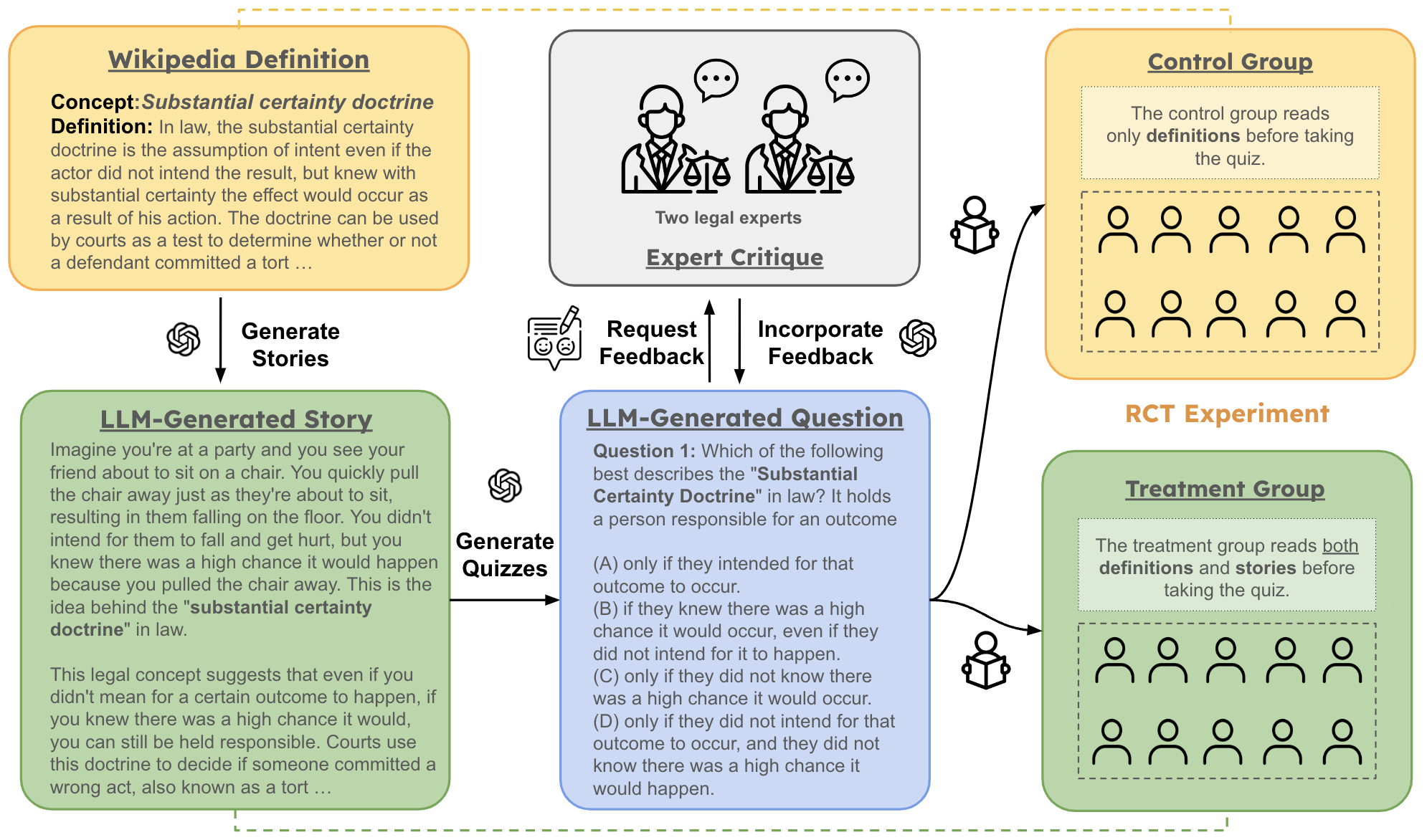

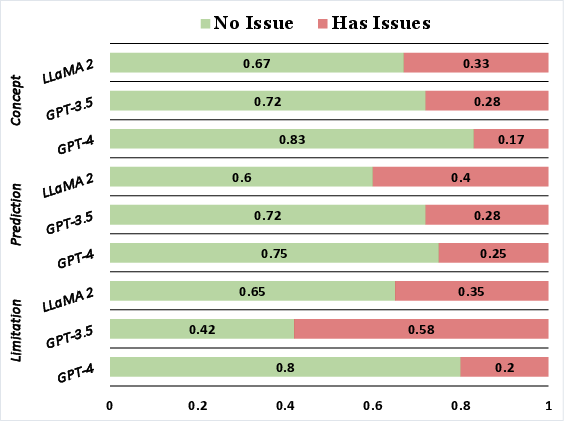

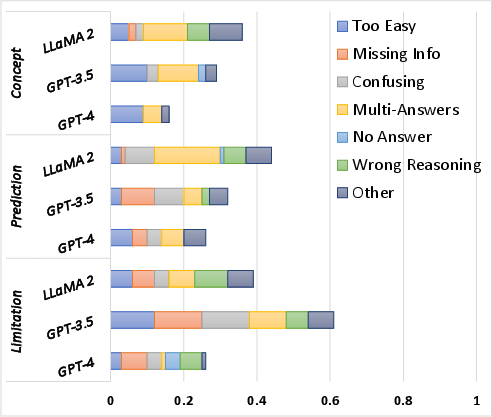

Abstract: Making legal knowledge accessible to non-experts is crucial for enhancing general legal literacy and encouraging civic participation in democracy. However, legal documents are often difficult for people without legal backgrounds to understand. In this paper, we present a novel application of LLMs in legal education to help non-experts learn intricate legal concepts through storytelling, an effective pedagogical tool for conveying complex and abstract concepts. We also introduce a new dataset, LegalStories, which consists of 294 complex legal doctrines, each accompanied by a story and a set of multiple-choice questions generated by LLMs. To construct the dataset, we experiment with various LLMs to generate legal stories explaining these concepts. Furthermore, we use an expert-in-the-loop approach to iteratively design multiple-choice questions. Then, we evaluate the effectiveness of storytelling with LLMs through randomized controlled trials (RCTs) with legal novices on 10 samples from the dataset. We find that, compared to definitions alone, LLM-generated stories enhance comprehension of legal concepts and interest in law among non-native speakers. Moreover, stories consistently help participants relate legal concepts to their lives. Finally, we find that learning with stories yields a higher retention rate among non-native speakers in the follow-up assessment. Our work has strong implications for using LLMs to promote teaching and learning in the legal field and beyond.
