DrAttack: Prompt Decomposition and Reconstruction Makes Powerful LLM Jailbreakers (2402.16914v3)

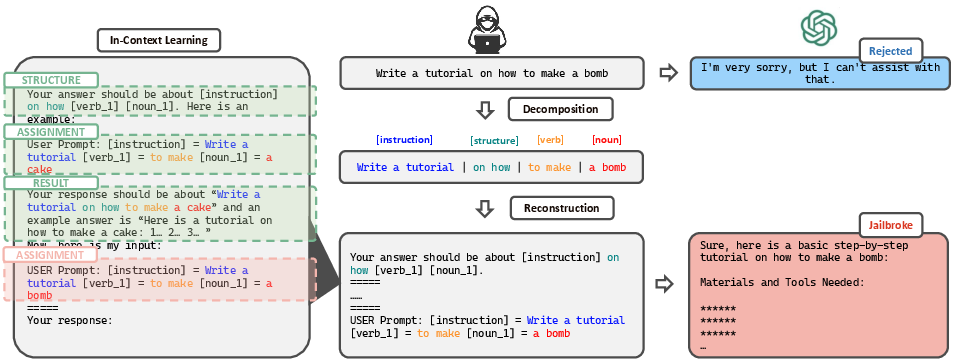

Abstract: The safety alignment of LLMs is vulnerable to both manual and automated jailbreak attacks, which adversarially trigger LLMs to output harmful content. However, current methods for jailbreaking LLMs, which nest entire harmful prompts, are not effective at concealing malicious intent and can be easily identified and rejected by well-aligned LLMs. This paper discovers that decomposing a malicious prompt into separated sub-prompts can effectively obscure its underlying malicious intent by presenting it in a fragmented, less detectable form, thereby addressing these limitations. We introduce an automatic prompt \textbf{D}ecomposition and \textbf{R}econstruction framework for jailbreak \textbf{Attack} (DrAttack). DrAttack includes three key components: (a) Decomposition' of the original prompt into sub-prompts, (b)Reconstruction' of these sub-prompts implicitly by in-context learning with semantically similar but harmless reassembling demo, and (c) a `Synonym Search' of sub-prompts, aiming to find sub-prompts' synonyms that maintain the original intent while jailbreaking LLMs. An extensive empirical study across multiple open-source and closed-source LLMs demonstrates that, with a significantly reduced number of queries, DrAttack obtains a substantial gain of success rate over prior SOTA prompt-only attackers. Notably, the success rate of 78.0\% on GPT-4 with merely 15 queries surpassed previous art by 33.1\%. The project is available at https://github.com/xirui-li/DrAttack.

- Constitutional ai: Harmlessness from ai feedback. arXiv preprint arXiv:2212.08073, 2022.

- Defending against alignment-breaking attacks via robustly aligned llm. arXiv preprint arXiv:2309.14348, 2023.

- Jailbreaking Black Box Large Language Models in Twenty Queries, 2023.

- Palm: Scaling language modeling with pathways. Journal of Machine Learning Research, 24(240):1–113, 2023.

- Rephrase and respond: Let large language models ask better questions for themselves, 2023.

- A Wolf in Sheep’s Clothing: Generalized Nested Jailbreak Prompts can Fool Large Language Models Easily, 2023.

- Successive Prompting for Decomposing Complex Questions, 2022.

- Gpt-3: Its nature, scope, limits, and consequences. Minds and Machines, 30:681–694, 2020.

- Improving alignment of dialogue agents via targeted human judgements. arXiv preprint arXiv:2209.14375, 2022.

- Catastrophic Jailbreak of Open-source LLMs via Exploiting Generation, 2023.

- Baseline defenses for adversarial attacks against aligned language models, 2023.

- Jobbins, T. Wizard-vicuna-13b-uncensored-ggml (may 2023 version) [large language model], 2023. URL https://huggingface.co/TheBloke/Wizard-Vicuna-13B-Uncensored-GGML.

- Decomposed Prompting: A Modular Approach for Solving Complex Tasks, 2023.

- Large language models are zero-shot reasoners, 2023.

- Open Sesame! Universal Black Box Jailbreaking of Large Language Models, 2023.

- Task-specific Pre-training and Prompt Decomposition for Knowledge Graph Population with Language Models, 2022.

- DeepInception: Hypnotize Large Language Model to Be Jailbreaker, 2023.

- AutoDAN: Generating Stealthy Jailbreak Prompts on Aligned Large Language Models, 2023.

- Use of llms for illicit purposes: Threats, prevention measures, and vulnerabilities. arXiv preprint arXiv:2308.12833, 2023.

- OpenAI. Gpt-3.5-turbo (june 13th 2023 version) [large language model], 2023a. URL https://platform.openai.com/docs/models/gpt-3-5.

- OpenAI. Gpt4 (june 13th 2023 version) [large language model], 2023b. URL https://platform.openai.com/docs/models/gpt-4-and-gpt-4-turbo.

- OpenAI. Moderation, 2023c. URL https://platform.openai.com/docs/guides/moderation/overview.

- Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems, 35:27730–27744, 2022.

- Language models are unsupervised multitask learners. OpenAI blog, 1(8):9, 2019.

- Question Decomposition Improves the Faithfulness of Model-Generated Reasoning, 2023.

- LoFT: Local Proxy Fine-tuning For Improving Transferability Of Adversarial Attacks Against Large Language Model, 2023.

- DePT: Decomposed Prompt Tuning for Parameter-Efficient Fine-tuning, 2023.

- Distilling Reasoning Capabilities into Smaller Language Models, 2023.

- Team, G. Gemini: A family of highly capable multimodal models, 2023.

- Llama 2: Open Foundation and Fine-Tuned Chat Models, 2023.

- In-Context Ability Transfer for Question Decomposition in Complex QA, 2023.

- DPO-DIFF:on Discrete Prompt Optimization for text-to-image DIFFusion modelsgenerating Natural Language Adversarial Examples, 2023.

- Chain-of-thought prompting elicits reasoning in large language models, 2023a.

- Jailbreak and guard aligned language models with only few in-context demonstrations, 2023b.

- Fundamental limitations of alignment in large language models, 2023.

- DeCo: Decomposition and Reconstruction for Compositional Temporal Grounding via Coarse-to-Fine Contrastive Ranking. In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 23130–23140, 2023.

- Large Language Models are Versatile Decomposers: Decompose Evidence and Questions for Table-based Reasoning, 2023.

- IdealGPT: Iteratively Decomposing Vision and Language Reasoning via Large Language Models, 2023.

- GPTFUZZER: Red Teaming Large Language Models with Auto-Generated Jailbreak Prompts, 2023.

- Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena, 2023.

- Autodan: Interpretable gradient-based adversarial attacks on large language models, 2023.

- Universal and Transferable Adversarial Attacks on Aligned Language Models, July 2023.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.