Mirror: A Multiple-perspective Self-Reflection Method for Knowledge-rich Reasoning

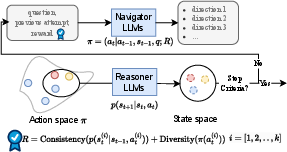

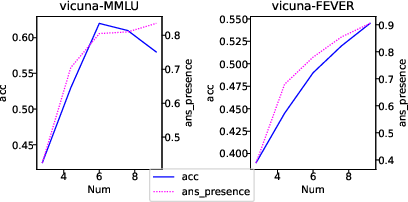

Abstract: While LLMs can iteratively reflect on their own outputs, recent studies have observed that they struggle with knowledge-rich problems when they lack access to external resources. Beyond the inefficiency of LLMs in self-assessment, we also observe that LLMs struggle to revisit their predictions even after receiving explicit negative feedback. We therefore propose Mirror, a Multiple-perspective self-reflection method for knowledge-rich reasoning, to avoid getting stuck at a particular reflection iteration. Mirror enables LLMs to reflect from multiple-perspective clues, achieved through a heuristic interaction between a Navigator and a Reasoner. Without access to ground truth, it guides agents toward diverse yet plausibly reliable reasoning trajectories by encouraging (1) diversity of the directions generated by the Navigator and (2) agreement among strategically induced perturbations in the responses generated by the Reasoner. Experiments on five reasoning datasets demonstrate Mirror's superiority over several contemporary self-reflection approaches. Additionally, ablation studies clearly indicate that our strategies alleviate the aforementioned challenges.
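The two signals named in the abstract, diversity among Navigator directions and agreement among perturbed Reasoner responses, can be illustrated with a minimal Python sketch. This is not the paper's implementation: the token-overlap (Jaccard) similarity, the majority-vote agreement, the linear combination, and the weight `alpha` are all illustrative assumptions, and the function names (`direction_diversity`, `answer_agreement`, `score_step`) are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from itertools import combinations


def jaccard_similarity(a: str, b: str) -> float:
    """Token-set Jaccard similarity; a crude stand-in (assumption) for semantic similarity."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def direction_diversity(directions: list[str]) -> float:
    """Hypothetical diversity signal: 1 minus mean pairwise similarity of Navigator directions."""
    pairs = list(combinations(directions, 2))
    if not pairs:
        return 0.0  # a single direction carries no diversity
    mean_sim = sum(jaccard_similarity(a, b) for a, b in pairs) / len(pairs)
    return 1.0 - mean_sim


def answer_agreement(answers: list[str]) -> tuple[str, float]:
    """Hypothetical agreement signal: majority answer and the fraction of responses matching it."""
    if not answers:
        raise ValueError("need at least one answer")
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / len(answers)


def score_step(directions: list[str], answers: list[str], alpha: float = 0.5) -> tuple[str, float]:
    """Assumed combined score: alpha * diversity + (1 - alpha) * agreement."""
    majority, agreement = answer_agreement(answers)
    diversity = direction_diversity(directions)
    return majority, alpha * diversity + (1 - alpha) * agreement


if __name__ == "__main__":
    dirs = [
        "Check the date of the event",
        "Recall who founded the company",
        "Check the date of the event",
    ]
    print(score_step(dirs, ["B", "B", "A", "B"]))
```

The linear blend makes the trade-off explicit: a step whose directions all repeat one another scores low on diversity even if its answers agree, which mirrors the abstract's aim of not getting stuck on a single reflection path. A faithful implementation would likely use embedding similarity and the paper's own search procedure instead.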
