How NeRFs and 3D Gaussian Splatting are Reshaping SLAM: a Survey (2402.13255v3)

Abstract: Over the past two decades, research in the field of Simultaneous Localization and Mapping (SLAM) has undergone a significant evolution, highlighting its critical role in enabling autonomous exploration of unknown environments. This evolution ranges from hand-crafted methods, through the era of deep learning, to more recent developments focused on Neural Radiance Fields (NeRFs) and 3D Gaussian Splatting (3DGS) representations. Recognizing the growing body of research and the absence of a comprehensive survey on the topic, this paper aims to provide the first comprehensive overview of SLAM progress through the lens of the latest advancements in radiance fields. It sheds light on the background, evolutionary path, and inherent strengths and limitations of these methods, and serves as a fundamental reference that highlights the dynamic progress and specific challenges in the field.

- E. Sucar, S. Liu, J. Ortiz, and A. J. Davison, “imap: Implicit mapping and positioning in real-time,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 6229–6238.

- R. A. Newcombe, S. Izadi, O. Hilliges, D. Molyneaux, D. Kim, A. J. Davison, P. Kohi, J. Shotton, S. Hodges, and A. Fitzgibbon, “Kinectfusion: Real-time dense surface mapping and tracking,” in IEEE ISMAR. IEEE, 2011, pp. 127–136.

- K. Tateno, F. Tombari, I. Laina, and N. Navab, “Cnn-slam: Real-time dense monocular slam with learned depth prediction,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 6243–6252.

- Y. Li, N. Brasch, Y. Wang, N. Navab, and F. Tombari, “Structure-slam: Low-drift monocular slam in indoor environments,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 6583–6590, 2020.

- Z. Zhu, S. Peng, V. Larsson, W. Xu, H. Bao, Z. Cui, M. R. Oswald, and M. Pollefeys, “Nice-slam: Neural implicit scalable encoding for slam,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 12 786–12 796.

- E. Kruzhkov, A. Savinykh, P. Karpyshev, M. Kurenkov, E. Yudin, A. Potapov, and D. Tsetserukou, “Meslam: Memory efficient slam based on neural fields,” in 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC). IEEE, 2022, pp. 430–435.

- S. Zhi, E. Sucar, A. Mouton, I. Haughton, T. Laidlow, and A. J. Davison, “ilabel: Revealing objects in neural fields,” IEEE Robotics and Automation Letters, vol. 8, no. 2, pp. 832–839, 2022.

- T. Hua, H. Bai, Z. Cao, M. Liu, D. Tao, and L. Wang, “Hi-map: Hierarchical factorized radiance field for high-fidelity monocular dense mapping,” arXiv preprint arXiv:2401.03203, 2024.

- M. Li, J. He, G. Jiang, and H. Wang, “Ddn-slam: Real-time dense dynamic neural implicit slam with joint semantic encoding,” arXiv preprint arXiv:2401.01545, 2024.

- R. Mur-Artal, J. M. M. Montiel, and J. D. Tardos, “Orb-slam: a versatile and accurate monocular slam system,” IEEE transactions on robotics, vol. 31, no. 5, pp. 1147–1163, 2015.

- M. Bloesch, J. Czarnowski, R. Clark, S. Leutenegger, and A. J. Davison, “Codeslam—learning a compact, optimisable representation for dense visual slam,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 2560–2568.

- C. Yan, D. Qu, D. Wang, D. Xu, Z. Wang, B. Zhao, and X. Li, “Gs-slam: Dense visual slam with 3d gaussian splatting,” arXiv preprint arXiv:2311.11700, 2023.

- C. Ruan, Q. Zang, K. Zhang, and K. Huang, “Dn-slam: A visual slam with orb features and nerf mapping in dynamic environments,” IEEE Sensors Journal, 2023.

- D. Qu, C. Yan, D. Wang, J. Yin, D. Xu, B. Zhao, and X. Li, “Implicit event-rgbd neural slam,” arXiv preprint arXiv:2311.11013, 2023.

- J. Deng, Q. Wu, X. Chen, S. Xia, Z. Sun, G. Liu, W. Yu, and L. Pei, “Nerf-loam: Neural implicit representation for large-scale incremental lidar odometry and mapping,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 8218–8227.

- H. Li, X. Gu, W. Yuan, L. Yang, Z. Dong, and P. Tan, “Dense rgb slam with neural implicit maps,” in Proceedings of the International Conference on Learning Representations, 2023. [Online]. Available: https://openreview.net/forum?id=QUK1ExlbbA

- E. Sandström, K. Ta, L. V. Gool, and M. R. Oswald, “Uncle-SLAM: Uncertainty learning for dense neural SLAM,” in International Conference on Computer Vision Workshops (ICCVW), 2023.

- Z. Zhu, S. Peng, V. Larsson, Z. Cui, M. R. Oswald, A. Geiger, and M. Pollefeys, “Nicer-slam: Neural implicit scene encoding for rgb slam,” in International Conference on 3D Vision (3DV), March 2024.

- G. Grisetti, R. Kümmerle, C. Stachniss, and W. Burgard, “A tutorial on graph-based slam,” IEEE Intelligent Transportation Systems Magazine, vol. 2, no. 4, pp. 31–43, 2010.

- K. Yousif, A. Bab-Hadiashar, and R. Hoseinnezhad, “An overview to visual odometry and visual slam: Applications to mobile robotics,” Intelligent Industrial Systems, vol. 1, no. 4, pp. 289–311, 2015.

- C. Cadena, L. Carlone, H. Carrillo, Y. Latif, D. Scaramuzza, J. Neira, I. Reid, and J. J. Leonard, “Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age,” IEEE Transactions on robotics, vol. 32, no. 6, pp. 1309–1332, 2016.

- T. Taketomi, H. Uchiyama, and S. Ikeda, “Visual slam algorithms: A survey from 2010 to 2016,” IPSJ Transactions on Computer Vision and Applications, vol. 9, no. 1, pp. 1–11, 2017.

- C. Duan, S. Junginger, J. Huang, K. Jin, and K. Thurow, “Deep learning for visual slam in transportation robotics: A review,” Transportation Safety and Environment, vol. 1, no. 3, pp. 177–184, 2019.

- S. Mokssit, D. B. Licea, B. Guermah, and M. Ghogho, “Deep learning techniques for visual slam: A survey,” IEEE Access, vol. 11, pp. 20 026–20 050, 2023.

- R. A. Newcombe, S. J. Lovegrove, and A. J. Davison, “Dtam: Dense tracking and mapping in real-time,” in 2011 international conference on computer vision. IEEE, 2011, pp. 2320–2327.

- R. F. Salas-Moreno, R. A. Newcombe, H. Strasdat, P. H. Kelly, and A. J. Davison, “Slam++: Simultaneous localisation and mapping at the level of objects,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2013, pp. 1352–1359.

- T. Whelan, S. Leutenegger, R. Salas-Moreno, B. Glocker, and A. Davison, “Elasticfusion: Dense slam without a pose graph.” Robotics: Science and Systems, 2015.

- Z. Teed and J. Deng, “Droid-slam: Deep visual slam for monocular, stereo, and rgb-d cameras,” Advances in neural information processing systems, vol. 34, pp. 16 558–16 569, 2021.

- Q.-Y. Zhou, S. Miller, and V. Koltun, “Elastic fragments for dense scene reconstruction,” in Proceedings of the IEEE International Conference on Computer Vision, 2013, pp. 473–480.

- T. Schops, T. Sattler, and M. Pollefeys, “Bad slam: Bundle adjusted direct rgb-d slam,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 134–144.

- M. Nießner, M. Zollhöfer, S. Izadi, and M. Stamminger, “Real-time 3d reconstruction at scale using voxel hashing,” ACM Transactions on Graphics (ToG), vol. 32, no. 6, pp. 1–11, 2013.

- F. Steinbrucker, C. Kerl, and D. Cremers, “Large-scale multi-resolution surface reconstruction from rgb-d sequences,” in Proceedings of the IEEE International Conference on Computer Vision, 2013, pp. 3264–3271.

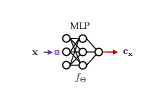

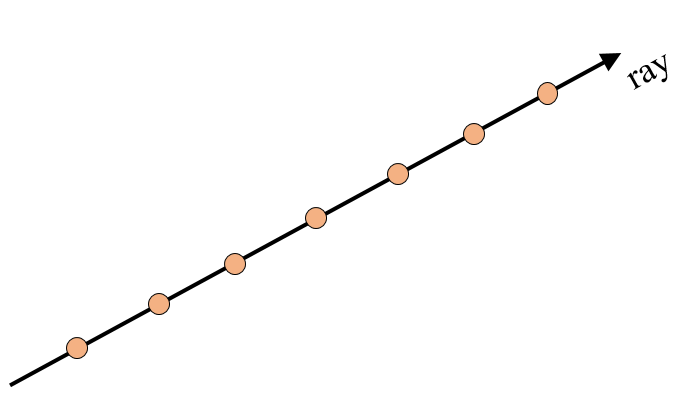

- B. Mildenhall, P. P. Srinivasan, M. Tancik, J. T. Barron, R. Ramamoorthi, and R. Ng, “Nerf: Representing scenes as neural radiance fields for view synthesis,” Communications of the ACM, vol. 65, no. 1, pp. 99–106, 2021.

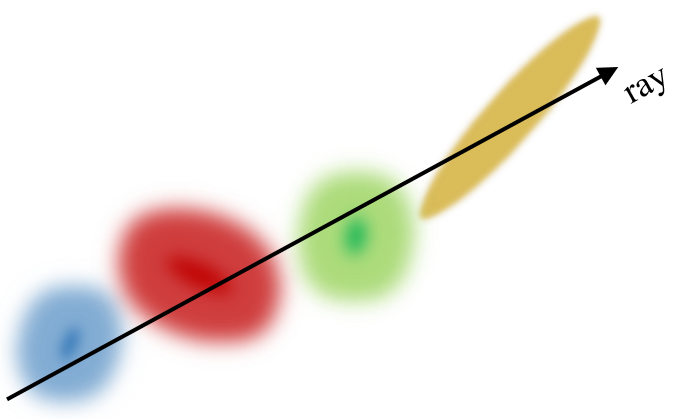

- B. Kerbl, G. Kopanas, T. Leimkühler, and G. Drettakis, “3d gaussian splatting for real-time radiance field rendering,” ACM Transactions on Graphics, vol. 42, no. 4, 2023.

- L. Mescheder, M. Oechsle, M. Niemeyer, S. Nowozin, and A. Geiger, “Occupancy networks: Learning 3d reconstruction in function space,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 4460–4470.

- Z. Chen and H. Zhang, “Learning implicit fields for generative shape modeling,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- J. J. Park, P. Florence, J. Straub, R. Newcombe, and S. Lovegrove, “Deepsdf: Learning continuous signed distance functions for shape representation,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 165–174.

- Y. Xie, T. Takikawa, S. Saito, O. Litany, S. Yan, N. Khan, F. Tombari, J. Tompkin, V. Sitzmann, and S. Sridhar, “Neural fields in visual computing and beyond,” in Computer Graphics Forum, vol. 41, no. 2. Wiley Online Library, 2022, pp. 641–676.

- H. Durrant-Whyte and T. Bailey, “Simultaneous localization and mapping: part i,” IEEE robotics & automation magazine, vol. 13, no. 2, pp. 99–110, 2006.

- T. Bailey and H. Durrant-Whyte, “Simultaneous localization and mapping (slam): Part ii,” IEEE robotics & automation magazine, vol. 13, no. 3, pp. 108–117, 2006.

- S. Saeedi, M. Trentini, M. Seto, and H. Li, “Multiple-robot simultaneous localization and mapping: A review,” Journal of Field Robotics, vol. 33, no. 1, pp. 3–46, 2016.

- M. R. U. Saputra, A. Markham, and N. Trigoni, “Visual slam and structure from motion in dynamic environments: A survey,” ACM Computing Surveys (CSUR), vol. 51, no. 2, pp. 1–36, 2018.

- M. Zaffar, S. Ehsan, R. Stolkin, and K. M. Maier, “Sensors, slam and long-term autonomy: A review,” in 2018 NASA/ESA Conference on Adaptive Hardware and Systems (AHS). IEEE, 2018, pp. 285–290.

- J. Yang, Y. Li, L. Cao, Y. Jiang, L. Sun, and Q. Xie, “A survey of slam research based on lidar sensors,” International Journal of Sensors, vol. 1, no. 1, p. 1003, 2019.

- W. Zhao, T. He, A. Y. M. Sani, and T. Yao, “Review of slam techniques for autonomous underwater vehicles,” in Proceedings of the 2019 International Conference on Robotics, Intelligent Control and Artificial Intelligence, 2019, pp. 384–389.

- C. Chen, B. Wang, C. X. Lu, N. Trigoni, and A. Markham, “A survey on deep learning for localization and mapping: Towards the age of spatial machine intelligence,” arXiv preprint arXiv:2006.12567, 2020.

- W. Chen, G. Shang, A. Ji, C. Zhou, X. Wang, C. Xu, Z. Li, and K. Hu, “An overview on visual slam: From tradition to semantic,” Remote Sensing, vol. 14, no. 13, p. 3010, 2022.

- I. A. Kazerouni, L. Fitzgerald, G. Dooly, and D. Toal, “A survey of state-of-the-art on visual slam,” Expert Systems with Applications, vol. 205, p. 117734, 2022.

- Y. Tang, C. Zhao, J. Wang, C. Zhang, Q. Sun, W. X. Zheng, W. Du, F. Qian, and J. Kurths, “Perception and navigation in autonomous systems in the era of learning: A survey,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- M. Zollhöfer, P. Stotko, A. Görlitz, C. Theobalt, M. Nießner, R. Klein, and A. Kolb, “State of the art on 3d reconstruction with rgb-d cameras,” in Computer graphics forum, vol. 37, no. 2. Wiley Online Library, 2018, pp. 625–652.

- J. A. Placed, J. Strader, H. Carrillo, N. Atanasov, V. Indelman, L. Carlone, and J. A. Castellanos, “A survey on active simultaneous localization and mapping: State of the art and new frontiers,” IEEE Transactions on Robotics, 2023.

- A. Dai, M. Nießner, M. Zollhöfer, S. Izadi, and C. Theobalt, “Bundlefusion: Real-time globally consistent 3d reconstruction using on-the-fly surface reintegration,” vol. 36, no. 4, p. 1, 2017.

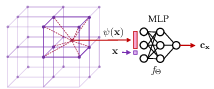

- T. Müller, A. Evans, C. Schied, and A. Keller, “Instant neural graphics primitives with a multiresolution hash encoding,” ACM Trans. Graph., vol. 41, no. 4, pp. 102:1–102:15, Jul. 2022. [Online]. Available: https://doi.org/10.1145/3528223.3530127

- Z. Li, T. Müller, A. Evans, R. H. Taylor, M. Unberath, M.-Y. Liu, and C.-H. Lin, “Neuralangelo: High-fidelity neural surface reconstruction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 8456–8465.

- S. Peng, M. Niemeyer, L. Mescheder, M. Pollefeys, and A. Geiger, “Convolutional occupancy networks,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part III 16. Springer, 2020, pp. 523–540.

- Q. Xu, Z. Xu, J. Philip, S. Bi, Z. Shu, K. Sunkavalli, and U. Neumann, “Point-nerf: Point-based neural radiance fields,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 5438–5448.

- K. Deng, A. Liu, J.-Y. Zhu, and D. Ramanan, “Depth-supervised nerf: Fewer views and faster training for free,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 12 882–12 891.

- B. Roessle, J. T. Barron, B. Mildenhall, P. P. Srinivasan, and M. Nießner, “Dense depth priors for neural radiance fields from sparse input views,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 12 892–12 901.

- M. Niemeyer, J. T. Barron, B. Mildenhall, M. S. Sajjadi, A. Geiger, and N. Radwan, “Regnerf: Regularizing neural radiance fields for view synthesis from sparse inputs,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 5480–5490.

- K. Gao, Y. Gao, H. He, D. Lu, L. Xu, and J. Li, “Nerf: Neural radiance field in 3d vision, a comprehensive review,” arXiv preprint arXiv:2210.00379, 2022.

- S. Fridovich-Keil, A. Yu, M. Tancik, Q. Chen, B. Recht, and A. Kanazawa, “Plenoxels: Radiance fields without neural networks,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 5501–5510.

- W. E. Lorensen and H. E. Cline, “Marching cubes: A high resolution 3d surface construction algorithm,” in Seminal graphics: pioneering efforts that shaped the field, 1998, pp. 347–353.

- P. Wang, L. Liu, Y. Liu, C. Theobalt, T. Komura, and W. Wang, “Neus: Learning neural implicit surfaces by volume rendering for multi-view reconstruction,” arXiv preprint arXiv:2106.10689, 2021.

- D. Azinović, R. Martin-Brualla, D. B. Goldman, M. Nießner, and J. Thies, “Neural rgb-d surface reconstruction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 6290–6301.

- G. Chen and W. Wang, “A survey on 3d gaussian splatting,” arXiv preprint arXiv:2401.03890, 2024.

- J. Sturm, N. Engelhard, F. Endres, W. Burgard, and D. Cremers, “A benchmark for the evaluation of rgb-d slam systems,” in 2012 IEEE/RSJ international conference on intelligent robots and systems. IEEE, 2012, pp. 573–580.

- A. Dai, A. X. Chang, M. Savva, M. Halber, T. Funkhouser, and M. Nießner, “Scannet: Richly-annotated 3d reconstructions of indoor scenes,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 5828–5839.

- J. Straub, T. Whelan, L. Ma, Y. Chen, E. Wijmans, S. Green, J. J. Engel, R. Mur-Artal, C. Ren, S. Verma, A. Clarkson, M. Yan, B. Budge, Y. Yan, X. Pan, J. Yon, Y. Zou, K. Leon, N. Carter, J. Briales, T. Gillingham, E. Mueggler, L. Pesqueira, M. Savva, D. Batra, H. M. Strasdat, R. D. Nardi, M. Goesele, S. Lovegrove, and R. Newcombe, “The Replica dataset: A digital replica of indoor spaces,” arXiv preprint arXiv:1906.05797, 2019.

- A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? the kitti vision benchmark suite,” in Conference on Computer Vision and Pattern Recognition (CVPR), 2012.

- M. Ramezani, Y. Wang, M. Camurri, D. Wisth, M. Mattamala, and M. Fallon, “The newer college dataset: Handheld lidar, inertial and vision with ground truth,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 4353–4360.

- X. Yang, H. Li, H. Zhai, Y. Ming, Y. Liu, and G. Zhang, “Vox-fusion: Dense tracking and mapping with voxel-based neural implicit representation,” in 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR). IEEE, 2022, pp. 499–507.

- M. M. Johari, C. Carta, and F. Fleuret, “Eslam: Efficient dense slam system based on hybrid representation of signed distance fields,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2023, pp. 17 408–17 419.

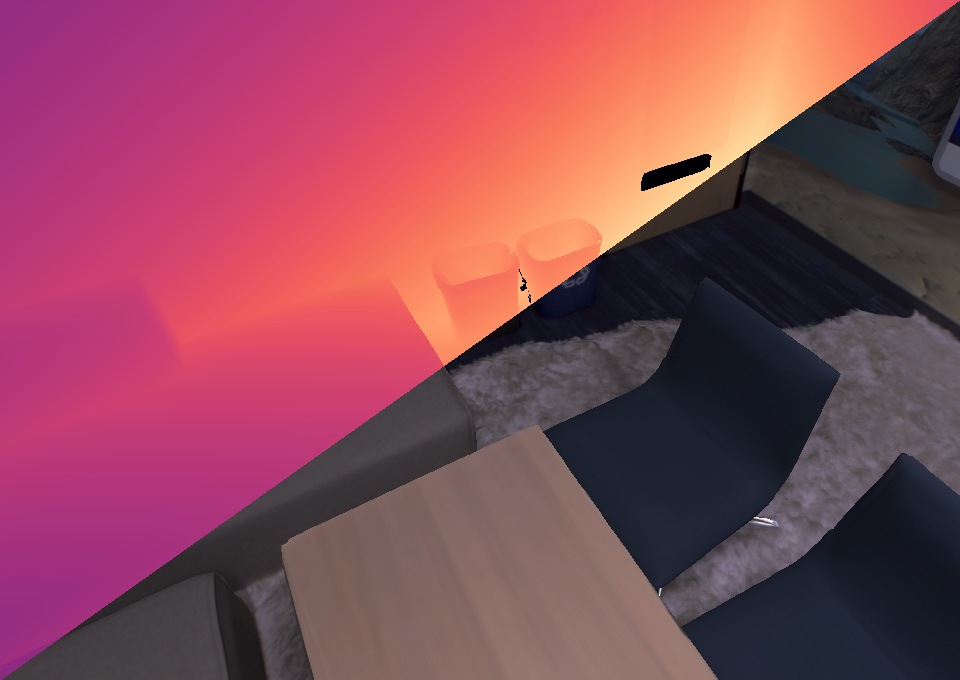

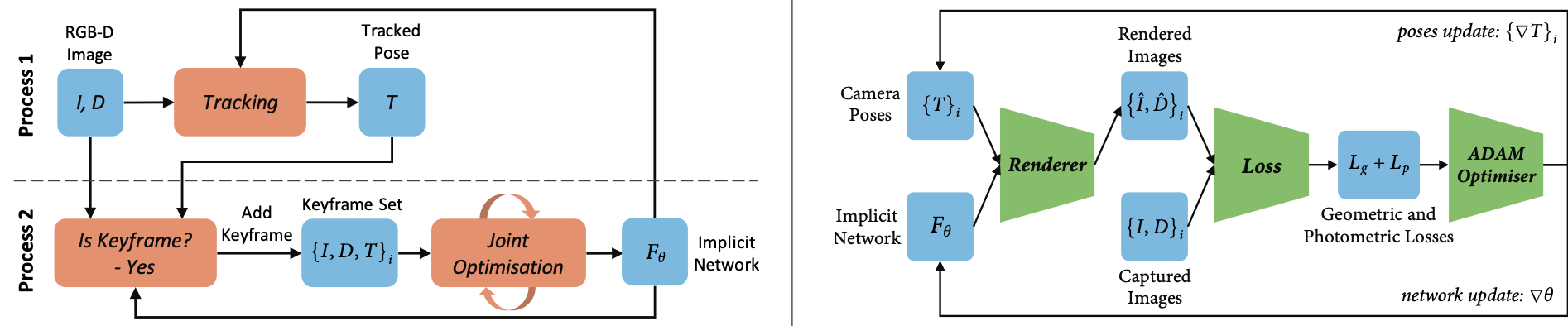

- H. Wang, J. Wang, and L. Agapito, “Co-slam: Joint coordinate and sparse parametric encodings for neural real-time slam,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2023, pp. 13 293–13 302.

- Y. Zhang, F. Tosi, S. Mattoccia, and M. Poggi, “Go-slam: Global optimization for consistent 3d instant reconstruction,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 3727–3737.

- Z. Teed and J. Deng, “Droid-slam: Deep visual slam for monocular, stereo, and rgb-d cameras,” NeurIPS, vol. 34, pp. 16 558–16 569, 2021.

- E. Sandström, Y. Li, L. Van Gool, and M. R. Oswald, “Point-slam: Dense neural point cloud-based slam,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2023.

- L. Xinyang, L. Yijin, T. Yanbin, B. Hujun, Z. Guofeng, Z. Yinda, and C. Zhaopeng, “Multi-modal neural radiance field for monocular dense slam with a light-weight tof sensor,” in International Conference on Computer Vision (ICCV), 2023.

- P. Hu and Z. Han, “Learning neural implicit through volume rendering with attentive depth fusion priors,” in Advances in Neural Information Processing Systems (NeurIPS), 2023.

- M. Li, J. He, Y. Wang, and H. Wang, “End-to-end rgb-d slam with multi-mlps dense neural implicit representations,” IEEE Robotics and Automation Letters, 2023.

- A. L. Teigen, Y. Park, A. Stahl, and R. Mester, “Rgb-d mapping and tracking in a plenoxel radiance field,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2024, pp. 3342–3351.

- H. Wang, Y. Cao, X. Wei, Y. Shou, L. Shen, Z. Xu, and K. Ren, “Structerf-slam: Neural implicit representation slam for structural environments,” Computers & Graphics, p. 103893, 2024.

- R. Mur-Artal and J. D. Tardós, “Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras,” IEEE transactions on robotics, vol. 33, no. 5, pp. 1255–1262, 2017.

- P. F. Felzenszwalb and D. P. Huttenlocher, “Efficient graph-based image segmentation,” International journal of computer vision, vol. 59, pp. 167–181, 2004.

- Y. Ming, W. Ye, and A. Calway, “idf-slam: End-to-end rgb-d slam with neural implicit mapping and deep feature tracking,” arXiv preprint arXiv:2209.07919, 2022.

- M. El Banani, L. Gao, and J. Johnson, “Unsupervisedr&r: Unsupervised point cloud registration via differentiable rendering,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 7129–7139.

- W. Guo, B. Wang, and L. Chen, “Neuv-slam: Fast neural multiresolution voxel optimization for rgbd dense slam,” 2024.

- H. Huang, L. Li, H. Cheng, and S.-K. Yeung, “Photo-slam: Real-time simultaneous localization and photorealistic mapping for monocular, stereo, and rgb-d cameras,” arXiv preprint arXiv:2311.16728, 2023.

- C. Campos, R. Elvira, J. J. G. Rodríguez, J. M. Montiel, and J. D. Tardós, “Orb-slam3: An accurate open-source library for visual, visual–inertial, and multimap slam,” IEEE Transactions on Robotics, vol. 37, no. 6, pp. 1874–1890, 2021.

- N. Keetha, J. Karhade, K. M. Jatavallabhula, G. Yang, S. Scherer, D. Ramanan, and J. Luiten, “Splatam: Splat, track & map 3d gaussians for dense rgb-d slam,” arXiv preprint arXiv:2312.02126, 2023.

- H. Matsuki, R. Murai, P. H. Kelly, and A. J. Davison, “Gaussian splatting slam,” arXiv preprint arXiv:2312.06741, 2023.

- V. Yugay, Y. Li, T. Gevers, and M. R. Oswald, “Gaussian-slam: Photo-realistic dense slam with gaussian splatting,” arXiv preprint arXiv:2312.10070, 2023.

- J. Park, Q.-Y. Zhou, and V. Koltun, “Colored point cloud registration revisited,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 143–152.

- J. Hu, M. Mao, H. Bao, G. Zhang, and Z. Cui, “CP-SLAM: Collaborative neural point-based SLAM system,” in Thirty-seventh Conference on Neural Information Processing Systems, 2023. [Online]. Available: https://openreview.net/forum?id=dFSeZm6dTC

- B. Xiang, Y. Sun, Z. Xie, X. Yang, and Y. Wang, “Nisb-map: Scalable mapping with neural implicit spatial block,” IEEE Robotics and Automation Letters, 2023.

- S. Liu and J. Zhu, “Efficient map fusion for multiple implicit slam agents,” IEEE Transactions on Intelligent Vehicles, 2023.

- Y. Tang, J. Zhang, Z. Yu, H. Wang, and K. Xu, “Mips-fusion: Multi-implicit-submaps for scalable and robust online neural rgb-d reconstruction,” ACM Transactions on Graphics (TOG), vol. 42, no. 6, pp. 1–16, 2023.

- H. Matsuki, K. Tateno, M. Niemeyer, and F. Tombari, “Newton: Neural view-centric mapping for on-the-fly large-scale slam,” arXiv preprint arXiv:2303.13654, 2023.

- Y. Mao, X. Yu, K. Wang, Y. Wang, R. Xiong, and Y. Liao, “Ngel-slam: Neural implicit representation-based global consistent low-latency slam system,” arXiv preprint arXiv:2311.09525, 2023.

- T. Deng, G. Shen, T. Qin, J. Wang, W. Zhao, J. Wang, D. Wang, and W. Chen, “Plgslam: Progressive neural scene represenation with local to global bundle adjustment,” arXiv preprint arXiv:2312.09866, 2023.

- L. Liso, E. Sandström, V. Yugay, L. V. Gool, and M. R. Oswald, “Loopy-slam: Loopy-slam: Dense neural slam with loop closures,” arXiv preprint arXiv:2402.09944, 2024.

- K. Mazur, E. Sucar, and A. J. Davison, “Feature-realistic neural fusion for real-time, open set scene understanding,” in 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2023, pp. 8201–8207.

- M. Tan and Q. Le, “Efficientnet: Rethinking model scaling for convolutional neural networks,” in International conference on machine learning. PMLR, 2019, pp. 6105–6114.

- M. Caron, H. Touvron, I. Misra, H. Jégou, J. Mairal, P. Bojanowski, and A. Joulin, “Emerging properties in self-supervised vision transformers,” in Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 9650–9660.

- X. Kong, S. Liu, M. Taher, and A. J. Davison, “vmap: Vectorised object mapping for neural field slam,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2023, pp. 952–961.

- Y. Haghighi, S. Kumar, J. P. Thiran, and L. Van Gool, “Neural implicit dense semantic slam,” arXiv preprint arXiv:2304.14560, 2023.

- B. Cheng, A. G. Schwing, and A. Kirillov, “Per-pixel classification is not all you need for semantic segmentation,” 2021.

- S. Zhu, G. Wang, H. Blum, J. Liu, L. Song, M. Pollefeys, and H. Wang, “Sni-slam: Semantic neural implicit slam,” arXiv preprint arXiv:2311.11016, 2023.

- M. Oquab, T. Darcet, T. Moutakanni, H. Vo, M. Szafraniec, V. Khalidov, P. Fernandez, D. Haziza, F. Massa, A. El-Nouby et al., “Dinov2: Learning robust visual features without supervision,” arXiv preprint arXiv:2304.07193, 2023.

- K. Li, M. Niemeyer, N. Navab, and F. Tombari, “Dns slam: Dense neural semantic-informed slam,” arXiv preprint arXiv:2312.00204, 2023.

- M. Li, S. Liu, and H. Zhou, “Sgs-slam: Semantic gaussian splatting for neural dense slam,” 2024.

- A. Kirillov, E. Mintun, N. Ravi, H. Mao, C. Rolland, L. Gustafson, T. Xiao, S. Whitehead, A. C. Berg, W.-Y. Lo et al., “Segment anything,” arXiv preprint arXiv:2304.02643, 2023.

- M. A. Karaoglu, H. Schieber, N. Schischka, M. Görgülü, F. Grötzner, A. Ladikos, D. Roth, N. Navab, and B. Busam, “Dynamon: Motion-aware fast and robust camera localization for dynamic nerf,” arXiv preprint arXiv:2309.08927, 2023.

- L.-C. Chen, G. Papandreou, F. Schroff, and H. Adam, “Rethinking atrous convolution for semantic image segmentation,” arXiv preprint arXiv:1706.05587, 2017.

- S. F. Bhat, R. Birkl, D. Wofk, P. Wonka, and M. Müller, “Zoedepth: Zero-shot transfer by combining relative and metric depth,” arXiv preprint arXiv:2302.12288, 2023.

- Z. Xu, J. Niu, Q. Li, T. Ren, and C. Chen, “Nid-slam: Neural implicit representation-based rgb-d slam in dynamic environments,” arXiv preprint arXiv:2401.01189, 2024.

- D. Lisus, C. Holmes, and S. Waslander, “Towards open world nerf-based slam,” in 2023 20th Conference on Robots and Vision (CRV), 2023, pp. 37–44.

- C.-M. Chung, Y.-C. Tseng, Y.-C. Hsu, X.-Q. Shi, Y.-H. Hua, J.-F. Yeh, W.-C. Chen, Y.-T. Chen, and W. H. Hsu, “Orbeez-slam: A real-time monocular visual slam with orb features and nerf-realized mapping,” in 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2023, pp. 9400–9406.

- T. Hua, H. Bai, Z. Cao, and L. Wang, “Fmapping: Factorized efficient neural field mapping for real-time dense rgb slam,” arXiv preprint arXiv:2306.00579, 2023.

- J. Lin, A. Nachkov, S. Peng, L. Van Gool, and D. P. Paudel, “Ternary-type opacity and hybrid odometry for rgb-only nerf-slam,” arXiv preprint arXiv:2312.13332, 2023.

- H. Matsuki, E. Sucar, T. Laidow, K. Wada, R. Scona, and A. J. Davison, “imode: Real-time incremental monocular dense mapping using neural field,” in 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2023, pp. 4171–4177.

- F. Ma and S. Karaman, “Sparse-to-dense: Depth prediction from sparse depth samples and a single image,” 2018.

- W. Zhang, T. Sun, S. Wang, Q. Cheng, and N. Haala, “Hi-slam: Monocular real-time dense mapping with hybrid implicit fields,” IEEE Robotics and Automation Letters, 2023.

- A. Eftekhar, A. Sax, J. Malik, and A. Zamir, “Omnidata: A scalable pipeline for making multi-task mid-level vision datasets from 3d scans,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 10 786–10 796.

- H. Xu, J. Zhang, J. Cai, H. Rezatofighi, and D. Tao, “Gmflow: Learning optical flow via global matching,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 8121–8130.

- J. Naumann, B. Xu, S. Leutenegger, and X. Zuo, “Nerf-vo: Real-time sparse visual odometry with neural radiance fields,” arXiv preprint arXiv:2312.13471, 2023.

- Z. Teed, L. Lipson, and J. Deng, “Deep patch visual odometry,” arXiv preprint arXiv:2208.04726, 2022.

- H. Zhou, Z. Guo, S. Liu, L. Zhang, Q. Wang, Y. Ren, and M. Li, “Mod-slam: Monocular dense mapping for unbounded 3d scene reconstruction,” 2024.

- R. Ranftl, A. Bochkovskiy, and V. Koltun, “Vision transformers for dense prediction,” ICCV, 2021.

- X. Han, H. Liu, Y. Ding, and L. Yang, “Ro-map: Real-time multi-object mapping with neural radiance fields,” IEEE Robotics and Automation Letters, vol. 8, no. 9, pp. 5950–5957, 2023.

- A. Rosinol, J. J. Leonard, and L. Carlone, “Nerf-slam: Real-time dense monocular slam with neural radiance fields,” in 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2023, pp. 3437–3444.

- S. Isaacson, P.-C. Kung, M. Ramanagopal, R. Vasudevan, and K. A. Skinner, “Loner: Lidar only neural representations for real-time slam,” IEEE Robotics and Automation Letters, 2023.

- S. Rusinkiewicz and M. Levoy, “Efficient variants of the icp algorithm,” in Proceedings third international conference on 3-D digital imaging and modeling. IEEE, 2001, pp. 145–152.

- Y. Pan, X. Zhong, L. Wiesmann, T. Posewsky, J. Behley, and C. Stachniss, “Pin-slam: Lidar slam using a point-based implicit neural representation for achieving global map consistency,” arXiv preprint arXiv:2401.09101, 2024.

- S. Hong, J. He, X. Zheng, H. Wang, H. Fang, K. Liu, C. Zheng, and S. Shen, “Liv-gaussmap: Lidar-inertial-visual fusion for real-time 3d radiance field map rendering,” arXiv preprint arXiv:2401.14857, 2024.

- M. Burri, J. Nikolic, P. Gohl, T. Schneider, J. Rehder, S. Omari, M. W. Achtelik, and R. Siegwart, “The euroc micro aerial vehicle datasets,” The International Journal of Robotics Research, vol. 35, no. 10, pp. 1157–1163, 2016.

- B. Glocker, S. Izadi, J. Shotton, and A. Criminisi, “Real-time rgb-d camera relocalization,” in 2013 IEEE International Symposium on Mixed and Augmented Reality (ISMAR). IEEE, 2013, pp. 173–179.

- C. Yeshwanth, Y.-C. Liu, M. Nießner, and A. Dai, “Scannet++: A high-fidelity dataset of 3d indoor scenes,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 12–22.

- Y. Liu, Y. Fu, F. Chen, B. Goossens, W. Tao, and H. Zhao, “Simultaneous localization and mapping related datasets: A comprehensive survey,” arXiv preprint arXiv:2102.04036, 2021.

- Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: from error visibility to structural similarity,” IEEE transactions on image processing, vol. 13, no. 4, pp. 600–612, 2004.

- R. Zhang, P. Isola, A. A. Efros, E. Shechtman, and O. Wang, “The unreasonable effectiveness of deep features as a perceptual metric,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 586–595.

- T. Müller, B. McWilliams, F. Rousselle, M. Gross, and J. Novák, “Neural importance sampling,” ACM Transactions on Graphics (ToG), vol. 38, no. 5, pp. 1–19, 2019.

- E. Rublee, V. Rabaud, K. Konolige, and G. Bradski, “Orb: An efficient alternative to sift or surf,” in 2011 International conference on computer vision. Ieee, 2011, pp. 2564–2571.

- R. Arandjelovic, P. Gronat, A. Torii, T. Pajdla, and J. Sivic, “Netvlad: Cnn architecture for weakly supervised place recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 5297–5307.

- B. Cheng, I. Misra, A. G. Schwing, A. Kirillov, and R. Girdhar, “Masked-attention mask transformer for universal image segmentation,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 1290–1299.

- A. Cao and J. Johnson, “Hexplane: A fast representation for dynamic scenes,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 130–141.

- R. Mur-Artal and J. D. Tardós, “Probabilistic semi-dense mapping from highly accurate feature-based monocular slam.” in Robotics: Science and Systems, vol. 2015. Rome, 2015.

- A. Gropp, L. Yariv, N. Haim, M. Atzmon, and Y. Lipman, “Implicit geometric regularization for learning shapes,” arXiv preprint arXiv:2002.10099, 2020.

- R. Ranftl, K. Lasinger, D. Hafner, K. Schindler, and V. Koltun, “Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 3, 2022.

- M. Tancik, E. Weber, E. Ng, R. Li, B. Yi, T. Wang, A. Kristoffersen, J. Austin, K. Salahi, A. Ahuja et al., “Nerfstudio: A modular framework for neural radiance field development,” in ACM SIGGRAPH 2023 Conference Proceedings, 2023, pp. 1–12.

- J. Lin, “Divergence measures based on the shannon entropy,” IEEE Transactions on Information theory, vol. 37, no. 1, pp. 145–151, 1991.

- J. L. Schönberger, E. Zheng, M. Pollefeys, and J.-M. Frahm, “Pixelwise view selection for unstructured multi-view stereo,” in European Conference on Computer Vision (ECCV), 2016.

- A. Zeng, S. Song, M. Nießner, M. Fisher, J. Xiao, and T. Funkhouser, “3dmatch: Learning local geometric descriptors from rgb-d reconstructions,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1802–1811.

- Y. Pan, P. Xiao, Y. He, Z. Shao, and Z. Li, “Mulls: Versatile lidar slam via multi-metric linear least square,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 11 633–11 640.

- P. Dellenbach, J.-E. Deschaud, B. Jacquet, and F. Goulette, “Ct-icp: Real-time elastic lidar odometry with loop closure,” in 2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022, pp. 5580–5586.

- M. Yokozuka, K. Koide, S. Oishi, and A. Banno, “Litamin2: Ultra light lidar-based slam using geometric approximation applied with kl-divergence,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 11 619–11 625.

- J. Behley and C. Stachniss, “Efficient surfel-based slam using 3d laser range data in urban environments.” in Robotics: Science and Systems, vol. 2018, 2018, p. 59.

- J. Ruan, B. Li, Y. Wang, and Y. Sun, “Slamesh: Real-time lidar simultaneous localization and meshing,” arXiv preprint arXiv:2303.05252, 2023.

- X. Liu, Z. Liu, F. Kong, and F. Zhang, “Large-scale lidar consistent mapping using hierarchical lidar bundle adjustment,” IEEE Robotics and Automation Letters, vol. 8, no. 3, pp. 1523–1530, 2023.

- A. Guédon and V. Lepetit, “Sugar: Surface-aligned gaussian splatting for efficient 3d mesh reconstruction and high-quality mesh rendering,” arXiv preprint arXiv:2311.12775, 2023.
