Real-time High-resolution View Synthesis of Complex Scenes with Explicit 3D Visibility Reasoning (2402.12886v1)

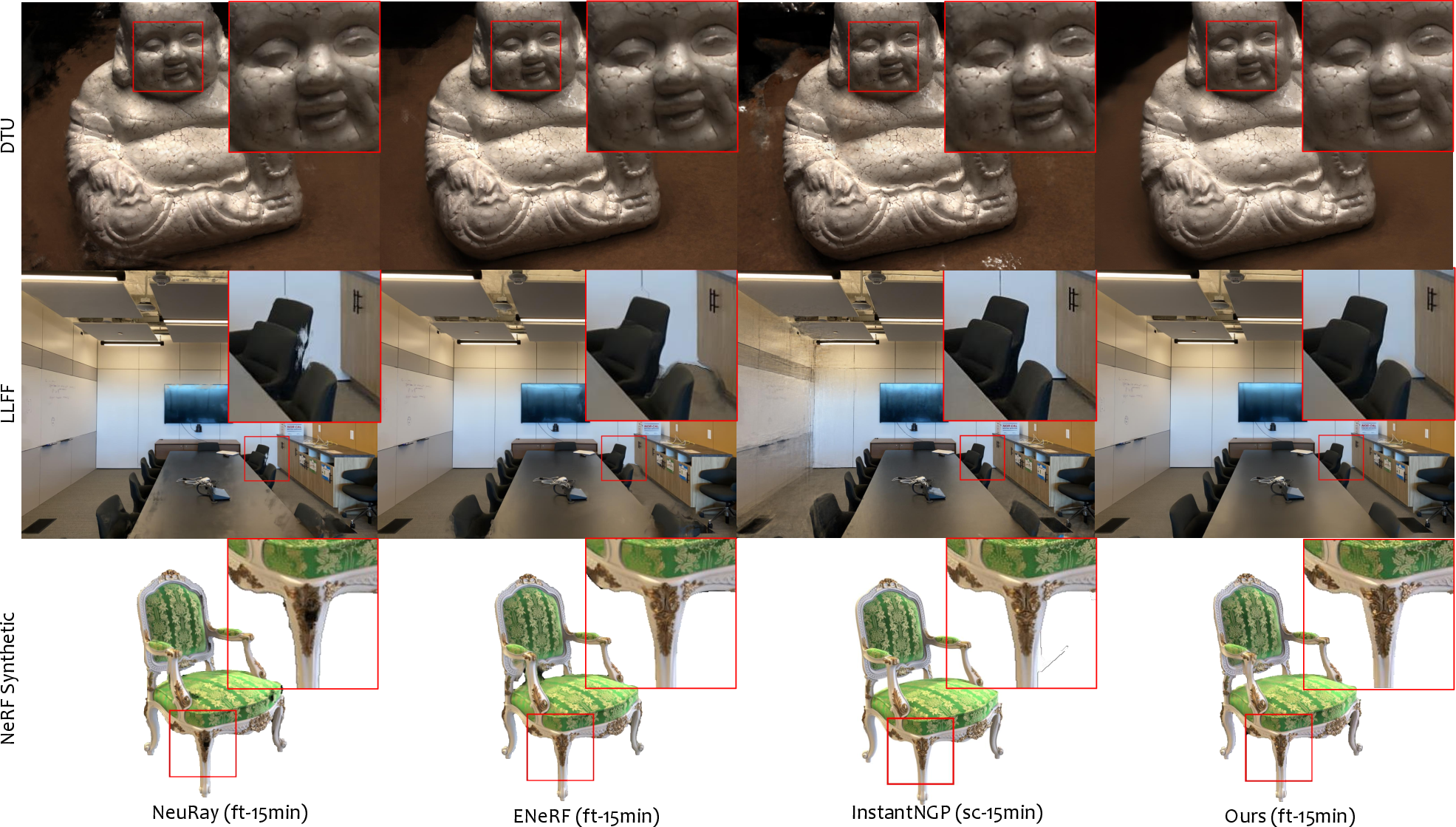

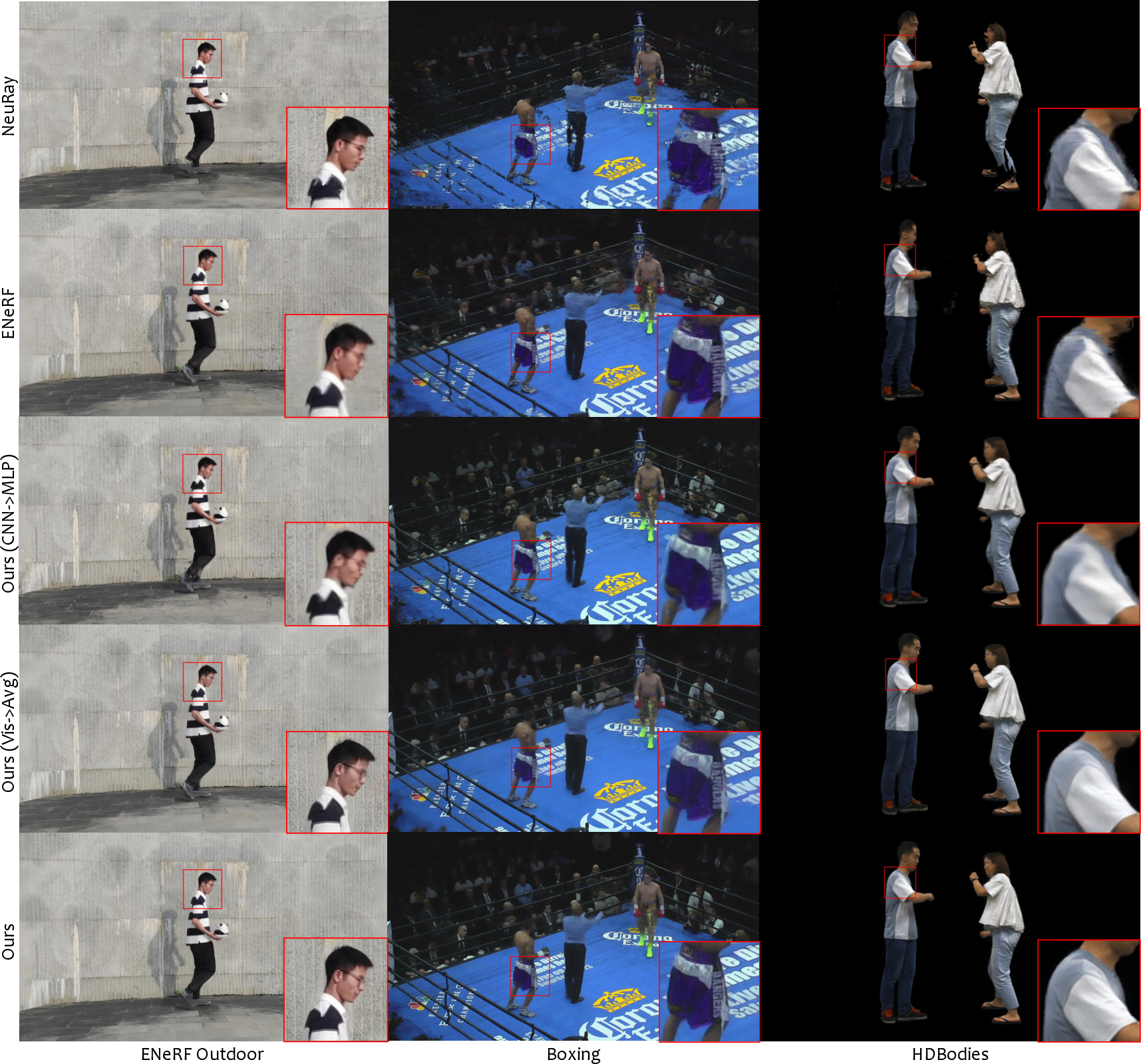

Abstract: Rendering photo-realistic novel-view images of complex scenes has been a long-standing challenge in computer graphics. In recent years, view synthesis research has made substantial progress in both rendering quality and rendering speed. However, when complex dynamic scenes are captured from sparse views, rendering quality remains limited by occlusions. Moreover, rendering high-resolution images of dynamic scenes is still far from real-time. In this work, we propose a generalizable view synthesis method that renders high-resolution novel-view images of complex static and dynamic scenes from sparse views in real time. To address the occlusion problems caused by the sparsity of the input views and the complexity of the captured scenes, we introduce an explicit 3D visibility reasoning approach that efficiently estimates the visibility of each sampled 3D point with respect to the input views. The proposed visibility reasoning is fully differentiable and integrates seamlessly into the volume rendering pipeline, which lets us train our networks with only multi-view images as supervision while refining geometry and texture simultaneously. In addition, each module in our pipeline is designed to avoid time-consuming MLP queries and to improve the quality of high-resolution renderings, enabling real-time rendering of high-resolution novel views. Experimental results show that our method outperforms previous view synthesis methods in both rendering quality and speed, particularly for complex dynamic scenes captured from sparse views.
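The abstract does not spell out how visibility is computed. As a rough illustration only, the sketch below uses one common differentiable formulation: the visibility of a 3D point to an input camera is taken as the volume-rendering transmittance, exp(-∫σ), accumulated along the segment from that camera's center to the point, and per-view colors are then blended with those visibilities as weights. The density field, the `margin` parameter, the slab occluder, and the function names `visibility` and `blend_colors` are hypothetical assumptions, not the paper's implementation; a trainable version would express the same operations in an autodiff framework so gradients flow back into the density.

```python
import math
from typing import Callable, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def visibility(point: Vec3, cam_center: Vec3,
               density: Callable[[Vec3], float],
               n_samples: int = 64, margin: float = 0.05) -> float:
    """Hypothetical sketch: transmittance from cam_center to point.

    Integrates the density along the segment with the midpoint rule and
    stops `margin` world units short of the point, so the point's own
    surface density does not occlude it.
    """
    seg = [p - c for p, c in zip(point, cam_center)]
    length = math.sqrt(sum(s * s for s in seg))
    march = length - margin
    if march <= 0.0:
        return 1.0  # point is (almost) at the camera: treat as visible
    unit = [s / length for s in seg]
    delta = march / n_samples
    optical_depth = 0.0
    for k in range(n_samples):
        d = (k + 0.5) * delta  # distance of the k-th midpoint from the camera
        sample = tuple(c + d * u for c, u in zip(cam_center, unit))
        optical_depth += density(sample) * delta
    return math.exp(-optical_depth)  # smooth in the density values


def blend_colors(point: Vec3, cams: Sequence[Vec3],
                 colors: Sequence[Vec3],
                 density: Callable[[Vec3], float]) -> Vec3:
    """Visibility-weighted average of the colors each view sees at `point`."""
    weights = [visibility(point, c, density) for c in cams]
    total = sum(weights)
    if total == 0.0:  # fully occluded everywhere: fall back to a plain mean
        weights, total = [1.0] * len(cams), float(len(cams))
    return tuple(sum(w * col[ch] for w, col in zip(weights, colors)) / total
                 for ch in range(3))


def slab_density(p: Vec3) -> float:
    """Hypothetical occluder: a dense slab between x = 0.4 and x = 0.6."""
    return 50.0 if 0.4 <= p[0] <= 0.6 else 0.0


# Usage: camera A looks through the slab, camera B has a clear line of sight.
cams = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
point = (1.0, 0.0, 0.0)
vis = [visibility(point, c, slab_density) for c in cams]
rgb = blend_colors(point, cams, [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)], slab_density)
```

Here camera A's red observation receives a near-zero weight because the slab blocks it, so the blended color is essentially camera B's blue. Using transmittance rather than a hard depth test keeps the weights continuous, which is what allows a visibility term of this kind to be trained with image supervision alone.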