- The paper demonstrates that out-of-the-box LLMs achieve state-of-the-art data-to-text generation for severely under-resourced languages.

- The study reveals that generating content in English followed by translation consistently outperforms direct generation in the target language.

- The evaluation, using BLEU, ChrF++, TER, and human judgments, underscores the need to refine metrics for multilingual NLP performance.

High-quality Data-to-Text Generation for Severely Under-Resourced Languages

The paper "High-quality Data-to-Text Generation for Severely Under-Resourced Languages with Out-of-the-box LLMs" (2402.12267) focuses on leveraging pretrained LLMs to improve data-to-text generation for severely under-resourced languages. The languages targeted include Irish, Welsh, Breton, and Maltese. This work evaluates the zero-shot capabilities of LLMs in these contexts and tests whether their deployment in out-of-the-box settings meets performance expectations close to those achieved with well-resourced languages like English.

Methodology and Experimental Setup

A comprehensive approach was employed, involving several LLMs, like GPT-3.5, BLOOM, LLaMa2-chat, and Falcon-chat. Two main strategies were investigated: direct generation in the target language and generation in English followed by machine translation (MT) to the target language. The translation step involved engines such as Google Translate and others, to accommodate the needs of different language profiles and resources.

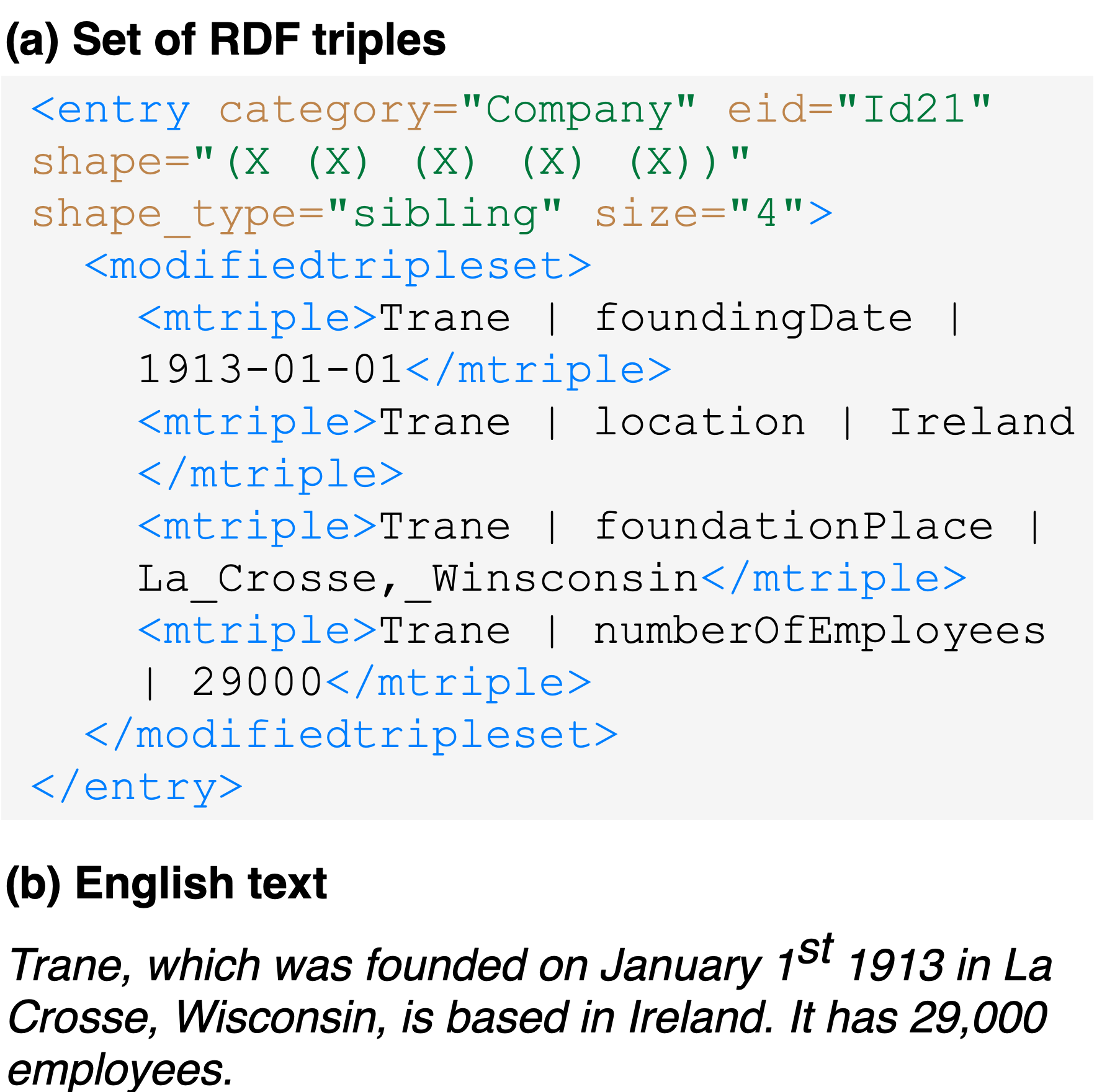

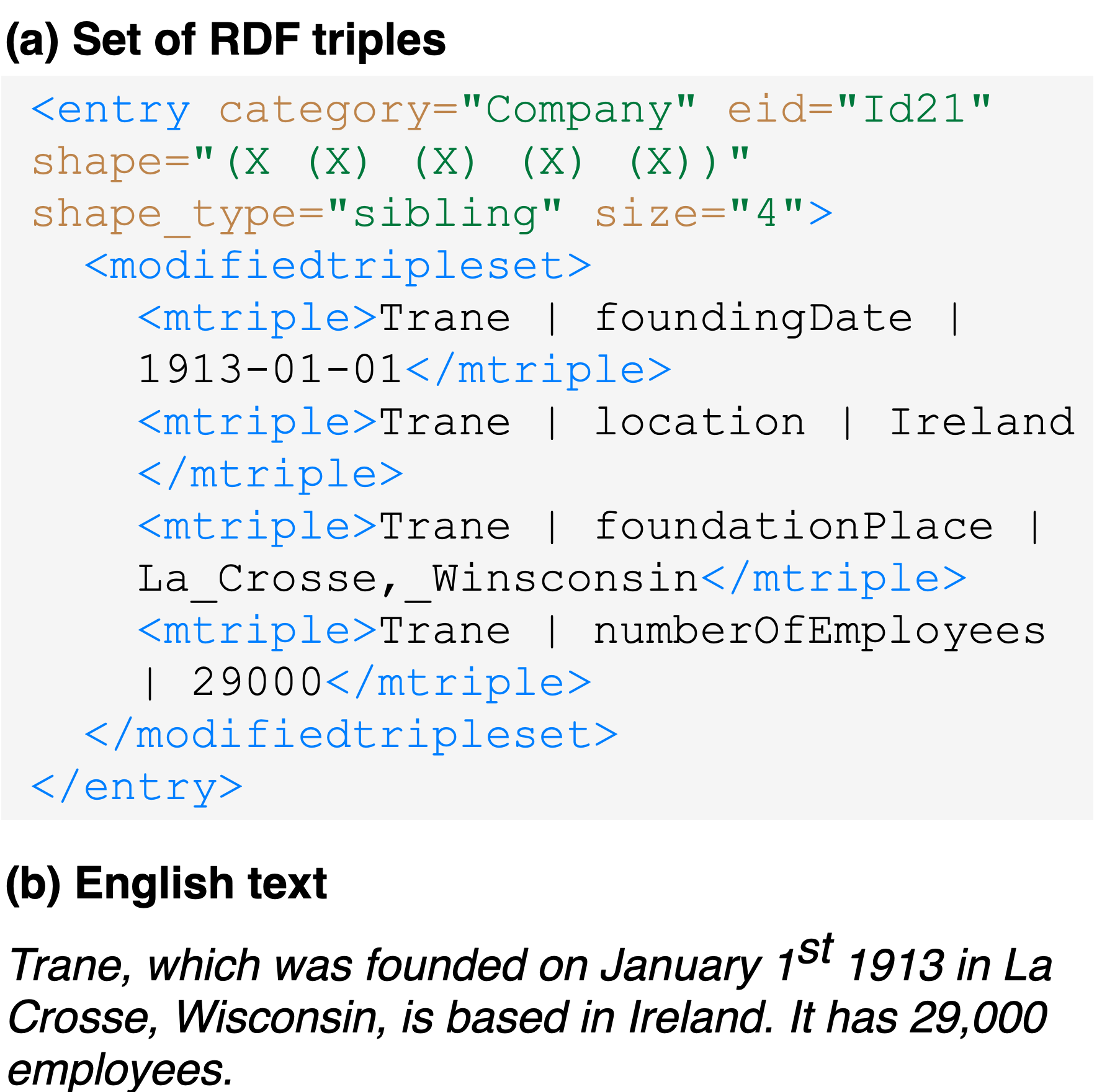

The task utilized the WebNLG 2023 dataset, which provides test and development sets manually translated by professional translators, and training data automatically translated from English. Evaluation metrics included conventional approaches like BLEU, ChrF++, and TER to support a rigorous performance comparison of language pairs and translation systems.

Results and Analysis

The experiments revealed that pretrained LLMs set new state-of-the-art benchmarks for data-to-text generation in under-resourced languages by significant margins. This was verified through human evaluations, which showed the generated systems achieving on-par performance with humans. Despite these strong results, BLEU scores were substantially lower for under-resourced languages compared to English, indicating potential shortcomings in metric compatibility with multilingual systems.

Figure 1: WebNLG input set of RDF triples and the corresponding output text in English.

The findings also highlighted interesting behavior in terms of translation strategy effectiveness. Generating content initially in English, followed by translation, consistently outperformed direct generation in the under-resourced language. This suggests that the linguistic richness in English models could be better leveraged through high-quality translation systems.

Implications and Future Work

This study underscores the capability of LLMs to bridge linguistic disparities, substantially impacting the field of multilingual NLP. It points towards LLMs as a potentially pivotal tool in reducing the resource gap between high-resource and under-resourced languages. The emphasis on using translations in improving output quality calls for further enhancement of MT systems to sustain this advantage across more diverse and complex language families.

Looking forward, future work could expand upon these findings by focusing on refining automatic evaluation metrics to better cater to multilingual scenarios. Additionally, there is considerable promise in exploring more nuanced prompt engineering techniques and refining LLMs' understanding of linguistic nuances in under-resourced contexts through supervised training on even small but culturally rich corpora.

Conclusion

This research paves the way for practical applications of LLMs in addressing text generation challenges in severely under-resourced languages, offering a compelling glance at their potential in expanding the scope of machine comprehension across the global linguistic landscape. The integration of advanced LLM and MT technologies stands as a potential game-changer in democratizing access to language generation capabilities for languages that have, until now, remained largely on the periphery of NLP advancements.