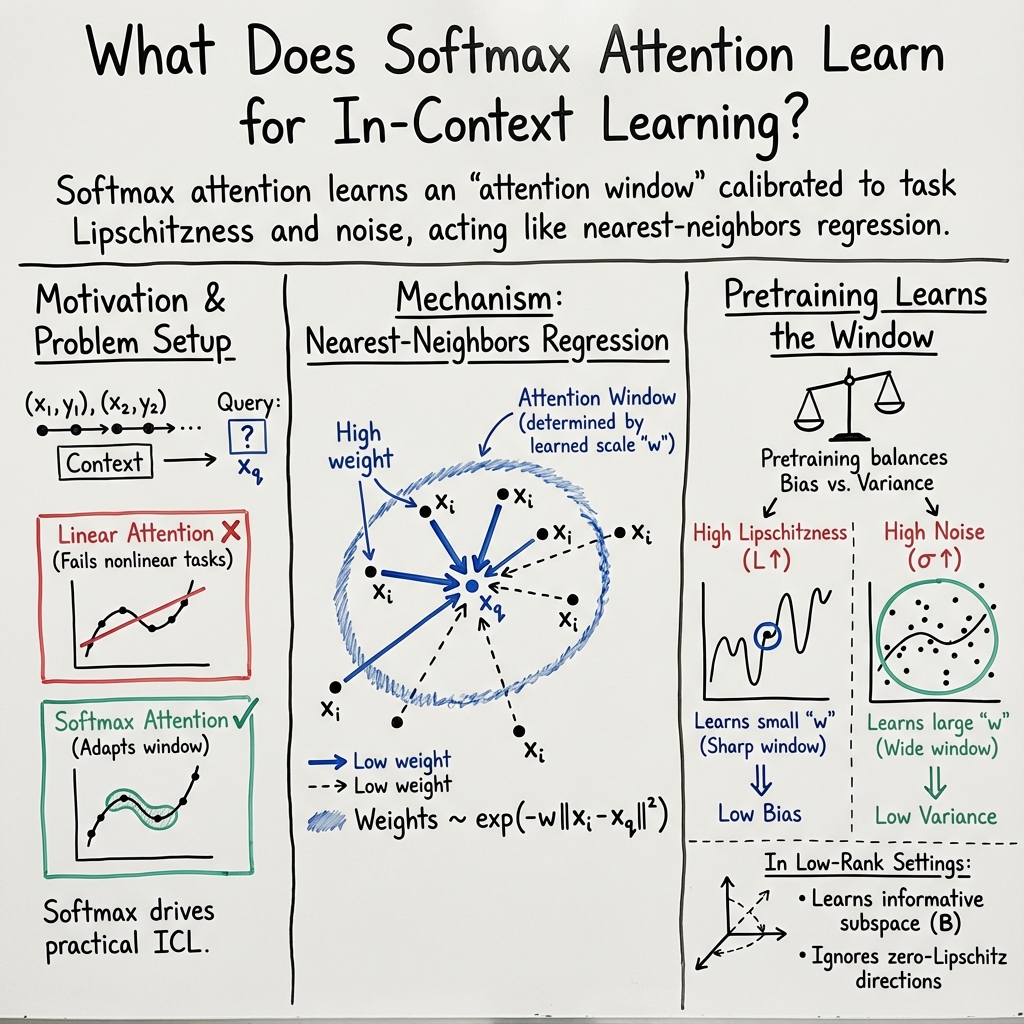

- The paper shows that softmax attention adjusts the width of its attention window to the Lipschitzness of the regression tasks it is pretrained on, so that it behaves like a nearest-neighbor predictor.

- It shows that the softmax activation is essential for this window adaptation. Linear attention cannot adapt its window and performs worse at in-context learning.

- It shows that a pretrained transformer generalizes best to downstream tasks whose Lipschitzness matches that of its pretraining tasks, a finding that may inform more efficient architecture design.

Adaptation of Transformer Softmax Attention to Task Variability in In-Context Learning

Overview of Findings

The study examines how softmax attention in transformers adapts its attention window during In-Context Learning (ICL) on regression tasks. Its central finding is that the attention unit tunes its window to the Lipschitzness and label-noise level of the tasks it was pretrained on. The window is the neighborhood of context points it weights most heavily when predicting the label of a query. In effect, the transformer implements a nearest-neighbors predictor whose scale matches the smoothness and noise of the task distribution.
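
The formalization below is a minimal sketch that makes the "window" concrete. It rests on simplifying assumptions of our own: a single softmax attention head whose key-query product reduces to a scalar w times the identity, and unit-norm covariates. The symbols w, n, x_i, y_i and x (context inputs, labels and query) are our notation, not necessarily the paper's:

```latex
\begin{aligned}
\hat{f}(x) &= \sum_{i=1}^{n} \frac{\exp(w\, x_i^{\top} x)}{\sum_{j=1}^{n} \exp(w\, x_j^{\top} x)}\, y_i, \\
\|x_i\| = \|x\| = 1 \;&\Longrightarrow\; x_i^{\top} x = 1 - \tfrac{1}{2}\,\|x_i - x\|^2, \\
\hat{f}(x) &= \sum_{i=1}^{n} \frac{\exp\!\big(-\tfrac{w}{2}\,\|x_i - x\|^2\big)}{\sum_{j=1}^{n} \exp\!\big(-\tfrac{w}{2}\,\|x_j - x\|^2\big)}\, y_i .
\end{aligned}
```

The constant factor e^{w} cancels between numerator and denominator. Under these assumptions the attention weights therefore form a Gaussian kernel over distance, with width on the order of 1/\sqrt{w}. A large w concentrates weight on the nearest context points (a narrow window), while a small w averages labels over a wide neighborhood.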

Key Technical Contributions

- Adaptive Attention Window: Empirical and theoretical analyses show that the softmax attention unit learns to widen or narrow its window according to the Lipschitzness of its pretraining tasks. The window widens as those tasks become smoother (lower Lipschitz constant) or noisier. At inference, this amounts to a nearest-neighbor-style predictor in which the window defines the neighborhood over which context labels are averaged.

- Necessity of Softmax Activation: Comparing softmax with linear attention, the research shows that only softmax can adapt its attention window. Linear attention lacks this flexibility and therefore performs suboptimally at ICL when tasks differ in Lipschitzness.

- Generalization to Downstream Tasks: The study shows that the transformer's ICL performance on new tasks depends on how closely their Lipschitzness matches that of the pretraining tasks. The model performs best when the two agree.
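
The toy sketch below illustrates why only softmax can move its window. It assumes unit-norm inputs and a key-query product of the form w times the identity, which is our simplification. Under softmax, changing w changes which context points receive most of the weight. Under linear attention, changing w multiplies every weight by the same factor, so the relative weighting over context points stays fixed. The helper names `softmax_weights`, `linear_weights` and `unit`, and the context angles, are hypothetical:

```python
import math

def softmax_weights(query, keys, w):
    """Softmax attention weights exp(w*<k_i, q>) / sum_j exp(w*<k_j, q>)."""
    scores = [w * sum(a * b for a, b in zip(k, query)) for k in keys]
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def linear_weights(query, keys, w):
    """Linear attention weights w*<k_i, q>/n (no normalizing nonlinearity)."""
    n = len(keys)
    return [w * sum(a * b for a, b in zip(k, query)) / n for k in keys]

def unit(theta):
    """Point on the unit circle at angle theta."""
    return (math.cos(theta), math.sin(theta))

# Hypothetical context: keys at increasing angular distance from the query.
query = unit(0.0)
keys = [unit(t) for t in (0.1, 0.5, 1.0, 2.0)]

for w in (1.0, 10.0):
    s = softmax_weights(query, keys, w)
    l = linear_weights(query, keys, w)
    print(f"w={w:>4}: softmax={[round(v, 3) for v in s]}  linear={[round(v, 3) for v in l]}")
```

Raising w from 1 to 10 shifts the softmax weights toward the closest keys. The linear weights at w = 10 are simply ten times those at w = 1, so their shape is unchanged.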

Experimental Validation

A series of experiments supports the claim that the transformer adjusts its attention window. The experiments show how this adjustment contributes to ICL performance across tasks of varying smoothness (Lipschitzness). The adaptability depends on the softmax activation: softmax attention clearly outperforms linear attention on function classes with differing Lipschitz constants.
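
The direction of the window adaptation can be reproduced in a small toy simulation. This is our own illustrative sketch, not the paper's experimental setup, and all of its choices are hypothetical:

- The task family is f(θ) = sin(kθ + φ) on the unit circle, whose Lipschitz constant with respect to the angle θ is k.
- Labels carry Gaussian noise with standard deviation `sigma`.
- The names `icl_mse` and `best_w` and the settings `n_ctx`, `trials` and `W_GRID` are ours.
- A grid search over the softmax scale w (the inverse window width) stands in for what pretraining would select.

```python
import math
import random

def icl_mse(k, sigma, w_grid, n_ctx=50, trials=1000, seed=0):
    """Mean squared in-context error of a softmax-attention (kernel-weighted
    nearest-neighbor) predictor, for each scale w in w_grid.

    Hypothetical tasks: f(theta) = sin(k*theta + phi) with random phase phi,
    so the Lipschitz constant in theta is k; labels get N(0, sigma^2) noise.
    """
    rng = random.Random(seed)
    errs = [0.0] * len(w_grid)
    for _ in range(trials):
        phi = rng.uniform(0.0, 2 * math.pi)
        thetas = [rng.uniform(0.0, 2 * math.pi) for _ in range(n_ctx)]
        ys = [math.sin(k * t + phi) + rng.gauss(0.0, sigma) for t in thetas]
        q = rng.uniform(0.0, 2 * math.pi)
        target = math.sin(k * q + phi)  # noise-free value at the query
        # For unit vectors, <x_i, x> = cos(theta_i - theta_query).
        cos_sims = [math.cos(t - q) for t in thetas]
        c_max = max(cos_sims)
        for idx, w in enumerate(w_grid):
            # Softmax over w * cos_sim, shifted by the max score for stability.
            weights = [math.exp(w * (c - c_max)) for c in cos_sims]
            pred = sum(a * y for a, y in zip(weights, ys)) / sum(weights)
            errs[idx] += (pred - target) ** 2
    return [e / trials for e in errs]

def best_w(k, sigma, w_grid):
    """Scale w with the lowest simulated error (larger w = narrower window)."""
    mses = icl_mse(k, sigma, w_grid)
    return w_grid[mses.index(min(mses))]

W_GRID = [2.0 ** p for p in range(9)]  # 1, 2, 4, ..., 256

smooth = best_w(1, 0.5, W_GRID)      # low Lipschitz constant
rough = best_w(4, 0.5, W_GRID)       # high Lipschitz constant
low_noise = best_w(2, 0.1, W_GRID)
high_noise = best_w(2, 1.0, W_GRID)

print("best w, smooth tasks (k=1):", smooth)
print("best w, rough tasks  (k=4):", rough)
print("best w, low noise  (k=2, sigma=0.1):", low_noise)
print("best w, high noise (k=2, sigma=1.0):", high_noise)
```

With these settings the selected w is larger (a narrower window) for the rougher k = 4 tasks than for k = 1, and smaller (a wider window) under heavier label noise. That is the direction of the effect described above. The exact values depend on the arbitrary choices of `n_ctx`, `trials` and the grid.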

Implications and Future Directions

This research clarifies a key reason transformers perform well at ICL: the adaptability of the softmax attention window. The findings could guide the design of more efficient transformer architectures whose attention is better matched to the characteristics of the target tasks. Given the central role of softmax, future work might explore whether modifying this component could further improve ICL performance. It might also extend this adaptability to task characteristics beyond Lipschitzness.

Conclusion

In conclusion, the research gives a detailed account of how the adaptability of softmax attention lets transformers perform in-context learning across a diverse range of regression tasks. The ability to resize the attention window to the smoothness and noise level of each task underlies the robustness and versatility of transformers in these ICL settings.