Graph Mamba: Towards Learning on Graphs with State Space Models

(arXiv:2402.08678)

Abstract

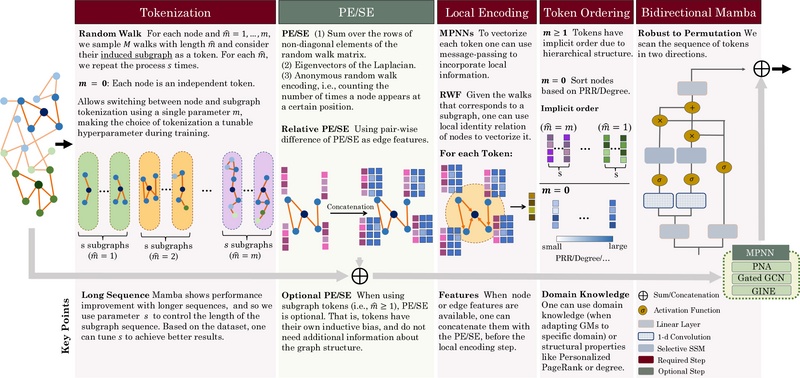

Graph Neural Networks (GNNs) have shown promising potential in graph representation learning. The majority of GNNs define a local message-passing mechanism, propagating information over the graph by stacking multiple layers. These methods, however, are known to suffer from two major limitations: over-squashing and poor capturing of long-range dependencies. Recently, Graph Transformers (GTs) emerged as a powerful alternative to Message-Passing Neural Networks (MPNNs). GTs, however, have quadratic computational cost, lack inductive biases on graph structures, and rely on complex Positional/Structural Encodings (SE/PE). In this paper, we show that while Transformers, complex message-passing, and SE/PE are sufficient for good performance in practice, neither is necessary. Motivated by the recent success of State Space Models (SSMs), such as Mamba, we present Graph Mamba Networks (GMNs), a general framework for a new class of GNNs based on selective SSMs. We discuss and categorize the new challenges when adapting SSMs to graph-structured data, and present four required and one optional steps to design GMNs, where we choose (1) Neighborhood Tokenization, (2) Token Ordering, (3) Architecture of Bidirectional Selective SSM Encoder, (4) Local Encoding, and dispensable (5) PE and SE. We further provide theoretical justification for the power of GMNs. Experiments demonstrate that despite much less computational cost, GMNs attain an outstanding performance in long-range, small-scale, large-scale, and heterophilic benchmark datasets.

Overview

- Graph Mamba Networks (GMNs) aim to integrate the efficiency of State Space Models (SSMs) with the structural adaptability needed for graph-based data, addressing limitations of traditional Graph Neural Networks (GNNs).

- GMNs introduce Neighborhood Tokenization, Token Ordering, a Bidirectional Selective SSM Encoder, and Local Encoding to process graph-structured data efficiently without the need for complex encodings.

- Empirical results show GMNs outperforming current leading models on a range of graph benchmarks, with strong results on long-range, small-scale, large-scale, and heterophilic datasets without additional MPNN layers.

- GMNs are proven to be universal approximators of any permutation equivariant function on graphs, offering a new direction for graph representation learning with room for future work on scalability and domain-specific applications.

Exploring Graph Mamba Networks: A Leap in Learning on Graphs with State Space Models

Introduction

Graph Neural Networks (GNNs) have become a key component in machine learning for handling graph-structured data, with applications ranging from social network analysis to molecular biology. Message-Passing Neural Networks (MPNNs) and, more recently, Graph Transformers (GTs) have led graph representation learning. Both have known limitations, however: MPNNs suffer from over-squashing and capture long-range dependencies poorly, while GTs incur quadratic computational cost and rely on complex positional or structural encodings. Graph Mamba Networks (GMNs) respond to these limitations by combining the efficiency and flexibility of State Space Models (SSMs) with the architectural needs of graph-structured data.

GMN Design Challenges and Solutions

Integrating SSMs into graph learning introduces a distinct set of challenges. Chief among these is adapting tokenization and ordering mechanisms to graphs, which have no inherent node order, whereas SSMs process sequences. GMNs address this by tailoring selective SSMs to graph data through:

- Neighborhood Tokenization to manage local graph structures efficiently.

- Token Ordering that ensures a meaningful sequence is established for SSM processing.

- A Bidirectional Selective SSM Encoder that leverages the power of SSMs while remaining robust to the order in which tokens are presented, which matters because graphs have no canonical node order.

- Local Encoding for nuanced representation of node and subgraph features, bypassing the need for computationally heavy Positional or Structural Encodings (PE/SE).
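The first two steps above can be illustrated with a minimal sketch. It is not the paper's tokenizer: it assumes a deterministic breadth-first search in place of whatever subgraph sampling the authors use, and the function names (`k_hop_nodes`, `neighborhood_tokens`, `order_tokens`) are hypothetical. The ordering convention, largest neighborhood first so that the node's own token is read last, is likewise an assumption made for illustration.

```python
from collections import deque


def k_hop_nodes(adj, source, max_hops):
    """Return {node: hop distance} for all nodes within max_hops of source (BFS)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        if dist[u] == max_hops:
            continue  # do not expand beyond the requested radius
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def neighborhood_tokens(adj, node, max_hops):
    """One token per radius m = 0..max_hops: the sorted nodes within m hops."""
    dist = k_hop_nodes(adj, node, max_hops)
    return [
        sorted(v for v, d in dist.items() if d <= m)
        for m in range(max_hops + 1)
    ]


def order_tokens(tokens):
    """Assumed ordering: widest neighborhood first, the node itself last."""
    return list(reversed(tokens))


# Path graph 0 - 1 - 2 - 3, tokenized around node 1.
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
tokens = neighborhood_tokens(adj, 1, max_hops=2)
sequence = order_tokens(tokens)
```

Here each token is a set of node indices; in a real model each token would be mapped to a feature vector (for instance by a local encoder) before being passed to the sequence encoder.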

Empirical results indicate that GMNs outperform existing state-of-the-art models across diverse graph benchmarks, including long-range, small-scale, large-scale, and heterophilic datasets. Notably, GMNs achieve this without requiring complex encodings or additional MPNN layers, which underlines the potential of SSMs to advance graph representation learning.

Theoretical Insights

The theoretical grounding of GMNs rests on proofs of universality and expressive power. GMNs are shown to be universal approximators of any permutation equivariant function on graphs, given sufficient model capacity and suitable positional encodings. Furthermore, even without relying on PE/SE, the expressive power of GMNs is not bounded by any Weisfeiler-Lehman (WL) test, a common yardstick for a GNN's ability to distinguish graph structures.
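For reference, the property involved can be written out. The notation below is an assumption, not taken from the paper: $X \in \mathbb{R}^{n \times d}$ denotes node features, $A \in \{0,1\}^{n \times n}$ the adjacency matrix, and $P$ any $n \times n$ permutation matrix. A function $f$ on graphs is permutation equivariant when relabeling the nodes relabels its output in the same way, and the universality claim, paraphrased loosely, says that such an $f$ can be approximated to any accuracy $\varepsilon > 0$ by some GMN $g$ in a suitable norm:

```latex
% Permutation equivariance (standard definition; notation assumed here)
f\left(P X,\; P A P^{\top}\right) = P\, f(X, A)
\quad \text{for every permutation matrix } P

% Universal approximation, stated informally
\forall\, \varepsilon > 0 \;\; \exists\, g \in \mathrm{GMN} : \quad \lVert f - g \rVert < \varepsilon
```

The precise function class, domain, and norm are specified in the paper's theorem and are not reproduced here.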

Practical Implications and Future Directions

The development of GMNs signals a pivotal evolution in graph learning, emphasizing efficiency, scalability, and the intrinsic ability to capture long-range dependencies without the overhead of intensive computations typically associated with GTs. This shift paves the way for more effective and scalable applications in fields where graph data is paramount.
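The cost argument can be made concrete with a toy example. The sketch below is not Mamba and not the authors' encoder: it assumes a fixed-coefficient linear recurrence (no input-dependent selection), and the names `linear_scan` and `bidirectional_scan` are hypothetical. It shows only that a single pass of length T lets every output depend on all earlier tokens, and that adding a reversed pass also covers later tokens, without forming the T x T interaction matrix that attention requires.

```python
import numpy as np


def linear_scan(x, decay):
    """Run h_t = decay * h_{t-1} + x_t over the sequence in one O(T) pass."""
    h = np.zeros_like(x[0], dtype=float)
    out = np.empty_like(x, dtype=float)
    for t in range(len(x)):
        h = decay * h + x[t]
        out[t] = h
    return out


def bidirectional_scan(x, decay=0.5):
    """Sum of a forward scan and a backward scan re-aligned to input order."""
    forward = linear_scan(x, decay)
    backward = linear_scan(x[::-1], decay)[::-1]
    return forward + backward


# Three tokens with one feature each; only the first token is non-zero.
x = np.array([[1.0], [0.0], [0.0]])
y = bidirectional_scan(x, decay=0.5)
```

With this construction, reversing the input sequence simply reverses the output, so the encoder does not privilege one reading direction. Robustness to arbitrary reorderings is a stronger property and is not demonstrated by this sketch.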

Looking ahead, there lies considerable potential in exploring further optimizations in the tokenization process and expanding the utility of GMNs across a broader spectrum of graph learning tasks. The adaptability of GMNs to varied graph structures also opens up avenues for domain-specific customizations, potentially enhancing models' performance in specialized applications.

In essence, Graph Mamba Networks offer a scalable, efficient, and powerful framework for advancing the state of the art in graph representation learning. As this area continues to evolve, GMNs provide a foundation for future work on both the theoretical understanding and the real-world application of GNNs.