LegalLens: Leveraging LLMs for Legal Violation Identification in Unstructured Text (2402.04335v1)

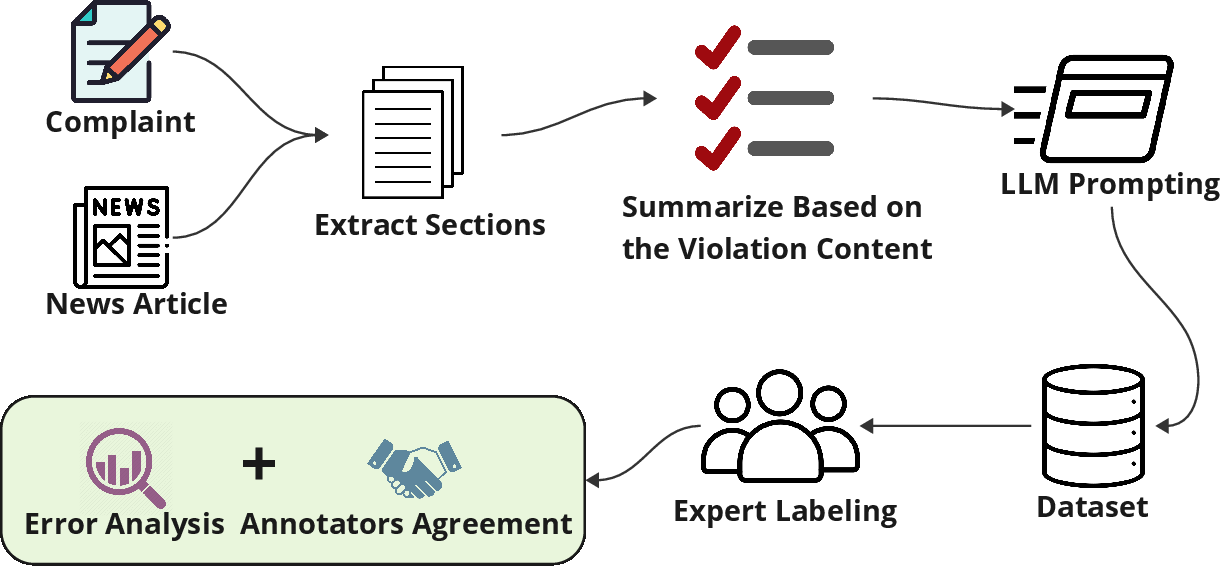

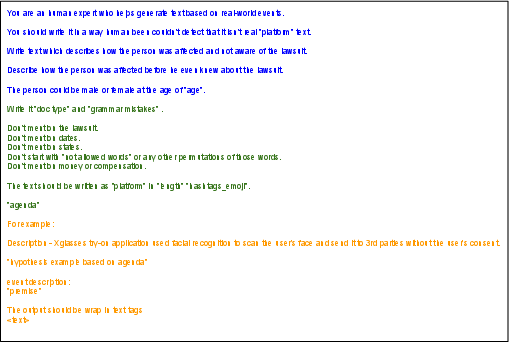

Abstract: In this study, we focus on two main tasks, the first for detecting legal violations within unstructured textual data, and the second for associating these violations with potentially affected individuals. We constructed two datasets using LLMs which were subsequently validated by domain expert annotators. Both tasks were designed specifically for the context of class-action cases. The experimental design incorporated fine-tuning models from the BERT family and open-source LLMs, and conducting few-shot experiments using closed-source LLMs. Our results, with an F1-score of 62.69\% (violation identification) and 81.02\% (associating victims), show that our datasets and setups can be used for both tasks. Finally, we publicly release the datasets and the code used for the experiments in order to advance further research in the area of legal NLP.

- Falcon-40B: an open large language model with state-of-the-art performance.

- Nlp-based automated compliance checking of data processing agreements against gdpr. IEEE Transactions on Software Engineering.

- Named entity recognition, linking and generation for greek legislation. In JURIX, pages 1–10.

- Longformer: The long-document transformer. CoRR, abs/2004.05150.

- Language models are few-shot learners. Advances in neural information processing systems, 33:1877–1901.

- Time-aware prompting for text generation. arXiv preprint arXiv:2211.02162.

- LEGAL-BERT: the muppets straight out of law school. CoRR, abs/2010.02559.

- LeXFiles and LegalLAMA: Facilitating English Multinational Legal Language Model Development. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, Canada. Association for Computational Linguistics.

- Xiang Dai. 2018. Recognizing complex entity mentions: A review and future directions. In Proceedings of ACL 2018, Student Research Workshop, pages 37–44.

- Qlora: Efficient finetuning of quantized llms.

- BERT: pre-training of deep bidirectional transformers for language understanding. CoRR, abs/1810.04805.

- Is gpt-3 a good data annotator? arXiv preprint arXiv:2212.10450.

- Named entity recognition and resolution in legal text. Springer.

- Response generation with context-aware prompt learning. arXiv preprint arXiv:2111.02643.

- Evaluating large language models in generating synthetic hci research data: a case study. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, pages 1–19.

- Parameter-efficient transfer learning for nlp.

- Lora: Low-rank adaptation of large language models.

- Hover: A dataset for many-hop fact extraction and claim verification. In Findings of the Association for Computational Linguistics: EMNLP 2020, pages 3441–3460.

- Named entity recognition in indian court judgments. arXiv preprint arXiv:2211.03442.

- A watermark for large language models. arXiv preprint arXiv:2301.10226.

- Yuta Koreeda and Christopher D Manning. 2021. Contractnli: A dataset for document-level natural language inference for contracts. arXiv preprint arXiv:2110.01799.

- Prototyping the use of large language models (llms) for adult learning content creation at scale. arXiv preprint arXiv:2306.01815.

- Fine-grained named entity recognition in legal documents. In International Conference on Semantic Systems, pages 272–287. Springer.

- A dataset of german legal documents for named entity recognition. arXiv preprint arXiv:2003.13016.

- Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Computing Surveys, 55(9):1–35.

- Roberta: A robustly optimized BERT pretraining approach. CoRR, abs/1907.11692.

- Lener-br: a dataset for named entity recognition in brazilian legal text. In Computational Processing of the Portuguese Language: 13th International Conference, PROPOR 2018, Canela, Brazil, September 24–26, 2018, Proceedings 13, pages 313–323. Springer.

- Processing long legal documents with pre-trained transformers: Modding legalbert and longformer.

- Detectgpt: Zero-shot machine-generated text detection using probability curvature. arXiv preprint arXiv:2301.11305.

- Is a prompt and a few samples all you need? using gpt-4 for data augmentation in low-resource classification tasks. arXiv preprint arXiv:2304.13861.

- Multilegalpile: A 689gb multilingual legal corpus. arXiv preprint arXiv:2306.02069.

- Anonymity at risk? assessing re-identification capabilities of large language models.

- OpenAI. 2023. Gpt-4 technical report.

- Training language models to follow instructions with human feedback. Advances in Neural Information Processing Systems, 35:27730–27744.

- Named entity recognition in the romanian legal domain. In Proceedings of the Natural Legal Language Processing Workshop 2021, pages 9–18.

- Training question answering models from synthetic data. arXiv preprint arXiv:2002.09599.

- Language models are unsupervised multitask learners. OpenAI blog, 1(8):9.

- Clasp: Few-shot cross-lingual data augmentation for semantic parsing. arXiv preprint arXiv:2210.07074.

- Linguist: Language model instruction tuning to generate annotated utterances for intent classification and slot tagging. arXiv preprint arXiv:2209.09900.

- Distilbert, a distilled version of BERT: smaller, faster, cheaper and lighter. CoRR, abs/1910.01108.

- Can gpt-4 support analysis of textual data in tasks requiring highly specialized domain expertise? arXiv preprint arXiv:2306.13906.

- Explaining legal concepts with augmented large language models (gpt-4). arXiv preprint arXiv:2306.09525.

- Classactionprediction: A challenging benchmark for legal judgment prediction of class action cases in the us. arXiv preprint arXiv:2211.00582.

- Using nlp and machine learning to detect data privacy violations. In IEEE INFOCOM 2020 - IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), pages 972–977.

- Named entity recognition in the legal domain using a pointer generator network. arXiv preprint arXiv:2012.09936.

- How to fine-tune bert for text classification?

- Fever: a large-scale dataset for fact extraction and verification. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), pages 809–819.

- Llama 2: Open foundation and fine-tuned chat models.

- Dimitrios Tsarapatsanis and Nikolaos Aletras. 2021. On the ethical limits of natural language processing on legal text. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, pages 3590–3599.

- Attention is all you need. CoRR, abs/1706.03762.

- Generating faithful synthetic data with large language models: A case study in computational social science. arXiv preprint arXiv:2305.15041.

- Unleash gpt-2 power for event detection. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 6271–6282.

- A simplified cohen’s kappa for use in binary classification data annotation tasks. IEEE Access, 7:164386–164397.

- Finetuned language models are zero-shot learners. arXiv preprint arXiv:2109.01652.

- Intelligent classification and automatic annotation of violations based on neural network language model. In 2020 International Joint Conference on Neural Networks (IJCNN), pages 1–7.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.