- The paper introduces RAG-Fusion, which integrates reciprocal rank fusion with additional query generation to improve response quality.

- The model, evaluated on Infineon’s chatbot, demonstrates enhanced accuracy for technical, sales, and customer inquiries despite higher latency.

- The study discusses challenges in response time and query relevance, and proposes future work on multi-language support and improved document representation.

Overview of "RAG-Fusion: a New Take on Retrieval-Augmented Generation"

"RAG-Fusion: a New Take on Retrieval-Augmented Generation" introduces a novel approach to improving the efficacy of retrieval-augmented generation (RAG) systems. By integrating reciprocal rank fusion (RRF) into the RAG framework, the paper proposes a new model called RAG-Fusion. This model aims to enhance the accuracy, relevance, and comprehensiveness of responses by generating multiple contextually diverse queries and leveraging RRF for document reranking. The paper presents a comprehensive evaluation of RAG-Fusion in the context of Infineon's chatbot applications.

RAG-Fusion Methodology

Traditional RAG Limitations

Traditional RAG models combine an LLM with a document retrieval system. Relevant documents are typically retrieved by their vector distance to the query, then supplied to the LLM as context for generating the response. While effective, this approach depends entirely on how well the single original query captures the user's intent: documents that are relevant from a different perspective may never be retrieved.
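The single-query retrieval step can be sketched as follows. This is a minimal illustration, assuming documents are already embedded as vectors and that cosine similarity serves as the distance measure (the paper does not prescribe one); `cosine_similarity` and `retrieve_top_k` are hypothetical names.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_top_k(
    query_vec: Sequence[float],
    doc_vecs: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the k document ids most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 2-D embeddings standing in for real document vectors.
docs = {"datasheet": [1.0, 0.0], "app_note": [0.7, 0.7], "faq": [0.0, 1.0]}
print([doc_id for doc_id, _ in retrieve_top_k([1.0, 0.1], docs, k=2)])
```

Whatever this one query vector misses is simply never seen by the LLM, which is the gap RAG-Fusion targets.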

RAG-Fusion Enhancements

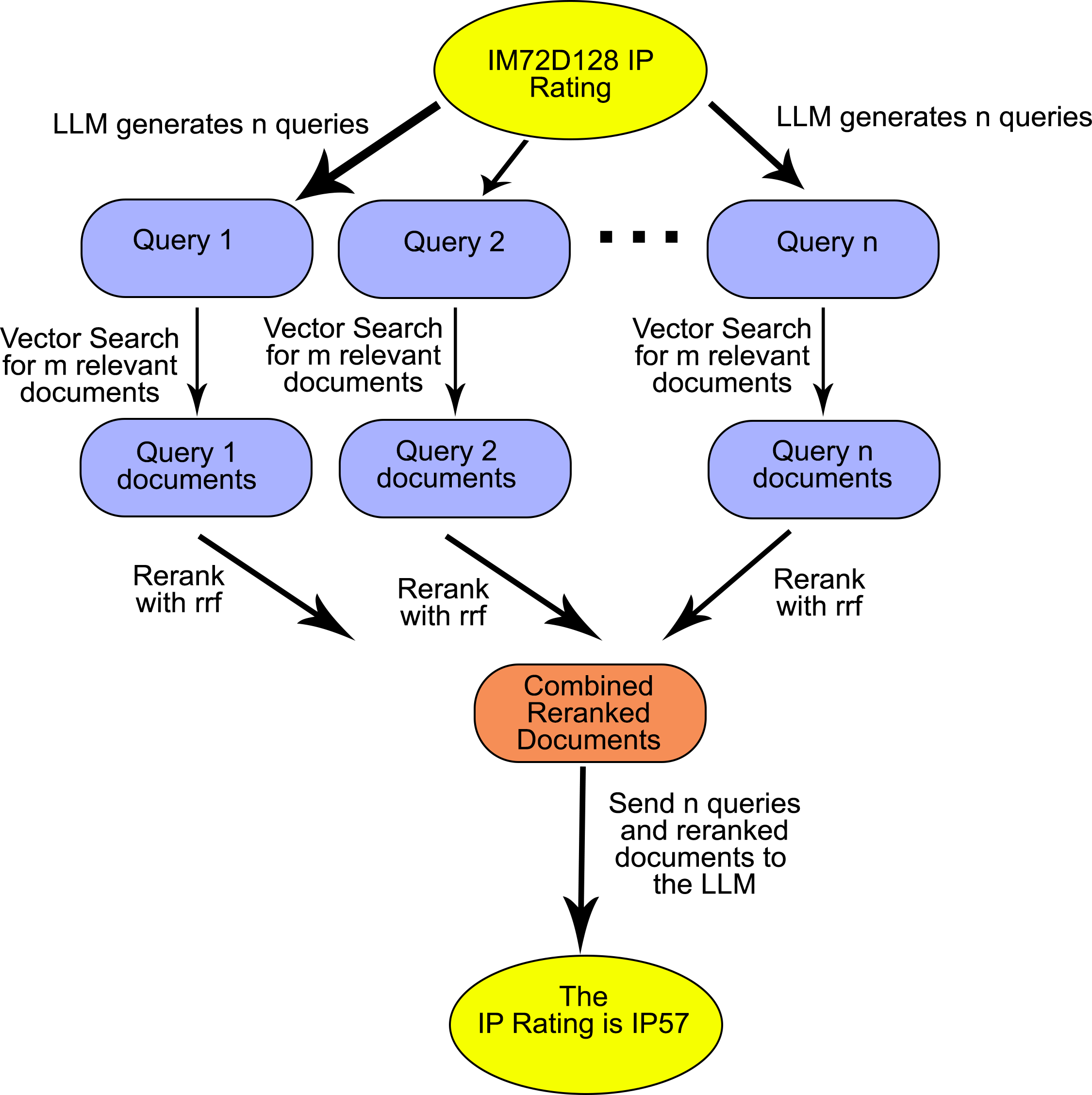

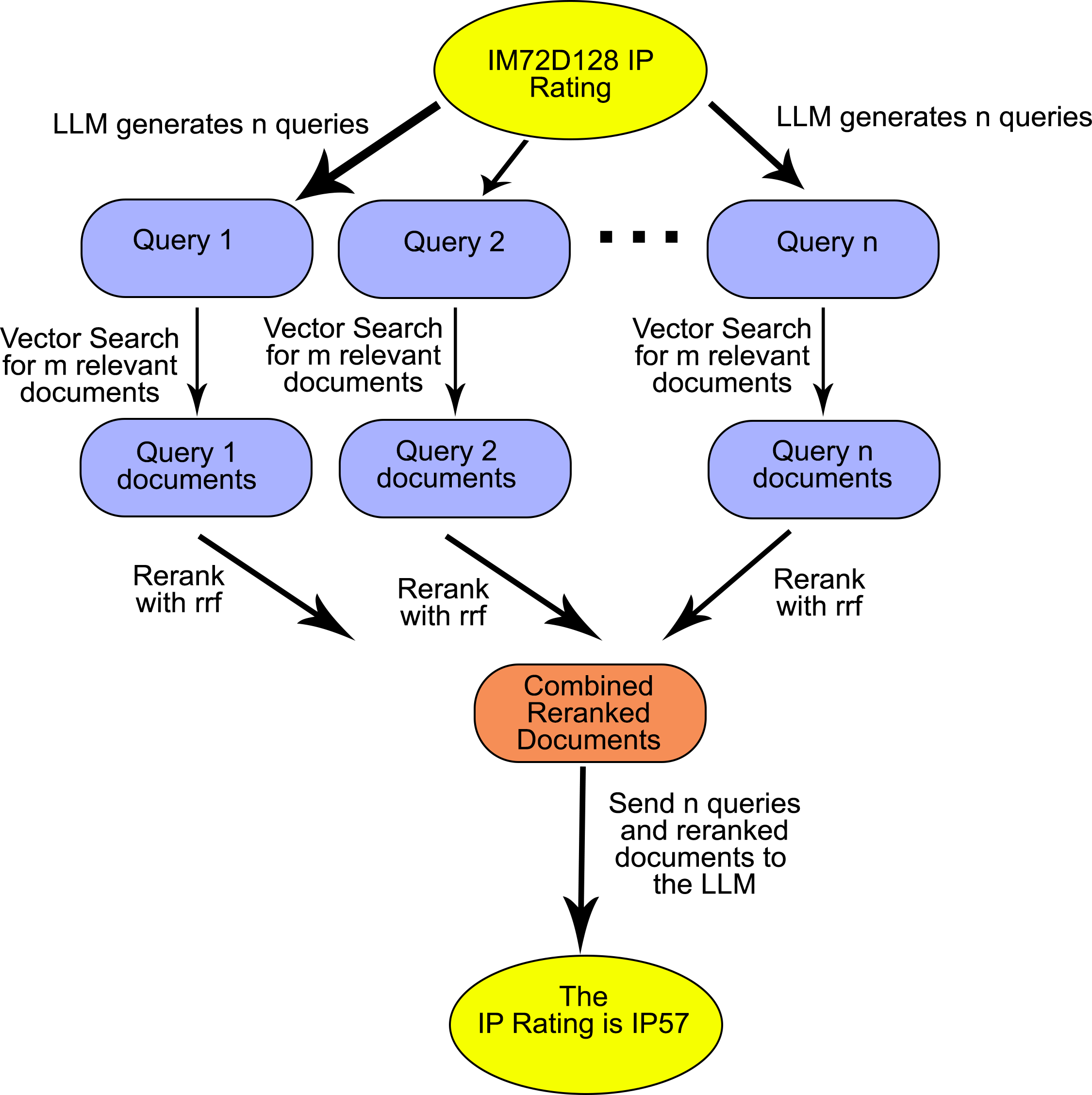

RAG-Fusion addresses this limitation by generating additional queries from the original user input using an LLM. These queries aim to capture various aspects and perspectives of the user’s question. For instance, given a query about a microphone, RAG-Fusion might generate related queries about its functionalities, benefits, and applications.

Figure 1: Diagram illustrating the high-level process of RAG-Fusion starting with the original query "IM72D128 IP Rating".
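The query-generation step can be sketched as below. This is a hypothetical illustration: `llm` is any callable that maps a prompt string to a completion string, and the prompt wording is an assumption rather than the paper's exact prompt. The parser strips common list markers, drops duplicates, and keeps the original query first so it still takes part in retrieval.

```python
import re
from typing import Callable, List

# Hypothetical prompt; not the paper's exact wording.
QUERY_PROMPT = (
    "Generate {n} search queries related to the following question, "
    "each covering a different aspect of it.\n"
    "Question: {question}\n"
    "Return one query per line."
)


def generate_queries(question: str, llm: Callable[[str], str], n: int = 4) -> List[str]:
    """Ask an LLM for up to n related queries; return them after the original."""
    raw = llm(QUERY_PROMPT.format(n=n, question=question))
    queries: List[str] = [question]
    for line in raw.splitlines():
        # Strip list markers such as "1.", "2)", "-" or "*" that LLMs often emit.
        cleaned = re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
        if cleaned and cleaned not in queries:
            queries.append(cleaned)
        if len(queries) == n + 1:
            break
    return queries


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call, for demonstration only.
    return "1. IM72D128 microphone features\n2) IM72D128 benefits\n- IM72D128 applications"


print(generate_queries("IM72D128 IP Rating", fake_llm))
```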

RRF then reranks the documents retrieved for all of these queries. Each document receives a fused score, score(d) = Σ_q 1 / (k + rank_q(d)), where rank_q(d) is the document's position in the results for query q and k is a smoothing constant (60 in the original RRF formulation). Documents that rank consistently well across the generated queries therefore rise to the top. The reranked documents are then passed to the LLM together with the queries to generate a final, comprehensive response.
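The fusion formula translates directly into code. The sketch below assumes each query's retrieval result is a list of document ids ordered best first, with ranks starting at 1, and uses k = 60 as a default; the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def reciprocal_rank_fusion(
    ranked_lists: Sequence[Sequence[str]], k: int = 60
) -> List[Tuple[str, float]]:
    """Fuse several ranked lists of document ids with reciprocal rank fusion.

    Each document scores sum(1 / (k + rank)) over every list it appears in,
    with ranks starting at 1. k = 60 is an assumed default. Highest score first.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results for the original query and two generated queries.
fused = reciprocal_rank_fusion([
    ["ds_ip", "ds_mech", "app_note"],
    ["app_note", "ds_ip"],
    ["ds_ip", "faq"],
])
print([doc_id for doc_id, _ in fused[:2]])
```

Because only ranks enter the score, RRF needs no calibration between the similarity scores of different queries, and a document that appears near the top of several lists outranks one that tops a single list.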

Infineon Implementation and Use Cases

Chatbot Application

The paper evaluates the RAG-Fusion model within Infineon's chatbot, aimed at providing rapid access to technical and product information. Three primary use cases are explored: aiding engineers in finding technical product details, supporting account managers with sales strategies, and assisting customers with product inquiries.

Evaluation and Discussion

- Engineer Support: The RAG-Fusion model demonstrated superior performance in providing detailed and comprehensive answers about technical specifications and troubleshooting guidance. However, it struggled when the document database lacked specific procedural content.

- Sales and Customer Support: RAG-Fusion excelled in generating informative responses for sales strategies, synthesizing technical data with marketing insights to support account management. For customers, the chatbot effectively conveyed product suitability, enhancing customer engagement.

Implementation Challenges

Latency and Response Time

RAG-Fusion involves additional computational steps compared to traditional RAG. Most notably, it makes two LLM calls, one to generate the additional queries and one to generate the response from the reranked documents, where RAG makes a single call. It also runs a retrieval for every query. In the conducted tests, RAG-Fusion took on average 1.77 times as long as RAG to respond.
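Placing the two pipelines side by side makes the extra work visible. This is a hypothetical sketch: `llm` and `retrieve` stand in for whatever model and vector store a deployment uses, and the prompts are illustrative. RAG-Fusion issues two LLM calls and n + 1 retrievals where baseline RAG issues one of each; the measured 1.77x factor also depends on model, hardware, and whether retrievals run in parallel, so it cannot be derived from call counts alone.

```python
from collections import defaultdict
from typing import Callable, Dict, List


def rag_answer(
    question: str, llm: Callable[[str], str], retrieve: Callable[[str], List[str]], top_n: int = 5
) -> str:
    """Baseline RAG: one retrieval and one LLM call."""
    context = "\n".join(retrieve(question)[:top_n])
    return llm(f"Question: {question}\nContext:\n{context}\nAnswer:")


def rag_fusion_answer(
    question: str,
    llm: Callable[[str], str],
    retrieve: Callable[[str], List[str]],
    n_queries: int = 4,
    k: int = 60,  # assumed RRF constant
    top_n: int = 5,
) -> str:
    """RAG-Fusion sketch: two LLM calls and n_queries + 1 retrievals."""
    # LLM call 1: generate related queries, one per line.
    generated = llm(f"Generate {n_queries} search queries related to: {question}")
    extra = [q.strip() for q in generated.splitlines() if q.strip()][:n_queries]
    queries = [question] + extra

    # One retrieval per query, fused with reciprocal rank fusion.
    scores: Dict[str, float] = defaultdict(float)
    for query in queries:
        for rank, doc in enumerate(retrieve(query), start=1):
            scores[doc] += 1.0 / (k + rank)
    top_docs = sorted(scores, key=scores.get, reverse=True)[:top_n]

    # LLM call 2: answer from the reranked documents and the queries.
    context = "\n".join(top_docs)
    prompt = (
        f"Question: {question}\nRelated queries: {'; '.join(extra)}\n"
        f"Context:\n{context}\nAnswer:"
    )
    return llm(prompt)
```

Since the per-query retrievals are independent, running them concurrently could hide part of the added latency, but the second LLM call cannot start until the first has returned.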

Query Relevance and Prompt Engineering

Because the model depends on multiple generated queries, its results can become irrelevant when those queries drift from the original context. Mitigating this requires careful prompt engineering so that the user's original query steers the query-generation step accurately.

Implications and Future Work

Application Across Languages

Future work could extend RAG-Fusion to support multilingual input and output, particularly in the languages of significant markets such as Japanese and Mandarin Chinese. This would address the loss of context that occurs when queries are translated.

Enhanced Document Representation

Improving document representation, particularly for the complex PDFs in which datasheets are commonly published, would increase RAG-Fusion's accuracy by enabling more precise, context-aware retrieval.

Evaluation Automation

The paper suggests employing evaluation frameworks like RAGElo and Ragas for automated evaluation of RAG-Fusion’s performance, though adaptation is required to align with specific industry needs.

Conclusion

RAG-Fusion presents a methodological advancement in retrieval-augmented generation, significantly enhancing response quality by integrating multiple query generation and reciprocal rank fusion. While practical challenges remain regarding performance latency and query relevance, the model offers promising improvements for applications where information retrieval and comprehensive response generation are critical. Future advancements should focus on language support expansion, document representation techniques, and refining evaluation frameworks to unlock RAG-Fusion's full potential in diverse real-world applications.