Towards Optimizing the Costs of LLM Usage

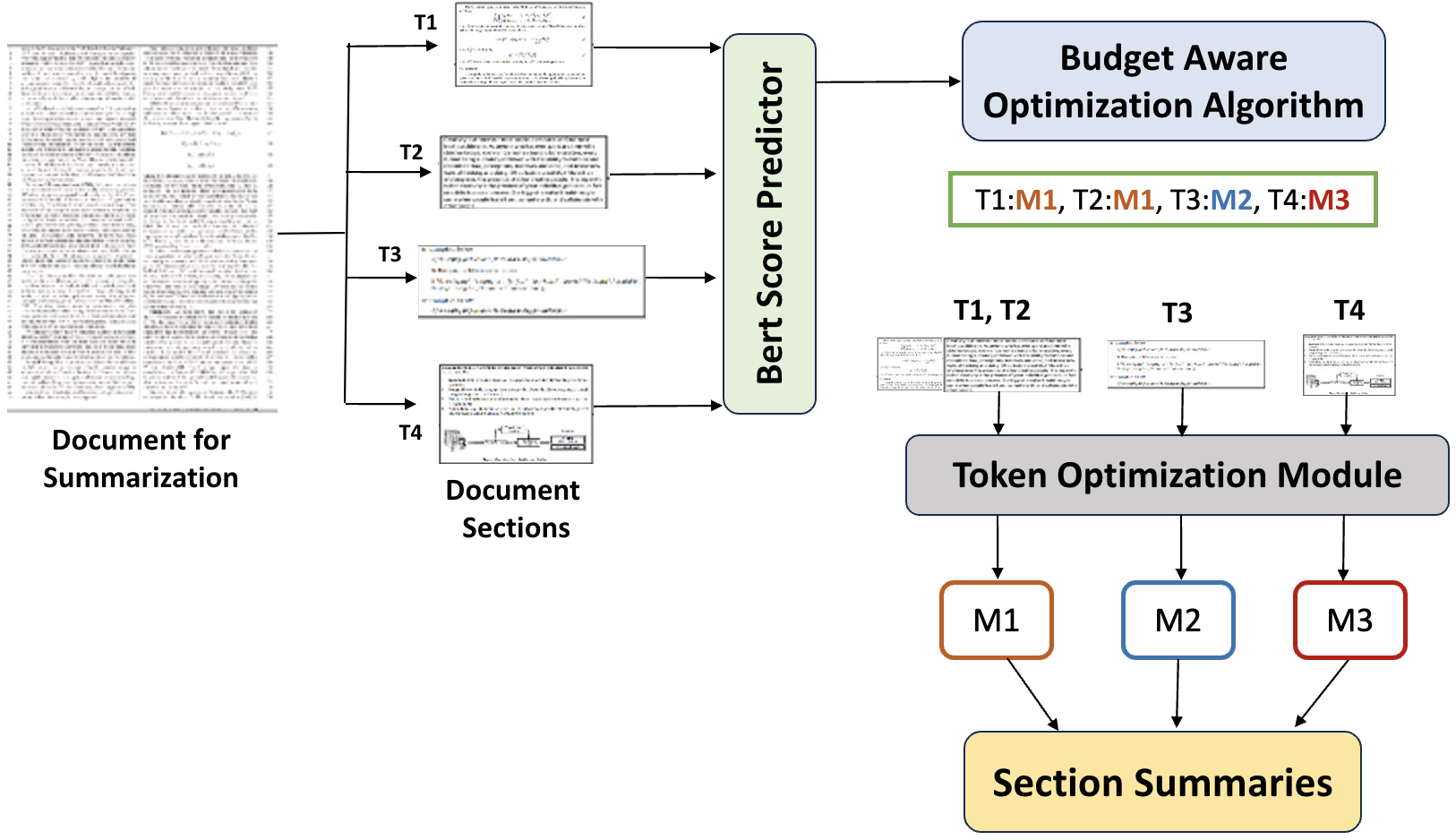

Abstract: Generative AI and LLMs in particular are heavily used nowadays for various document processing tasks such as question answering and summarization. However, different LLMs come with different capabilities for different tasks as well as with different costs, tokenization, and latency. In fact, enterprises are already incurring huge costs of operating or using LLMs for their respective use cases. In this work, we propose optimizing the usage costs of LLMs by estimating their output quality (without actually invoking the LLMs), and then solving an optimization routine for the LLM selection to either keep costs under a budget, or minimize the costs, in a quality and latency aware manner. We propose a model to predict the output quality of LLMs on document processing tasks like summarization, followed by an LP rounding algorithm to optimize the selection of LLMs. We study optimization problems trading off the quality and costs, both theoretically and empirically. We further propose a sentence simplification model for reducing the number of tokens in a controlled manner. Additionally, we propose several deterministic heuristics for reducing tokens in a quality aware manner, and study the related optimization problem of applying the heuristics optimizing the quality and cost trade-off. We perform extensive empirical validation of our methods on not only enterprise datasets but also on open-source datasets, annotated by us, and show that we perform much better compared to closest baselines. Our methods reduce costs by 40%- 90% while improving quality by 4%-7%. We will release the annotated open source datasets to the community for further research and exploration.

- GPT-3 API Latency — Model Comparison. https://medium.com/@evyborov/gpt-3-api-latency-model-comparison-13888a834938.

- gptcache. https://github.com/zilliztech/GPTCache.

- gptrim. https://www.gptrim.com/.

- NLTK. https://www.nltk.org/.

- OpenAI. https://openai.com/.

- OpenAI Pricing. https://openai.com/pricing.

- pyspellchecker. https://pypi.org/project/pyspellchecker/.

- thesaurus. https://github.com/zaibacu/thesaurus.

- Tiktoken. https://github.com/openai/tiktoken.

- Ashoori, M. Decoding the true cost of generative ai for your enterprise. https://www.linkedin.com/pulse/decoding-true-cost-generative-ai-your-enterprise-maryam-ashoori-phd/, 2023. [Online; accessed Oct-12-2023].

- Ms marco: A human generated machine reading comprehension dataset, 2016.

- METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization (Ann Arbor, Michigan, June 2005), Association for Computational Linguistics, pp. 65–72.

- Frugalml: How to use ml prediction apis more accurately and cheaply, 2020.

- Efficient online ml api selection for multi-label classification tasks, 2021.

- Frugalgpt: How to use large language models while reducing cost and improving performance. arXiv preprint arXiv:2305.05176 (2023).

- The economic potential of generative ai: The next productivity frontier. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#introduction, 2023. [Online; accessed Oct-12-2023].

- Efficient unsupervised sentence compression by fine-tuning transformers with reinforcement learning, 2022.

- Samsum corpus: A human-annotated dialogue dataset for abstractive summarization.

- Cosmos qa: Machine reading comprehension with contextual commonsense reasoning, 2019.

- Babybear: Cheap inference triage for expensive language models, 2022.

- Neural text generation from structured data with application to the biography domain, 2016.

- Binary codes capable of correcting deletions, insertions, and reversals. In Soviet physics doklady (1966), vol. 10, Soviet Union, pp. 707–710.

- Bart: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension, 2019.

- Lin, C.-Y. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out (Barcelona, Spain, July 2004), Association for Computational Linguistics, pp. 74–81.

- Natural language inference in context – investigating contextual reasoning over long texts, 2020.

- Logiqa: A challenge dataset for machine reading comprehension with logical reasoning, 2020.

- Tangobert: Reducing inference cost by using cascaded architecture, 2022.

- Muss: Multilingual unsupervised sentence simplification by mining paraphrases, 2020.

- Controllable sentence simplification, 2020.

- fairseq: A fast, extensible toolkit for sequence modeling, 2019.

- Bleu: a method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (Philadelphia, Pennsylvania, USA, July 2002), Association for Computational Linguistics, pp. 311–318.

- Ranodeb Banerjee, O. Automatic document processing with large language models. https://www.linkedin.com/pulse/automatic-document-processing-large-language-models-ranodeb-banerjee/?utm_source=rss&utm_campaign=articles_sitemaps&utm_medium=google_news, 2023. [Online; accessed Oct-12-2023].

- Sallam, R. The economic potential of generative ai: The next productivity frontier. https://www.gartner.com/en/articles/take-this-view-to-assess-roi-for-generative-ai, 2023. [Online; accessed Oct-12-2023].

- Shafaq Naz, E. C. Reinventing logistics: Harnessing generative ai and gpt for intelligent document processing. https://www.e2enetworks.com/blog/reinventing-logistics-harnessing-generative-ai-and-gpt-for-intelligent-document-processing, 2023. [Online; accessed Oct-12-2023].

- Bigpatent: A large-scale dataset for abstractive and coherent summarization, 2019.

- Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288 (2023).

- XtractEdge. Cutting through the noise – how generative ai will change the idp landscape. https://www.edgeverve.com/xtractedge/blogs/transforming-idp-with-generative/, 2023. [Online; accessed Oct-12-2023].

- Reclor: A reading comprehension dataset requiring logical reasoning, 2020.

- Bertscore: Evaluating text generation with bert. arXiv preprint arXiv:1904.09675 (2019).

- Sentence simplification with deep reinforcement learning, 2017.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.