- The paper presents a framework that employs specialized agents to overcome FMs' limitations in traceability, interpretability, and domain adaptation.

- It details distinct agent roles—FM Updaters, Prompt Assistants, Assessors, Knowledge Curators, and Orchestrators—to collaboratively enhance model performance.

- By integrating external knowledge, dynamic prompts, and formal reasoning, the methodology aims to improve FM generalization and reliability.

"Foundation Model Sherpas: Guiding Foundation Models through Knowledge and Reasoning"

Introduction

The paper presents a conceptual framework, "Foundation Model Sherpas", aimed at addressing the limitations of Foundation Models (FMs). Although FMs such as large language models (LLMs) are widely used, they face challenges including limited traceability and interpretability, difficulty incorporating domain knowledge, and weak generalization to new environments. The framework advocates using agents that guide FMs through various tasks, enhancing their usability, trustworthiness, and alignment with specific user expectations.

Agents and Foundation Models

The paper highlights existing research at the intersection of agent-based perspectives and FM systems. Examples include approaches inspired by Minsky's "society of mind" and communicative agent frameworks that employ inception prompting to aid task completion and to study multi-agent cooperative behaviors. In addition, various orchestration frameworks integrate FMs into applications and workflows, coordinating multiple agents to enhance task-solving capabilities.

Agent Roles in the Sherpas Framework

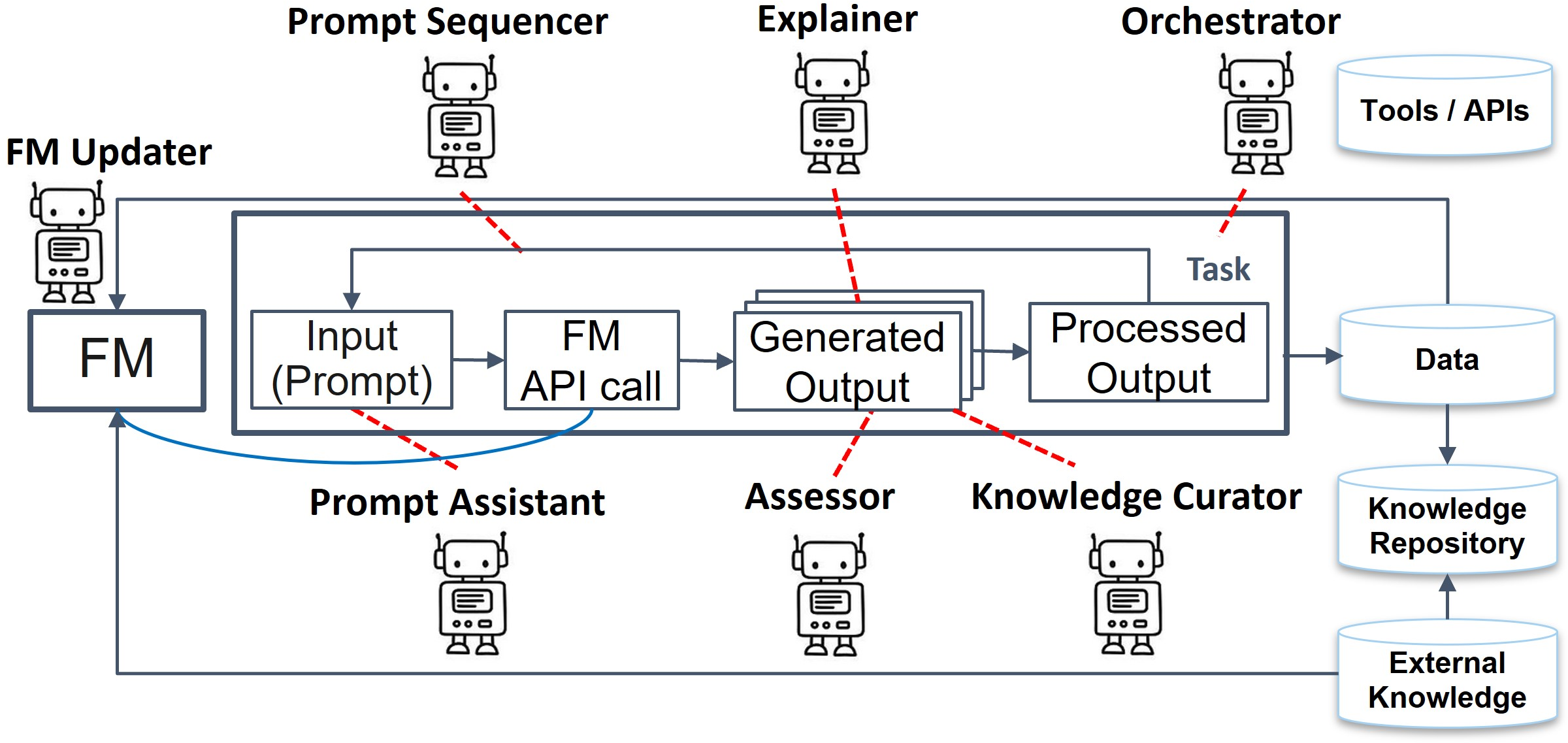

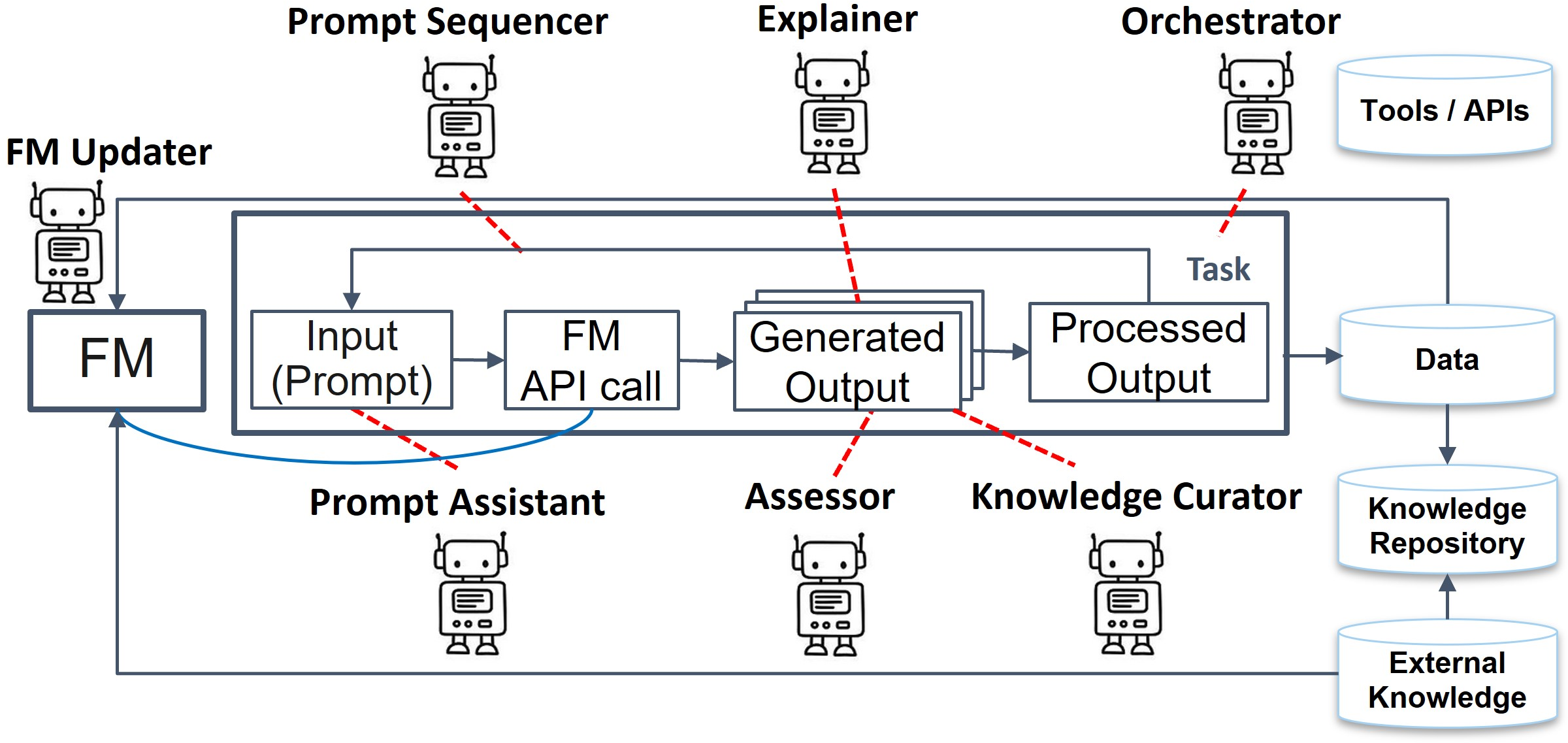

The framework categorizes agents into distinct roles, focusing on their interactions with FMs. These roles include FM Updaters, Prompt Assistants, Assessors, Knowledge Curators, and Orchestrators, each contributing uniquely to the use and management of FMs to tackle user-specified tasks effectively.

- FM Updaters: These agents modify the underlying model or adapt its token generation process to better align with user preferences. Categories include modified pre-training, fine-tuning and instruction-tuning, augmented or edited models, and controlled generation.

- Prompt Assistants and Sequencers: These agents assist with prompt engineering, which is essential for interacting with FMs efficiently. The paper highlights prompting strategies such as instruction-based prompts and in-context learning prompts, and how well-crafted prompts guide FM behavior.

- Assessors and Explainers: Assessors evaluate FM-generated outputs based on attributes like generation quality, trustworthiness, factuality, and harmfulness. Explainers offer insights into the output, thus improving transparency and user understanding.

- Knowledge Curators: They assist in sourcing accurate knowledge for FMs, ensuring the models have access to relevant information from structured or unstructured databases. Extracting concise representations from FMs is also part of their role.

- Orchestrators: They manage workflows by coordinating interactions across agents, often involving external data access or tool usage. Orchestrators can be categorized based on their focus on tooling interaction, verbal reasoning flexibility, and formal reasoning capabilities.

Figure 1: The sherpas framework for guiding FMs, showing various agent categories with respect to their typical points of interaction with the FM as it executes or assists completion of a set of tasks.
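The roles above can be illustrated with a minimal Python sketch. Everything here is hypothetical and not taken from the paper: the class names simply mirror the role names, `toy_fm` is a stub standing in for a real foundation model, and the scoring rule is deliberately trivial. FM Updaters are omitted because they change the model itself rather than the flow around it.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: an FM is modeled as any function from prompt text to output text.
FM = Callable[[str], str]


def toy_fm(prompt: str) -> str:
    """Hypothetical stub FM: returns the last prompt line in upper case."""
    return prompt.strip().splitlines()[-1].upper()


@dataclass
class KnowledgeCurator:
    """Looks up facts in a small in-memory store (stand-in for a database)."""
    facts: dict[str, str]

    def retrieve(self, task: str) -> list[str]:
        # Return every fact whose key appears in the task text.
        return [fact for key, fact in self.facts.items() if key in task.lower()]


class PromptAssistant:
    """Builds a prompt from the task and any curated knowledge."""

    def build(self, task: str, knowledge: list[str]) -> str:
        context = "\n".join(f"Fact: {k}" for k in knowledge)
        return f"{context}\nTask: {task}" if context else f"Task: {task}"


class Assessor:
    """Scores an output; here a trivial check that it is non-empty."""

    def score(self, output: str) -> float:
        return 1.0 if output.strip() else 0.0


@dataclass
class Orchestrator:
    """Coordinates the other agents around a single FM call."""
    fm: FM
    curator: KnowledgeCurator
    assistant: PromptAssistant
    assessor: Assessor

    def run(self, task: str) -> tuple[str, float]:
        knowledge = self.curator.retrieve(task)        # Knowledge Curator
        prompt = self.assistant.build(task, knowledge)  # Prompt Assistant
        output = self.fm(prompt)                        # FM call
        return output, self.assessor.score(output)      # Assessor


if __name__ == "__main__":
    orchestrator = Orchestrator(
        fm=toy_fm,
        curator=KnowledgeCurator({"sherpa": "A sherpa guides climbers."}),
        assistant=PromptAssistant(),
        assessor=Assessor(),
    )
    print(orchestrator.run("Describe a sherpa"))
```

The point of the sketch is the separation of concerns: each role touches the FM at a different point (before, during, or after the call), and the Orchestrator is the only component that knows the order.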

Agent Interaction Protocols

The paper identifies four categories of agent interaction protocols: updating FMs with external knowledge, accessing tools, exploring dynamic prompts, and integrating external reasoners. These categories represent common methods in current frameworks, emphasizing the practical potential in optimizing the collaboration of various agents with FMs.

- Update FM with External Knowledge: Interaction protocols typically involve updating FMs using external knowledge or feedback loops from human evaluation, enhancing model performance and aligning outputs with expected results.

- Access Tools for Information Retrieval: This protocol employs FMs to retrieve relevant data from external sources. Prompt Assistants work with Knowledge Curators, leveraging API calls to gather information that guides the FM's outputs.

- Explore Dynamic Prompts: This protocol generates and revises prompts during task execution rather than fixing them in advance, searching over combinations and orderings of intermediate thoughts to find better task solutions.

- Integrate External Reasoners: This protocol emphasizes using external formal reasoning systems, such as probabilistic programming, to improve the evaluation and reasoning capabilities of FMs.
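As a rough illustration of how the last two protocols might combine, the sketch below tries several prompt templates in turn and accepts the first answer that an external checker verifies. All names (`stub_fm`, `external_reasoner`, `explore_prompts`) are hypothetical, the FM is a hard-coded stub, and the checker is a simple exact arithmetic test rather than the probabilistic programming systems the paper mentions.

```python
from typing import Callable, Optional, Sequence

# Assumption: an FM is modeled as any function from prompt text to output text.
FM = Callable[[str], str]


def stub_fm(prompt: str) -> str:
    """Hypothetical FM stand-in: answers correctly only when asked to
    reason step by step, to mimic prompt sensitivity."""
    if "step by step" in prompt:
        return "12"
    return "10"


def external_reasoner(question: tuple[int, int], answer: str) -> bool:
    """Formal check outside the FM: verify a claimed product exactly."""
    a, b = question
    cleaned = answer.strip()
    return cleaned.isdigit() and int(cleaned) == a * b


def explore_prompts(
    fm: FM,
    templates: Sequence[str],
    question: tuple[int, int],
) -> Optional[tuple[str, str]]:
    """Try each template in order; return the first (prompt, answer) pair
    that the external reasoner accepts, or None if none is accepted."""
    a, b = question
    for template in templates:
        prompt = template.format(a=a, b=b)
        answer = fm(prompt)
        if external_reasoner(question, answer):
            return prompt, answer
    return None


if __name__ == "__main__":
    templates = [
        "What is {a} times {b}?",
        "Think step by step. What is {a} times {b}?",
    ]
    print(explore_prompts(stub_fm, templates, (3, 4)))
```

A linear scan over templates is the simplest possible exploration strategy; systems that search over trees or graphs of intermediate thoughts would replace the `for` loop with a more elaborate search while keeping the same verify-then-accept structure.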

Future Directions

The paper outlines several areas for future research:

- Multi-objective and Joint Optimization Paradigms: Exploring optimization across multiple sherpas simultaneously to improve FM performance across various metrics.

- Broader Role for Automated Assessors: Advancing Assessor capabilities to reduce reliance on human feedback and verbal reasoning.

- Formal Reasoning Orchestration: Integrating more diverse forms of reasoning frameworks to support FM guidance.

- Novel Modes of Knowledge Enablement: Enhancing methods for integrating diverse knowledge types into FMs.

- Humans in the Loop: Developing mechanisms for more sophisticated human interaction with FM-guided systems.

- Benchmarks: Creating benchmarks that assess a system's broad range of abilities rather than task-specific performance.

Conclusions

The "Sherpas" framework proposed in the paper organizes approaches to guiding FMs around the capabilities and roles of different agents, with the goal of improving the practical reliability of AI systems and user trust in them. By categorizing agents and the ways they interact, the paper aims to foster the development of more effective, cooperative AI systems that integrate knowledge and reasoning with Foundation Models.