Efficient Non-Parametric Uncertainty Quantification for Black-Box Large Language Models and Decision Planning (2402.00251v1)

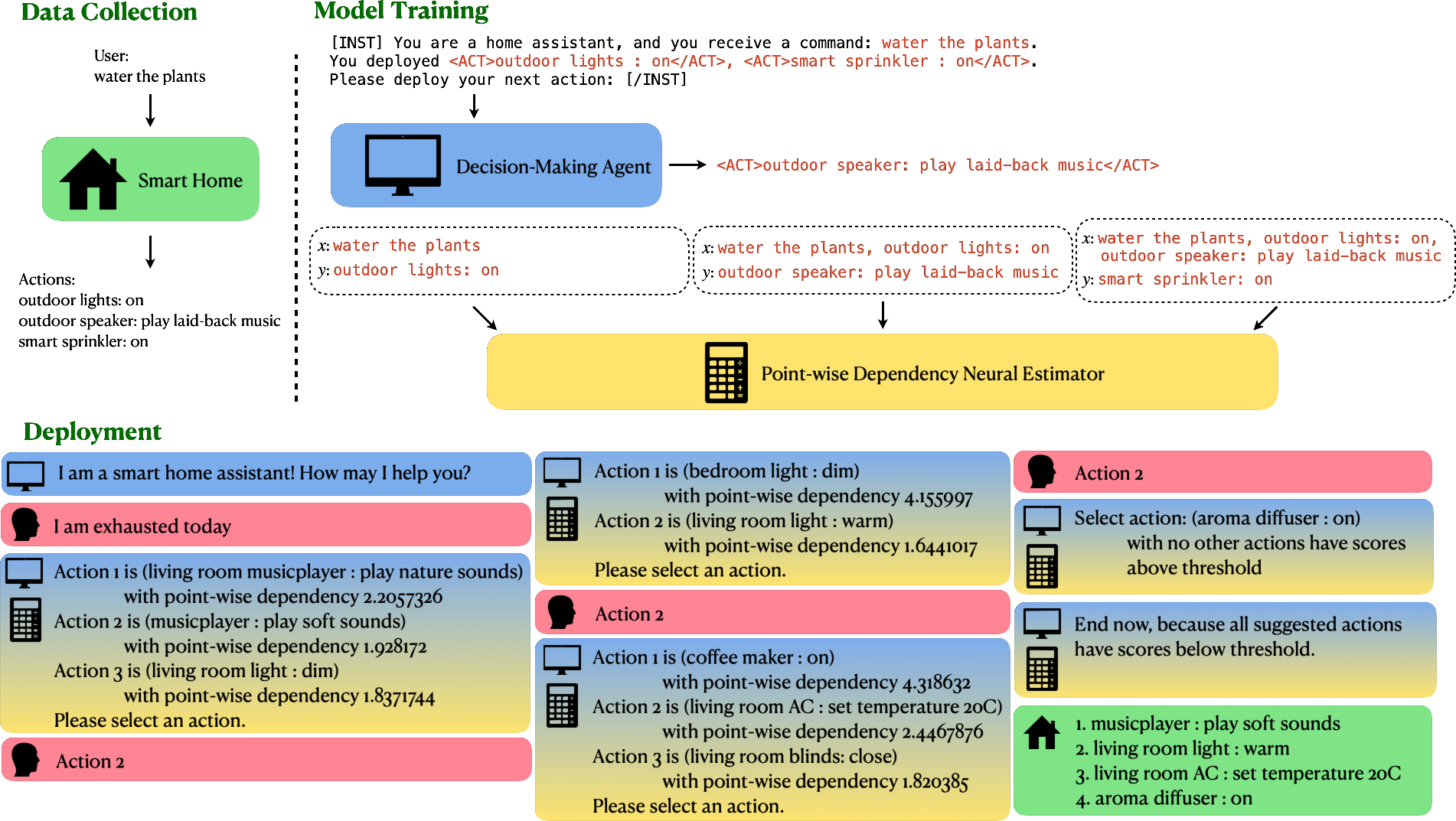

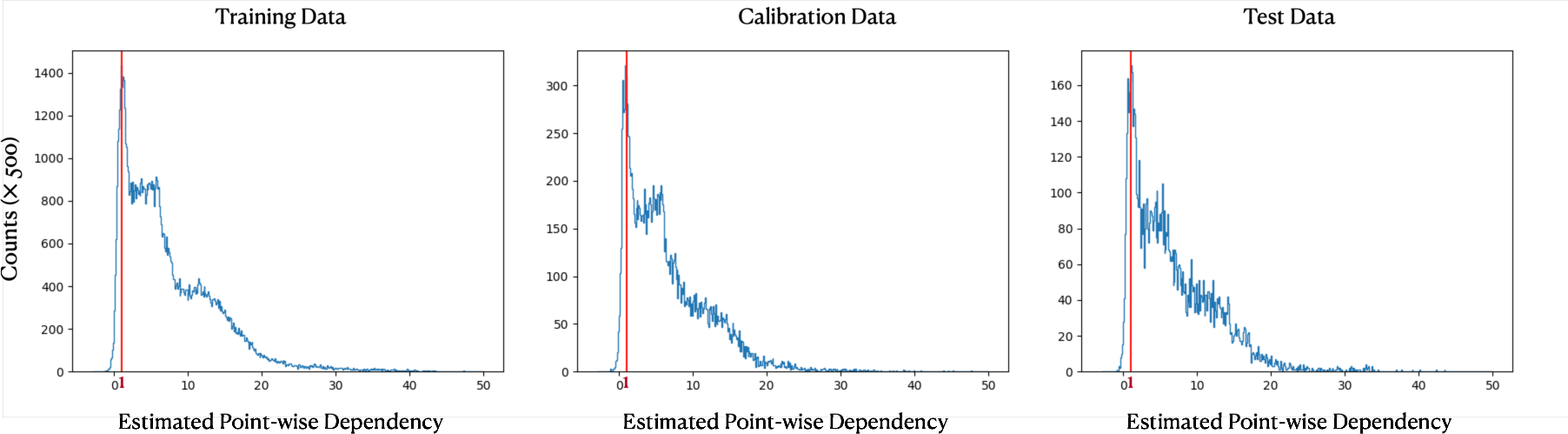

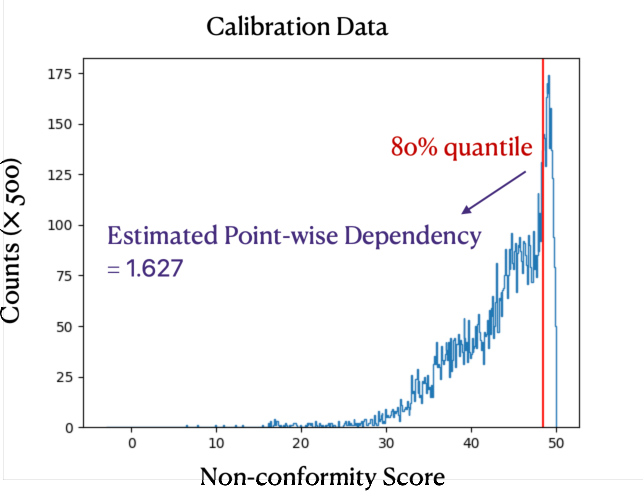

Abstract: Step-by-step decision planning with large language models (LLMs) is gaining attention in AI agent development. This paper focuses on decision planning with uncertainty estimation to address the hallucination problem in LLMs. Existing approaches are either white-box or computationally demanding, which limits the use of black-box proprietary LLMs under budget constraints. The paper's first contribution is a non-parametric uncertainty quantification method for LLMs that efficiently estimates the point-wise dependency between an input and a decision on the fly, with a single inference and without access to token logits. This estimator supports a statistical interpretation of how trustworthy a decision is. The second contribution is a systematic design for a decision-making agent that generates actions such as "turn on the bathroom light" from user prompts such as "take a bath". When more than one action has a high estimated point-wise dependency, the user is asked to state a preference. In conclusion, our uncertainty estimation and decision-making agent design offer a cost-efficient approach to AI agent development.
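To make the decision rule concrete, it helps to recall the standard definition of point-wise dependency between an input x and a decision y: PD(x, y) = p(x, y) / (p(x) p(y)), which equals 1 when x and y are independent and exceeds 1 when they co-occur more often than independence would predict. The abstract does not spell out the estimator, and the paper obtains its estimate from a single LLM inference without token logits; the sketch below does not reproduce that. It is a minimal illustration, assuming a plug-in count-based estimate over a small hypothetical set of (prompt, action) pairs, of what PD measures and how an "ask the user when several actions score high" rule could be wired up. The names `estimate_pd`, `select_actions`, `EXAMPLE_PAIRS`, the default threshold of 1.0, and the "abstain" branch are all hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pair = Tuple[str, str]  # (user prompt x, candidate action y)


def estimate_pd(pairs: List[Pair]) -> Dict[Pair, float]:
    """Plug-in estimate of PD(x, y) = p(x, y) / (p(x) p(y)) from observed pairs.

    Hypothetical helper for illustration only; NOT the paper's estimator.
    """
    n = len(pairs)
    joint = Counter(pairs)
    count_x = Counter(x for x, _ in pairs)
    count_y = Counter(y for _, y in pairs)
    # (c_xy / n) / ((c_x / n) * (c_y / n)) simplifies to c_xy * n / (c_x * c_y).
    return {(x, y): c * n / (count_x[x] * count_y[y]) for (x, y), c in joint.items()}


def select_actions(
    prompt: str, pd: Dict[Pair, float], threshold: float = 1.0
) -> Tuple[str, List[str]]:
    """Keep actions whose PD with the prompt exceeds `threshold` (hypothetical rule).

    PD = 1 corresponds to independence, so 1.0 is used as the default cut-off.
    """
    scored = [(y, v) for (x, y), v in pd.items() if x == prompt and v > threshold]
    scored.sort(key=lambda item: item[1], reverse=True)  # strongest dependency first
    actions = [y for y, _ in scored]
    if not actions:
        return "abstain", actions  # assumption: nothing trustworthy enough to act on
    if len(actions) == 1:
        return "execute", actions  # a single high-dependency action
    return "ask_user", actions  # several high-dependency actions: ask for a preference


# Hypothetical toy data, invented for illustration.
EXAMPLE_PAIRS: List[Pair] = (
    [("take a bath", "turn on the bathroom light")] * 2
    + [("take a bath", "start the water heater")] * 2
    + [("watch a movie", "turn on the TV")] * 3
    + [("watch a movie", "turn on the bathroom light")]
)

if __name__ == "__main__":
    pd = estimate_pd(EXAMPLE_PAIRS)
    for prompt in ("take a bath", "watch a movie", "cook dinner"):
        print(prompt, "->", select_actions(prompt, pd))
```

On this toy data, "take a bath" yields `ask_user` with both bath-related actions (PD 2.0 for the water heater and about 1.33 for the bathroom light), "watch a movie" yields `execute` for "turn on the TV" (PD 2.0, while the bathroom light scores about 0.67), and the unseen prompt "cook dinner" yields `abstain`. Raising the threshold would trade fewer user queries for a higher chance of discarding a valid action.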

